First, let's look at the console output of the replica set once it has been set up.

27017 (primary node):

C:\Users\78204>mongod --replSet rs0 --port 27017 --dbpath c:\srv\mongodb\rs0-0 --smallfiles --oplogSize 128
2019-07-03T15:46:46.815+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'
2019-07-03T15:46:46.826+0800 I CONTROL [initandlisten] MongoDB starting : pid=12124 port=27017 dbpath=c:\srv\mongodb\rs0-0 64-bit host=DESKTOP-65Q8KM9
2019-07-03T15:46:46.826+0800 I CONTROL [initandlisten] targetMinOS: Windows 7/Windows Server 2008 R2
2019-07-03T15:46:46.826+0800 I CONTROL [initandlisten] db version v4.0.9
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] git version: fc525e2d9b0e4bceff5c2201457e564362909765
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] allocator: tcmalloc
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] modules: none
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] build environment:
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] distmod: 2008plus-ssl
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] distarch: x86_64
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] target_arch: x86_64
2019-07-03T15:46:46.827+0800 I CONTROL [initandlisten] options: { net: { port: 27017 }, replication: { oplogSizeMB: 128, replSet: "rs0" }, storage: { dbPath: "c:\srv\mongodb\rs0-0", mmapv1: { smallFiles: true } } }
2019-07-03T15:46:46.831+0800 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=3543M,session_max=20000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000),statistics_log=(wait=0),verbose=(recovery_progress),
2019-07-03T15:46:46.870+0800 I STORAGE [initandlisten] WiredTiger message [1562140006:870173][12124:140724264981088], txn-recover: Set global recovery timestamp: 0
2019-07-03T15:46:46.881+0800 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(0, 0)
2019-07-03T15:46:46.910+0800 W STORAGE [initandlisten] Detected configuration for non-active storage engine mmapv1 when current storage engine is wiredTiger
2019-07-03T15:46:46.910+0800 I CONTROL [initandlisten]
2019-07-03T15:46:46.910+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2019-07-03T15:46:46.911+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2019-07-03T15:46:46.911+0800 I CONTROL [initandlisten]
2019-07-03T15:46:46.911+0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.
2019-07-03T15:46:46.912+0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.
2019-07-03T15:46:46.912+0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP
2019-07-03T15:46:46.913+0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to
2019-07-03T15:46:46.914+0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the
2019-07-03T15:46:46.914+0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.
2019-07-03T15:46:46.915+0800 I CONTROL [initandlisten]
2019-07-03T15:46:46.924+0800 I STORAGE [initandlisten] createCollection: local.startup_log with generated UUID: 5d9fea77-57e8-46ee-8200-08b51deaf90f
2019-07-03T15:46:47.174+0800 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory 'c:/srv/mongodb/rs0-0/diagnostic.data'
2019-07-03T15:46:47.177+0800 I STORAGE [initandlisten] createCollection: local.replset.oplogTruncateAfterPoint with generated UUID: 912b0806-d50a-4ddb-a565-437c1a680754
2019-07-03T15:46:47.189+0800 I STORAGE [initandlisten] createCollection: local.replset.minvalid with generated UUID: 48471e38-a57b-4d2d-984b-bf4d1cd8a280
2019-07-03T15:46:47.205+0800 I REPL [initandlisten] Did not find local voted for document at startup.
2019-07-03T15:46:47.206+0800 I REPL [initandlisten] Did not find local Rollback ID document at startup. Creating one.
2019-07-03T15:46:47.207+0800 I STORAGE [initandlisten] createCollection: local.system.rollback.id with generated UUID: 742ec76f-d229-4e66-8641-1b61f16508ea
2019-07-03T15:46:47.220+0800 I REPL [initandlisten] Initialized the rollback ID to 1
2019-07-03T15:46:47.220+0800 I REPL [initandlisten] Did not find local replica set configuration document at startup; NoMatchingDocument: Did not find replica set configuration document in local.system.replset
2019-07-03T15:46:47.223+0800 I CONTROL [LogicalSessionCacheRefresh] Sessions collection is not set up; waiting until next sessions refresh interval: Replication has not yet been configured
2019-07-03T15:46:47.224+0800 I NETWORK [initandlisten] waiting for connections on port 27017
2019-07-03T15:46:47.225+0800 I CONTROL [LogicalSessionCacheReap] Sessions collection is not set up; waiting until next sessions reap interval: config.system.sessions does not exist
2019-07-03T15:48:15.418+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63164 #1 (1 connection now open)
2019-07-03T15:48:15.419+0800 I NETWORK [conn1] received client metadata from 127.0.0.1:63164 conn1: { application: { name: "MongoDB Shell" }, driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:50.516+0800 I REPL [conn1] replSetInitiate admin command received from client
2019-07-03T15:49:50.540+0800 I REPL [conn1] replSetInitiate config object with 3 members parses ok
2019-07-03T15:49:50.541+0800 I ASIO [Replication] Connecting to 127.0.0.1:27018
2019-07-03T15:49:50.543+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T15:49:50.546+0800 I REPL [conn1] ******
2019-07-03T15:49:50.546+0800 I REPL [conn1] creating replication oplog of size: 128MB...
2019-07-03T15:49:50.546+0800 I STORAGE [conn1] createCollection: local.oplog.rs with generated UUID: 7d633267-dcad-433a-b367-19d3f8019b52
2019-07-03T15:49:50.547+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63190 #6 (2 connections now open)
2019-07-03T15:49:50.548+0800 I NETWORK [conn6] received client metadata from 127.0.0.1:63190 conn6: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:50.548+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63191 #7 (3 connections now open)
2019-07-03T15:49:50.549+0800 I NETWORK [conn7] received client metadata from 127.0.0.1:63191 conn7: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:50.553+0800 I STORAGE [conn1] Starting OplogTruncaterThread local.oplog.rs
2019-07-03T15:49:50.554+0800 I STORAGE [conn1] The size storer reports that the oplog contains 0 records totaling to 0 bytes
2019-07-03T15:49:50.554+0800 I STORAGE [conn1] Scanning the oplog to determine where to place markers for truncation
2019-07-03T15:49:50.578+0800 I REPL [conn1] ******
2019-07-03T15:49:50.578+0800 I STORAGE [conn1] createCollection: local.system.replset with generated UUID: 67825d84-2f70-4e76-87e1-d66510ef0280
2019-07-03T15:49:50.601+0800 I STORAGE [conn1] createCollection: admin.system.version with provided UUID: c30ef668-a63a-41b0-a617-f0d9be489f23
2019-07-03T15:49:50.617+0800 I COMMAND [conn1] setting featureCompatibilityVersion to 4.0
2019-07-03T15:49:50.617+0800 I NETWORK [conn1] Skip closing connection for connection # 7
2019-07-03T15:49:50.618+0800 I NETWORK [conn1] Skip closing connection for connection # 6
2019-07-03T15:49:50.618+0800 I NETWORK [conn1] Skip closing connection for connection # 1
2019-07-03T15:49:50.619+0800 I REPL [conn1] New replica set config in use: { _id: "rs0", version: 1, protocolVersion: 1, writeConcernMajorityJournalDefault: true, members: [ { _id: 0, host: "127.0.0.1:27017", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 }, { _id: 1, host: "127.0.0.1:27018", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 }, { _id: 2, host: "127.0.0.1:27019", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 } ], settings: { chainingAllowed: true, heartbeatIntervalMillis: 2000, heartbeatTimeoutSecs: 10, electionTimeoutMillis: 10000, catchUpTimeoutMillis: -1, catchUpTakeoverDelayMillis: 30000, getLastErrorModes: {}, getLastErrorDefaults: { w: 1, wtimeout: 0 }, replicaSetId: ObjectId('5d1c5e1e207949b5278727c6') } }
2019-07-03T15:49:50.620+0800 I REPL [conn1] This node is 127.0.0.1:27017 in the config
2019-07-03T15:49:50.620+0800 I REPL [conn1] transition to STARTUP2 from STARTUP
2019-07-03T15:49:50.621+0800 I REPL [conn1] Starting replication storage threads
2019-07-03T15:49:50.621+0800 I REPL [replexec-1] Member 127.0.0.1:27018 is now in state STARTUP
2019-07-03T15:49:50.622+0800 I REPL [replexec-0] Member 127.0.0.1:27019 is now in state STARTUP
2019-07-03T15:49:50.624+0800 I REPL [conn1] transition to RECOVERING from STARTUP2
2019-07-03T15:49:50.624+0800 I REPL [conn1] Starting replication fetcher thread
2019-07-03T15:49:50.625+0800 I REPL [conn1] Starting replication applier thread
2019-07-03T15:49:50.625+0800 I REPL [conn1] Starting replication reporter thread
2019-07-03T15:49:50.625+0800 I REPL [rsSync-0] Starting oplog application
2019-07-03T15:49:50.626+0800 I COMMAND [conn1] command local.system.replset appName: "MongoDB Shell" command: replSetInitiate { replSetInitiate: { _id: "rs0", members: [ { _id: 0.0, host: "127.0.0.1:27017" }, { _id: 1.0, host: "127.0.0.1:27018" }, { _id: 2.0, host: "127.0.0.1:27019" } ] }, lsid: { id: UUID("159b56d2-00cc-47e0-a7a5-2498c6661e29") }, $clusterTime: { clusterTime: Timestamp(0, 0), signature: { hash: BinData(0, 0000000000000000000000000000000000000000), keyId: 0 } }, $db: "admin" } numYields:0 reslen:163 locks:{ Global: { acquireCount: { r: 14, w: 6, W: 2 }, acquireWaitCount: { W: 1 }, timeAcquiringMicros: { W: 47 } }, Database: { acquireCount: { r: 2, w: 3, W: 3 } }, Collection: { acquireCount: { r: 1, w: 2 } }, oplog: { acquireCount: { r: 1, w: 2 } } } storage:{} protocol:op_msg 109ms
2019-07-03T15:49:50.627+0800 I REPL [rsSync-0] transition to SECONDARY from RECOVERING
2019-07-03T15:49:50.627+0800 I REPL [rsSync-0] Resetting sync source to empty, which was :27017
2019-07-03T15:49:52.550+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63193 #8 (4 connections now open)
2019-07-03T15:49:52.550+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63194 #9 (5 connections now open)
2019-07-03T15:49:52.551+0800 I NETWORK [conn9] end connection 127.0.0.1:63194 (4 connections now open)
2019-07-03T15:49:52.561+0800 I NETWORK [conn8] end connection 127.0.0.1:63193 (3 connections now open)
2019-07-03T15:49:52.622+0800 I REPL [replexec-0] Member 127.0.0.1:27018 is now in state STARTUP2
2019-07-03T15:49:52.623+0800 I REPL [replexec-0] Member 127.0.0.1:27019 is now in state STARTUP2
2019-07-03T15:49:52.681+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63199 #10 (4 connections now open)
2019-07-03T15:49:52.682+0800 I NETWORK [conn10] received client metadata from 127.0.0.1:63199 conn10: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:52.684+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63200 #11 (5 connections now open)
2019-07-03T15:49:52.688+0800 I NETWORK [conn11] received client metadata from 127.0.0.1:63200 conn11: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:52.689+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63201 #12 (6 connections now open)
2019-07-03T15:49:52.689+0800 I NETWORK [conn12] received client metadata from 127.0.0.1:63201 conn12: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:52.691+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63202 #13 (7 connections now open)
2019-07-03T15:49:52.692+0800 I NETWORK [conn13] received client metadata from 127.0.0.1:63202 conn13: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:53.123+0800 I REPL [replexec-0] Member 127.0.0.1:27018 is now in state SECONDARY
2019-07-03T15:49:53.124+0800 I REPL [replexec-0] Member 127.0.0.1:27019 is now in state SECONDARY
2019-07-03T15:50:01.595+0800 I REPL [replexec-0] Starting an election, since we've seen no PRIMARY in the past 10000ms
2019-07-03T15:50:01.595+0800 I REPL [replexec-0] conducting a dry run election to see if we could be elected. current term: 0
2019-07-03T15:50:01.596+0800 I REPL [replexec-1] VoteRequester(term 0 dry run) received a yes vote from 127.0.0.1:27018; response message: { term: 0, voteGranted: true, reason: "", ok: 1.0, operationTime: Timestamp(1562140190, 1), $clusterTime: { clusterTime: Timestamp(1562140190, 1), signature: { hash: BinData(0, 0000000000000000000000000000000000000000), keyId: 0 } } }
2019-07-03T15:50:01.597+0800 I REPL [replexec-1] dry election run succeeded, running for election in term 1
2019-07-03T15:50:01.597+0800 I STORAGE [replexec-1] createCollection: local.replset.election with generated UUID: 29123673-7e5c-4950-903a-fa279f6d3cc4
2019-07-03T15:50:01.629+0800 I ASIO [Replication] Connecting to 127.0.0.1:27018
2019-07-03T15:50:01.629+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T15:50:01.630+0800 I REPL [replexec-1] VoteRequester(term 1) received a yes vote from 127.0.0.1:27018; response message: { term: 1, voteGranted: true, reason: "", ok: 1.0, operationTime: Timestamp(1562140190, 1), $clusterTime: { clusterTime: Timestamp(1562140190, 1), signature: { hash: BinData(0, 0000000000000000000000000000000000000000), keyId: 0 } } }
2019-07-03T15:50:01.632+0800 I REPL [replexec-1] election succeeded, assuming primary role in term 1
2019-07-03T15:50:01.633+0800 I REPL [replexec-1] transition to PRIMARY from SECONDARY
2019-07-03T15:50:01.635+0800 I REPL [replexec-1] Resetting sync source to empty, which was :27017
2019-07-03T15:50:01.636+0800 I REPL [replexec-1] Entering primary catch-up mode.
2019-07-03T15:50:01.636+0800 I REPL [replexec-0] Caught up to the latest optime known via heartbeats after becoming primary.
2019-07-03T15:50:01.636+0800 I REPL [replexec-0] Exited primary catch-up mode.
2019-07-03T15:50:01.636+0800 I REPL [replexec-0] Stopping replication producer
2019-07-03T15:50:02.636+0800 I STORAGE [rsSync-0] createCollection: config.transactions with generated UUID: 58e7e6ae-12cd-4c14-80ba-4175445b5b04
2019-07-03T15:50:02.652+0800 I REPL [rsSync-0] transition to primary complete; database writes are now permitted
2019-07-03T15:50:02.654+0800 I STORAGE [monitoring keys for HMAC] createCollection: admin.system.keys with generated UUID: 963fabbf-d80b-430a-b879-a45f306b5c34
2019-07-03T15:50:02.684+0800 I NETWORK [conn10] end connection 127.0.0.1:63199 (6 connections now open)
2019-07-03T15:50:02.692+0800 I NETWORK [conn12] end connection 127.0.0.1:63201 (5 connections now open)
2019-07-03T15:50:03.759+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63206 #16 (6 connections now open)
2019-07-03T15:50:03.760+0800 I NETWORK [conn16] received client metadata from 127.0.0.1:63206 conn16: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:50:03.760+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63207 #17 (7 connections now open)
2019-07-03T15:50:03.761+0800 I STORAGE [conn16] Triggering the first stable checkpoint. Initial Data: Timestamp(1562140190, 1) PrevStable: Timestamp(0, 0) CurrStable: Timestamp(1562140202, 1)
2019-07-03T15:50:03.764+0800 I NETWORK [conn17] received client metadata from 127.0.0.1:63207 conn17: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:50:03.801+0800 I COMMAND [monitoring keys for HMAC] command admin.system.keys command: insert { insert: "system.keys", bypassDocumentValidation: false, ordered: true, documents: [ { _id: 6709341079356833793, purpose: "HMAC", key: BinData(0, 4DCB2FF0018A8E15DFDF4C7B5FCB7BB840ADAED0), expiresAt: Timestamp(1569916202, 0) } ], writeConcern: { w: "majority", wtimeout: 60000 }, allowImplicitCollectionCreation: true, $db: "admin" } ninserted:1 keysInserted:1 numYields:0 reslen:230 locks:{ Global: { acquireCount: { r: 3, w: 3 } }, Database: { acquireCount: { W: 3 } }, Collection: { acquireCount: { w: 2 } } } storage:{} protocol:op_msg 1146ms
2019-07-03T15:50:09.639+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T15:50:11.615+0800 I CONNPOOL [Replication] Ending connection to host 127.0.0.1:27019 due to bad connection status; 2 connections to that host remain open
2019-07-03T15:51:01.631+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27018 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T15:51:47.224+0800 I CONTROL [LogicalSessionCacheReap] Sessions collection is not set up; waiting until next sessions reap interval: config.system.sessions does not exist
2019-07-03T15:51:47.224+0800 I STORAGE [LogicalSessionCacheRefresh] createCollection: config.system.sessions with generated UUID: 2eb274a8-af02-4f11-9d64-a4814d32d4ac
2019-07-03T15:51:47.251+0800 I INDEX [LogicalSessionCacheRefresh] build index on: config.system.sessions properties: { v: 2, key: { lastUse: 1 }, name: "lsidTTLIndex", ns: "config.system.sessions", expireAfterSeconds: 1800 }
2019-07-03T15:51:47.251+0800 I INDEX [LogicalSessionCacheRefresh] building index using bulk method; build may temporarily use up to 500 megabytes of RAM
2019-07-03T15:51:47.259+0800 I INDEX [LogicalSessionCacheRefresh] build index done. scanned 0 total records. 0 secs
2019-07-03T15:52:21.374+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63238 #19 (8 connections now open)
2019-07-03T15:52:21.375+0800 I NETWORK [conn19] received client metadata from 127.0.0.1:63238 conn19: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:52:21.389+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63240 #20 (9 connections now open)
2019-07-03T15:52:21.399+0800 I NETWORK [conn20] received client metadata from 127.0.0.1:63240 conn20: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:52:32.821+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63245 #21 (10 connections now open)
2019-07-03T15:52:32.822+0800 I NETWORK [conn21] received client metadata from 127.0.0.1:63245 conn21: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:52:32.824+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63246 #22 (11 connections now open)
2019-07-03T15:52:32.825+0800 I NETWORK [conn22] received client metadata from 127.0.0.1:63246 conn22: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:59:03.791+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T16:00:59.835+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T16:02:01.837+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T16:02:07.858+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T16:08:56.666+0800 I STORAGE [conn1] createCollection: admin.zt with generated UUID: 707bf97e-1fb1-47e8-993a-5e05577e2eff
2019-07-03T16:40:36.513+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T16:41:02.534+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T16:54:30.742+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T16:55:02.768+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T17:26:41.313+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T17:26:49.327+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T17:30:23.376+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T17:30:45.402+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T17:40:23.561+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T17:40:51.587+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T17:41:51.589+0800 I CONNPOOL [Replication] Ending idle connection to host 127.0.0.1:27019 because the pool meets constraints; 1 connections to that host remain open
2019-07-03T17:44:53.666+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
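
To follow how 27017 became primary in the log above, one option is to filter the REPL entries for state changes. The following is a minimal Python sketch, assuming the MongoDB 4.0 plain-text log layout shown here (timestamp, severity letter, component, [context], message) and the exact message wording of this 4.0.9 log. The names `replica_timeline`, `LOG_LINE`, `SELF_TRANSITION` and `PEER_STATE` are illustrative only and are not part of any MongoDB tool; servers from 4.4 onward write structured JSON logs, so these patterns would not apply there.

```python
import re
from typing import List, Tuple

# One line of the MongoDB 4.0 plain-text log, as printed above:
# <timestamp> <severity> <component> [<context>] <message>
LOG_LINE = re.compile(
    r"^(?P<ts>\S+)\s+(?P<sev>[A-Z])\s+(?P<comp>[A-Z]+)\s+"
    r"\[(?P<ctx>[^\]]+)\]\s*(?P<msg>.*)$"
)
# This node's own state change, e.g. "transition to PRIMARY from SECONDARY".
SELF_TRANSITION = re.compile(r"transition to (\w+) from (\w+)")
# A peer's state as learned from heartbeats, e.g.
# "Member 127.0.0.1:27018 is now in state SECONDARY".
PEER_STATE = re.compile(r"Member (\S+) is now in state (\w+)")


def replica_timeline(lines: List[str]) -> List[Tuple[str, str, str]]:
    """Collect (timestamp, member, new_state) events from REPL log lines.

    member is "self" for the node that wrote the log.
    """
    events = []
    for line in lines:
        m = LOG_LINE.match(line.strip())
        if m is None or m.group("comp") != "REPL":
            continue  # not a log entry, or not from the replication component
        msg = m.group("msg")
        own = SELF_TRANSITION.search(msg)
        if own:
            events.append((m.group("ts"), "self", own.group(1)))
            continue
        peer = PEER_STATE.search(msg)
        if peer:
            events.append((m.group("ts"), peer.group(1), peer.group(2)))
    return events


# A few lines copied from the 27017 log above.
sample = [
    "2019-07-03T15:49:50.620+0800 I REPL [conn1] transition to STARTUP2 from STARTUP",
    "2019-07-03T15:49:50.624+0800 I REPL [conn1] transition to RECOVERING from STARTUP2",
    "2019-07-03T15:49:50.627+0800 I REPL [rsSync-0] transition to SECONDARY from RECOVERING",
    "2019-07-03T15:49:53.123+0800 I REPL [replexec-0] Member 127.0.0.1:27018 is now in state SECONDARY",
    "2019-07-03T15:50:01.595+0800 I REPL [replexec-0] Starting an election, since we've seen no PRIMARY in the past 10000ms",
    "2019-07-03T15:50:01.633+0800 I REPL [replexec-1] transition to PRIMARY from SECONDARY",
    "2019-07-03T15:50:02.652+0800 I REPL [rsSync-0] transition to primary complete; database writes are now permitted",
]

for ts, member, state in replica_timeline(sample):
    print(ts, member, state)
```

On these sample lines the node itself moves through STARTUP2, RECOVERING and SECONDARY right after replSetInitiate, 27018 is then reported as SECONDARY, and the node becomes PRIMARY after going 10000 ms without seeing a primary (the electionTimeoutMillis value in the config) and winning the term-1 election. The election-start and "primary complete" lines are deliberately skipped because they are not state transitions.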

27018 (secondary node):

C:\Users\78204>mongod --replSet rs0 --port 27018 --dbpath c:\srv\mongodb\rs0-1 --smallfiles --oplogSize 128
2019-07-03T15:47:21.004+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'
2019-07-03T15:47:21.007+0800 I CONTROL [initandlisten] MongoDB starting : pid=6336 port=27018 dbpath=c:\srv\mongodb\rs0-1 64-bit host=DESKTOP-65Q8KM9
2019-07-03T15:47:21.007+0800 I CONTROL [initandlisten] targetMinOS: Windows 7/Windows Server 2008 R2
2019-07-03T15:47:21.007+0800 I CONTROL [initandlisten] db version v4.0.9
2019-07-03T15:47:21.007+0800 I CONTROL [initandlisten] git version: fc525e2d9b0e4bceff5c2201457e564362909765
2019-07-03T15:47:21.008+0800 I CONTROL [initandlisten] allocator: tcmalloc
2019-07-03T15:47:21.008+0800 I CONTROL [initandlisten] modules: none
2019-07-03T15:47:21.008+0800 I CONTROL [initandlisten] build environment:
2019-07-03T15:47:21.008+0800 I CONTROL [initandlisten] distmod: 2008plus-ssl
2019-07-03T15:47:21.008+0800 I CONTROL [initandlisten] distarch: x86_64
2019-07-03T15:47:21.008+0800 I CONTROL [initandlisten] target_arch: x86_64
2019-07-03T15:47:21.008+0800 I CONTROL [initandlisten] options: { net: { port: 27018 }, replication: { oplogSizeMB: 128, replSet: "rs0" }, storage: { dbPath: "c:\srv\mongodb\rs0-1", mmapv1: { smallFiles: true } } }
2019-07-03T15:47:21.019+0800 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=3543M,session_max=20000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000),statistics_log=(wait=0),verbose=(recovery_progress),
2019-07-03T15:47:21.057+0800 I STORAGE [initandlisten] WiredTiger message [1562140041:56409][6336:140724264981088], txn-recover: Set global recovery timestamp: 0
2019-07-03T15:47:21.069+0800 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(0, 0)
2019-07-03T15:47:21.091+0800 W STORAGE [initandlisten] Detected configuration for non-active storage engine mmapv1 when current storage engine is wiredTiger
2019-07-03T15:47:21.091+0800 I CONTROL [initandlisten]
2019-07-03T15:47:21.091+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2019-07-03T15:47:21.091+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2019-07-03T15:47:21.092+0800 I CONTROL [initandlisten]
2019-07-03T15:47:21.092+0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.
2019-07-03T15:47:21.092+0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.
2019-07-03T15:47:21.093+0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP
2019-07-03T15:47:21.093+0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to
2019-07-03T15:47:21.094+0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the
2019-07-03T15:47:21.094+0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.
2019-07-03T15:47:21.094+0800 I CONTROL [initandlisten]
2019-07-03T15:47:21.097+0800 I STORAGE [initandlisten] createCollection: local.startup_log with generated UUID: ff3cc52b-ccc0-4032-96b1-7fdab9ebf66b
2019-07-03T15:47:21.325+0800 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory 'c:/srv/mongodb/rs0-1/diagnostic.data'
2019-07-03T15:47:21.329+0800 I STORAGE [initandlisten] createCollection: local.replset.oplogTruncateAfterPoint with generated UUID: 89156dc6-d197-4730-9a22-a54353caafba
2019-07-03T15:47:21.341+0800 I STORAGE [initandlisten] createCollection: local.replset.minvalid with generated UUID: a370b3a0-c138-4f07-ac0b-32aebc2c3c0d
2019-07-03T15:47:21.354+0800 I REPL [initandlisten] Did not find local voted for document at startup.
2019-07-03T15:47:21.354+0800 I REPL [initandlisten] Did not find local Rollback ID document at startup. Creating one.
2019-07-03T15:47:21.356+0800 I STORAGE [initandlisten] createCollection: local.system.rollback.id with generated UUID: 716778cf-0c4d-4561-bac5-fbf0bc19e67b
2019-07-03T15:47:21.371+0800 I REPL [initandlisten] Initialized the rollback ID to 1
2019-07-03T15:47:21.371+0800 I REPL [initandlisten] Did not find local replica set configuration document at startup; NoMatchingDocument: Did not find replica set configuration document in local.system.replset
2019-07-03T15:47:21.373+0800 I CONTROL [LogicalSessionCacheRefresh] Sessions collection is not set up; waiting until next sessions refresh interval: Replication has not yet been configured
2019-07-03T15:47:21.374+0800 I CONTROL [LogicalSessionCacheReap] Sessions collection is not set up; waiting until next sessions reap interval: config.system.sessions does not exist
2019-07-03T15:47:21.374+0800 I NETWORK [initandlisten] waiting for connections on port 27018
2019-07-03T15:49:50.528+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63186 #1 (1 connection now open)
2019-07-03T15:49:50.529+0800 I NETWORK [conn1] end connection 127.0.0.1:63186 (0 connections now open)
2019-07-03T15:49:50.543+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63188 #2 (1 connection now open)
2019-07-03T15:49:50.544+0800 I NETWORK [conn2] received client metadata from 127.0.0.1:63188 conn2: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:50.545+0800 I ASIO [Replication] Connecting to 127.0.0.1:27017
2019-07-03T15:49:52.551+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63195 #4 (2 connections now open)
2019-07-03T15:49:52.561+0800 I NETWORK [conn4] end connection 127.0.0.1:63195 (1 connection now open)
2019-07-03T15:49:52.566+0800 I STORAGE [replexec-0] createCollection: local.system.replset with generated UUID: 8b3d7bf2-3a9a-47c6-9975-2065aeadd30a
2019-07-03T15:49:52.582+0800 I REPL [replexec-0] New replica set config in use: { _id: "rs0", version: 1, protocolVersion: 1, writeConcernMajorityJournalDefault: true, members: [ { _id: 0, host: "127.0.0.1:27017", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 }, { _id: 1, host: "127.0.0.1:27018", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 }, { _id: 2, host: "127.0.0.1:27019", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 } ], settings: { chainingAllowed: true, heartbeatIntervalMillis: 2000, heartbeatTimeoutSecs: 10, electionTimeoutMillis: 10000, catchUpTimeoutMillis: -1, catchUpTakeoverDelayMillis: 30000, getLastErrorModes: {}, getLastErrorDefaults: { w: 1, wtimeout: 0 }, replicaSetId: ObjectId('5d1c5e1e207949b5278727c6') } }
2019-07-03T15:49:52.584+0800 I REPL [replexec-0] This node is 127.0.0.1:27018 in the config
2019-07-03T15:49:52.584+0800 I REPL [replexec-0] transition to STARTUP2 from STARTUP
2019-07-03T15:49:52.585+0800 I ASIO [Replication] Connecting to 127.0.0.1:27019
2019-07-03T15:49:52.585+0800 I REPL [replexec-0] Starting replication storage threads
2019-07-03T15:49:52.586+0800 I REPL [replexec-3] Member 127.0.0.1:27017 is now in state SECONDARY
2019-07-03T15:49:52.586+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63198 #8 (2 connections now open)
2019-07-03T15:49:52.588+0800 I NETWORK [conn8] received client metadata from 127.0.0.1:63198 conn8: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:52.590+0800 I REPL [replexec-2] Member 127.0.0.1:27019 is now in state STARTUP2
2019-07-03T15:49:52.594+0800 I STORAGE [replexec-0] createCollection: local.temp_oplog_buffer with generated UUID: eeddd434-6f9b-4799-863b-0ec6f75b7ccd
2019-07-03T15:49:52.618+0800 I REPL [replication-0] Starting initial sync (attempt 1 of 10)
2019-07-03T15:49:52.618+0800 I STORAGE [replication-0] Finishing collection drop for local.temp_oplog_buffer (eeddd434-6f9b-4799-863b-0ec6f75b7ccd).
2019-07-03T15:49:52.626+0800 I STORAGE [replication-0] createCollection: local.temp_oplog_buffer with generated UUID: 06bae427-bab8-4eb5-ab03-8a98c194865b
2019-07-03T15:49:52.640+0800 I REPL [replication-0] sync source candidate: 127.0.0.1:27017
2019-07-03T15:49:52.640+0800 I REPL [replication-0] Initial syncer oplog truncation finished in: 0ms
2019-07-03T15:49:52.641+0800 I REPL [replication-0] ******
2019-07-03T15:49:52.642+0800 I REPL [replication-0] creating replication oplog of size: 128MB...
2019-07-03T15:49:52.642+0800 I STORAGE [replication-0] createCollection: local.oplog.rs with generated UUID: c16523d4-c07f-43b8-83df-4c5f1e0fbf7b
2019-07-03T15:49:52.649+0800 I STORAGE [replication-0] Starting OplogTruncaterThread local.oplog.rs
2019-07-03T15:49:52.649+0800 I STORAGE [replication-0] The size storer reports that the oplog contains 0 records totaling to 0 bytes
2019-07-03T15:49:52.650+0800 I STORAGE [replication-0] Scanning the oplog to determine where to place markers for truncation
2019-07-03T15:49:52.684+0800 I REPL [replication-0] ******
2019-07-03T15:49:52.684+0800 I STORAGE [replication-0] dropAllDatabasesExceptLocal 1
2019-07-03T15:49:52.687+0800 I ASIO [RS] Connecting to 127.0.0.1:27017
2019-07-03T15:49:52.691+0800 I ASIO [RS] Connecting to 127.0.0.1:27017
2019-07-03T15:49:52.692+0800 I REPL [replication-0] CollectionCloner::start called, on ns:admin.system.version
2019-07-03T15:49:52.693+0800 I STORAGE [repl writer worker 15] createCollection: admin.system.version with provided UUID: c30ef668-a63a-41b0-a617-f0d9be489f23
2019-07-03T15:49:52.714+0800 I INDEX [repl writer worker 15] build index on: admin.system.version properties: { v: 2, key: { _id: 1 }, name: "_id_", ns: "admin.system.version" }
2019-07-03T15:49:52.716+0800 I INDEX [repl writer worker 15] building index using bulk method; build may temporarily use up to 500 megabytes of RAM
2019-07-03T15:49:52.717+0800 I COMMAND [repl writer worker 0] setting featureCompatibilityVersion to 4.0
2019-07-03T15:49:52.718+0800 I NETWORK [repl writer worker 0] Skip closing connection for connection # 8
2019-07-03T15:49:52.718+0800 I NETWORK [repl writer worker 0] Skip closing connection for connection # 2
2019-07-03T15:49:52.718+0800 I REPL [repl writer worker 0] CollectionCloner ns:admin.system.version finished cloning with status: OK
2019-07-03T15:49:52.727+0800 I REPL [repl writer worker 0] Finished cloning data: OK. Beginning oplog replay.
2019-07-03T15:49:52.728+0800 I REPL [replication-1] No need to apply operations. (currently at { : Timestamp(1562140190, 1) })
2019-07-03T15:49:52.730+0800 I REPL [replication-1] Finished fetching oplog during initial sync: CallbackCanceled: error in fetcher batch callback: oplog fetcher is shutting down. Last fetched optime and hash: { ts: Timestamp(0, 0), t: -1 }[0]
2019-07-03T15:49:52.731+0800 I REPL [replication-1] Initial sync attempt finishing up.
2019-07-03T15:49:52.732+0800 I REPL [replication-1] Initial Sync Attempt Statistics: { failedInitialSyncAttempts: 0, maxFailedInitialSyncAttempts: 10, initialSyncStart: new Date(1562140192618), initialSyncAttempts: [], fetchedMissingDocs: 0, appliedOps: 0, initialSyncOplogStart: Timestamp(1562140190, 1), initialSyncOplogEnd: Timestamp(1562140190, 1), databases: { databasesCloned: 1, admin: { collections: 1, clonedCollections: 1, start: new Date(1562140192691), end: new Date(1562140192728), elapsedMillis: 37, admin.system.version: { documentsToCopy: 1, documentsCopied: 1, indexes: 1, fetchedBatches: 1, start: new Date(1562140192692), end: new Date(1562140192728), elapsedMillis: 36 } } } }
2019-07-03T15:49:52.733+0800 I STORAGE [replication-1] Finishing collection drop for local.temp_oplog_buffer (06bae427-bab8-4eb5-ab03-8a98c194865b).
2019-07-03T15:49:52.744+0800 I REPL [replication-1] initial sync done; took 0s.
2019-07-03T15:49:52.744+0800 I REPL [replication-1] transition to RECOVERING from STARTUP2
2019-07-03T15:49:52.745+0800 I REPL [replication-1] Starting replication fetcher thread
2019-07-03T15:49:52.747+0800 I REPL [replication-1] Starting replication applier thread
2019-07-03T15:49:52.747+0800 I REPL [rsBackgroundSync] could not find member to sync from
2019-07-03T15:49:52.747+0800 I REPL [replication-1] Starting replication reporter thread
2019-07-03T15:49:52.747+0800 I REPL [rsSync-0] Starting oplog application
2019-07-03T15:49:52.749+0800 I REPL [rsSync-0] transition to SECONDARY from RECOVERING
2019-07-03T15:49:52.749+0800 I REPL [rsSync-0] Resetting sync source to empty, which was :27017
2019-07-03T15:49:52.749+0800 I REPL [replexec-2] Member 127.0.0.1:27019 is now in state RECOVERING
2019-07-03T15:49:53.250+0800 I REPL [replexec-2] Member 127.0.0.1:27019 is now in state SECONDARY
2019-07-03T15:50:01.616+0800 I STORAGE [conn2] createCollection: local.replset.election with generated UUID: 934c442f-9d18-4ed9-9891-ac9061740dbf
2019-07-03T15:50:01.630+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63203 #11 (3 connections now open)
2019-07-03T15:50:01.631+0800 I NETWORK [conn11] received client metadata from 127.0.0.1:63203 conn11: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:50:01.757+0800 I REPL [replexec-3] Member 127.0.0.1:27017 is now in state PRIMARY
2019-07-03T15:50:02.692+0800 I CONNPOOL [RS] Ending connection to host 127.0.0.1:27017 due to bad connection status; 1 connections to that host remain open
2019-07-03T15:50:03.754+0800 I REPL [rsBackgroundSync] sync source candidate: 127.0.0.1:27017
2019-07-03T15:50:03.755+0800 I REPL [rsBackgroundSync] Changed sync source from empty to 127.0.0.1:27017
2019-07-03T15:50:03.757+0800 I STORAGE [repl writer worker 5] createCollection: config.transactions with
provided UUID: 58e7e6ae-12cd-4c14-80ba-4175445b5b04 2019-07-03T15:50:03.758+0800 I ASIO [RS] Connecting to 127.0.0.1:27017 2019-07-03T15:50:03.776+0800 I STORAGE [repl writer worker 7] createCollection: admin.system.keys with provided UUID: 963fabbf-d80b-430a-b879-a45f306b5c34 2019-07-03T15:50:03.805+0800 I STORAGE [replication-1] Triggering the first stable checkpoint. Initial Data: Timestamp(1562140190, 1) PrevStable: Timestamp(0, 0) CurrStable: Timestamp(1562140202, 4) 2019-07-03T15:51:01.632+0800 I NETWORK [conn2] end connection 127.0.0.1:63188 (2 connections now open) 2019-07-03T15:51:47.244+0800 I STORAGE [repl writer worker 4] createCollection: config.system.sessions with provided UUID: 2eb274a8-af02-4f11-9d64-a4814d32d4ac 2019-07-03T15:51:47.273+0800 I INDEX [repl writer worker 2] build index on: config.system.sessions properties: { v: 2, key: { lastUse: 1 }, name: "lsidTTLIndex", expireAfterSeconds: 1800, ns: "config.system.sessions" } 2019-07-03T15:51:47.273+0800 I INDEX [repl writer worker 2] building index using bulk method; build may temporarily use up to 500 megabytes of RAM 2019-07-03T15:51:47.279+0800 I INDEX [repl writer worker 2] build index done. scanned 0 total records. 
0 secs 2019-07-03T15:52:21.373+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T15:52:21.375+0800 I NETWORK [LogicalSessionCacheRefresh] Successfully connected to 127.0.0.1:27019 (1 connections now open to 127.0.0.1:27019 with a 5 second timeout) 2019-07-03T15:52:21.375+0800 I NETWORK [ReplicaSetMonitor-TaskExecutor] Successfully connected to 127.0.0.1:27017 (1 connections now open to 127.0.0.1:27017 with a 5 second timeout) 2019-07-03T15:52:21.376+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63239 #15 (3 connections now open) 2019-07-03T15:52:21.386+0800 I NETWORK [conn15] received client metadata from 127.0.0.1:63239 conn15: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } } 2019-07-03T15:52:21.388+0800 I NETWORK [LogicalSessionCacheRefresh] Successfully connected to 127.0.0.1:27018 (1 connections now open to 127.0.0.1:27018 with a 5 second timeout) 2019-07-03T15:52:21.399+0800 I NETWORK [LogicalSessionCacheRefresh] Successfully connected to 127.0.0.1:27017 (1 connections now open to 127.0.0.1:27017 with a 0 second timeout) 2019-07-03T15:52:21.400+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T15:52:21.402+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T15:52:32.816+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63244 #18 (4 connections now open) 2019-07-03T15:52:32.820+0800 I NETWORK [conn18] received client metadata from 127.0.0.1:63244 conn18: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } } 
2019-07-03T15:57:21.374+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T15:57:21.376+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T15:57:21.376+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:01:42.914+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63386 #19 (5 connections now open) 2019-07-03T16:01:42.914+0800 I NETWORK [conn19] received client metadata from 127.0.0.1:63386 conn19: { application: { name: "MongoDB Shell" }, driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } } 2019-07-03T16:02:21.375+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:02:21.377+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:02:21.391+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:02:21.392+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:07:21.375+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:07:21.376+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:07:21.377+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for 
rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:07:21.377+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019 2019-07-03T16:08:56.681+0800 I STORAGE [repl writer worker 2] createCollection: admin.zt with provided UUID: 707bf97e-1fb1-47e8-993a-5e05577e2eff 2019-07-03T16:12:21.375+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
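This log is easier to follow if you extract only the heartbeat entries of the form `Member <host> is now in state <STATE>`. Those entries show 127.0.0.1:27019 passing through STARTUP2, RECOVERING and SECONDARY, and 127.0.0.1:27017 moving from SECONDARY to PRIMARY. The Python sketch below pulls them out. `member_state_changes` is a hypothetical helper, and it assumes every entry begins with a timestamp in the `2019-07-03T15:50:01.757+0800` format seen here. It splits entries on that timestamp rather than on newlines, so it should also work on a log that was pasted as one long line.

```python
import re
from typing import List, Tuple

# Every entry in these mongod 4.0 logs begins with a timestamp such as
# "2019-07-03T15:50:01.757+0800 ". Splitting on a zero-width lookahead keeps the
# timestamp attached to its entry (zero-width splits need Python 3.7+).
ENTRY_START = re.compile(r"(?=\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{3}[+-]\d{4} )")

# Heartbeat message a node logs when it sees another member change state.
STATE_CHANGE = re.compile(r"Member (\S+) is now in state (\w+)")


def member_state_changes(log_text: str) -> List[Tuple[str, str, str]]:
    """Return (timestamp, member, new_state) tuples in log order (hypothetical helper)."""
    changes = []
    for entry in ENTRY_START.split(log_text):
        match = STATE_CHANGE.search(entry)
        # Skip text before the first timestamp and entries without a state change.
        if match and entry[:1].isdigit():
            timestamp = entry.split(" ", 1)[0]
            changes.append((timestamp, match.group(1), match.group(2)))
    return changes


SAMPLE = (
    "2019-07-03T15:49:52.586+0800 I REPL [replexec-3] Member 127.0.0.1:27017 is now in state SECONDARY "
    "2019-07-03T15:49:52.590+0800 I REPL [replexec-2] Member 127.0.0.1:27019 is now in state STARTUP2 "
    "2019-07-03T15:50:01.757+0800 I REPL [replexec-3] Member 127.0.0.1:27017 is now in state PRIMARY"
)

if __name__ == "__main__":
    for timestamp, member, state in member_state_changes(SAMPLE):
        print(timestamp, member, state)
```

Only heartbeat messages about other members are matched. A node's transitions of its own state are logged differently (for example `transition to SECONDARY from RECOVERING`) and would need a second pattern.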

27019 (secondary node):

C:\Users\78204>mongod --replSet rs0 --port 27019 --dbpath c:\srv\mongodb\rs0-2 --smallfiles --oplogSize 128
2019-07-03T15:47:32.479+0800 I CONTROL [main] Automatically disabling TLS 1.0, to force-enable TLS 1.0 specify --sslDisabledProtocols 'none'
2019-07-03T15:47:32.483+0800 I CONTROL [initandlisten] MongoDB starting : pid=10364 port=27019 dbpath=c:\srv\mongodb\rs0-2 64-bit host=DESKTOP-65Q8KM9
2019-07-03T15:47:32.483+0800 I CONTROL [initandlisten] targetMinOS: Windows 7/Windows Server 2008 R2
2019-07-03T15:47:32.483+0800 I CONTROL [initandlisten] db version v4.0.9
2019-07-03T15:47:32.483+0800 I CONTROL [initandlisten] git version: fc525e2d9b0e4bceff5c2201457e564362909765
2019-07-03T15:47:32.484+0800 I CONTROL [initandlisten] allocator: tcmalloc
2019-07-03T15:47:32.484+0800 I CONTROL [initandlisten] modules: none
2019-07-03T15:47:32.484+0800 I CONTROL [initandlisten] build environment:
2019-07-03T15:47:32.484+0800 I CONTROL [initandlisten] distmod: 2008plus-ssl
2019-07-03T15:47:32.484+0800 I CONTROL [initandlisten] distarch: x86_64
2019-07-03T15:47:32.484+0800 I CONTROL [initandlisten] target_arch: x86_64
2019-07-03T15:47:32.485+0800 I CONTROL [initandlisten] options: { net: { port: 27019 }, replication: { oplogSizeMB: 128, replSet: "rs0" }, storage: { dbPath: "c:\srv\mongodb\rs0-2", mmapv1: { smallFiles: true } } }
2019-07-03T15:47:32.487+0800 I STORAGE [initandlisten] wiredtiger_open config: create,cache_size=3543M,session_max=20000,eviction=(threads_min=4,threads_max=4),config_base=false,statistics=(fast),log=(enabled=true,archive=true,path=journal,compressor=snappy),file_manager=(close_idle_time=100000),statistics_log=(wait=0),verbose=(recovery_progress),
2019-07-03T15:47:32.518+0800 I STORAGE [initandlisten] WiredTiger message [1562140052:517899][10364:140724264981088], txn-recover: Set global recovery timestamp: 0
2019-07-03T15:47:32.529+0800 I RECOVERY [initandlisten] WiredTiger recoveryTimestamp. Ts: Timestamp(0, 0)
2019-07-03T15:47:32.551+0800 W STORAGE [initandlisten] Detected configuration for non-active storage engine mmapv1 when current storage engine is wiredTiger
2019-07-03T15:47:32.551+0800 I CONTROL [initandlisten]
2019-07-03T15:47:32.552+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2019-07-03T15:47:32.552+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2019-07-03T15:47:32.552+0800 I CONTROL [initandlisten]
2019-07-03T15:47:32.552+0800 I CONTROL [initandlisten] ** WARNING: This server is bound to localhost.
2019-07-03T15:47:32.553+0800 I CONTROL [initandlisten] ** Remote systems will be unable to connect to this server.
2019-07-03T15:47:32.554+0800 I CONTROL [initandlisten] ** Start the server with --bind_ip <address> to specify which IP
2019-07-03T15:47:32.554+0800 I CONTROL [initandlisten] ** addresses it should serve responses from, or with --bind_ip_all to
2019-07-03T15:47:32.555+0800 I CONTROL [initandlisten] ** bind to all interfaces. If this behavior is desired, start the
2019-07-03T15:47:32.555+0800 I CONTROL [initandlisten] ** server with --bind_ip 127.0.0.1 to disable this warning.
2019-07-03T15:47:32.556+0800 I CONTROL [initandlisten]
2019-07-03T15:47:32.559+0800 I STORAGE [initandlisten] createCollection: local.startup_log with generated UUID: 964763a9-cc3e-4deb-994b-522d542ea077
2019-07-03T15:47:32.770+0800 I FTDC [initandlisten] Initializing full-time diagnostic data capture with directory 'c:/srv/mongodb/rs0-2/diagnostic.data'
2019-07-03T15:47:32.772+0800 I STORAGE [initandlisten] createCollection: local.replset.oplogTruncateAfterPoint with generated UUID: 924a1e4c-6d84-46b7-944f-be1a49bc4a4e
2019-07-03T15:47:32.784+0800 I STORAGE [initandlisten] createCollection: local.replset.minvalid with generated UUID: 49df2b88-da4d-42e5-bc9c-c5aada81d12c
2019-07-03T15:47:32.795+0800 I REPL [initandlisten] Did not find local voted for document at startup.
2019-07-03T15:47:32.796+0800 I REPL [initandlisten] Did not find local Rollback ID document at startup. Creating one.
2019-07-03T15:47:32.796+0800 I STORAGE [initandlisten] createCollection: local.system.rollback.id with generated UUID: b45d2f57-d5fa-4f6f-a4c8-a3d19e650988
2019-07-03T15:47:32.811+0800 I REPL [initandlisten] Initialized the rollback ID to 1
2019-07-03T15:47:32.812+0800 I REPL [initandlisten] Did not find local replica set configuration document at startup; NoMatchingDocument: Did not find replica set configuration document in local.system.replset
2019-07-03T15:47:32.815+0800 I CONTROL [LogicalSessionCacheRefresh] Sessions collection is not set up; waiting until next sessions refresh interval: Replication has not yet been configured
2019-07-03T15:47:32.816+0800 I CONTROL [LogicalSessionCacheReap] Sessions collection is not set up; waiting until next sessions reap interval: config.system.sessions does not exist
2019-07-03T15:47:32.816+0800 I NETWORK [initandlisten] waiting for connections on port 27019
2019-07-03T15:49:50.529+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63187 #1 (1 connection now open)
2019-07-03T15:49:50.540+0800 I NETWORK [conn1] end connection 127.0.0.1:63187 (0 connections now open)
2019-07-03T15:49:50.543+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63189 #2 (1 connection now open)
2019-07-03T15:49:50.544+0800 I NETWORK [conn2] received client metadata from 127.0.0.1:63189 conn2: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:50.546+0800 I ASIO [Replication] Connecting to 127.0.0.1:27017
2019-07-03T15:49:52.565+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63196 #6 (2 connections now open)
2019-07-03T15:49:52.565+0800 I STORAGE [replexec-1] createCollection: local.system.replset with generated UUID: dd430eb4-95fd-4272-96c2-daff19d0b3ae
2019-07-03T15:49:52.566+0800 I NETWORK [conn6] end connection 127.0.0.1:63196 (1 connection now open)
2019-07-03T15:49:52.580+0800 I REPL [replexec-1] New replica set config in use: { _id: "rs0", version: 1, protocolVersion: 1, writeConcernMajorityJournalDefault: true, members: [ { _id: 0, host: "127.0.0.1:27017", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 }, { _id: 1, host: "127.0.0.1:27018", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 }, { _id: 2, host: "127.0.0.1:27019", arbiterOnly: false, buildIndexes: true, hidden: false, priority: 1.0, tags: {}, slaveDelay: 0, votes: 1 } ], settings: { chainingAllowed: true, heartbeatIntervalMillis: 2000, heartbeatTimeoutSecs: 10, electionTimeoutMillis: 10000, catchUpTimeoutMillis: -1, catchUpTakeoverDelayMillis: 30000, getLastErrorModes: {}, getLastErrorDefaults: { w: 1, wtimeout: 0 }, replicaSetId: ObjectId('5d1c5e1e207949b5278727c6') } }
2019-07-03T15:49:52.581+0800 I REPL [replexec-1] This node is 127.0.0.1:27019 in the config
2019-07-03T15:49:52.582+0800 I REPL [replexec-1] transition to STARTUP2 from STARTUP
2019-07-03T15:49:52.585+0800 I REPL [replexec-1] Starting replication storage threads
2019-07-03T15:49:52.585+0800 I ASIO [Replication] Connecting to 127.0.0.1:27018
2019-07-03T15:49:52.586+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63197 #7 (2 connections now open)
2019-07-03T15:49:52.586+0800 I REPL [replexec-0] Member 127.0.0.1:27017 is now in state SECONDARY
2019-07-03T15:49:52.588+0800 I NETWORK [conn7] received client metadata from 127.0.0.1:63197 conn7: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:49:52.589+0800 I REPL [replexec-2] Member 127.0.0.1:27018 is now in state STARTUP2
2019-07-03T15:49:52.595+0800 I STORAGE [replexec-1] createCollection: local.temp_oplog_buffer with generated UUID: 26138468-5e84-4bf4-9aae-c4e11e60ae17
2019-07-03T15:49:52.609+0800 I REPL [replication-0] Starting initial sync (attempt 1 of 10)
2019-07-03T15:49:52.610+0800 I STORAGE [replication-0] Finishing collection drop for local.temp_oplog_buffer (26138468-5e84-4bf4-9aae-c4e11e60ae17).
2019-07-03T15:49:52.616+0800 I STORAGE [replication-0] createCollection: local.temp_oplog_buffer with generated UUID: 5b228686-20d0-4311-8bfa-4f562180c3e7
2019-07-03T15:49:52.631+0800 I REPL [replication-1] sync source candidate: 127.0.0.1:27017
2019-07-03T15:49:52.631+0800 I REPL [replication-1] Initial syncer oplog truncation finished in: 0ms
2019-07-03T15:49:52.633+0800 I REPL [replication-1] ******
2019-07-03T15:49:52.634+0800 I REPL [replication-1] creating replication oplog of size: 128MB...
2019-07-03T15:49:52.634+0800 I STORAGE [replication-1] createCollection: local.oplog.rs with generated UUID: 2e71d9d2-10c8-4c2b-81de-443a7679cf3b
2019-07-03T15:49:52.642+0800 I STORAGE [replication-1] Starting OplogTruncaterThread local.oplog.rs
2019-07-03T15:49:52.643+0800 I STORAGE [replication-1] The size storer reports that the oplog contains 0 records totaling to 0 bytes
2019-07-03T15:49:52.644+0800 I STORAGE [replication-1] Scanning the oplog to determine where to place markers for truncation
2019-07-03T15:49:52.678+0800 I REPL [replication-1] ******
2019-07-03T15:49:52.678+0800 I STORAGE [replication-1] dropAllDatabasesExceptLocal 1
2019-07-03T15:49:52.680+0800 I ASIO [RS] Connecting to 127.0.0.1:27017
2019-07-03T15:49:52.684+0800 I ASIO [RS] Connecting to 127.0.0.1:27017
2019-07-03T15:49:52.685+0800 I REPL [replication-1] CollectionCloner::start called, on ns:admin.system.version
2019-07-03T15:49:52.689+0800 I STORAGE [repl writer worker 15] createCollection: admin.system.version with provided UUID: c30ef668-a63a-41b0-a617-f0d9be489f23
2019-07-03T15:49:52.711+0800 I INDEX [repl writer worker 15] build index on: admin.system.version properties: { v: 2, key: { _id: 1 }, name: "_id_", ns: "admin.system.version" }
2019-07-03T15:49:52.711+0800 I INDEX [repl writer worker 15] building index using bulk method; build may temporarily use up to 500 megabytes of RAM
2019-07-03T15:49:52.714+0800 I COMMAND [repl writer worker 0] setting featureCompatibilityVersion to 4.0
2019-07-03T15:49:52.716+0800 I NETWORK [repl writer worker 0] Skip closing connection for connection # 7
2019-07-03T15:49:52.716+0800 I NETWORK [repl writer worker 0] Skip closing connection for connection # 2
2019-07-03T15:49:52.717+0800 I REPL [repl writer worker 0] CollectionCloner ns:admin.system.version finished cloning with status: OK
2019-07-03T15:49:52.725+0800 I REPL [repl writer worker 0] Finished cloning data: OK. Beginning oplog replay.
2019-07-03T15:49:52.726+0800 I REPL [replication-0] No need to apply operations. (currently at { : Timestamp(1562140190, 1) })
2019-07-03T15:49:52.727+0800 I REPL [replication-0] Finished fetching oplog during initial sync: CallbackCanceled: error in fetcher batch callback: oplog fetcher is shutting down. Last fetched optime and hash: { ts: Timestamp(0, 0), t: -1 }[0]
2019-07-03T15:49:52.729+0800 I REPL [replication-0] Initial sync attempt finishing up.
2019-07-03T15:49:52.731+0800 I REPL [replication-0] Initial Sync Attempt Statistics: { failedInitialSyncAttempts: 0, maxFailedInitialSyncAttempts: 10, initialSyncStart: new Date(1562140192609), initialSyncAttempts: [], fetchedMissingDocs: 0, appliedOps: 0, initialSyncOplogStart: Timestamp(1562140190, 1), initialSyncOplogEnd: Timestamp(1562140190, 1), databases: { databasesCloned: 1, admin: { collections: 1, clonedCollections: 1, start: new Date(1562140192684), end: new Date(1562140192726), elapsedMillis: 42, admin.system.version: { documentsToCopy: 1, documentsCopied: 1, indexes: 1, fetchedBatches: 1, start: new Date(1562140192686), end: new Date(1562140192726), elapsedMillis: 40 } } } }
2019-07-03T15:49:52.733+0800 I STORAGE [replication-0] Finishing collection drop for local.temp_oplog_buffer (5b228686-20d0-4311-8bfa-4f562180c3e7).
2019-07-03T15:49:52.742+0800 I REPL [replication-0] initial sync done; took 0s.
2019-07-03T15:49:52.743+0800 I REPL [replication-0] transition to RECOVERING from STARTUP2
2019-07-03T15:49:52.743+0800 I REPL [replication-0] Starting replication fetcher thread
2019-07-03T15:49:52.745+0800 I REPL [replication-0] Starting replication applier thread
2019-07-03T15:49:52.745+0800 I REPL [rsBackgroundSync] could not find member to sync from
2019-07-03T15:49:52.745+0800 I REPL [replication-0] Starting replication reporter thread
2019-07-03T15:49:52.745+0800 I REPL [rsSync-0] Starting oplog application
2019-07-03T15:49:52.748+0800 I REPL [replexec-0] Member 127.0.0.1:27018 is now in state RECOVERING
2019-07-03T15:49:52.750+0800 I REPL [rsSync-0] transition to SECONDARY from RECOVERING
2019-07-03T15:49:52.750+0800 I REPL [rsSync-0] Resetting sync source to empty, which was :27017
2019-07-03T15:49:53.249+0800 I REPL [replexec-0] Member 127.0.0.1:27018 is now in state SECONDARY
2019-07-03T15:50:01.616+0800 I STORAGE [conn2] createCollection: local.replset.election with generated UUID: d254240c-e045-48a2-a402-6e3f4513157e
2019-07-03T15:50:01.630+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63204 #11 (3 connections now open)
2019-07-03T15:50:01.632+0800 I NETWORK [conn11] received client metadata from 127.0.0.1:63204 conn11: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:50:01.756+0800 I REPL [replexec-3] Member 127.0.0.1:27017 is now in state PRIMARY
2019-07-03T15:50:02.684+0800 I CONNPOOL [RS] Ending connection to host 127.0.0.1:27017 due to bad connection status; 1 connections to that host remain open
2019-07-03T15:50:03.753+0800 I REPL [rsBackgroundSync] sync source candidate: 127.0.0.1:27017
2019-07-03T15:50:03.754+0800 I REPL [rsBackgroundSync] Changed sync source from empty to 127.0.0.1:27017
2019-07-03T15:50:03.757+0800 I STORAGE [repl writer worker 5] createCollection: config.transactions with provided UUID: 58e7e6ae-12cd-4c14-80ba-4175445b5b04
2019-07-03T15:50:03.759+0800 I ASIO [RS] Connecting to 127.0.0.1:27017
2019-07-03T15:50:03.785+0800 I STORAGE [repl writer worker 7] createCollection: admin.system.keys with provided UUID: 963fabbf-d80b-430a-b879-a45f306b5c34
2019-07-03T15:50:03.805+0800 I STORAGE [replication-1] Triggering the first stable checkpoint. Initial Data: Timestamp(1562140190, 1) PrevStable: Timestamp(0, 0) CurrStable: Timestamp(1562140202, 4)
2019-07-03T15:50:09.640+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63210 #13 (4 connections now open)
2019-07-03T15:50:09.642+0800 I NETWORK [conn13] received client metadata from 127.0.0.1:63210 conn13: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:50:11.616+0800 I NETWORK [conn2] end connection 127.0.0.1:63189 (3 connections now open)
2019-07-03T15:51:47.244+0800 I STORAGE [repl writer worker 14] createCollection: config.system.sessions with provided UUID: 2eb274a8-af02-4f11-9d64-a4814d32d4ac
2019-07-03T15:51:47.274+0800 I INDEX [repl writer worker 2] build index on: config.system.sessions properties: { v: 2, key: { lastUse: 1 }, name: "lsidTTLIndex", expireAfterSeconds: 1800, ns: "config.system.sessions" }
2019-07-03T15:51:47.275+0800 I INDEX [repl writer worker 2] building index using bulk method; build may temporarily use up to 500 megabytes of RAM
2019-07-03T15:51:47.280+0800 I INDEX [repl writer worker 2] build index done. scanned 0 total records. 0 secs
2019-07-03T15:52:21.374+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63237 #14 (4 connections now open)
2019-07-03T15:52:21.374+0800 I NETWORK [conn14] received client metadata from 127.0.0.1:63237 conn14: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:52:32.816+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T15:52:32.816+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63243 #17 (5 connections now open)
2019-07-03T15:52:32.819+0800 I NETWORK [conn17] received client metadata from 127.0.0.1:63243 conn17: { driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T15:52:32.820+0800 I NETWORK [LogicalSessionCacheRefresh] Successfully connected to 127.0.0.1:27019 (1 connections now open to 127.0.0.1:27019 with a 5 second timeout)
2019-07-03T15:52:32.821+0800 I NETWORK [ReplicaSetMonitor-TaskExecutor] Successfully connected to 127.0.0.1:27018 (1 connections now open to 127.0.0.1:27018 with a 5 second timeout)
2019-07-03T15:52:32.824+0800 I NETWORK [LogicalSessionCacheRefresh] Successfully connected to 127.0.0.1:27017 (1 connections now open to 127.0.0.1:27017 with a 5 second timeout)
2019-07-03T15:52:32.825+0800 I NETWORK [LogicalSessionCacheRefresh] Successfully connected to 127.0.0.1:27017 (1 connections now open to 127.0.0.1:27017 with a 0 second timeout)
2019-07-03T15:52:32.825+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T15:52:32.826+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T15:52:32.827+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T15:57:32.815+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T15:59:03.792+0800 I NETWORK [conn13] end connection 127.0.0.1:63210 (4 connections now open)
2019-07-03T16:00:59.836+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63368 #20 (5 connections now open)
2019-07-03T16:00:59.837+0800 I NETWORK [conn20] received client metadata from 127.0.0.1:63368 conn20: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T16:02:01.838+0800 I NETWORK [conn20] end connection 127.0.0.1:63368 (4 connections now open)
2019-07-03T16:02:07.859+0800 I NETWORK [listener] connection accepted from 127.0.0.1:63390 #21 (5 connections now open)
2019-07-03T16:02:07.859+0800 I NETWORK [conn21] received client metadata from 127.0.0.1:63390 conn21: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T16:02:32.815+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:02:32.816+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:02:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:02:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:07:32.816+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:07:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:07:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:07:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:08:56.681+0800 I STORAGE [repl writer worker 2] createCollection: admin.zt with provided UUID: 707bf97e-1fb1-47e8-993a-5e05577e2eff
2019-07-03T16:12:32.815+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:17:32.816+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:17:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:17:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:22:32.816+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:22:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:22:32.819+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:23:20.832+0800 I NETWORK [listener] connection accepted from 127.0.0.1:64042 #22 (6 connections now open)
2019-07-03T16:23:20.833+0800 I NETWORK [conn22] received client metadata from 127.0.0.1:64042 conn22: { application: { name: "MongoDB Shell" }, driver: { name: "MongoDB Internal Client", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T16:27:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:27:32.831+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:32:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:32:32.843+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:37:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:37:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:40:36.514+0800 I NETWORK [conn11] end connection 127.0.0.1:63204 (5 connections now open)
2019-07-03T16:41:02.535+0800 I NETWORK [listener] connection accepted from 127.0.0.1:64417 #23 (6 connections now open)
2019-07-03T16:41:02.535+0800 I NETWORK [conn23] received client metadata from 127.0.0.1:64417 conn23: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T16:42:32.817+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:42:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:42:32.819+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:47:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:47:32.819+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:52:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:52:32.819+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:52:32.820+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:52:32.820+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019
2019-07-03T16:54:30.742+0800 I NETWORK [conn23] end connection 127.0.0.1:64417 (5 connections now open)
2019-07-03T16:55:02.769+0800 I NETWORK [listener] connection accepted from 127.0.0.1:64708 #24 (6 connections now open)
2019-07-03T16:55:02.770+0800 I NETWORK [conn24] received client metadata from 127.0.0.1:64708 conn24: { driver: { name: "NetworkInterfaceTL", version: "4.0.9" }, os: { type: "Windows", name: "Microsoft Windows 10", architecture: "x86_64", version: "10.0 (build 17763)" } }
2019-07-03T16:57:32.818+0800 I NETWORK [LogicalSessionCacheRefresh] Starting new replica set monitor for rs0/127.0.0.1:27017,127.0.0.1:27018,127.0.0.1:27019

Before reading the setup process below, it is best to build the replica set by following this document first:

https://docs.mongodb.com/manual/tutorial/deploy-replica-set-for-testing/

1: Setting up the replica set

We test the replica set on a single computer, using three different ports. Turn off the firewall before you start.

step1 : Open a new DOS window and create the following three folders, which are the data directories the members need (one per member):

mkdir c:\srv\mongodb\rs0-0
mkdir c:\srv\mongodb\rs0-1
mkdir c:\srv\mongodb\rs0-2
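If you prefer to script this step, here is a minimal Python sketch that creates the same directories. It assumes the base path c:\srv\mongodb used in the commands above. The function name make_data_dirs is hypothetical.

```python
from pathlib import Path


def make_data_dirs(base, set_name="rs0", count=3):
    """Create <base>/<set_name>-0 .. <set_name>-<count-1> and return the paths.

    Like cmd.exe's mkdir, missing parent directories are created as needed.
    Directories that already exist are left untouched.
    """
    dirs = []
    for i in range(count):
        d = Path(base) / f"{set_name}-{i}"
        d.mkdir(parents=True, exist_ok=True)
        dirs.append(d)
    return dirs
```

Usage: make_data_dirs(r"c:\srv\mongodb") creates rs0-0, rs0-1 and rs0-2 under that folder. Running it a second time is harmless.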

step2 :

First member: open a new DOS window and run the following command

C:\Users\78204>mongod --replSet rs0 --port 27017 --dbpath c:\srv\mongodb\rs0-0 --smallfiles --oplogSize 128

Second member: open a new DOS window and run the following command

C:\Users\78204>mongod --replSet rs0 --port 27018 --dbpath c:\srv\mongodb\rs0-1 --smallfiles --oplogSize 128

Third member: open a new DOS window and run the following command

C:\Users\78204>mongod --replSet rs0 --port 27019 --dbpath c:\srv\mongodb\rs0-2 --smallfiles --oplogSize 128

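This starts each instance as a member of a replica set named rs0. Each instance runs on a different port (27017, 27018, 27019), and --dbpath specifies the path of its data directory.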

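The --smallfiles and --oplogSize settings reduce the disk space each mongod instance uses. This suits test and development deployments, because it keeps the machine from being overloaded. Note that --smallfiles only affects the MMAPv1 storage engine. With WiredTiger, the default engine in 4.0, it has no effect, which is why the startup log warns "Detected configuration for non-active storage engine mmapv1 when current storage engine is wiredTiger". The option was removed in MongoDB 4.2.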
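The three commands differ only in port and data directory. The minimal Python sketch below builds them from a list of ports. It assumes the c:\srv\mongodb\rs0-N layout above, and the helper names are hypothetical. --smallfiles is opt-in here because WiredTiger ignores it.

```python
def mongod_args(port, dbpath, set_name="rs0", oplog_mb=128, smallfiles=False):
    """Build the mongod command line for one replica-set member."""
    args = ["mongod", "--replSet", set_name, "--port", str(port),
            "--dbpath", str(dbpath), "--oplogSize", str(oplog_mb)]
    if smallfiles:
        # Only meaningful for MMAPv1; ignored by WiredTiger, removed in 4.2.
        args.append("--smallfiles")
    return args


def member_commands(base=r"c:\srv\mongodb", ports=(27017, 27018, 27019)):
    """One command per member: port 27017 -> rs0-0, 27018 -> rs0-1, ..."""
    return [mongod_args(p, f"{base}\\rs0-{i}") for i, p in enumerate(ports)]
```

Each list can be passed to subprocess.Popen(cmd) to start the members in the background, instead of opening three DOS windows by hand.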

step3 :

1) Connect to one of your mongod instances through the mongo shell. Specify its port number to indicate which instance.

C:\Users\78204>mongo --port 27017
MongoDB shell version v4.0.9
connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb
Implicit session: session { "id" : UUID("159b56d2-00cc-47e0-a7a5-2498c6661e29") }
MongoDB server version: 4.0.9

2) In the mongo shell, create a replica set configuration object, as in the example below

> rsconf = { ... _id: "rs0", ... members: [ ... { ... _id: 0, ... host: "127.0.0.1:27017" ... }, ... { ... _id: 1, ... host: "127.0.0.1:27018" ... }, ... { ... _id: 2, ... host: "127.0.0.1:27019" ... } ... ] ... }
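The configuration object is plain data: the set name plus one entry per member, with _id values 0, 1, 2. The minimal Python sketch below builds the same document. make_rsconf is a hypothetical helper.

```python
def make_rsconf(set_name, hosts):
    """Build a replica set config like rsconf above.

    Member _id values are 0, 1, 2, ... in the order the hosts are given.
    """
    return {
        "_id": set_name,
        "members": [{"_id": i, "host": h} for i, h in enumerate(hosts)],
    }
```

If you use PyMongo (an assumption, not part of this walkthrough), the result could be sent as client.admin.command("replSetInitiate", conf) on a client connected directly to 127.0.0.1:27017. That is the server command behind rs.initiate().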

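3) Initiate the replica set with rs.initiate(), passing it the rsconf object, as shown below. The prompt changes from rs0:SECONDARY> to rs0:PRIMARY> once this node has been elected primary.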

> rs.initiate(rsconf) { "ok" : 1, "operationTime" : Timestamp(1562140190, 1), "$clusterTime" : { "clusterTime" : Timestamp(1562140190, 1), "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) } } } rs0:SECONDARY> rs0:PRIMARY>

4) Now check the replica set status to verify that it was set up correctly

rs0:PRIMARY> rs.status() { "set" : "rs0", "date" : ISODate("2019-07-03T07:55:34.027Z"), "myState" : 1, "term" : NumberLong(1), "syncingTo" : "", "syncSourceHost" : "", "syncSourceId" : -1, "heartbeatIntervalMillis" : NumberLong(2000), "optimes" : { "lastCommittedOpTime" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) }, "readConcernMajorityOpTime" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) }, "appliedOpTime" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) }, "durableOpTime" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) } }, "lastStableCheckpointTimestamp" : Timestamp(1562140502, 1), "members" : [ { "_id" : 0, "name" : "127.0.0.1:27017", "health" : 1, "state" : 1, "stateStr" : "PRIMARY", "uptime" : 528, "optime" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) }, "optimeDate" : ISODate("2019-07-03T07:55:32Z"), "syncingTo" : "", "syncSourceHost" : "", "syncSourceId" : -1, "infoMessage" : "", "electionTime" : Timestamp(1562140201, 1), "electionDate" : ISODate("2019-07-03T07:50:01Z"), "configVersion" : 1, "self" : true, "lastHeartbeatMessage" : "" }, { "_id" : 1, "name" : "127.0.0.1:27018", "health" : 1, "state" : 2, "stateStr" : "SECONDARY", "uptime" : 343, "optime" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) }, "optimeDurable" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) }, "optimeDate" : ISODate("2019-07-03T07:55:32Z"), "optimeDurableDate" : ISODate("2019-07-03T07:55:32Z"), "lastHeartbeat" : ISODate("2019-07-03T07:55:33.747Z"), "lastHeartbeatRecv" : ISODate("2019-07-03T07:55:33.841Z"), "pingMs" : NumberLong(0), "lastHeartbeatMessage" : "", "syncingTo" : "127.0.0.1:27017", "syncSourceHost" : "127.0.0.1:27017", "syncSourceId" : 0, "infoMessage" : "", "configVersion" : 1 }, { "_id" : 2, "name" : "127.0.0.1:27019", "health" : 1, "state" : 2, "stateStr" : "SECONDARY", "uptime" : 343, "optime" : { "ts" : Timestamp(1562140532, 1), "t" : NumberLong(1) }, "optimeDurable" : { "ts" : 
Timestamp(1562140532, 1), "t" : NumberLong(1) }, "optimeDate" : ISODate("2019-07-03T07:55:32Z"), "optimeDurableDate" : ISODate("2019-07-03T07:55:32Z"), "lastHeartbeat" : ISODate("2019-07-03T07:55:33.747Z"), "lastHeartbeatRecv" : ISODate("2019-07-03T07:55:33.841Z"), "pingMs" : NumberLong(0), "lastHeartbeatMessage" : "", "syncingTo" : "127.0.0.1:27017", "syncSourceHost" : "127.0.0.1:27017", "syncSourceId" : 0, "infoMessage" : "", "configVersion" : 1 } ], "ok" : 1, "operationTime" : Timestamp(1562140532, 1), "$clusterTime" : { "clusterTime" : Timestamp(1562140532, 1), "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) } } } rs0:PRIMARY>

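The output shows that everything is OK: 127.0.0.1:27017 is PRIMARY, the other two members are SECONDARY, and all three report "health" : 1. The replica set has now been configured and initiated successfully.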
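The "everything is OK" check can be made explicit. The minimal Python sketch below inspects a replSetGetStatus document, which is what rs.status() prints. It requires exactly one PRIMARY and every member at health 1 in a normal state. check_status is a hypothetical helper, and the accepted state names are a simplifying assumption.

```python
def check_status(status):
    """Return a list of problems in a replSetGetStatus document.

    An empty list means the set looks healthy.
    """
    problems = []
    members = status.get("members", [])
    primaries = [m["name"] for m in members if m.get("stateStr") == "PRIMARY"]
    if len(primaries) != 1:
        problems.append(f"expected exactly one PRIMARY, found {primaries}")
    for m in members:
        if m.get("health") != 1:
            problems.append(f"{m['name']} is unhealthy ({m.get('stateStr')})")
        elif m.get("stateStr") not in ("PRIMARY", "SECONDARY", "ARBITER"):
            problems.append(f"{m['name']} is in state {m.get('stateStr')}")
    return problems
```

Fed the output above (trimmed to name, health and stateStr), it returns an empty list.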

step4 :

How to tell the primary from the secondaries: run db.isMaster() and look at the "ismaster" and "secondary" fields.

On the primary, the output looks like this

rs0:PRIMARY> db.isMaster() { "hosts" : [ "127.0.0.1:27017", "127.0.0.1:27018", "127.0.0.1:27019" ], "setName" : "rs0", "setVersion" : 1, "ismaster" : true, "secondary" : false, "primary" : "127.0.0.1:27017", "me" : "127.0.0.1:27017", "electionId" : ObjectId("7fffffff0000000000000001"), "lastWrite" : { "opTime" : { "ts" : Timestamp(1562141022, 1), "t" : NumberLong(1) }, "lastWriteDate" : ISODate("2019-07-03T08:03:42Z"), "majorityOpTime" : { "ts" : Timestamp(1562141022, 1), "t" : NumberLong(1) }, "majorityWriteDate" : ISODate("2019-07-03T08:03:42Z") }, "maxBsonObjectSize" : 16777216, "maxMessageSizeBytes" : 48000000, "maxWriteBatchSize" : 100000, "localTime" : ISODate("2019-07-03T08:03:43.491Z"), "logicalSessionTimeoutMinutes" : 30, "minWireVersion" : 0, "maxWireVersion" : 7, "readOnly" : false, "ok" : 1, "operationTime" : Timestamp(1562141022, 1), "$clusterTime" : { "clusterTime" : Timestamp(1562141022, 1), "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) } } }

On a secondary, the output looks like this

rs0:SECONDARY> db.isMaster() { "hosts" : [ "127.0.0.1:27017", "127.0.0.1:27018", "127.0.0.1:27019" ], "setName" : "rs0", "setVersion" : 1, "ismaster" : false, "secondary" : true, "primary" : "127.0.0.1:27017", "me" : "127.0.0.1:27018", "lastWrite" : { "opTime" : { "ts" : Timestamp(1562140932, 1), "t" : NumberLong(1) }, "lastWriteDate" : ISODate("2019-07-03T08:02:12Z"), "majorityOpTime" : { "ts" : Timestamp(1562140932, 1), "t" : NumberLong(1) }, "majorityWriteDate" : ISODate("2019-07-03T08:02:12Z") }, "maxBsonObjectSize" : 16777216, "maxMessageSizeBytes" : 48000000, "maxWriteBatchSize" : 100000, "localTime" : ISODate("2019-07-03T08:02:13.667Z"), "logicalSessionTimeoutMinutes" : 30, "minWireVersion" : 0, "maxWireVersion" : 7, "readOnly" : false, "ok" : 1, "operationTime" : Timestamp(1562140932, 1), "$clusterTime" : { "clusterTime" : Timestamp(1562140932, 1), "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) } } }
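The two replies above differ in the "ismaster" and "secondary" flags. The minimal Python sketch below classifies a node from its isMaster reply. role_from_is_master is a hypothetical helper. Grouping all other cases (arbiter, recovering, not yet initiated) under "other" is a simplification.

```python
def role_from_is_master(reply):
    """Classify a node from its isMaster reply."""
    if reply.get("ismaster"):
        return "primary"
    if reply.get("secondary"):
        return "secondary"
    return "other"  # e.g. arbiter, recovering, or not yet initiated
```

Both replies also carry "primary" : "127.0.0.1:27017", so any member can tell you where the current primary is.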

2: Verifying data consistency across the replica set

step1 : Insert a document on the primary (port 27017), then check whether the secondaries also have it

rs0:PRIMARY> db test rs0:PRIMARY> use admin switched to db admin rs0:PRIMARY> rs0:PRIMARY> p={"ammeterId" : "110004217635", "rulerCode" : "04000102", "dataItem" : "193710", "terminalTime" : "2019-06-20 19:29:51", "storageTime" : "2019-06-21 10:30:12" } { "ammeterId" : "110004217635", "rulerCode" : "04000102", "dataItem" : "193710", "terminalTime" : "2019-06-20 19:29:51", "storageTime" : "2019-06-21 10:30:12" } rs0:PRIMARY> db.zt.insert(p) WriteResult({ "nInserted" : 1 }) rs0:PRIMARY>

step2 : Open a new DOS window, log in to a secondary, and check whether the secondary (27018) has the document inserted on the primary (27017)

Log in to the chosen secondary with mongo --port <port>:
C:\Users\78204>mongo --port 27018 ...(login messages omitted) rs0:SECONDARY> db test rs0:SECONDARY> use admin switched to db admin rs0:SECONDARY> db.zt.findOne() { "_id" : ObjectId("5d1c62983e33c5ca0358ac3d"), "ammeterId" : "110004217635", "rulerCode" : "04000102", "dataItem" : "193710", "terminalTime" : "2019-06-20 19:29:51", "storageTime" : "2019-06-21 10:30:12" } rs0:SECONDARY>

step3 : In the same way, open a new DOS window, log in to the other secondary, and check whether it (27019) has the document inserted on the primary (27017)

C:\Users\78204>mongo --port 27019 ...(login messages omitted) rs0:SECONDARY> db test rs0:SECONDARY> rs0:SECONDARY> use admin switched to db admin rs0:SECONDARY> db.zt.findOne() { "_id" : ObjectId("5d1c62983e33c5ca0358ac3d"), "ammeterId" : "110004217635", "rulerCode" : "04000102", "dataItem" : "193710", "terminalTime" : "2019-06-20 19:29:51", "storageTime" : "2019-06-21 10:30:12" }
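Steps 1 to 3 boil down to one comparison: the copy read on each secondary should equal the document read on the primary. The minimal Python sketch below makes that explicit. It assumes the documents have already been fetched; PyMongo's find_one(), for example, returns None when nothing matches. find_mismatches is a hypothetical helper.

```python
def find_mismatches(primary_doc, replica_docs):
    """Return the names of nodes whose copy is missing or differs.

    replica_docs maps a node name (e.g. "27018") to the document read there.
    """
    return sorted(name for name, doc in replica_docs.items() if doc != primary_doc)
```

For the session above, both 27018 and 27019 return the same _id and fields, so the result would be an empty list.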

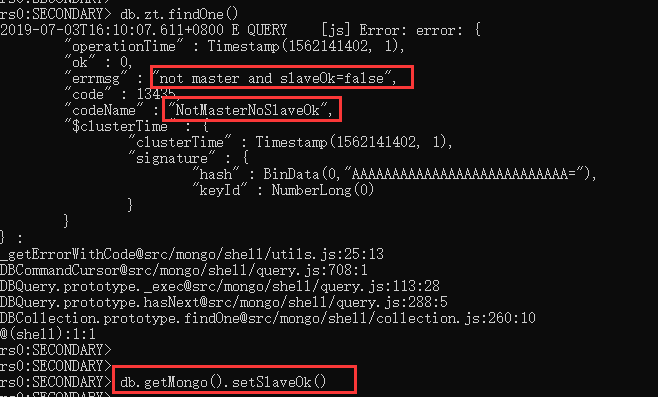

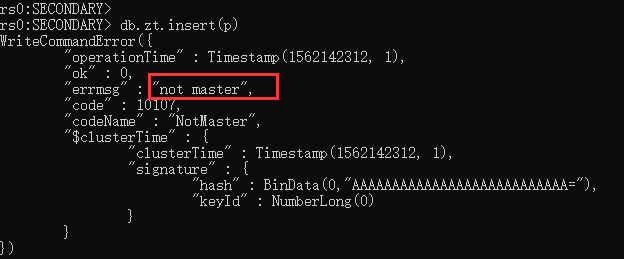

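In step2 and step3, the query on the secondary fails with an error at first, because by default a secondary rejects reads from the shell. The fix is to run db.getMongo().setSlaveOk() in that shell before querying.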
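Drivers need an equivalent of setSlaveOk() when they read from one particular secondary. The minimal Python sketch below builds such a connection string. It assumes a driver that supports the directConnection and readPreference URI options (for example PyMongo 3.11 or later). secondary_uri is a hypothetical helper.

```python
from urllib.parse import urlencode


def secondary_uri(host, port):
    """Connection string for reading from one specific secondary.

    directConnection=true talks to that single node instead of discovering
    the whole set. readPreference=secondaryPreferred lets reads be served
    by a secondary.
    """
    query = urlencode({"directConnection": "true",
                       "readPreference": "secondaryPreferred"})
    return f"mongodb://{host}:{port}/?{query}"
```

For example, secondary_uri("127.0.0.1", 27018) gives mongodb://127.0.0.1:27018/?directConnection=true&readPreference=secondaryPreferred.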

Secondaries do not accept writes; only the primary can insert data, as shown in the screenshot.

3: Removing a server

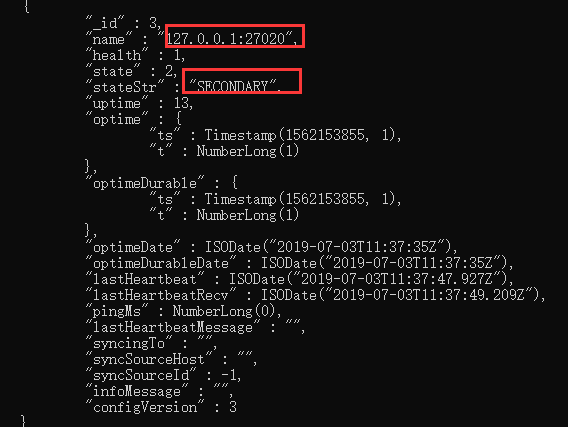

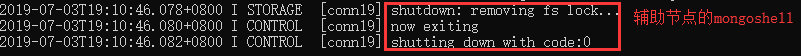

Let's remove the secondary on port 27018.

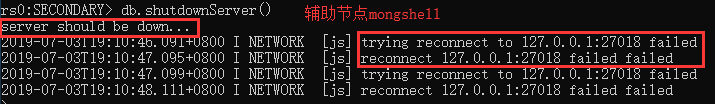

step1 :

Connect to the second member's mongod instance with mongo --port 27018.

Switch to the admin database (db.shutdownServer() must be run there), then run db.shutdownServer()

rs0:SECONDARY> db admin rs0:SECONDARY> rs0:SECONDARY> db.shutdownServer() server should be down... 2019-07-03T19:10:46.091+0800 I NETWORK [js] trying reconnect to 127.0.0.1:27018 failed 2019-07-03T19:10:47.095+0800 I NETWORK [js] reconnect 127.0.0.1:27018 failed failed 2019-07-03T19:10:47.099+0800 I NETWORK [js] trying reconnect to 127.0.0.1:27018 failed 2019-07-03T19:10:48.111+0800 I NETWORK [js] reconnect 127.0.0.1:27018 failed failed >

If you now run rs.status() on the primary, the entry for that secondary shows errors, like this

{ "_id" : 1, "name" : "127.0.0.1:27018", "health" : 0, "state" : 8, "stateStr" : "(not reachable/healthy)", "uptime" : 0, "optime" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) }, "optimeDurable" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) }, "optimeDate" : ISODate("1970-01-01T00:00:00Z"), "optimeDurableDate" : ISODate("1970-01-01T00:00:00Z"), "lastHeartbeat" : ISODate("2019-07-03T11:12:05.401Z"), "lastHeartbeatRecv" : ISODate("2019-07-03T11:10:45.158Z"), "pingMs" : NumberLong(0), "lastHeartbeatMessage" : "Error connecting to 127.0.0.1:27018 :: caused by :: No connection could be made because the target machine actively refused it.", "syncingTo" : "", "syncSourceHost" : "", "syncSourceId" : -1, "infoMessage" : "", "configVersion" : -1 },
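A stopped member shows up with "health" : 0 and the reason in "lastHeartbeatMessage". The minimal Python sketch below pulls exactly those two fields out of a status document, which is handy when there are many members. unreachable_members is a hypothetical helper.

```python
def unreachable_members(status):
    """Map each member with health 0 to its lastHeartbeatMessage."""
    return {m["name"]: m.get("lastHeartbeatMessage", "")
            for m in status.get("members", [])
            if m.get("health") == 0}
```

For the entry above, the result would map "127.0.0.1:27018" to its "Error connecting to 127.0.0.1:27018 ..." message.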

step2 :

Next, connect to the primary in the mongo shell.

Run the following command to remove the member: rs.remove("127.0.0.1:27018")

rs0:PRIMARY> db admin rs0:PRIMARY> rs0:PRIMARY> rs.remove("127.0.0.1:27018") { "ok" : 1, "operationTime" : Timestamp(1562152380, 1), "$clusterTime" : { "clusterTime" : Timestamp(1562152380, 1), "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) } } } rs0:PRIMARY>

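Now check the replica set status on the primary again. The error entry for that secondary is gone: only members 0 and 2 remain, and "configVersion" has gone from 1 to 2. This shows the secondary was removed (one server taken out) successfully.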

rs0:PRIMARY> rs.status() { "set" : "rs0", "date" : ISODate("2019-07-03T11:13:24.627Z"), "myState" : 1, "term" : NumberLong(1), "syncingTo" : "", "syncSourceHost" : "", "syncSourceId" : -1, "heartbeatIntervalMillis" : NumberLong(2000), "optimes" : { "lastCommittedOpTime" : { "ts" : Timestamp(1562152403, 1), "t" : NumberLong(1) }, "readConcernMajorityOpTime" : { "ts" : Timestamp(1562152403, 1), "t" : NumberLong(1) }, "appliedOpTime" : { "ts" : Timestamp(1562152403, 1), "t" : NumberLong(1) }, "durableOpTime" : { "ts" : Timestamp(1562152403, 1), "t" : NumberLong(1) } }, "lastStableCheckpointTimestamp" : Timestamp(1562152380, 1), "members" : [ { "_id" : 0, "name" : "127.0.0.1:27017", "health" : 1, "state" : 1, "stateStr" : "PRIMARY", "uptime" : 12398, "optime" : { "ts" : Timestamp(1562152403, 1), "t" : NumberLong(1) }, "optimeDate" : ISODate("2019-07-03T11:13:23Z"), "syncingTo" : "", "syncSourceHost" : "", "syncSourceId" : -1, "infoMessage" : "", "electionTime" : Timestamp(1562140201, 1), "electionDate" : ISODate("2019-07-03T07:50:01Z"), "configVersion" : 2, "self" : true, "lastHeartbeatMessage" : "" }, { "_id" : 2, "name" : "127.0.0.1:27019", "health" : 1, "state" : 2, "stateStr" : "SECONDARY", "uptime" : 12214, "optime" : { "ts" : Timestamp(1562152403, 1), "t" : NumberLong(1) }, "optimeDurable" : { "ts" : Timestamp(1562152403, 1), "t" : NumberLong(1) }, "optimeDate" : ISODate("2019-07-03T11:13:23Z"), "optimeDurableDate" : ISODate("2019-07-03T11:13:23Z"), "lastHeartbeat" : ISODate("2019-07-03T11:13:24.491Z"), "lastHeartbeatRecv" : ISODate("2019-07-03T11:13:24.519Z"), "pingMs" : NumberLong(0), "lastHeartbeatMessage" : "", "syncingTo" : "127.0.0.1:27017", "syncSourceHost" : "127.0.0.1:27017", "syncSourceId" : 0, "infoMessage" : "", "configVersion" : 2 } ], "ok" : 1, "operationTime" : Timestamp(1562152403, 1), "$clusterTime" : { "clusterTime" : Timestamp(1562152403, 1), "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) } } } 
rs0:PRIMARY>
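The jump to "configVersion" : 2 comes from the config change itself. The shell helper rs.remove() takes the current config, drops the member, increments "version" and submits the result with the replSetReconfig command. The minimal Python sketch below reproduces only the config transformation. It is an illustration of that behaviour, not the shell's own code, and remove_member is a hypothetical helper.

```python
import copy


def remove_member(config, host):
    """Return a new replica set config without `host`, with version bumped."""
    new = copy.deepcopy(config)  # leave the caller's config untouched
    before = len(new["members"])
    new["members"] = [m for m in new["members"] if m["host"] != host]
    if len(new["members"]) == before:
        raise ValueError(f"{host} is not a member")
    new["version"] += 1
    return new
```

Applied to the original three-member config at version 1, removing 127.0.0.1:27018 leaves members 0 and 2 at version 2, matching the status output above.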

Screenshot of the mongo shell session:

4: Adding a server

To add a new active member to the replica set, start it the same way as the other members.

step1 :

First open a new command prompt and create the data directory

C:\Users\78204>mkdir c:\srv\mongodb\rs0-3

Then start mongod with the following command (the new member's port is 27020)

C:\Users\78204>mongod --replSet rs0 --port 27020 --dbpath c:\srv\mongodb\rs0-3 --smallfiles --oplogSize 128

step2 :

Connect to the mongo shell of the primary (27017) and add the new member to the replica set: rs.add("127.0.0.1:27020")
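When given just a host string, rs.add() has to pick the new member's _id. As far as I know, the 4.0 shell helper uses one more than the largest existing _id and then increments "version". After the removal above, the members are _id 0 and 2, so 127.0.0.1:27020 would get _id 3. The minimal Python sketch below reproduces that config change. It is an illustration under that assumption, not the shell's own code, and add_member is a hypothetical helper.

```python
import copy


def add_member(config, host):
    """Return a new config with `host` appended and version bumped."""
    new = copy.deepcopy(config)  # leave the caller's config untouched
    if any(m["host"] == host for m in new["members"]):
        raise ValueError(f"{host} is already a member")
    # New _id = largest existing _id + 1 (1 if there are no members).
    next_id = max((m["_id"] for m in new["members"]), default=0) + 1
    new["members"].append({"_id": next_id, "host": host})
    new["version"] += 1
    return new
```

After running rs.add(), rs.status() on the primary should list 127.0.0.1:27020, which moves to SECONDARY once its initial sync finishes.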