本系列博客根据Geoffrey Hinton课程Neural Network for Machine Learning总结. 课程网址为:

https://www.coursera.org/course/neuralnets

1、Some examples of tasks best solved by learning 机器学习最适用的领域举例

- Recognizing patterns: 模式识别

– Objects in real scenes 物体识别

– Facial identities or facial expressions 人脸检测

– Spoken words 语言

• Recognizing anomalies: 识别异常

– Unusual sequences of credit card transactions 信用卡交易的不寻常序列

– Unusual patterns of sensor readings in a nuclear power plant 核电站传感器的不寻常读数

• Prediction: 预测

– Future stock prices or currency exchange rates 未来股票价格或者货币兑换汇率

– Which movies will a person like? 一个人喜欢看哪种电影

2、用一个机器学习的标准实例来解释许多机器学习算法,许多学科都采用这一种方式

以遗传学为例,A lot of genetics is done on fruit flies(实蝇类).

– They are convenient because they breed fast.

– We already know a lot about them

The MNIST database of hand-written digits is the the machine learning equivalent of fruit flies

– They are publicly available and we can get machine learning algorithm to learn how to recognize these handwritten digits, so it is easy to try lots of variations. them quite fast in a moderate-sized neural net.

– We know a huge amount about how well various machine learning methods do on MNIST. And particular, the different machine learning methods were implemented by people who believed in them, so we can rely on those results.

所以,我们选择MNIST数据库作为我们的标准测试任务。

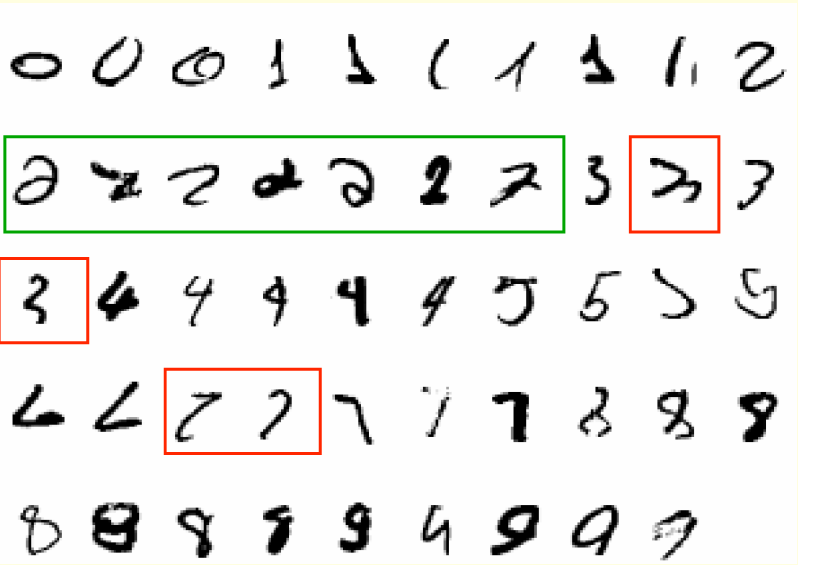

比如在MNIST里的一些手写数字如下:

比如第二行的2,用其中一个覆盖其他任何一个,很难有匹配很好的。所以模板不能做这项工作。很难找到模板适合绿色框中这些2和红色框中的2,所以手写数字对于机器学习来说比较合适。

Beyond MNIST: ImageNet task

MNIST现在对于机器学习来说相对简单。我们现在有神经网络接近一百万个参数,在1.3百万高清像素的训练图片中识别出不同的物体类别

Jitendra Malik (an eminent neural net sceptic) said that this competition is a good test of whether deep neural networks work well for object recognition

– A very deep neural net (Krizhevsky et. al. 2012) gets less that 40% error for its first choice and less than 20% for its top 5 choices

神经网络的早期版本

正确的结果是红色的,中间的一副正确识别出图中的物体是snowplow,他对其他的选项不是敏感的,他一点也不像drilling platform, 但是看起来像第三个选项lifeboat. 左边的图得到错误的识别,但是在top5中识别出来了。右边的图完全错了。

神经网络现在擅长的另一个任务是语言识别,语言识别有几个阶段:

– Pre-processing: Convert the sound wave into a vector of acoustic coefficients. Extract a new vector about every 10 mille seconds.

– The acoustic model: Use a few adjacent vectors of acoustic coefficients to place bets on which part of which phoneme(音位) is being spoken.

– Decoding: Find the sequence of bets that does the best job of fitting the acoustic data and also fitting a model of the kinds of things people say

Deep neural networks pioneered by George Dahl and Abdel-rahman Mohamed are now replacing the previous machine learning method for the acoustic model.

Phone recognition on the TIMIT benchmark

Dahl 和 Mohamed开发了一个系统使用许多层和二值神经元to take some acoustic frames, and make bets about the labels.They are doing it on a fairly small database and then used 183 alternative labels. 为了使他们的系统工作好,他们做了一些提前训练。在标准的后处理结束后,他们得到了20.7%错误率。而且、此前最好的识别错误率为24.4%。