1. Linux system version

[root@localhost local]# cat /proc/version

Linux version 3.10.0-229.el7.x86_64 (builder@kbuilder.dev.centos.org) (gcc version 4.8.2 20140120 (Red Hat 4.8.2-16) (GCC) ) #1 SMP Fri Mar 6 11:36:42 UTC 2015

The system is CentOS 7.

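2. Environment preparation

2.1. Download Java from the official website

2.2. Download Hadoop from the official website

2.3. Extract the archives

Extract Java to: /usr/local/jdk1.8.0_241

Extract Hadoop to: /usr/local/hadoop-2.10.0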
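The extraction step can be sketched as follows. The archive file names in the usage comments are assumptions inferred from the version numbers in the target paths (the original does not name the downloaded files), and `extract_to` is a hypothetical helper.

```shell
# Hypothetical helper: unpack a .tar.gz archive into a destination directory.
extract_to() {
  archive=$1
  dest=$2
  mkdir -p "$dest"
  tar -xzf "$archive" -C "$dest"
}

# Assumed archive names; adjust them to the files you actually downloaded.
# extract_to jdk-8u241-linux-x64.tar.gz /usr/local
# extract_to hadoop-2.10.0.tar.gz /usr/local
```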

2.3.1. Configure environment variables

Set JAVA_HOME and HADOOP_HOME.

Open the file: vi /etc/bashrc

export JAVA_HOME=/usr/local/jdk1.8.0_241

export HADOOP_HOME=/usr/local/hadoop-2.10.0

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

Apply the configuration to the current shell:

source /etc/bashrc
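A quick sanity check after sourcing the file might look like this. `check_home` is a hypothetical helper, not part of Java or Hadoop; it only confirms that a variable points at a directory containing bin/.

```shell
# Hypothetical helper: confirm that a *_HOME directory contains a bin/ subdirectory.
check_home() {
  name=$1
  dir=$2
  if [ -d "$dir/bin" ]; then
    echo "$name ok: $dir"
  else
    echo "$name is not usable: $dir" >&2
    return 1
  fi
}

# Usage after `source /etc/bashrc`:
# check_home JAVA_HOME "$JAVA_HOME" && java -version
# check_home HADOOP_HOME "$HADOOP_HOME" && hadoop version
```

If both checks pass, `java -version` and `hadoop version` should print the installed versions.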

2.4. Install the SSH service

CentOS usually has it installed by default. If it is missing, install it:

yum install openssh-server

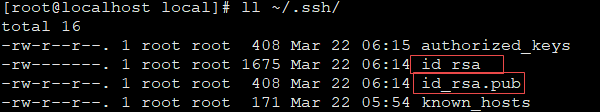

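2.5. Configure passwordless login

ssh-keygen -t rsa

This generates two files, the public key and the private key (~/.ssh/id_rsa.pub and ~/.ssh/id_rsa by default).

Append the public key to ~/.ssh/authorized_keys; the command below creates the file if it does not exist yet: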

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
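Running the append command twice adds the key twice, and sshd with its default StrictModes setting ignores keys when the files have loose permissions. A minimal sketch that handles both, using a hypothetical helper named `authorize_key`:

```shell
# Hypothetical helper: append a public key to authorized_keys only if it is
# not already present, then tighten the permissions sshd expects.
authorize_key() {
  ssh_dir=$1
  pub_key=$2
  mkdir -p "$ssh_dir"
  touch "$ssh_dir/authorized_keys"
  if ! grep -qxF -- "$(cat "$pub_key")" "$ssh_dir/authorized_keys"; then
    cat "$pub_key" >> "$ssh_dir/authorized_keys"
  fi
  chmod 700 "$ssh_dir"
  chmod 600 "$ssh_dir/authorized_keys"
}

# Usage for the setup above:
# authorize_key ~/.ssh ~/.ssh/id_rsa.pub
# ssh localhost true   # should return without asking for a password
```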

3. Hadoop introduction

Hadoop consists of the following main modules:

- Hadoop Common: The common utilities that support the other Hadoop modules.

- Hadoop Distributed File System (HDFS™): A distributed file system that provides high-throughput access to application data.

- Hadoop YARN: A framework for job scheduling and cluster resource management.

- Hadoop MapReduce: A YARN-based system for parallel processing of large data sets.

- Hadoop Ozone: An object store for Hadoop

Hadoop supports three startup modes:

- Local (Standalone) Mode

- Pseudo-Distributed Mode

- Fully-Distributed Mode

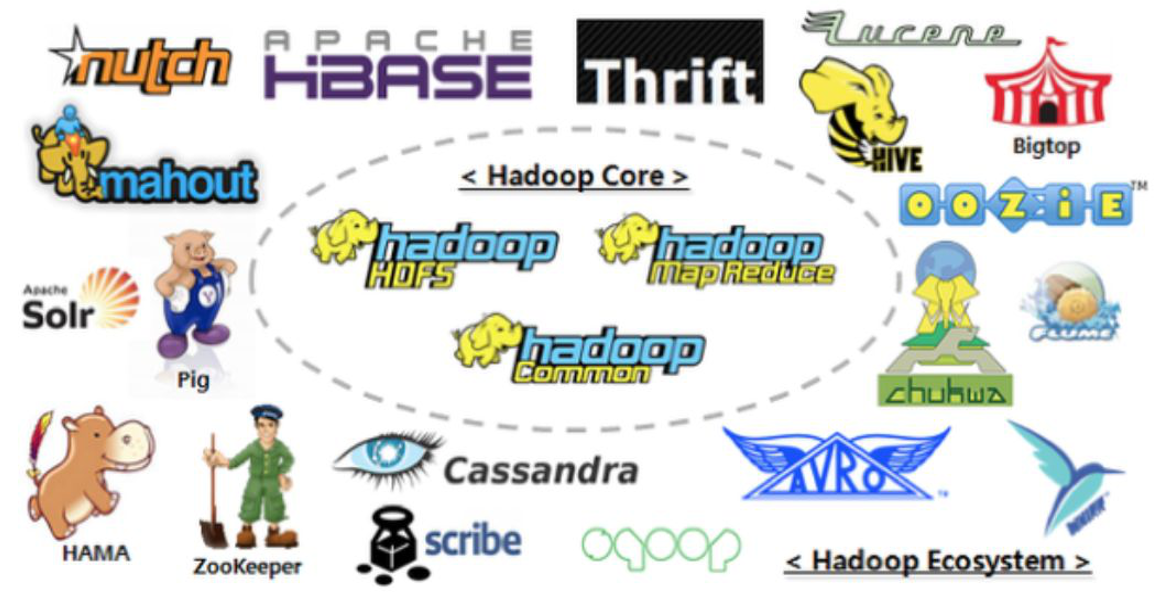

3.1. Hadoop ecosystem

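4. Hadoop installation

Change to the configuration directory: cd /usr/local/hadoop-2.10.0/etc/hadoop

4.1. Edit the configuration files

- Edit the environment variables

File name: hadoop-env.sh

Set JAVA_HOME to the JDK path, for example: export JAVA_HOME=/usr/local/jdk1.8.0_241

- Edit the core configuration file

File name: core-site.xml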

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop</value>

</property>

- Edit the HDFS configuration file

File name: hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>localhost:50090</value>

</property>

- Edit the YARN configuration file

File name: yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

- Edit the MapReduce configuration file

File name: mapred-site.xml

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>localhost:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>localhost:19888</value>

</property>
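The snippets above show only the `<property>` elements. In each *-site.xml file they belong inside the file's single `<configuration>` root element. The sketch below writes a complete hdfs-site.xml into a scratch directory (an assumption, so that nothing under etc/hadoop is overwritten) and reads a value back with a hypothetical `get_prop` helper that only understands this simple layout. On a real installation, `hdfs getconf -confKey dfs.replication` reports the value Hadoop actually resolves.

```shell
# Scratch directory so the sketch never overwrites a live configuration.
conf_dir=$(mktemp -d)

# Complete file: the <property> elements sit inside one <configuration> root.
cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>localhost:50090</value>
  </property>
</configuration>
EOF

# Hypothetical helper: print the <value> that follows a given <name>.
# Arguments: path to the XML file, then the property name.
get_prop() {
  awk -v key="$2" '
    /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
    /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v); if (n == key) print v }
  ' "$1"
}

get_prop "$conf_dir/hdfs-site.xml" dfs.replication   # prints 1
```

A misspelled property name is silently ignored by Hadoop, so reading the value back is a cheap way to catch typos before starting the daemons.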

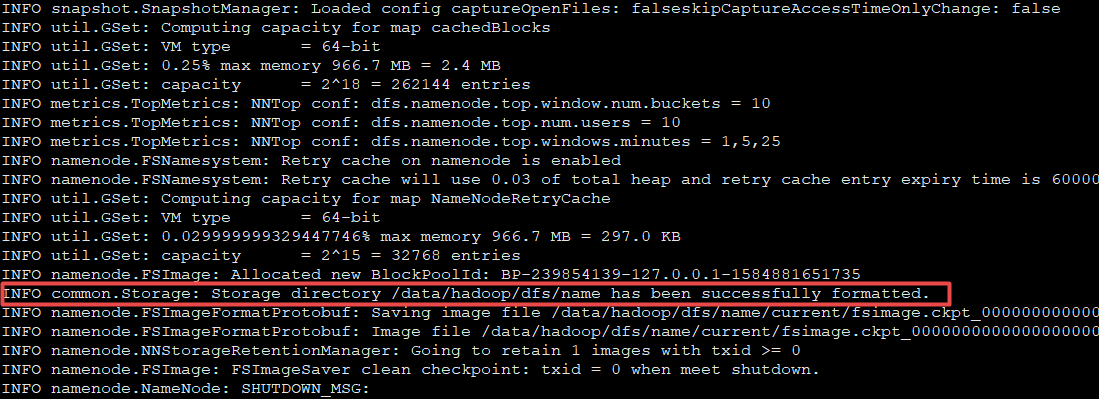

4.2. Start Hadoop

Before the first start, HDFS must be initialized by formatting the NameNode:

hdfs namenode -format

Start all daemons: start-all.sh

Stop all daemons: stop-all.sh

Start only HDFS: start-dfs.sh

Start only YARN: start-yarn.sh
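After start-all.sh in this single-node setup, `jps` (shipped with the JDK) should list five Hadoop daemons: NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. A minimal sketch that reports whichever ones are absent; `missing_daemons` is a hypothetical helper that reads `jps` output from standard input:

```shell
# Hypothetical helper: print every expected daemon that is absent from the
# `jps` output supplied on stdin (format: "<pid> <ClassName>" per line).
missing_daemons() {
  jps_output=$(cat)
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    echo "$jps_output" |
      awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' || echo "$d"
  done
}

# Usage once the daemons have been started:
# jps | missing_daemons
```

An empty result means all five daemons are running.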

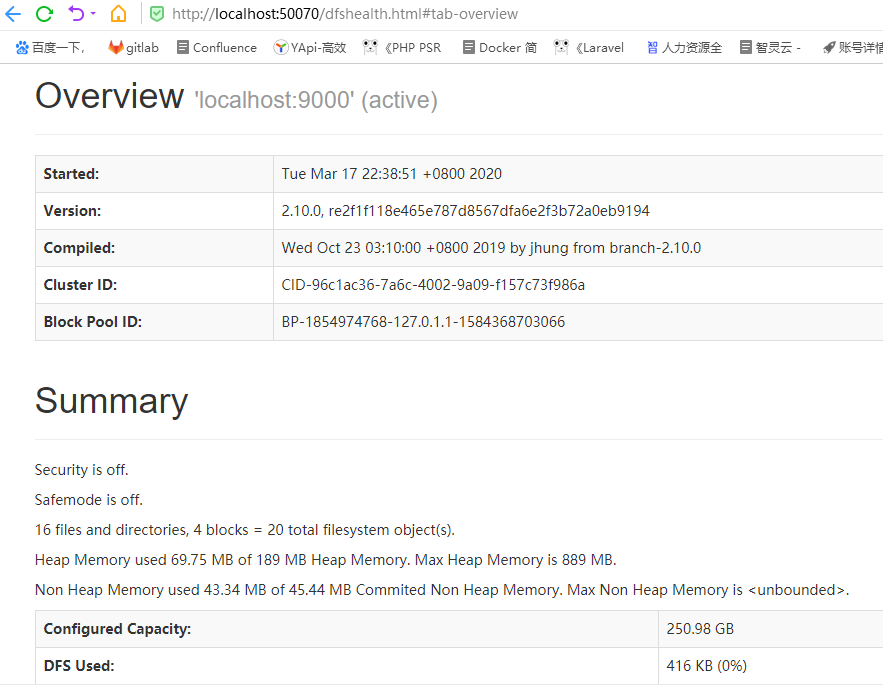

4.3. Access the HDFS web UI

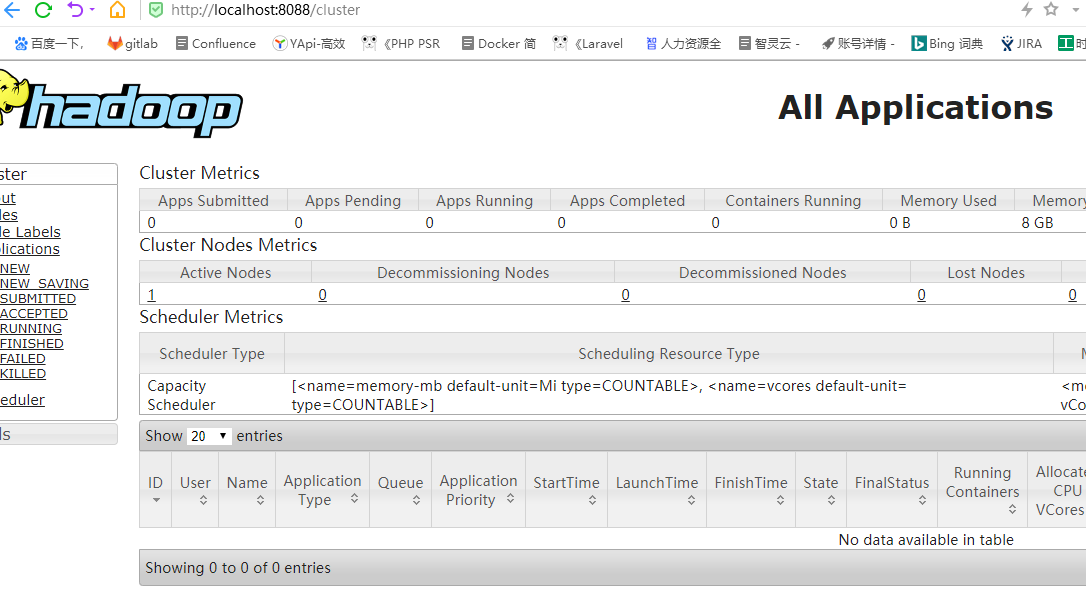

4.4. Access the YARN ResourceManager web UI
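Neither web UI port is set in the configuration files from section 4.1, so the Hadoop 2.x defaults should apply: port 50070 for the NameNode and port 8088 for the ResourceManager. A minimal reachability check, assuming curl is installed and the daemons run on localhost; `ui_is_up` is a hypothetical helper:

```shell
# Hadoop 2.x default web UI addresses (assumed: not overridden in the *-site.xml files).
NAMENODE_UI="http://localhost:50070"
RESOURCEMANAGER_UI="http://localhost:8088"

# Hypothetical helper: succeed only if the URL answers without an HTTP error.
ui_is_up() {
  curl --silent --fail --max-time 5 --output /dev/null "$1"
}

# Usage once the daemons are running:
# ui_is_up "$NAMENODE_UI" && echo "HDFS UI reachable"
# ui_is_up "$RESOURCEMANAGER_UI" && echo "YARN UI reachable"
```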

4.5. Common HDFS commands

[root@localhost hadoop-2.10.0]# hdfs

Usage: hdfs [--config confdir] [--loglevel loglevel] COMMAND

where COMMAND is one of:

dfs run a filesystem command on the file systems supported in Hadoop.

classpath prints the classpath

namenode -format format the DFS filesystem

secondarynamenode run the DFS secondary namenode

namenode run the DFS namenode

journalnode run the DFS journalnode

zkfc run the ZK Failover Controller daemon

datanode run a DFS datanode

debug run a Debug Admin to execute HDFS debug commands

dfsadmin run a DFS admin client

dfsrouter run the DFS router

dfsrouteradmin manage Router-based federation

haadmin run a DFS HA admin client

fsck run a DFS filesystem checking utility

balancer run a cluster balancing utility

jmxget get JMX exported values from NameNode or DataNode.

mover run a utility to move block replicas across storage types

oiv apply the offline fsimage viewer to an fsimage

oiv_legacy apply the offline fsimage viewer to an legacy fsimage

oev apply the offline edits viewer to an edits file

fetchdt fetch a delegation token from the NameNode

getconf get config values from configuration

groups get the groups which users belong to

snapshotDiff diff two snapshots of a directory or diff the current directory contents with a snapshot

lsSnapshottableDir list all snapshottable dirs owned by the current user

Use -help to see options

portmap run a portmap service

nfs3 run an NFS version 3 gateway

cacheadmin configure the HDFS cache

crypto configure HDFS encryption zones

storagepolicies list/get/set block storage policies

version print the version
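Of the commands listed, `dfs` is the one used for everyday file operations. A small round trip, assuming HDFS is already running; `hdfs_round_trip` is a hypothetical helper and the paths in the usage line are placeholders:

```shell
# Hypothetical helper: create a directory in HDFS, upload one local file,
# list the directory, and print the uploaded file back.
hdfs_round_trip() {
  local_file=$1
  target_dir=$2
  hdfs dfs -mkdir -p "$target_dir" &&
    hdfs dfs -put -f "$local_file" "$target_dir/" &&
    hdfs dfs -ls "$target_dir" &&
    hdfs dfs -cat "$target_dir/$(basename "$local_file")"
}

# Usage (placeholder paths):
# echo "hello hdfs" > /tmp/demo.txt
# hdfs_round_trip /tmp/demo.txt /user/root/demo
```

The `-f` flag on `-put` overwrites an existing file, which keeps the check repeatable.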

5. Troubleshooting

5.1. mkdir: Cannot create directory /aaa. Name node is in safe mode.

# Force the NameNode to leave safe mode

hdfs dfsadmin -safemode leave
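The NameNode normally leaves safe mode on its own once enough DataNodes have reported their blocks, so it can be worth checking the state before forcing it. `hdfs dfsadmin -safemode get` prints "Safe mode is ON" or "Safe mode is OFF", and `hdfs dfsadmin -safemode wait` blocks until safe mode ends. A minimal sketch with a hypothetical helper named `leave_safemode_if_on`:

```shell
# Hypothetical helper: force the NameNode out of safe mode only if it is
# currently on; otherwise just report the state.
leave_safemode_if_on() {
  state=$(hdfs dfsadmin -safemode get)
  case $state in
    *"is ON"*) hdfs dfsadmin -safemode leave ;;
    *) echo "$state" ;;
  esac
}

# Usage:
# leave_safemode_if_on
```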

5.2. If some daemons fail to start, check the Hadoop logs (by default under $HADOOP_HOME/logs)

tail -f *.log
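`tail -f *.log` follows new output and assumes the current directory is the log directory. To look at errors already written, a sketch along these lines may help. It assumes the default log layout, where the level follows the timestamp; `recent_errors` is a hypothetical helper.

```shell
# Hypothetical helper: print the last 20 ERROR or FATAL lines found in the
# .log files of a directory.
recent_errors() {
  log_dir=$1
  grep -hE ' (ERROR|FATAL) ' "$log_dir"/*.log | tail -n 20
}

# Usage:
# recent_errors "$HADOOP_HOME/logs"
```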