requests+beautifulsoup爬取

import requests from bs4 import BeautifulSoup import json #构造url #通过url发送请求 #返回结果处理 #写入文件 headers={ 'User-Agent':'Mozilla/5.0 (Windows NT 10.0; WOW64; rv:58.0) Gecko/20100101 Firefox/58.0', 'proxy':"http://60.13.42.109:9999", } def url_create(start_page,end_page): for page in range(start_page,end_page+1): url = 'https://book.douban.com/tag/%E5%B0%8F%E8%AF%B4?start='+str(20*(page-1)) get_response(url) def get_response(url): print(url) response = requests.get(url,headers=headers).text check_response(response) def check_response(response): soup = BeautifulSoup(response,'lxml') result = soup.find_all(class_='subject-item') for item in result: name = item.find(class_='info').find(name='a').get_text().split()[0] author = item.find(class_='info').find(class_='pub').get_text().split()[-1] score = item.find(class_='info').find(class_='rating_nums').get_text().split()[0] list = { '书名:':name, '价格:':author, '评分:':score } write_file(list) def write_file(list): with open('result1.txt', 'a', encoding='utf-8') as f: f.write(json.dumps(list, ensure_ascii=False) + ' ') if __name__ == '__main__': start_page = int(input("爬取开始页面:")) end_page = int(input("爬取结束页面:")) url_create(start_page, end_page)

urllib+xpath爬取

import urllib.request from lxml import etree import json response = urllib.request.urlopen('https://book.douban.com/tag/%E5%B0%8F%E8%AF%B4?start=0') html = response.read().decode('utf-8') result = etree.HTML(html) name_list = "".join(result.xpath('//ul[@class="subject-list"]//h2//a/text()')).split() author_list = "".join(result.xpath('//ul[@class="subject-list"]//div[@class="pub"]/text()')).split(' ') score_list = result.xpath('//ul[@class="subject-list"]//span[@class="rating_nums"]/text()') list = [] k=3 for i in range(len(author_list)//4): try: list.append(author_list[k]) k+=5 except: pass for i in range(len(name_list)): dict = { '书名:':name_list[i], '作者:': list[i], '评分:': score_list[i] } with open('result2.txt','a',encoding='utf-8') as f: f.write(json.dumps(dict,ensure_ascii=False) + ' ')

TXT文本的存储/读取

id= '10001' name = 'Bob' age = '22' with open('data.txt','w') as f: f.write(' '.join([id,name,age]))

with open('data.txt','r') as f: data = f.readline() print(data)

JSON文件存储/读取

CSV文件存储/读取

方法一

import csv

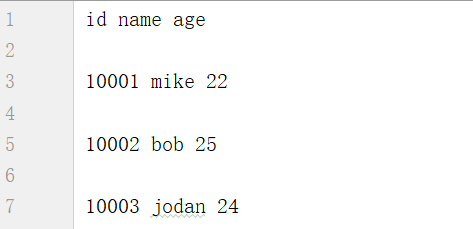

with open('data.csv','w',newline="") as csvfile:

writer = csv.writer(csvfile,delimiter=' ') #delimiter参数表示用空格隔开,没有此参数默认用逗号隔开id和name和age,每一行都是

writer.writerow(['id','name','age'])

writer.writerow(['10001', 'mike', '22'])

writer.writerow(['10002', 'bob', '25'])

writer.writerow(['10003','jodan','24'])

图一

图一

方法二

import csv with open('data.csv','w',newline="") as csvfile: writer = csv.writer(csvfile,delimiter=' ') writer.writerow(['id','name','age']) writer.writerows([['10001', 'mike', '22'],['10002', 'bob', '25'],['10003','jodan','24']]) #结果看图一

方法三

import csv with open('data.csv','w',newline="") as csvfile: #encoding=“utf-8” 增加这个参数写入中文不会乱码 增加一个newline=“”就不会像图一空一行打印 fieldnames = ['id','name','age'] writer = csv.DictWriter(csvfile,fieldnames=fieldnames) writer.writeheader() writer.writerow({'id':'10001','name':'mike','age':22}) writer.writerow({'id':'10002', 'name':'bob', 'age':25}) writer.writerow({'id':'10003', 'name':'jodan', 'age':24}) #结果看图一

读取

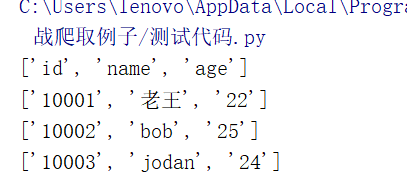

方法一

with open('data.csv','r',encoding='utf-8') as csvfile: reader = csv.reader(csvfile) for row in reader: print(row)

方法二

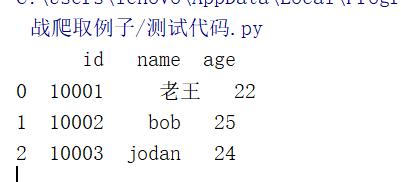

import pandas as pd df = pd.read_csv('data.csv') print(df)