1. Improving Convolutional Networks with Self-calibrated Convolutions

Paper: http://mftp.mmcheng.net/Papers/20cvprSCNet.pdf

Code: https://github.com/MCG-NKU/SCNet
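Self-calibrated convolution splits the input channels into two halves. One half gets a plain convolution F1. The other half is gated by comparing the input with a downsampled, convolved, and upsampled view of itself: Y1' = F3(X1) · σ(X1 + Up(F2(AvgPool_r(X1)))), then Y1 = F4(Y1'). The sketch below is a toy single-channel-per-half version on nested lists. The helper names, the pooling factor r = 2, and nearest-neighbour upsampling (chosen for brevity; check the paper for the interpolation it actually uses) are all assumptions. The official repository linked above is the reference implementation.

```python
import math


def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def avg_pool(x, r):
    """Non-overlapping r x r average pooling (assumes both sides divisible by r)."""
    return [[sum(x[i * r + a][j * r + b] for a in range(r) for b in range(r)) / (r * r)
             for j in range(len(x[0]) // r)]
            for i in range(len(x) // r)]


def upsample_nearest(x, r):
    """Nearest-neighbour upsampling by an integer factor r (assumed stand-in)."""
    return [[x[i // r][j // r] for j in range(len(x[0]) * r)]
            for i in range(len(x) * r)]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def self_calibration(x1, k2, k3, k4, r=2):
    """Y1' = F3(X1) * sigmoid(X1 + Up(F2(AvgPool_r(X1)))), then Y1 = F4(Y1')."""
    t1 = upsample_nearest(conv2d_same(avg_pool(x1, r), k2), r)
    gate = [[sigmoid(a + b) for a, b in zip(ra, rb)] for ra, rb in zip(x1, t1)]
    f3 = conv2d_same(x1, k3)
    y1_prime = [[v * g for v, g in zip(rv, rg)] for rv, rg in zip(f3, gate)]
    return conv2d_same(y1_prime, k4)


def self_calibrated_conv(x1, x2, k1, k2, k3, k4, r=2):
    """Toy two-half version: X1 is self-calibrated, X2 gets plain conv F1; returns [Y1, Y2]."""
    return [self_calibration(x1, k2, k3, k4, r), conv2d_same(x2, k1)]


identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
x1 = [[float((i + j) % 3) for j in range(4)] for i in range(4)]
x2 = [[1.0] * 4 for _ in range(4)]
y1, y2 = self_calibrated_conv(x1, x2, identity, identity, identity, identity)
```

With identity kernels the plain half passes through unchanged, and the calibrated half becomes X1 scaled element-wise by a sigmoid gate in (0, 1).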

2. DO-Conv: Depthwise Over-parameterized Convolutional Layer

Paper: https://arxiv.org/pdf/2006.12030.pdf

Code: https://github.com/yangyanli/DO-Conv

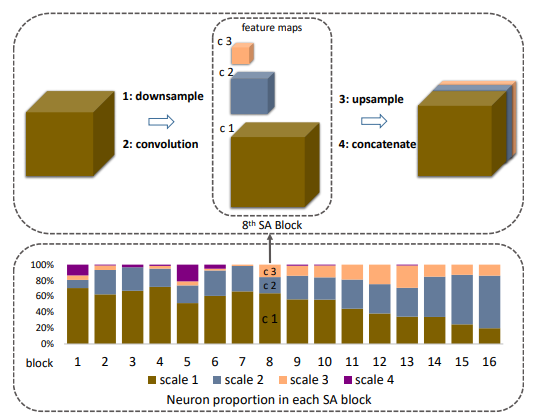

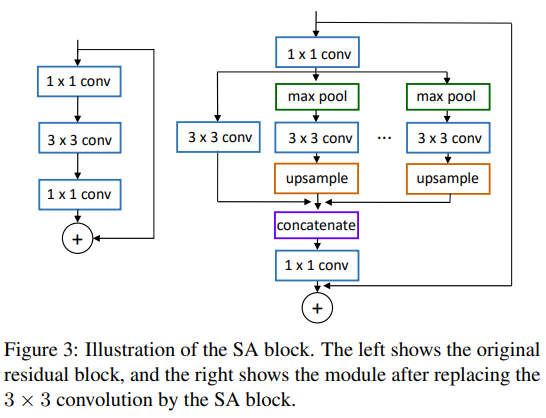
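DO-Conv trains a conventional convolution W together with an extra depthwise convolution D. Because both are linear, they can be merged into one conventional kernel after training, so inference costs the same as a plain convolution. The sketch below checks that merge for one input channel and one flattened M×N patch. It treats D as an (M·N)×D_mul matrix and W as a C_out×D_mul matrix; these shapes are an assumed reading of the paper's notation. The numbers, the choice D_mul = M·N = 4, and the helper names are illustrative only.

```python
def matmul(a, b):
    """Plain matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def matvec(m, v):
    return [sum(mi * vi for mi, vi in zip(row, v)) for row in m]


# One input channel, 2x2 kernel (M = N = 2), D_mul = 4, C_out = 3 (all assumed values).
patch = [1, 2, 3, 4]                 # flattened M*N input patch
D = [[1, 0, 2, 0],                   # depthwise kernel, shape (M*N) x D_mul
     [0, 1, 0, 1],
     [1, 1, 0, 0],
     [0, 2, 1, 1]]
W = [[1, -1, 0, 2],                  # conventional kernel, shape C_out x D_mul
     [0, 1, 1, 0],
     [3, 0, -2, 1]]

# Feature composition (training): depthwise step first, then the conventional step.
depthwise_features = matvec(transpose(D), patch)      # length D_mul
out_feature = matvec(W, depthwise_features)           # length C_out

# Kernel composition (inference): fold D into W once, then one ordinary convolution.
W_folded = matmul(W, transpose(D))                    # shape C_out x (M*N)
out_kernel = matvec(W_folded, patch)
```

Both paths give `[3, 19, 6]`. The folded kernel `W_folded` has the same shape as an ordinary M×N kernel, which is why the extra parameters only exist during training.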

3. Data-Driven Neuron Allocation for Scale Aggregation Networks

Paper: https://arxiv.org/pdf/1904.09460.pdf

Code: https://github.com/Eli-YiLi/ScaleNet

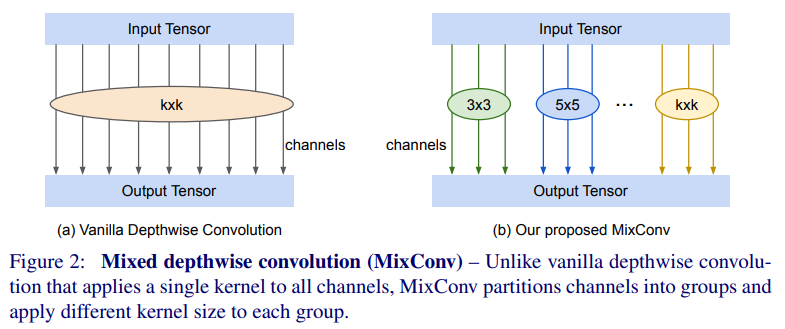

4. MixConv: Mixed Depthwise Convolutional Kernels

Paper: https://arxiv.org/pdf/1907.09595.pdf

Code: https://github.com/tensorflow/tpu/tree/master/models/official/mnasnet/mixnet

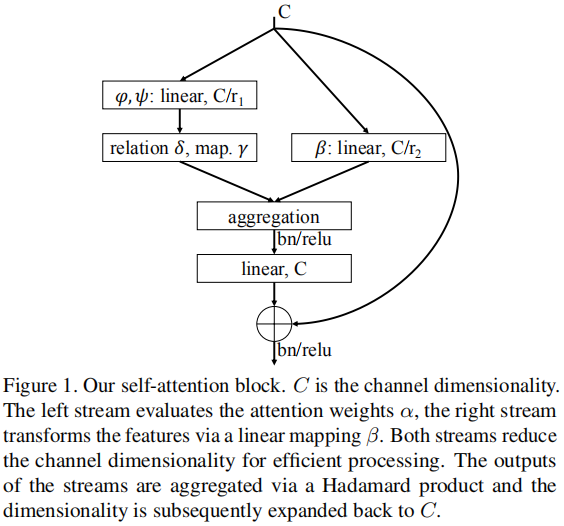
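MixConv partitions the channels of a depthwise convolution into groups and gives each group a different kernel size (for example 3×3, 5×5, 7×7, 9×9), so a single layer mixes several receptive-field sizes. The sketch below is a toy nested-list version. The equal split with the remainder going to the first group, the constant-valued kernels, and every helper name are assumptions for illustration, not the official MixNet code linked above.

```python
def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def split_channels(total, groups):
    """Equal channel partition; any remainder goes to the first group (assumed convention)."""
    sizes = [total // groups] * groups
    sizes[0] += total - sum(sizes)
    return sizes


def make_mixconv_kernels(num_channels, kernel_sizes, value=1.0):
    """Hypothetical initializer: one constant k x k depthwise kernel per channel,
    where channel group g uses kernel_sizes[g]."""
    sizes = split_channels(num_channels, len(kernel_sizes))
    return [[[value] * k for _ in range(k)]
            for k, n in zip(kernel_sizes, sizes)
            for _ in range(n)]


def mixconv(channels, kernels):
    """Depthwise 'same' conv: channel c is filtered only by kernels[c]; outputs stay concatenated."""
    return [conv2d_same(x, k) for x, k in zip(channels, kernels)]


channels = [[[1.0] * 6 for _ in range(6)] for _ in range(8)]
kernels = make_mixconv_kernels(8, [3, 5, 7, 9])
out = mixconv(channels, kernels)
num_params = sum(len(k) * len(k[0]) for k in kernels)
```

Here the eight channels split 2/2/2/2 across the four kernel sizes, for 2 × (9 + 25 + 49 + 81) = 328 depthwise weights; using 9×9 kernels on all eight channels would need 8 × 81 = 648.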

5. Exploring Self-attention for Image Recognition

Paper: https://hszhao.github.io/papers/cvpr20_san.pdf

Code: https://github.com/hszhao/SAN

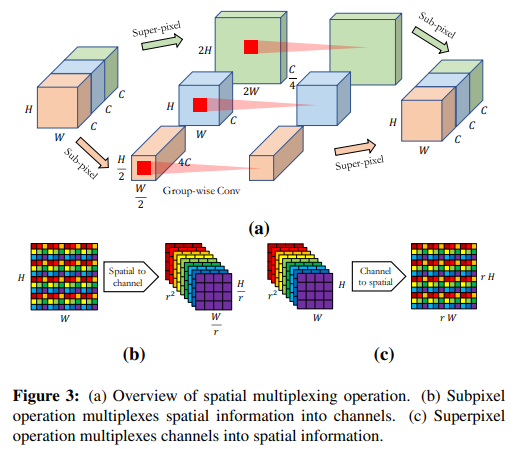

6. MUXConv: Information Multiplexing in Convolutional Neural Networks

Paper: https://arxiv.org/pdf/2003.13880.pdf

Code: https://github.com/human-analysis/MUXConv

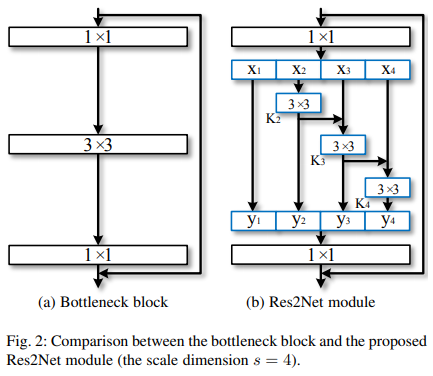

7. Res2Net: A New Multi-scale Backbone Architecture

Paper: https://arxiv.org/pdf/1904.01169.pdf

Project page: https://mmcheng.net/res2net

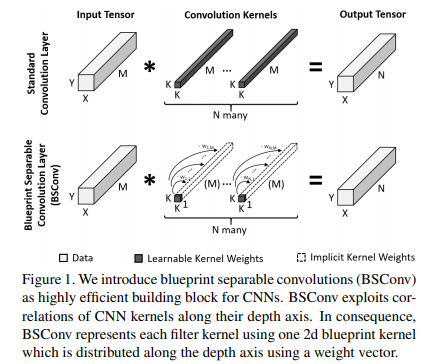
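Inside a Res2Net block, the feature map is split into s channel subsets x_1 … x_s and processed hierarchically: y_1 = x_1, y_2 = K_2(x_2), and y_i = K_i(x_i + y_{i-1}) for i > 2, where each K_i is a 3×3 convolution. Later subsets therefore pass through more stacked 3×3 convolutions and see larger receptive fields. The toy sketch below uses one 2-D map per subset and omits the 1×1 convolutions that surround this step in the full block; helper names and values are illustrative assumptions.

```python
def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def add(a, b):
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def res2net_hierarchy(splits, kernels):
    """splits: subsets x_1..x_s (one 2-D map each in this toy);
    kernels: 3x3 kernels K_2..K_s (len(splits) - 1 of them).
    y_1 = x_1, y_2 = K_2(x_2), y_i = K_i(x_i + y_{i-1}) for i > 2."""
    ys = [splits[0]]
    for i in range(1, len(splits)):
        inp = splits[i] if i == 1 else add(splits[i], ys[-1])
        ys.append(conv2d_same(inp, kernels[i - 1]))
    return ys


identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
splits = [[[float(g)] * 3 for _ in range(3)] for g in range(1, 5)]   # x_g is constant g
ys = res2net_hierarchy(splits, [identity] * 3)
```

With identity kernels the outputs are x_1, x_2, x_3 + x_2 and x_4 + x_3 + x_2, which makes the cumulative connection pattern easy to see.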

8. Rethinking Depthwise Separable Convolutions: How Intra-Kernel Correlations Lead to Improved MobileNets

Paper: https://arxiv.org/pdf/2003.13549.pdf

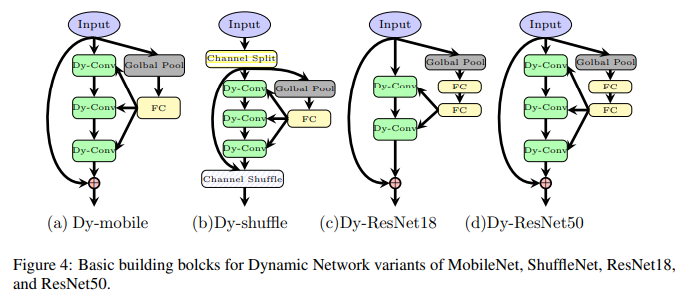
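This paper (blueprint separable convolutions, BSConv) models each filter as one 2-D "blueprint" B^(n) scaled per input channel: F^(n)_m = w_{n,m} · B^(n). Under that model, the unconstrained variant BSConv-U computes the same result as a pointwise (1×1) convolution followed by a depthwise convolution, the reverse of the usual depthwise-then-pointwise order. The toy check below builds the full kernel both ways on nested lists; helper names and numbers are illustrative assumptions.

```python
def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def scale(m, s):
    return [[s * v for v in row] for row in m]


def add(a, b):
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def regular_conv(xs, w, blueprints):
    """Ordinary multi-channel conv whose filters are built as F[n][m] = w[n][m] * B[n]."""
    outs = []
    for n, bp in enumerate(blueprints):
        acc = None
        for m, x in enumerate(xs):
            y = conv2d_same(x, scale(bp, w[n][m]))
            acc = y if acc is None else add(acc, y)
        outs.append(acc)
    return outs


def bsconv_u(xs, w, blueprints):
    """BSConv-U order: pointwise (1x1 weights w[n][m]) first, then depthwise with blueprint B[n]."""
    outs = []
    for n, bp in enumerate(blueprints):
        z = None
        for m, x in enumerate(xs):
            y = scale(x, w[n][m])
            z = y if z is None else add(z, y)
        outs.append(conv2d_same(z, bp))
    return outs


xs = [[[i + j for j in range(4)] for i in range(4)],
      [[(i * j) % 3 for j in range(4)] for i in range(4)]]
w = [[1, 2], [3, -1]]                                   # N = 2 outputs, M = 2 inputs
blueprints = [[[1, 0, -1], [2, 0, -2], [1, 0, -1]],
              [[0, 1, 0], [1, -4, 1], [0, 1, 0]]]
```

Both functions return identical outputs. Storing the filters this way takes N·M + N·K·K weights (2·2 + 2·3·3 = 22 here) instead of N·M·K·K (36).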

9. DyNet: Dynamic Convolution for Accelerating Convolutional Neural Networks

Paper: https://arxiv.org/pdf/2004.10694.pdf

10. Dynamic Convolution: Attention over Convolution Kernels

Paper: https://arxiv.org/pdf/1912.03458.pdf

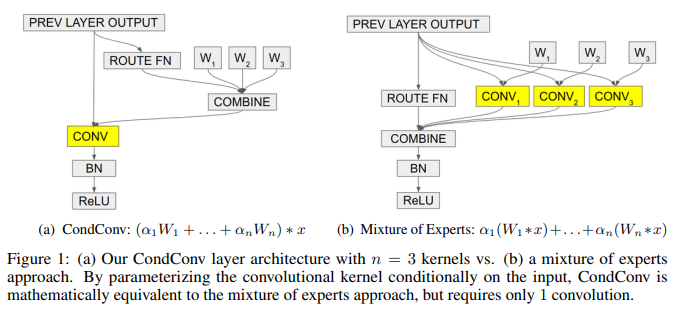
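Dynamic convolution keeps K parallel kernels and, for each input, aggregates them with attention weights π_k(x) that come from a softmax (so they sum to 1) before running a single convolution. A softmax temperature controls how peaked the attention is; the paper reports using a large temperature early in training so that all kernels receive gradient. In the toy sketch below, a hypothetical per-kernel (scale, bias) pair applied to the global average stands in for the paper's small pooling-plus-fully-connected attention branch; all names and values are assumptions.

```python
import math


def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def softmax(logits, temperature=1.0):
    """Softmax with temperature; a larger temperature gives flatter attention."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_conv(x, kernels, gate, temperature=1.0):
    """Aggregate K kernels with input-dependent attention, then run a single convolution.
    gate: hypothetical (scale, bias) pair per kernel acting on the global average."""
    gap = sum(map(sum, x)) / (len(x) * len(x[0]))          # global average pooling
    attn = softmax([a * gap + b for a, b in gate], temperature)
    kh, kw = len(kernels[0]), len(kernels[0][0])
    agg = [[sum(p * k[i][j] for p, k in zip(attn, kernels)) for j in range(kw)]
           for i in range(kh)]
    return conv2d_same(x, agg), attn


x = [[1.0, 2.0], [3.0, 4.0]]
identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
edge = [[0, -1, 0], [-1, 4, -1], [0, -1, 0]]
blur = [[1 / 9] * 3 for _ in range(3)]
gate = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0)]
y, attn = dynamic_conv(x, [identity, edge, blur], gate)
_, flat = dynamic_conv(x, [identity, edge, blur], gate, temperature=1e6)
```

At temperature 1 the attention favours the first kernel; at a very large temperature it is nearly uniform across all three.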

11. CondConv: Conditionally Parameterized Convolutions for Efficient Inference

Paper: https://arxiv.org/pdf/1904.04971.pdf

Code: https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet/condconv

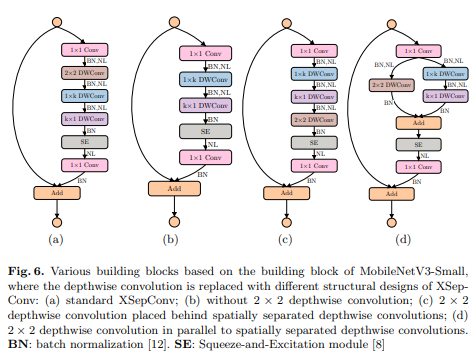
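CondConv computes per-example routing weights α_i with a sigmoid (so, unlike a softmax, they need not sum to 1) and convolves with the mixed kernel Σ α_i W_i. Because convolution is linear, this equals running every expert kernel and mixing the outputs, but it needs only one convolution. The toy check below compares the two forms. The routing parameters stand in for the learned routing function and, like the helper names and values, are illustrative assumptions.

```python
import math


def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def routing_weights(x, routing):
    """alpha_i = sigmoid(a_i * GAP(x) + b_i); 'routing' holds hypothetical (a_i, b_i) pairs."""
    gap = sum(map(sum, x)) / (len(x) * len(x[0]))
    return [1.0 / (1.0 + math.exp(-(a * gap + b))) for a, b in routing]


def condconv(x, experts, alphas):
    """Efficient form: mix the expert kernels first, then run ONE convolution."""
    kh, kw = len(experts[0]), len(experts[0][0])
    mixed = [[sum(al * e[i][j] for al, e in zip(alphas, experts)) for j in range(kw)]
             for i in range(kh)]
    return conv2d_same(x, mixed)


def mixture_of_experts(x, experts, alphas):
    """Costlier but equivalent form: run every expert conv, then mix the outputs."""
    outs = [conv2d_same(x, e) for e in experts]
    return [[sum(al * o[i][j] for al, o in zip(alphas, outs)) for j in range(len(x[0]))]
            for i in range(len(x))]


x = [[1.0, 0.0, 2.0], [0.5, 3.0, 1.0], [2.0, 1.0, 0.0]]
experts = [[[1, 0, 0], [0, 1, 0], [0, 0, 1]],
           [[0, 1, 0], [1, 1, 1], [0, 1, 0]],
           [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]]
alphas = routing_weights(x, [(0.5, 0.0), (-1.0, 0.2), (2.0, -1.0)])
fast = condconv(x, experts, alphas)
slow = mixture_of_experts(x, experts, alphas)
```

The two results agree up to floating-point rounding; only the first form's cost stays at one convolution regardless of the number of experts.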

12. XSepConv: Extremely Separated Convolution

Paper: https://arxiv.org/pdf/2002.12046.pdf

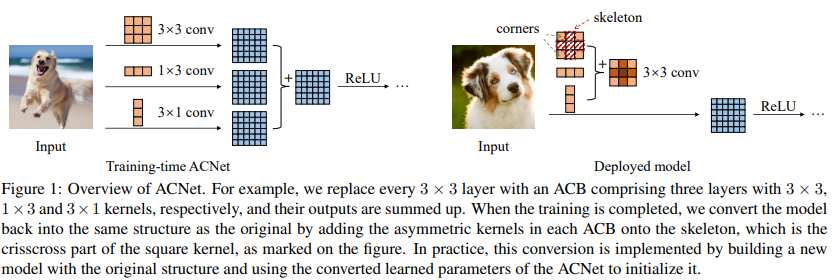

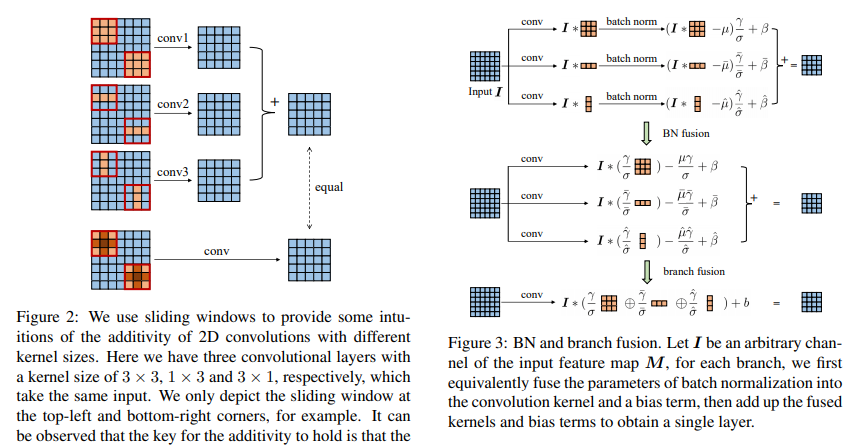

13. ACNet: Strengthening the Kernel Skeletons for Powerful CNN via Asymmetric Convolution Blocks

Paper: https://arxiv.org/pdf/1908.03930.pdf
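ACNet trains each square k×k convolution as an asymmetric convolution block: parallel k×k, 1×k and k×1 branches whose outputs are summed. Because convolution is additive, after training the 1×k and k×1 kernels can be added onto the middle row and middle column (the "skeleton") of the square kernel, so inference uses one ordinary convolution. The paper also folds each branch's batch normalization into its kernel before this step; that is omitted here. Helper names and values are illustrative assumptions.

```python
def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def add(a, b):
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def acb_train(x, k3x3, k1x3, k3x1):
    """Training-time block: three parallel branches whose outputs are summed."""
    return add(add(conv2d_same(x, k3x3), conv2d_same(x, k1x3)), conv2d_same(x, k3x1))


def fuse_acb(k3x3, k1x3, k3x1):
    """Add the 1x3 kernel onto the middle row and the 3x1 kernel onto the middle column."""
    fused = [row[:] for row in k3x3]
    for b in range(3):
        fused[1][b] += k1x3[0][b]
    for a in range(3):
        fused[a][1] += k3x1[a][0]
    return fused


x = [[(3 * i + j) % 5 for j in range(5)] for i in range(5)]
k3x3 = [[1, 2, 0], [0, 1, -1], [2, 0, 1]]
k1x3 = [[1, -1, 2]]
k3x1 = [[3], [0], [-2]]
fused = fuse_acb(k3x3, k1x3, k3x1)
```

The training-time block and a single convolution with `fused` produce identical outputs, including at the zero-padded borders, because the 1×3 and 3×1 branches cover exactly the positions of the square kernel's middle row and column.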

14. Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octave Convolution

Paper: https://arxiv.org/pdf/1904.05049.pdf
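Octave convolution stores part of the channels (a ratio α) as a low-frequency map at half resolution and exchanges information between the two frequencies: Y_H = f(X_H; W_HH) + Upsample(f(X_L; W_LH)) and Y_L = f(X_L; W_LL) + f(Pool(X_H); W_HL), with 2×2 average pooling and nearest-neighbour upsampling. The toy sketch below uses one 2-D map per frequency; helper names and values are illustrative assumptions.

```python
def conv2d_same(x, k):
    """Single-channel 2-D cross-correlation: stride 1, zero 'same' padding, odd kernel dims."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    return [[sum(x[i + a - ph][j + b - pw] * k[a][b]
                 for a in range(kh) for b in range(kw)
                 if 0 <= i + a - ph < h and 0 <= j + b - pw < w)
             for j in range(w)] for i in range(h)]


def add(a, b):
    return [[u + v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def avg_pool2(x):
    """2x2 average pooling with stride 2 (assumes even sides)."""
    return [[(x[2 * i][2 * j] + x[2 * i][2 * j + 1]
              + x[2 * i + 1][2 * j] + x[2 * i + 1][2 * j + 1]) / 4
             for j in range(len(x[0]) // 2)] for i in range(len(x) // 2)]


def upsample2(x):
    """Nearest-neighbour upsampling by 2."""
    return [[x[i // 2][j // 2] for j in range(2 * len(x[0]))] for i in range(2 * len(x))]


def octave_conv(x_h, x_l, w_hh, w_hl, w_lh, w_ll):
    """Y_H = f(X_H; W_HH) + Upsample(f(X_L; W_LH));  Y_L = f(X_L; W_LL) + f(Pool(X_H); W_HL)."""
    y_h = add(conv2d_same(x_h, w_hh), upsample2(conv2d_same(x_l, w_lh)))
    y_l = add(conv2d_same(x_l, w_ll), conv2d_same(avg_pool2(x_h), w_hl))
    return y_h, y_l


identity = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
x_h = [[1.0] * 4 for _ in range(4)]          # high-frequency map, full resolution
x_l = [[2.0, 3.0], [4.0, 5.0]]               # low-frequency map, half resolution
y_h, y_l = octave_conv(x_h, x_l, identity, identity, identity, identity)
```

The low-frequency path runs at a quarter of the spatial positions, which is where the memory and compute savings come from.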