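Experiments show:

① Multi-level gmetad does not depend on the Ganglia version, and different versions can be mixed across levels.

② In a multi-level setup, only the lowest-level gmetad stores detailed metrics; every higher-level gmetad receives only summary information. My configuration may be at fault, but this is the conclusion the current setup supports.

③ To view the details of a remote grid, the gweb service must be running on that remote grid's gmetad node.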
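Finding ② can be checked against the XML that each gmetad serves on its `xml_port`. The following is a minimal Python sketch, not part of the original test. It assumes the usual Ganglia XML layout, in which per-host data appears as `HOST`/`METRIC` elements and summarized data appears as `METRICS` elements. The function names `fetch_gmetad_xml` and `detail_level` are made up for illustration. The observed behaviour also appears consistent with gmetad's `scalable` setting (on by default), which summarizes downstream grids.

```python
import socket
import xml.etree.ElementTree as ET


def fetch_gmetad_xml(host, port=8651, timeout=5.0):
    """Read the XML dump that gmetad writes to its xml_port (assumed 8651)."""
    chunks = []
    with socket.create_connection((host, port), timeout=timeout) as conn:
        while True:
            data = conn.recv(65536)
            if not data:  # the server closes the connection after the dump
                break
            chunks.append(data)
    return b"".join(chunks)


def detail_level(xml_data):
    """Count per-host elements and summary elements in a Ganglia XML dump."""
    root = ET.fromstring(xml_data)
    return {
        "hosts": len(root.findall(".//HOST")),
        "host_metrics": len(root.findall(".//HOST/METRIC")),
        "summary_metrics": len(root.findall(".//METRICS")),
    }
```

If finding ② holds, `detail_level(fetch_gmetad_xml("hdp2"))` should report a non-zero host count, while the same call against `hdp1` should report only summary metrics for the nested grid.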

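Test Ⅰ

Preparation

*This test uses two levels of gmetad: the lower one is the level-1 gmetad and the upper one is the level-2 gmetad. The level-2 gmetad is version 3.1.7 and the level-1 gmetad is version 3.7.2.

*To make sure the metrics collected by the level-2 gmetad really come from the level-1 gmetad rather than from gmond, this test starts only one gmond.

*gmond node: hdp3

Level-1 gmetad node: hdp2

Level-2 gmetad node: hdp1

gweb node: hdp1

Procedure:

① Configuration files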

hdp3 (gmond.conf)

cluster {

name = "hdp3"

owner = "unspecified"

latlong = "unspecified"

url = "unspecified"

}

/* The host section describes attributes of the host, like the location */

host {

location = "unspecified"

}

/* Feel free to specify as many udp_send_channels as you like. Gmond

used to only support having a single channel */

udp_send_channel {

#bind_hostname = yes # Highly recommended, soon to be default.

# This option tells gmond to use a source address

# that resolves to the machine's hostname. Without

# this, the metrics may appear to come from any

# interface and the DNS names associated with

# those IPs will be used to create the RRDs.

#mcast_join = 239.2.11.71

host = hdp3 # In practice this must be hdp3; localhost does not work

port = 8649

ttl = 1

}

/* You can specify as many udp_recv_channels as you like as well. */

udp_recv_channel {

#mcast_join = 239.2.11.71

port = 8649

#bind = 239.2.11.71

#retry_bind = true

# Size of the UDP buffer. If you are handling lots of metrics you really

# should bump it up to e.g. 10MB or even higher.

# buffer = 10485760

}

/* You can specify as many tcp_accept_channels as you like to share

an xml description of the state of the cluster */

tcp_accept_channel {

port = 8655

# If you want to gzip XML output

gzip_output = no

}

hdp2

[root@hdp2 ganglia]# grep -v ^# gmetad.conf

data_source "hdp3" hdp3:8655

RRAs "RRA:AVERAGE:0.5:1:244" "RRA:AVERAGE:0.5:24:244" "RRA:AVERAGE:0.5:168:244" "RRA:AVERAGE:0.5:672:244"

gridname "hdp3-grid"

all_trusted on

setuid_username ganglia

xml_port 8651

interactive_port 8652

case_sensitive_hostnames 0

hdp1

[root@hdp1 ganglia]# grep -v ^# gmetad.conf

data_source "hdp3-grid" hdp2:8651

gridname "MyGrid"

case_sensitive_hostnames 1
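The two gmetad.conf files form a polling chain: hdp1 polls hdp2:8651, and hdp2 polls hdp3:8655. The following is a minimal Python sketch for extracting that chain from the `data_source` lines. It is not an official parser; it assumes the documented form `data_source "name" [interval] host[:port] ...` and falls back to port 8649 when none is given. The function name `parse_data_sources` is made up for illustration.

```python
import shlex


def parse_data_sources(conf_text):
    """Return the data_source entries found in gmetad.conf text."""
    sources = []
    for line in conf_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        tokens = shlex.split(line)  # handles the quoted source name
        if tokens[0] != "data_source" or len(tokens) < 3:
            continue
        name, rest = tokens[1], tokens[2:]
        interval = None
        if rest[0].isdigit():  # optional polling interval in seconds
            interval = int(rest[0])
            rest = rest[1:]
        endpoints = []
        for item in rest:
            host, _, port = item.partition(":")
            # Assumption: 8649 is used when the port is omitted
            endpoints.append((host, int(port) if port else 8649))
        sources.append({"name": name, "interval": interval, "endpoints": endpoints})
    return sources
```

Run against the hdp1 file, this should return a single source named `hdp3-grid` pointing at `("hdp2", 8651)`, which is the `xml_port` configured on hdp2.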

② Startup

hdp3: service gmond start

hdp2: service gmetad start

hdp1: service gmetad start

service httpd start
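After the services start, it can help to confirm that each TCP port in the chain accepts connections before opening gweb. The following is a minimal Python sketch using only the standard library. The ports 8655 and 8651 for hdp3 and hdp2 come from the configuration above. Port 8651 on hdp1 (the gmetad default) and port 80 for httpd are assumptions. The names `port_open` and `check_endpoints` are made up for illustration.

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host name not resolvable
        return False


def check_endpoints(endpoints):
    """Map each (host, port) pair to whether it accepts connections."""
    return {(host, port): port_open(host, port) for host, port in endpoints}


# Endpoints for this test; the last two ports are assumed defaults.
ENDPOINTS = [("hdp3", 8655), ("hdp2", 8651), ("hdp1", 8651), ("hdp1", 80)]
```

Calling `check_endpoints(ENDPOINTS)` from any machine that can resolve the three host names gives a quick view of which service, if any, failed to come up.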

③ Verification

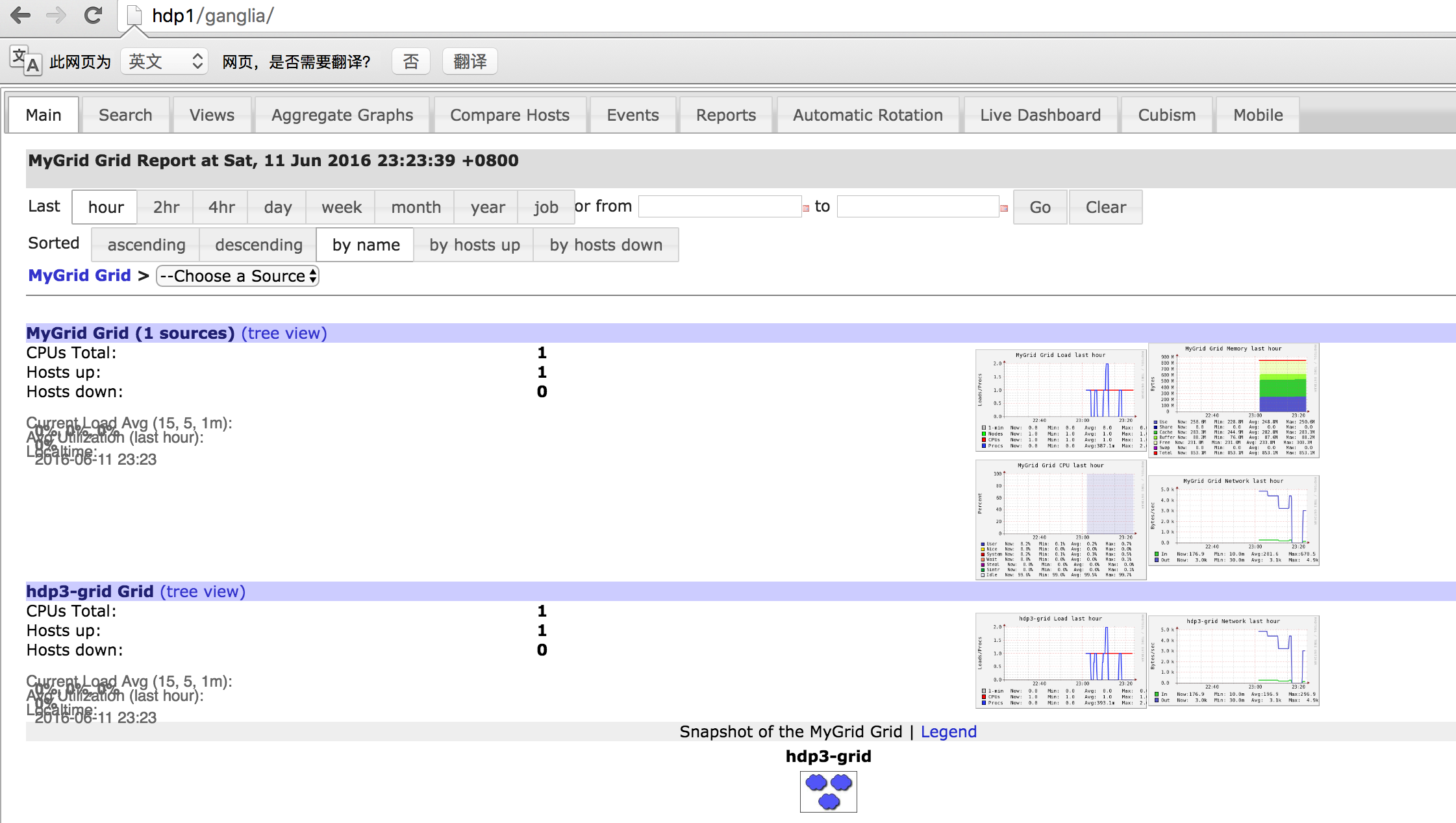

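Clusters are now displayed per grid. With only a few clusters this is unnecessary, but with many clusters, showing them all under a single grid makes the page very long and hard to read.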

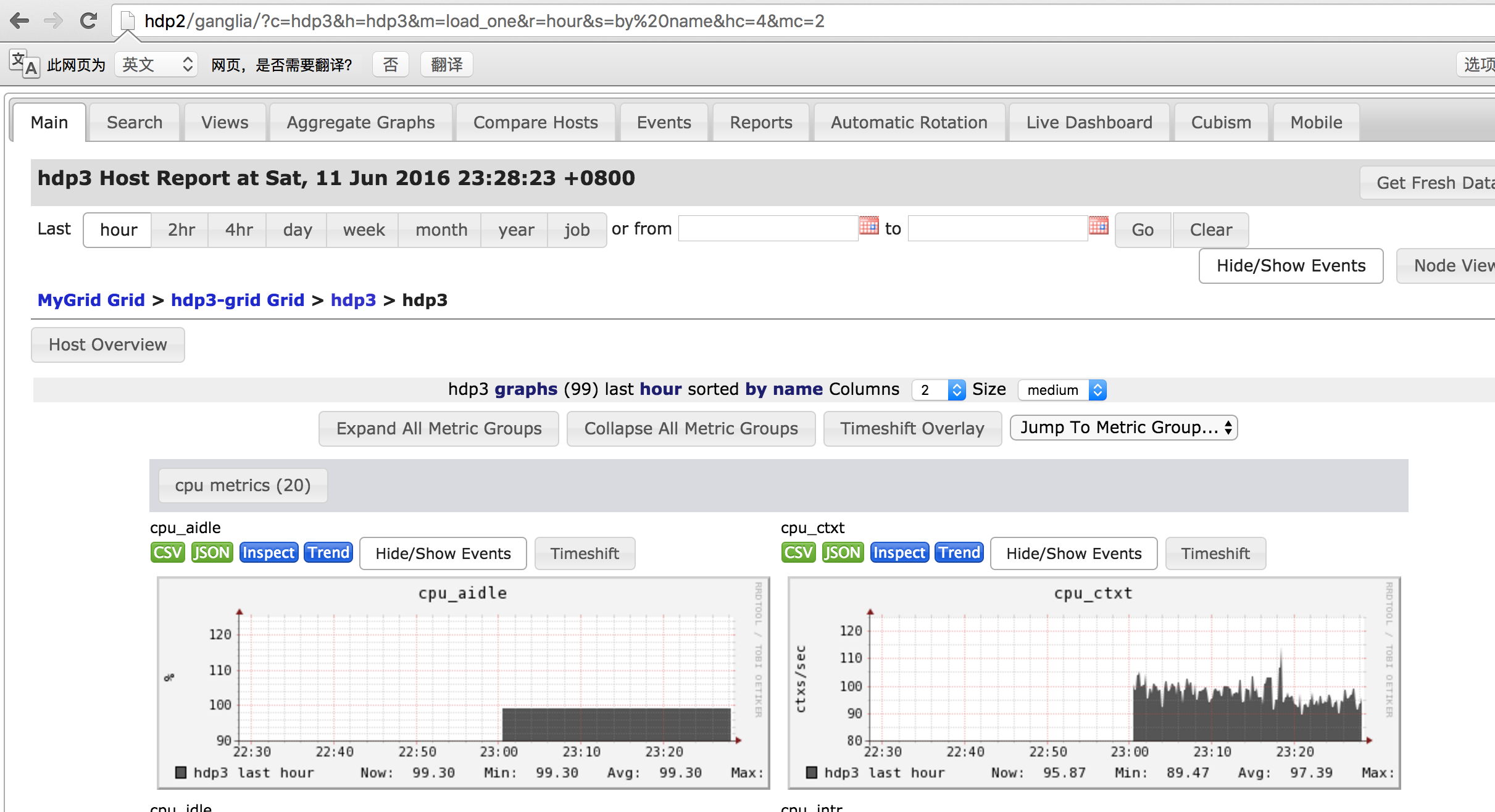

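Per-grid display has one drawback: to view a grid nested under the top-level grid (that is, a remote grid), that grid must also be running the gweb service, as shown in the screenshot.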
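One likely reason for this requirement is that each `GRID` element in gmetad's XML carries an `AUTHORITY` URL, and gweb appears to follow that URL when a remote grid is opened. The following is a minimal Python sketch, not part of the original test, that lists the authority URL of every grid in an XML dump. The function name `grid_authorities` is made up for illustration, and the sketch assumes the `NAME` and `AUTHORITY` attributes are present on `GRID`.

```python
import xml.etree.ElementTree as ET


def grid_authorities(xml_data):
    """Map each grid name in a Ganglia XML dump to its AUTHORITY URL."""
    root = ET.fromstring(xml_data)
    # iter() walks nested GRID elements too, so remote grids are included
    return {grid.get("NAME"): grid.get("AUTHORITY") for grid in root.iter("GRID")}
```

If the URL reported for `hdp3-grid` points at a host with no web server, the remote-grid link in gweb has nothing to open. This would explain why gweb is needed on hdp2. The `authority` option in gmetad.conf can be used to set this URL explicitly.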

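Test Ⅱ

Preparation

Same as Test Ⅰ, except that the level-1 gmetad is version 3.1.7 and the level-2 gmetad is version 3.7.2.

Procedure

① Configuration files

See Test Ⅰ-①

② Same as Test Ⅰ-②

③ Verification

Same as Test Ⅰ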