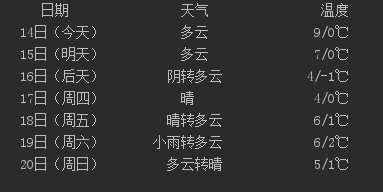

0x00 获取某地七天天气预报

打开中国天气网,随便查询当地天气,查看返回页面的源码

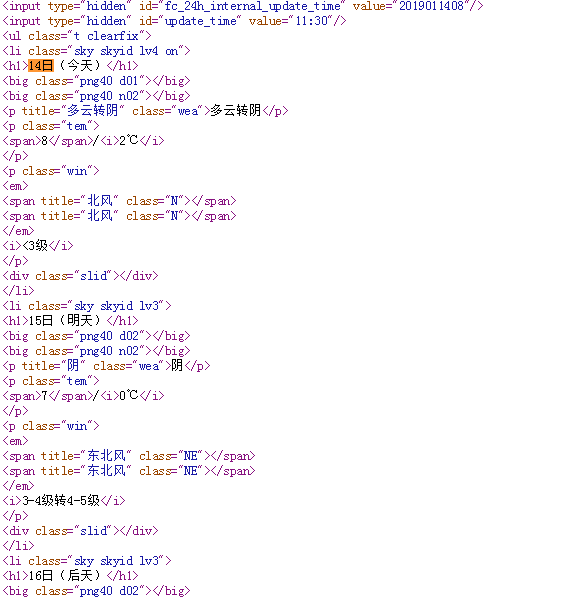

发现我们需要的信息都在<ul>标签下的<li>标签中,所以这里的基本思路就是遍历<ul>标签下的<li>,每次获取日期,天气,温度三个数据,

首先定义四个函数来实现全部功能。第一个函数获取网页信息,第二个函数将信息提取存入列表,第三个函数打印这些数据,最后是一个main函数

import requests from bs4 import BeautifulSoup def getTHMLText(url): #获取网页 try: header={"User-Agent":"Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0"} r = requests.get(url, headers = header) r.raise_for_status() r.encoding = r.apparent_encoding return r.text except: return '' def weather_info(html, lilist): soup = BeautifulSoup(html, "html.parser") li = soup.select("ul[class='t clearfix'] li") for i in li: #遍历li标签,并将数据存入列表 try: date = i.select("h1")[0].text wea = i.select("p[class='wea']")[0].text temp = i.select('p[class="tem"] span')[0].text + "/" + i.select('p[class="tem"] i')[0].text lilist.append([date, wea, temp]) except: return "" def printList(ulist, num): #打印列表 tplt = "{0:^10} {1:{3}^10} {2:^10}" print(tplt.format("日期", "天气", "温度", chr(12288))) for i in range(num): u = ulist[i] print(tplt.format(u[0], u[1], u[2], chr(12288))) def main(): lilist = [] url = 'http://www.weather.com.cn/weather/101190501.shtml' html = getTHMLText(url) weather_info(html, lilist) printList(lilist, 7) main()

0x01 单线程下载网页全部图片

目标网站:http://www.weather.com.cn/weather/101190501.shtml

查看源码获取思路,筛选出所有img标签,图片url是每个img标签下src的值,将其储存为列表,遍历列表下载图片

from bs4 import BeautifulSoup import requests def getHTMLText(url): try: header = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0"} r = requests.get(url, headers=header, timeout= 30) r.raise_for_status() r.encoding = r.apparent_encoding return r.text except: return '' def PictureUrlList(html, urls): soup = BeautifulSoup(html, "html.parser") img = soup.select('img') for i in img: try: src = i['src'] urls.append(src) except: return '' def downloader(urls): try: for u in range(len(urls)): header = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0"} r = requests.get(urls[u], headers = header) pic = r.content path = 'D:/PIC/'+str(u)+'.png' with open(path, 'wb') as f: f.write(pic) except: pass def main(): urls = [] url = "http://www.weather.com.cn/weather/101190501.shtml" html = getHTMLText(url) PictureUrlList(html, urls) downloader(urls) main()