Goal: the top ten rows of data

Process:

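    # -*- coding: utf-8 -*-
    # Without the declaration above the script raised an error for me.
    import csv
    import datetime
    import re

    import requests
    from bs4 import BeautifulSoup

    url = 'http://data.10jqka.com.cn/market/rzrq/'
    today = datetime.date.today().strftime('%Y%m%d')  # collection date

    res = requests.get(url)
    res.encoding = res.apparent_encoding
    html = BeautifulSoup(res.text, 'lxml')
    data = html.select('#table1 > table > tbody')
    # Drop hyphens so that each date collapses into a single run of digits.
    data = str(data).replace('-', '')
    # Digits, an optional decimal point, then two more digits:
    # matches both the collapsed dates and the two-decimal amounts.
    datas = re.findall(r'(\d+\.?\d\d)', data)
    # Every table row holds 13 values, so slice the flat list 13 at a time.
    exc = [datas[i:i + 13] for i in range(0, len(datas), 13)]

    # Column names as shown on the site: trade date, then financing balance,
    # financing purchases, securities-lending balance and combined margin
    # balance (each for Shanghai, Shenzhen and both), plus the collection date.
    header = ('交易日期', '本日融资余额(亿元)上海', '本日融资余额(亿元)深圳', '本日融资余额(亿元)沪深合计',
              '本日融资买入额(亿元)上海', '本日融资买入额(亿元)深圳', '本日融资买入额(亿元)沪深合计',
              '本日融券余量余额(亿元)上海', '本日融券余量余额(亿元)深圳', '本日融券余量余额(亿元)沪深合计',
              '本日融资融券余额(亿元)上海', '本日融资融券余额(亿元)深圳', '本日融资融券余额(亿元)沪深合计',
              '采集日期')

    # utf-8-sig lets spreadsheet programs recognise the Chinese headers;
    # the with-block also closes the file, which the first draft never did.
    with open('rzrq.csv', 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.writer(f)
        writer.writerow(header)
        for line in exc:
            line.append(today)  # append the collection date to every row
            writer.writerow(line)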
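
The extraction step in the script (strip hyphens, pull out numbers with a regex, slice the flat list into rows) can be tried in isolation. This is only a sketch on a made-up fragment: the markup and the values are invented, and `FIELDS_PER_ROW` is a hypothetical name set to 4 here, where the real table has 13 values per row.

```python
import re

# Made-up fragment standing in for str(tbody); these are not real market values.
sample = (
    "<tr><td>2020-04-22</td><td>100.50</td><td>200.25</td><td>300.75</td></tr>"
    "<tr><td>2020-04-21</td><td>101.00</td><td>201.10</td><td>302.10</td></tr>"
)

FIELDS_PER_ROW = 4  # hypothetical; the real table has 13 values per row

# Remove hyphens so a date like 2020-04-22 becomes one run of digits.
flat = sample.replace("-", "")

# One or more digits, an optional decimal point, then two more digits.
tokens = re.findall(r"(\d+\.?\d\d)", flat)

# Slice the flat token list into consecutive rows.
rows = [tokens[i:i + FIELDS_PER_ROW] for i in range(0, len(tokens), FIELDS_PER_ROW)]

for row in rows:
    print(row)
# ['20200422', '100.50', '200.25', '300.75']
# ['20200421', '101.00', '201.10', '302.10']
```

Two things to watch with this approach: stripping hyphens also removes minus signs, and a value with fewer than two trailing digits (a bare `7`, or `5.5`) is not matched at all, which would shift every later value into the wrong column.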

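Result:

Extension: the requirement only asks for the top ten, but to be on the safe side let's fetch everything.

At this point I ran into a problem with the page-navigation control: when I click it, the URL doesn't change at all. Time to go look at the page source.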

`javascript:void(0)`. What???? The link hides its real target, so now what? Let's keep digging and see where it has been hidden.
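
An href of `javascript:void(0)` navigates nowhere, so the page change has to come from a script, typically through a background request. As a rough sketch of how to spot such links, the snippet below collects every `<a>` tag and keeps those whose href starts with `javascript:`. The markup is made up for illustration (the real page may look different), and `LinkCollector` is a hypothetical helper built on the standard library's `html.parser`.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a> tag that is fed in."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Made-up pager markup; an assumption, not copied from the site.
pager_html = (
    '<div class="pager">'
    '<a href="javascript:void(0)">1</a>'
    '<a href="javascript:void(0)">2</a>'
    '<a href="/about.html">About</a>'
    '</div>'
)

collector = LinkCollector()
collector.feed(pager_html)
collector.close()

# Links that carry no real URL: their target must be found in the network traffic.
script_links = [text for href, text in collector.links
                if href.lower().startswith("javascript:")]
print(script_links)  # ['1', '2']
```

When a list like this is not empty, the href is a dead end and the real request URL has to be found elsewhere, for example in the browser's developer tools.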

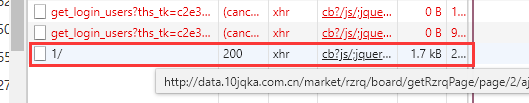

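See the screenshot:

Click through in that order and watch what happens. Hey, there it is!

OK, we have the URL, job done! Now write it the way you would for any dynamic page. Here is the code: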

    # -*- coding: utf-8 -*-
    # Without the declaration above the script raised an error for me.
    import csv
    import datetime
    import re

    import requests
    from bs4 import BeautifulSoup

    today = datetime.date.today().strftime('%Y%m%d')  # collection date

    # Cookie copied from my browser session.
    cookie = (
        "Hm_lvt_60bad21af9c824a4a0530d5dbf4357ca=1587644691; "
        "Hm_lvt_f79b64788a4e377c608617fba4c736e2=1587644692; "
        "Hm_lvt_78c58f01938e4d85eaf619eae71b4ed1=1587644692; "
        "Hm_lpvt_f79b64788a4e377c608617fba4c736e2=1587644737; "
        "Hm_lpvt_60bad21af9c824a4a0530d5dbf4357ca=1587644737; "
        "Hm_lpvt_78c58f01938e4d85eaf619eae71b4ed1=1587644737; "
        "v=AmU_JzrXV8VWALMZXrG3U_-tdCqcohk0Y1b9iGdKIRyrfotcL_IpBPOmDVT0"
    )
    url = "http://data.10jqka.com.cn/market/rzrq/board/getRzrqPage/page/{}/ajax/1/"
    headers = {
        'User-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.113 Safari/537.36',
        'Cookie': cookie,
        'Connection': 'keep-alive',
        'Accept': '*/*',
        'Accept-Encoding': 'gzip, deflate',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'Host': 'data.10jqka.com.cn',
        # 'Referer': 'http://www.sse.com.cn/market/stockdata/overview/weekly/'
    }

    # Same column names as in the first script, plus the collection date.
    header = ('交易日期', '本日融资余额(亿元)上海', '本日融资余额(亿元)深圳', '本日融资余额(亿元)沪深合计',
              '本日融资买入额(亿元)上海', '本日融资买入额(亿元)深圳', '本日融资买入额(亿元)沪深合计',
              '本日融券余量余额(亿元)上海', '本日融券余量余额(亿元)深圳', '本日融券余量余额(亿元)沪深合计',
              '本日融资融券余额(亿元)上海', '本日融资融券余额(亿元)深圳', '本日融资融券余额(亿元)沪深合计',
              '采集日期')

    # Open the file outside the loop: opening it inside with mode 'w' would
    # overwrite the data written by the previous iteration.
    with open('rzrq.csv', 'w', newline='', encoding='utf-8-sig') as f:
        writer = csv.writer(f)
        writer.writerow(header)
        for page in range(1, 6):
            res = requests.get(url.format(page), headers=headers)
            html = BeautifulSoup(res.text, 'lxml')
            data = html.select('#table1 > table > tbody')
            data = str(data).replace('-', '')
            datas = re.findall(r'(\d+\.?\d\d)', data)
            exc = [datas[k:k + 13] for k in range(0, len(datas), 13)]
            for line in exc:
                line.append(today)  # append the collection date to every row
                writer.writerow(line)
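
The comment about opening the file outside the loop is easy to check with a small experiment. The sketch below writes the same made-up pages twice into a temporary directory, once reopening the file with mode `'w'` for every page and once opening it a single time. The function names and the data are hypothetical.

```python
import csv
import os
import tempfile

# Made-up rows for two pages.
pages = [[["a", 1], ["b", 2]], [["c", 3]]]


def write_reopening(path, pages):
    """Reopen with mode 'w' for every page: each open truncates the file."""
    for rows in pages:
        with open(path, "w", newline="") as f:
            csv.writer(f).writerows(rows)


def write_once(path, pages):
    """Open once before the loop: every page ends up in the file."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        for rows in pages:
            writer.writerows(rows)


def read_rows(path):
    with open(path, newline="") as f:
        return list(csv.reader(f))


with tempfile.TemporaryDirectory() as tmp:
    reopened_path = os.path.join(tmp, "reopened.csv")
    once_path = os.path.join(tmp, "once.csv")
    write_reopening(reopened_path, pages)
    write_once(once_path, pages)
    reopened_rows = read_rows(reopened_path)
    once_rows = read_rows(once_path)

print(reopened_rows)  # [['c', '3']]
print(once_rows)      # [['a', '1'], ['b', '2'], ['c', '3']]
```

Opening inside the loop with mode `'a'` would also keep all rows, but then the header row has to be guarded so it is written only once.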

Result:

Yep, perfect!
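
The loop in the script stops at a hard-coded page 5. If the goal really is to fetch everything, one option is to keep requesting pages until one comes back without rows. The sketch below assumes that a page past the end yields no rows, which I have not verified against the site; `iter_all_rows`, `fetch_rows` and `max_pages` are hypothetical names, and the fetcher is a stand-in with made-up data rather than a real request.

```python
from typing import Callable, Dict, Iterator, List

Row = List[str]


def iter_all_rows(fetch_rows: Callable[[int], List[Row]],
                  max_pages: int = 100) -> Iterator[Row]:
    """Yield rows page by page until a page is empty or max_pages is reached."""
    for page in range(1, max_pages + 1):
        rows = fetch_rows(page)
        if not rows:
            break  # assumed end-of-data signal
        yield from rows


# Stand-in for the real request-and-parse step; the values are made up.
fake_pages: Dict[int, List[Row]] = {
    1: [["20200422", "1.00"]],
    2: [["20200421", "2.00"]],
}


def fake_fetch(page: int) -> List[Row]:
    return fake_pages.get(page, [])


all_rows = list(iter_all_rows(fake_fetch))
print(len(all_rows))  # 2
```

In the real script, `fetch_rows` would wrap the request, the regex extraction and the 13-value slicing for one page. The `max_pages` cap keeps the loop from running forever if the site repeats its last page instead of returning an empty one.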