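How this started: my wife came across a Sina Blog whose articles she thought were excellent, and she wanted to print them out to read (she finds reading on a screen uncomfortable). It was a request from my wife, so I had no choice but to get to work.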

Language: C#

Third-party libraries used:

HtmlAgilityPack and NPOI (both available from NuGet)

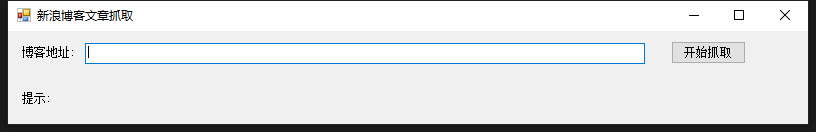

The program's interface:

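The idea is very simple, so it only took about an hour to write. The program downloads a page of the blog's article list, collects the article links on it, downloads each article, extracts its title and body with XPath, saves each article as a Word (.docx) file, and then follows the "next page" link until there are no more pages. Here is the code: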

```csharp
using System;
using System.IO;
using System.Net;
using System.Text;
using System.Text.RegularExpressions;
using System.Threading;
using System.Threading.Tasks;
using System.Windows.Forms;
using HtmlAgilityPack;
using NPOI.XWPF.UserModel;

namespace blogSpider
{
    public partial class Form1 : Form
    {
        private int _successNum;

        // Output folder: <current directory>\word
        private static readonly string wordFilePath = Path.Combine(Environment.CurrentDirectory, "word");

        public Form1()
        {
            InitializeComponent();
        }

        /// <summary>
        /// "Scrape" button: crawls the blog starting from the URL in txt_url
        /// </summary>
        private void button1_Click(object sender, EventArgs e)
        {
            // Read the text box here, on the UI thread; controls must not be touched from the worker thread
            string url = txt_url.Text.Trim();
            if (!url.Contains("http"))
            {
                MessageBox.Show(@"Please enter a valid URL");
                return;
            }

            // Crawl on a background thread so the UI stays responsive
            Task.Run(() =>
            {
                try
                {
                    Directory.CreateDirectory(wordFilePath); // does nothing if the folder already exists
                    using (var webClient = new WebClient())
                    {
                        webClient.Encoding = Encoding.UTF8;
                        GetBlogContentRecursion(url, webClient);
                    }
                    Invoke((MethodInvoker)(() => { lb_tip.Text = @"Scraping finished"; }));
                }
                catch (Exception ex)
                {
                    Invoke((MethodInvoker)(() => { lb_tip.Text = $"Scraping failed: {ex.Message}"; }));
                }
            });
        }

        /// <summary>
        /// Saves every article on one list page, then recurses into the next page
        /// </summary>
        private void GetBlogContentRecursion(string url, WebClient wb)
        {
            var doc = new HtmlAgilityPack.HtmlDocument();
            doc.LoadHtml(wb.DownloadString(url));
            HtmlNode parentNode = doc.DocumentNode;

            // Links to all articles on the current list page (SelectNodes returns null if nothing matches)
            HtmlNodeCollection hrefNodes = parentNode.SelectNodes("//span[@class='atc_title']/a");
            if (hrefNodes != null)
            {
                foreach (HtmlNode node in hrefNodes)
                {
                    string articleUrl = node.Attributes["href"].Value;
                    Console.WriteLine(articleUrl);

                    // Download the article page and pick out the title and body
                    var contentDoc = new HtmlAgilityPack.HtmlDocument();
                    contentDoc.LoadHtml(wb.DownloadString(articleUrl));
                    HtmlNode contentNode = contentDoc.DocumentNode;
                    var titleNode = contentNode.SelectSingleNode("//h2[@class='titName SG_txta']");    // title
                    var articleNode = contentNode.SelectSingleNode("//div[@class='articalContent ']"); // body (exact class match, trailing space included)

                    if (titleNode != null && articleNode != null)
                    {
                        // Decode entities such as &nbsp; and trim surrounding whitespace
                        string title = HtmlEntity.DeEntitize(titleNode.InnerText).Trim();
                        string content = HtmlEntity.DeEntitize(articleNode.InnerText).Trim();

                        // Use the same cleaned-up path for the "already saved?" check and for saving
                        string filePath = Path.Combine(wordFilePath, SanitizeFileName(title) + ".docx");
                        if (!File.Exists(filePath)) // skip articles already saved under the same title
                        {
                            GenerateWord(title, content, filePath);
                            Invoke((MethodInvoker)(() =>
                            {
                                lb_tip.Text = $"Saved {++_successNum} article(s), title: {title}";
                            }));
                        }
                    }

                    Thread.Sleep(1000); // delay between requests so Sina doesn't block the IP
                }
            }

            // Link to the next list page; stop when there is none
            var nextPageNode = parentNode.SelectSingleNode("//li[@class='SG_pgnext']/a");
            if (nextPageNode != null)
            {
                GetBlogContentRecursion(nextPageNode.Attributes["href"].Value, wb);
            }
        }

        /// <summary>
        /// Removes whitespace, punctuation, and characters Windows does not allow in file names
        /// </summary>
        private static string SanitizeFileName(string title)
        {
            return Regex.Replace(title, @"[\s\[\]\^\-_*×―(^)$%~!！@#…&¥—+=<>《》?？:：•`·、。，,;；.""‘’“”|/\\]", "");
        }

        /// <summary>
        /// Creates a .docx file: a centered bold title, then one paragraph per line of the article
        /// </summary>
        private static void GenerateWord(string title, string content, string filePath)
        {
            var doc = new XWPFDocument();

            var p1 = doc.CreateParagraph();
            p1.Alignment = ParagraphAlignment.CENTER;
            var r1 = p1.CreateRun();
            r1.FontSize = 16;
            r1.IsBold = true;
            r1.SetText(title);

            // Write each non-empty line as its own paragraph so the printout keeps the article's line structure
            foreach (string rawLine in content.Split('\n'))
            {
                string line = rawLine.Trim(); // also removes '\r'
                if (line.Length == 0) continue;
                var p2 = doc.CreateParagraph();
                p2.Alignment = ParagraphAlignment.LEFT;
                p2.CreateRun().SetText(line);
            }

            using (var fs = new FileStream(filePath, FileMode.Create, FileAccess.Write))
            {
                doc.Write(fs);
            }
        }
    }
}
```
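The title-cleaning `Regex.Replace` strips a hand-picked list of punctuation. If all that matters is that the title becomes a legal Windows file name, a minimal alternative is to remove only the characters the file system rejects. The sketch below is an assumption, not what the program above does; `FileNameHelper`, `ToSafeFileName`, and the `"untitled"` fallback are hypothetical names chosen for illustration. It lists Windows' reserved characters explicitly because `Path.GetInvalidFileNameChars()` only returns `'\0'` and `'/'` on Linux and macOS.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical helper (not part of the original program): turns an article
// title into a name that is legal as a Windows file name.
public static class FileNameHelper
{
    private static readonly HashSet<char> InvalidChars = BuildInvalidChars();

    private static HashSet<char> BuildInvalidChars()
    {
        // Start from what the current OS rejects...
        var set = new HashSet<char>(Path.GetInvalidFileNameChars());
        // ...and add Windows' reserved characters explicitly, because on
        // Linux/macOS GetInvalidFileNameChars() only returns '\0' and '/'.
        set.UnionWith("\\/:*?\"<>|");
        return set;
    }

    public static string ToSafeFileName(string title, string fallback = "untitled")
    {
        var sb = new StringBuilder(title.Length);
        foreach (char c in title)
        {
            // Drop reserved characters and control characters such as '\n'
            if (!InvalidChars.Contains(c) && !char.IsControl(c))
            {
                sb.Append(c);
            }
        }

        // Windows ignores trailing dots and spaces in file names, so remove them too
        string name = sb.ToString().Trim().TrimEnd('.', ' ');
        return name.Length == 0 ? fallback : name;
    }
}
```

Chinese characters and ordinary punctuation such as `《》` are kept, so titles stay recognizable. Whichever cleaning function is used, the important part is to build the output path once, e.g. `Path.Combine(folder, FileNameHelper.ToSafeFileName(title) + ".docx")`, and use that same path for both the `File.Exists` check and the save; otherwise the "skip if already downloaded" check never matches the file that was actually written.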