dfcf_spider

Experiment source code:

# Goal: collect each post's title, read count, comment count, link, and publish time

import requests
from bs4 import BeautifulSoup
import time
import csv
import re

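# Request headers copied from the browser
# When a page is requested by a crawler, the response text may contain messages such as
# "Sorry, unable to access". This means the site has blocked the request, and the
# anti-crawler check has to be worked around.
# Sending custom headers is one way to get past such checks with requests: the request
# then looks like it comes from a regular browser rather than from a script.
# For sites with anti-crawler measures, set the header fields so that the request mimics a browser visit.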

head = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
    'Accept-Encoding': 'gzip,deflate',
    'Accept-Language': 'zh-CN,zh;q=0.9',
    'Cache-Control': 'max-age=0',
    'Connection': 'keep-alive',
    'Cookie': 'st_pvi=87732908203428;st_si=12536249509085;qgqp_b_id=9777e9c5e51986508024bda7f12e6544;_adsame_fullscreen_16884=1',
    'Host': 'guba.eastmoney.com',
    'Referer': 'http://guba.eastmoney.com/list,600596,f_1.html',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36',
}

# Storage: append rows to a CSV file

f = open('dfcw.csv','a',newline='')

w = csv.writer(f)

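# Get a post's full timestamp from its detail page (the list page does not show the year).
# The same approach can be reused to fetch other details of a post.
def get_time(url):
    try:
        q = requests.get(url, headers=head)
        soup = BeautifulSoup(q.text, 'html.parser')
        ptime = soup.find('div', {'class': 'zwfbtime'}).get_text()
        # Extract "YYYY-MM-DD HH:MM:SS"; \d matches a digit, whereas a bare d matches the letter "d"
        ptime = re.findall(r'\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}', ptime)[0]
        print(ptime)
        return ptime
    except Exception:
        # Request failed, the element is missing, or no timestamp matched
        return ''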

# Extract the target fields from the n-th list page, parsed with BeautifulSoup
def get_urls(url):
    print("url:" + url)
    baseurl = 'http://guba.eastmoney.com/'
    q = requests.get(url, headers=head)
    soup = BeautifulSoup(q.text, 'html.parser')
    urllist = soup.find_all('div', {'class': 'articleh'})
    # print(urllist)
    for i in urllist:
        if i.find('a') is not None:
            try:
                # Detail-page path, with slashes removed so it can be appended to baseurl
                detailurl = i.find('a').attrs['href'].replace('/', '')
                # print("ddd"+detailurl)
                title = i.find('a').get_text()
                yuedu = i.find('span', {'class': 'l1'}).get_text()   # read count
                pinlun = i.find('span', {'class': 'l2'}).get_text()  # comment count
                # Fetch the post's timestamp from its detail page
                ptime = get_time(baseurl + detailurl)
                w.writerow([detailurl, title, yuedu, pinlun, ptime])
                # print("zz"+baseurl + detailurl)
            except Exception:
                # Skip rows that lack the expected fields
                pass

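# Loop over the list pages
# 新安股份 (600596) board, pages 1 to 2; raise the upper bound to crawl through page N
for i in range(1, 3):
    print(i)
    get_urls('http://guba.eastmoney.com/list,600596,f_' + str(i) + '.html')

# Close the CSV file so that all buffered rows are written to disk
f.close()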
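
The timestamp extraction used in get_time can be checked offline, without sending any request to the site. The sketch below is only an illustration: the helper name extract_timestamp and the sample string are assumptions made up for this example, not text captured from guba.eastmoney.com. It shows that the pattern needs `\d` (a digit) in each position to pick out a "YYYY-MM-DD HH:MM:SS" timestamp.

```python
import re

# Pattern for "YYYY-MM-DD HH:MM:SS"; \d matches one digit.
TIMESTAMP_RE = re.compile(r'\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}')


def extract_timestamp(text):
    """Return the first timestamp found in text, or '' if there is none."""
    match = TIMESTAMP_RE.search(text)
    return match.group(0) if match else ''


# Hypothetical sample of the text inside the 'zwfbtime' element (an assumption).
sample = '发表于 2018-04-20 09:15:32 东方财富网iPhone版'

print(extract_timestamp(sample))                     # 2018-04-20 09:15:32
print(repr(extract_timestamp('no timestamp here')))  # ''
```

Returning an empty string when nothing matches mirrors the fallback in get_time, so a row is still written to the CSV file even if the detail page has no recognizable timestamp.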

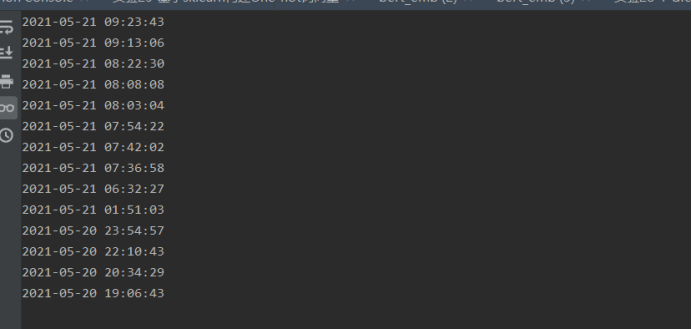

Screenshot of the experiment results: