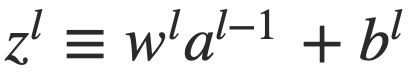

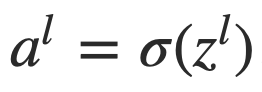

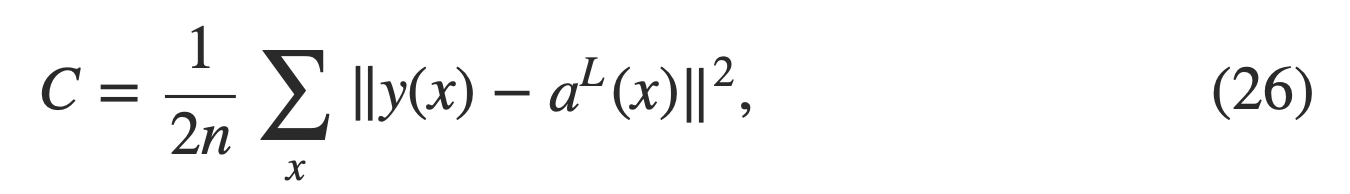

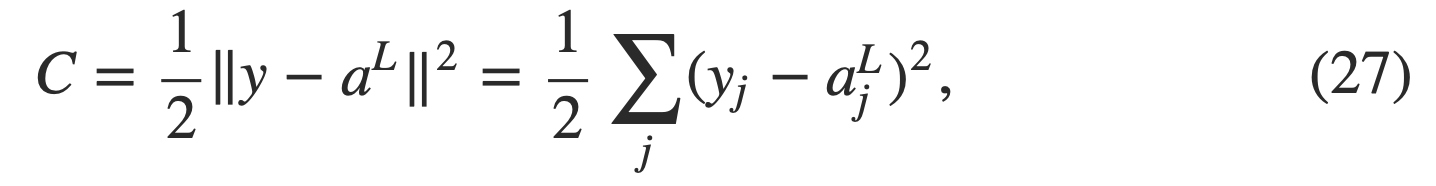

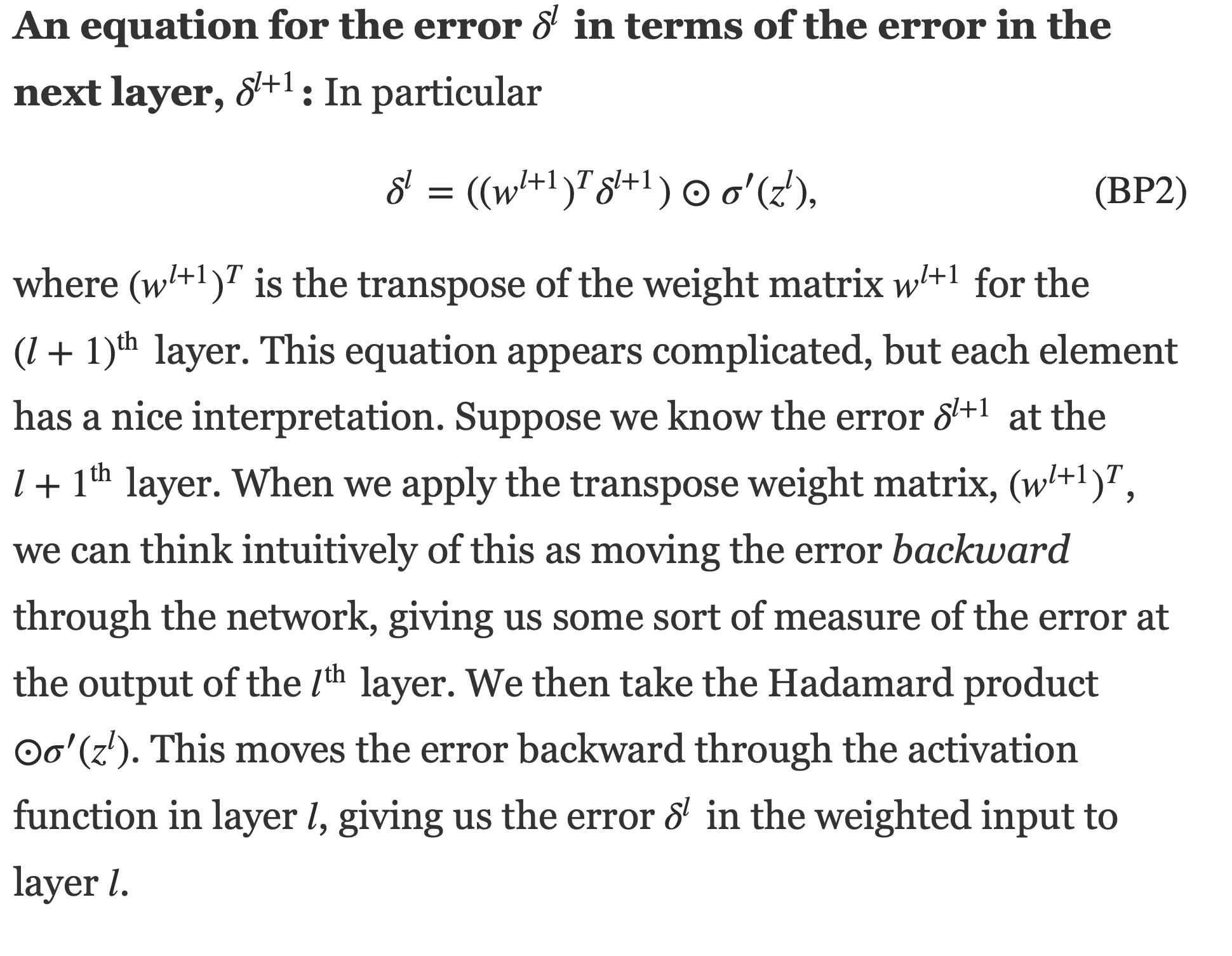

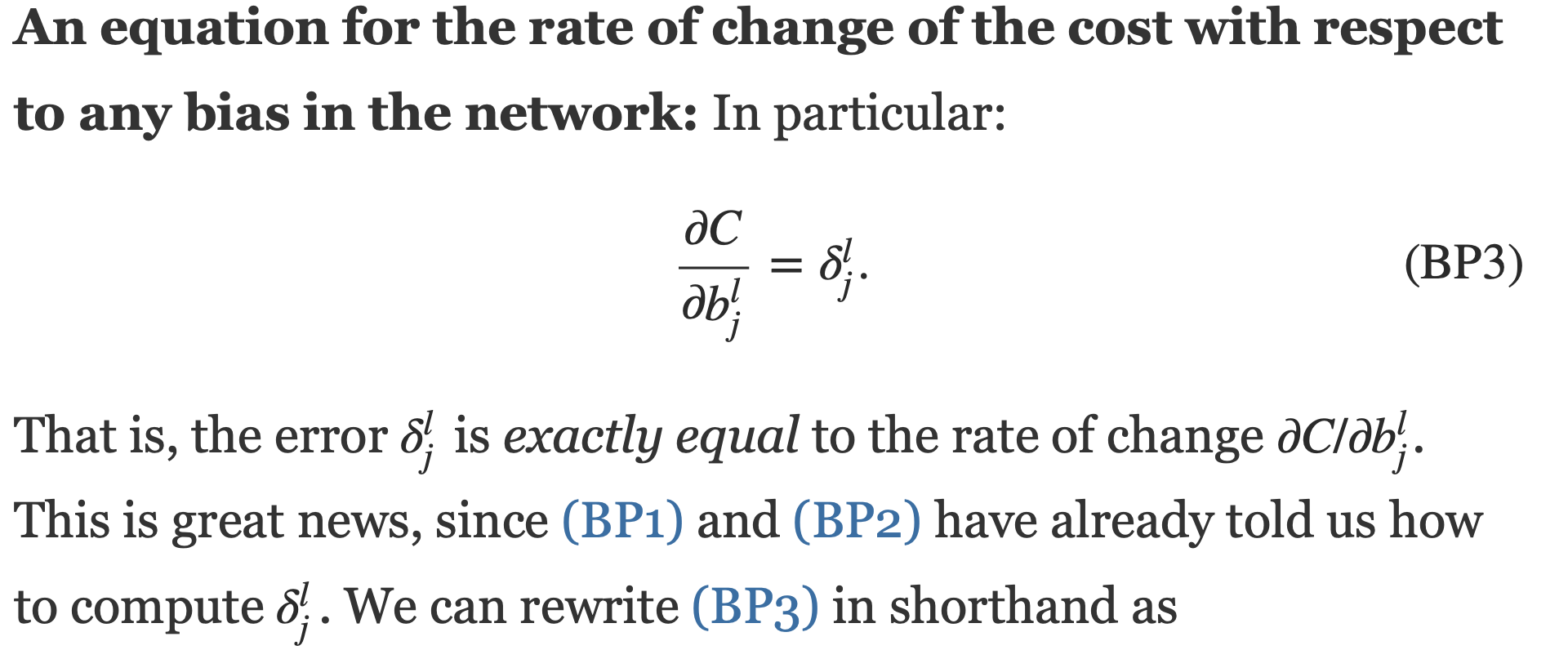

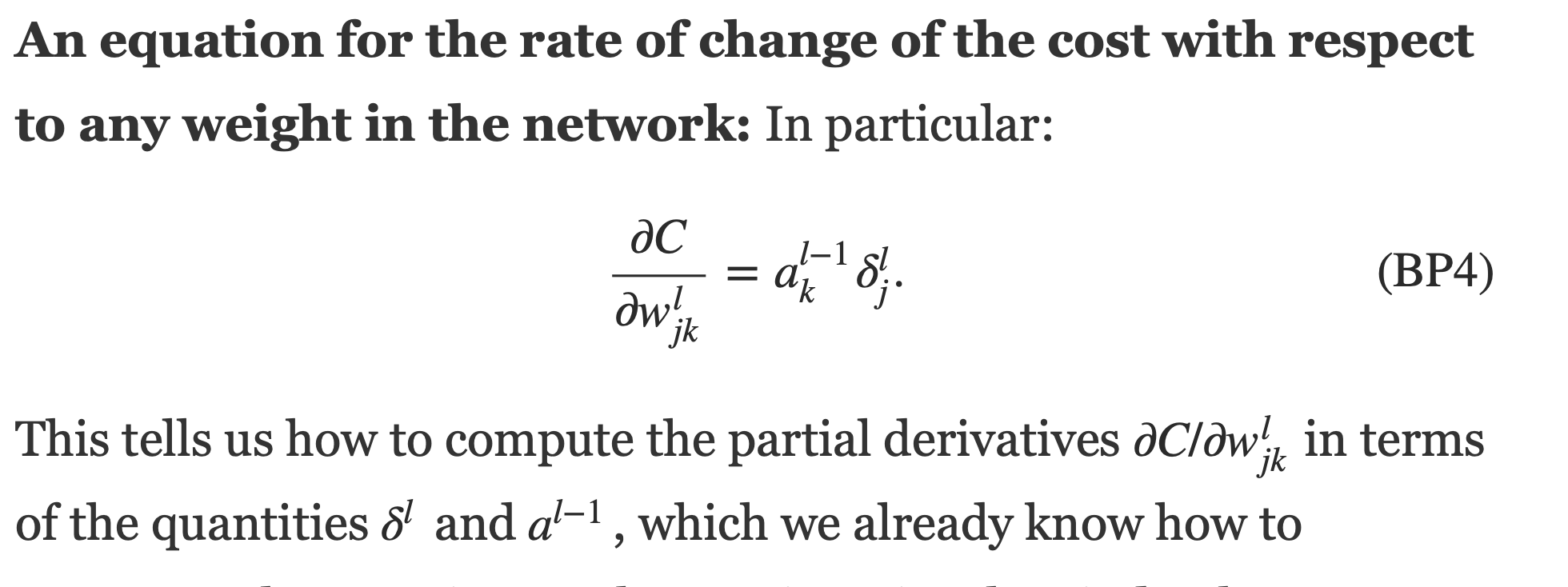

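In summary, the error of a neuron is defined as the partial derivative of the cost function C with respect to the neuron's weighted input z. For the output layer, applying the chain rule to the composite function C(a, y) lets us write this error in terms of the activations, which gives BP1. BP2 then passes the error backward through the network, expressing the error in one layer in terms of the error in the next layer. Combining BP1 and BP2, we can calculate the error in every layer. Finally, the equations for the partial derivatives with respect to the biases and the weights turn those errors into the gradients we want.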
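The steps in this summary can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not a reference implementation: it assumes a sigmoid activation and the quadratic cost C = ½‖a − y‖², and the names `make_network`, `feedforward`, `backprop`, and `cost` are hypothetical helpers chosen for this example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def make_network(sizes, seed=0):
    """Random weights and biases; weights[l][j][k] links neuron k in layer l to neuron j in layer l+1."""
    rng = random.Random(seed)
    weights = [[[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
               for n_in, n_out in zip(sizes[:-1], sizes[1:])]
    biases = [[rng.uniform(-1, 1) for _ in range(n_out)] for n_out in sizes[1:]]
    return weights, biases

def feedforward(weights, biases, x):
    """Return (zs, activations); activations[0] is the input x."""
    activations, zs = [x], []
    for W, b in zip(weights, biases):
        z = [sum(w * a for w, a in zip(row, activations[-1])) + b_j
             for row, b_j in zip(W, b)]
        zs.append(z)
        activations.append([sigmoid(z_j) for z_j in z])
    return zs, activations

def cost(weights, biases, x, y):
    """Quadratic cost for a single training example (an assumption of this sketch)."""
    _, activations = feedforward(weights, biases, x)
    return 0.5 * sum((a - t) ** 2 for a, t in zip(activations[-1], y))

def backprop(weights, biases, x, y):
    """Return (grad_w, grad_b), the partial derivatives of the cost for one example."""
    zs, activations = feedforward(weights, biases, x)
    # BP1: output-layer error = dC/da * sigma'(z); for the quadratic cost dC/da = a - y.
    delta = [(a - t) * sigmoid_prime(z)
             for a, t, z in zip(activations[-1], y, zs[-1])]
    grad_w = [None] * len(weights)
    grad_b = [None] * len(biases)
    for l in range(len(weights) - 1, -1, -1):
        # Bias gradient equals the error; weight gradient is input activation times error.
        grad_b[l] = list(delta)
        grad_w[l] = [[d_j * a_k for a_k in activations[l]] for d_j in delta]
        if l > 0:
            # BP2: pull the error back through the weights, then scale by sigma'(z).
            W = weights[l]
            delta = [sum(W[j][k] * delta[j] for j in range(len(delta)))
                     * sigmoid_prime(zs[l - 1][k])
                     for k in range(len(zs[l - 1]))]
    return grad_w, grad_b
```

For example, `weights, biases = make_network([2, 3, 1])` followed by `backprop(weights, biases, [0.5, -0.2], [1.0])` returns one gradient list per layer. A quick sanity check is to compare a single entry of `grad_w` against a finite-difference estimate computed with `cost`.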