HTTP flow diagram

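I. What routing does

1. Route matching (match)

(2) Advanced matching: headers and query_parameters

2. Routing

(1) Route (route): forward the request to the corresponding cluster

(2) Redirect (redirect): redirect the request to another domain or host

(3) Direct response (direct_response): answer the request directly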

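II. HTTP connection management

1. Envoy's built-in L4 filter, the HTTP connection manager, translates raw bytes into HTTP-level messages and events, such as received headers and bodies. It also handles functionality common to all HTTP connections and requests, including access logging, request ID generation and tracing, request/response header manipulation, route table management, and statistics.

1) Supports HTTP/1.1, WebSockets, and HTTP/2, but not SPDY;

2) The associated route table can be configured statically or generated dynamically through RDS in the xDS API;

3) Built-in retry plugins for configuring retry behavior: (1) Host Predicates (2) Priority Predicates;

4) Built-in support for 302 redirects: Envoy can capture a 302 redirect response, synthesize a new request, and send it to the upstream endpoint selected by the new route match. It then returns the response it receives to the client as the response to the original request;

5) Multiple configurable timeouts for HTTP connections and their constituent streams:
   (1) connection level: idle timeout and drain timeout (GOAWAY);
   (2) stream level: idle timeout, per-route upstream timeout, and per-route maximum gRPC timeout;

6) Dynamic forward proxy based on a custom cluster.

2. HTTP protocol features are implemented by individual HTTP filters, which fall roughly into three types: encoders, decoders, and encoder/decoders;

router (envoy.filters.http.router, formerly envoy.router) is one of the most commonly used filters. It forwards or redirects requests based on the route table, and also handles retries and generates statistics.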

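III. Advanced HTTP routing features

(1) Mapping domain names to virtual hosts;

(2) Prefix, exact, or regular-expression matching on the path; virtual-host-level TLS redirects;

(3) Path-level path/host redirects;

(4) Responses generated directly by Envoy;

(5) Explicit host rewrite;

(6) Prefix rewrite;

(7) Request retries and request timeouts based on HTTP headers or route configuration; traffic shifting based on runtime parameters;

(8) Traffic splitting across clusters based on weights or percentages;

(9) Route rules that match on arbitrary headers;

(10) Priority-based routing;

(11) Hash-policy-based routing;

... ...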

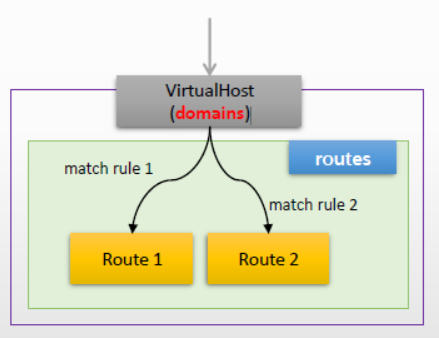

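1. The top-level element of a route configuration is the virtual host

1) Each virtual host has a logical name (name) and a set of domains (domains). A request is routed according to how its Host header matches these domains;

2) Once a virtual host has been selected by domain, the request is routed or redirected according to the configured routing mechanism (routes);

Configuration framework

```yaml
---
listeners:
- name: ...
  address: {...}
  filter_chains:
  - filters:
    - name: envoy.filters.network.http_connection_manager
      typed_config:
        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
        stat_prefix: ingress_http
        codec_type: AUTO
        route_config:
          name: ...
          virtual_hosts:
          - name: ...
            domains: []  # domains of this virtual host; during route matching the request's Host header value is checked against these entries
            routes:      # route entries; for requests matched to this virtual host, the path is checked against the condition defined by match in each route
            - name: ...
              match: {...}            # common nested fields: prefix|path|safe_regex|connect_matcher; defines the condition by exactly one of path prefix, exact path, regular expression, or connect matcher
              route: {...}            # common nested fields: cluster|cluster_header|weighted_clusters; the target is a cluster, a cluster named in a request header, or weighted clusters (traffic splitting)
              redirect: {...}         # redirect the request; cannot be used together with route or direct_response
              direct_response: {...}  # respond to the request directly; cannot be used together with route or redirect
            virtual_clusters: []      # list of virtual clusters defined for this virtual host, used for collecting statistics
            ... ...
```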

3. VirtualHost configuration

The route configuration (route_config) that holds the virtual hosts:

```
{
  "name": "...",
  "virtual_hosts": [],    # the virtual host configuration is detailed below
  "vhds": "{...}",
  "internal_only_headers": [],
  "response_headers_to_add": [],
  "response_headers_to_remove": [],
  "request_headers_to_add": [],
  "request_headers_to_remove": [],
  "most_specific_header_mutations_wins": "...",
  "validate_clusters": "{...}",
  "max_direct_response_body_size_bytes": "{...}"
}
```

virtual_hosts

```
{
  "name": "...",
  "domains": [],
  "routes": [],
  "require_tls": "...",
  "virtual_clusters": [],
  "rate_limits": [],
  "request_headers_to_add": [],
  "request_headers_to_remove": [],
  "response_headers_to_add": [],
  "response_headers_to_remove": [],
  "cors": "{...}",
  "typed_per_filter_config": "{...}",
  "include_request_attempt_count": "...",
  "include_attempt_count_in_response": "...",
  "retry_policy": "{...}",
  "hedge_policy": "{...}",
  "per_request_buffer_limit_bytes": "{...}"
}
```

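4. When matching a route, Envoy works as follows:

(1) It checks the request's host header (or :authority) against the virtual hosts defined in the route configuration;

(2) Within the matched virtual host, it checks the match condition of each route entry in order and stops at the first match (short-circuit);

(3) If virtual clusters are defined, it checks each virtual cluster of the virtual host in order and stops at the first match;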


The following configuration focuses on the basic mechanics of match and on the different routing actions:

```yaml
virtual_hosts:
- name: vh_001
  domains: ["ilinux.io", "*.ilinux.io", "ilinux.*"]
  routes:
  # exact match on "/service/blue": route to the blue cluster
  - match:
      path: "/service/blue"
    route:
      cluster: blue
  # regex match on "^/service/.*blue$": redirect to /service/blue, which is then routed to the blue cluster
  - match:
      safe_regex:
        google_re2: {}
        regex: "^/service/.*blue$"
    redirect:
      path_redirect: "/service/blue"
  # prefix match on "/service/yellow": respond directly with "This page will be provided soon later.\n"
  - match:
      prefix: "/service/yellow"
    direct_response:
      status: 200
      body:
        inline_string: "This page will be provided soon later.\n"
  # default: route everything else to the red cluster
  - match:
      prefix: "/"
    route:
      cluster: red
- name: vh_002
  domains: ["*"]
  routes:
  # match "/": route to the gray cluster
  - match:
      prefix: "/"
    route:
      cluster: gray
```
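The first-match behavior described in the matching steps above can be sketched in Python. This is a minimal model, not Envoy's implementation. Each route carries exactly one of prefix, path, or safe_regex. Python's re module stands in for RE2, which behaves the same for this pattern. The action strings such as "route:blue" are illustrative labels. The routes mirror vh_001 in the configuration above.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Route:
    """A route entry; exactly one of prefix, path or safe_regex is set."""
    action: str                       # illustrative label, e.g. "route:blue"
    prefix: Optional[str] = None
    path: Optional[str] = None
    safe_regex: Optional[str] = None

    def matches(self, raw_path: str) -> bool:
        # path and safe_regex are compared with the query string removed
        no_query = raw_path.split("?", 1)[0]
        if self.path is not None:
            return no_query == self.path
        if self.prefix is not None:
            # prefix only has to match the beginning of :path
            return raw_path.startswith(self.prefix)
        if self.safe_regex is not None:
            # the whole path must match, so use fullmatch rather than search
            return re.fullmatch(self.safe_regex, no_query) is not None
        return False


def select_route(routes: List[Route], raw_path: str) -> Optional[str]:
    """Check routes in order and return the first match (short-circuit)."""
    for route in routes:
        if route.matches(raw_path):
            return route.action
    return None  # no route matched; Envoy answers such requests with 404


# Routes modeled on vh_001 in the configuration above
VH_001 = [
    Route("route:blue", path="/service/blue"),
    Route("redirect:/service/blue", safe_regex=r"^/service/.*blue$"),
    Route("direct_response:200", prefix="/service/yellow"),
    Route("route:red", prefix="/"),
]

if __name__ == "__main__":
    for p in ["/service/blue", "/service/dark_blue", "/service/yellow", "/service/a"]:
        print(p, "->", select_route(VH_001, p))
```

Because the search short-circuits, /service/blue is served by the first route even though it also matches the regex in the second route. For the same reason, the catch-all prefix "/" has to come last; placed first, it would shadow every other route.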

The following configuration focuses on matching by headers and query parameters:

```yaml
virtual_hosts:
- name: vh_001
  domains: ["*"]
  routes:
  # header X-Canary exactly equal to "true": route to the demoappv12 cluster
  - match:
      prefix: "/"
      headers:
      - name: X-Canary
        exact_match: "true"
    route:
      cluster: demoappv12
  # query parameter "username" starting with "vip_": route to the demoappv11 cluster
  - match:
      prefix: "/"
      query_parameters:
      - name: "username"
        string_match:
          prefix: "vip_"
    route:
      cluster: demoappv11
  # everything else: route to the demoappv10 cluster
  - match:
      prefix: "/"
    route:
      cluster: demoappv10
```

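1. Domain search order

1) The request's Host header value is compared against the domains of the VirtualHosts in the route table, and the search stops at the first match. Matching follows the priority order below, not the order in which the virtual hosts are listed;

2) Domain search order:

(1) Exact domain names: www.ilinux.io.

(2) Suffix domain wildcards: *.ilinux.io or *-envoy.ilinux.io.

(3) Prefix domain wildcards: ilinux.* or ilinux-*.

(4) Special wildcard * matching any domain.

Within the wildcard categories, the longest wildcard matches first. A wildcard must also match at least one character; for example, *-envoy.ilinux.io does not match -envoy.ilinux.io.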
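Below is a minimal Python sketch of this search order. It assumes the Host value carries no port and that the comparison is case-insensitive. `select_virtual_host` and the dictionary layout (virtual host name mapped to its domains list) are hypothetical. The sample virtual hosts mirror vh_001 and vh_002 from the configuration above.

```python
from typing import Dict, List, Optional, Tuple


def select_virtual_host(vhosts: Dict[str, List[str]], host: str) -> Optional[str]:
    """Pick a virtual host for `host` following the domain search order.

    vhosts maps a (hypothetical) virtual host name to its domains list.
    """
    host = host.lower()  # assumption: comparison is case-insensitive
    exact: List[Tuple[str, str]] = []
    suffix: List[Tuple[str, str]] = []   # "*.ilinux.io" stored as ".ilinux.io"
    prefix: List[Tuple[str, str]] = []   # "ilinux.*" stored as "ilinux."
    catch_all: Optional[str] = None
    for name, domains in vhosts.items():
        for domain in domains:
            domain = domain.lower()
            if domain == "*":
                catch_all = name
            elif domain.startswith("*"):
                suffix.append((domain[1:], name))
            elif domain.endswith("*"):
                prefix.append((domain[:-1], name))
            else:
                exact.append((domain, name))

    # (1) exact domain names
    for domain, name in exact:
        if host == domain:
            return name
    # (2) suffix wildcards, longest first; "*" must match at least one character
    for tail, name in sorted(suffix, key=lambda t: len(t[0]), reverse=True):
        if host.endswith(tail) and len(host) > len(tail):
            return name
    # (3) prefix wildcards, longest first
    for head, name in sorted(prefix, key=lambda t: len(t[0]), reverse=True):
        if host.startswith(head) and len(host) > len(head):
            return name
    # (4) the special wildcard "*"
    return catch_all


if __name__ == "__main__":
    vhosts = {"vh_001": ["ilinux.io", "*.ilinux.io", "ilinux.*"], "vh_002": ["*"]}
    for h in ["www.magedu.com", "www.ilinux.io", "ilinux.io", "ilinux.com"]:
        print(h, "->", select_virtual_host(vhosts, h))
```

With these virtual hosts, www.magedu.com falls through to the "*" of vh_002. By contrast, www.ilinux.io, ilinux.io, and ilinux.com all select vh_001. This is consistent with what the first lab observes for www.magedu.com and www.ilinux.io.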

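VII. Basic route configuration

(1) The URL is matched using exactly one of prefix, path, safe_regex, or connect_matcher. Note: the regex field of earlier versions has been replaced by safe_regex.

(2) Requests can additionally be matched on headers and query_parameters.

(3) A matched request is handled by one of three routing mechanisms: (1) redirect (2) direct_response (3) route

2. route

(1) The traffic target is defined with exactly one of cluster, weighted_clusters, or cluster_header;

(2) During forwarding, the URL can be rewritten with prefix_rewrite and the Host with a host rewrite (host_rewrite_literal and related fields in the v3 API);

(3) Additional traffic management can be configured, for example resilience (timeout, retry_policy), testing (request_mirror_policies), flow control (rate_limits), and access control (cors).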
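prefix_rewrite replaces the matched prefix with the configured value and keeps the rest of the path, including the query string. A minimal sketch of that substitution; the function name and the sample prefixes are hypothetical.

```python
def apply_prefix_rewrite(path: str, match_prefix: str, prefix_rewrite: str) -> str:
    """Swap the matched prefix for prefix_rewrite; the rest of :path is kept."""
    if not path.startswith(match_prefix):
        raise ValueError(f"{path!r} does not start with {match_prefix!r}")
    return prefix_rewrite + path[len(match_prefix):]


if __name__ == "__main__":
    # hypothetical route: match.prefix "/service/" with prefix_rewrite "/"
    print(apply_prefix_rewrite("/service/blue/index.html", "/service/", "/"))
    # trailing-slash pitfall: prefix "/prefix" rewritten to "/"
    print(apply_prefix_rewrite("/prefix/etc", "/prefix", "/"))
```

Note the trailing slash. Rewriting the prefix /prefix to / turns /prefix/etc into //etc. For this reason, the Envoy documentation recommends separate routes for /prefix/ and /prefix when a prefix has to be stripped cleanly.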

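1. Route configuration framework

1) A request that satisfies the match condition is handled in one of three ways:

(1) route: route it to the specified destination

(2) redirect: redirect it to the specified location

(3) direct_response: respond directly with the given content

2) A route can also add or remove headers in request and response messages as needed

```
{
  "name": "...",
  "match": "{...}",            # match condition
  "route": "{...}",            # traffic routing target; mutually exclusive with redirect and direct_response
  "redirect": "{...}",         # redirect the request; mutually exclusive with route and direct_response
  "direct_response": "{...}",  # respond directly with the given content; mutually exclusive with route and redirect
  "metadata": "{...}",         # extra metadata for the route, commonly used by configuration, stats, and logging features; the related filter usually has to be defined first
  "decorator": "{...}",
  "typed_per_filter_config": "{...}",
  "request_headers_to_add": [],
  "request_headers_to_remove": [],
  "response_headers_to_add": [],
  "response_headers_to_remove": [],
  "tracing": "{...}",
  "per_request_buffer_limit_bytes": "{...}"
}
```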

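1. A match condition is a check that selects the requests satisfying it so they can be handled as required, for example routed, redirected, or answered directly. Exactly one of the path conditions prefix, path, or safe_regex (or connect_matcher) must be defined.

2. Besides that one required condition, the match can be further restricted by:

(1) Case sensitivity (case_sensitive);

(2) Matching the fraction given by a runtime key (runtime_fraction) for traffic shifting; traffic is migrated by changing the runtime value step by step;

(3) Header-based routing: matching a specified set of headers (headers);

(4) Parameter-based routing: matching a specified set of URL query parameters (query_parameters);

(5) Matching gRPC traffic only (grpc);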
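runtime_fraction can be pictured as a per-request draw whose odds are set at runtime. The sketch below makes two assumptions. First, the check is `random_value % D < N` for a fraction N/D. Second, a value stored under runtime_key overrides the default numerator. The runtime key name is hypothetical, and Envoy itself draws the random value.

```python
from dataclasses import dataclass
from typing import Dict

# FractionalPercent denominators as named in the Envoy API
DENOMINATORS = {"HUNDRED": 100, "TEN_THOUSAND": 10_000, "MILLION": 1_000_000}


@dataclass
class RuntimeFraction:
    runtime_key: str
    numerator: int                 # default_value.numerator
    denominator: str = "HUNDRED"   # default_value.denominator


def runtime_fraction_matches(frac: RuntimeFraction,
                             runtime: Dict[str, int],
                             random_value: int) -> bool:
    """Return True if this request falls inside the configured fraction.

    Assumptions: a runtime entry for runtime_key overrides the numerator,
    and the check is random_value % D < N, so N of every D values pass.
    """
    numerator = runtime.get(frac.runtime_key, frac.numerator)
    return random_value % DENOMINATORS[frac.denominator] < numerator


if __name__ == "__main__":
    frac = RuntimeFraction("routing.traffic_shift.demo", numerator=10)  # hypothetical key
    for share in (0, 10, 50, 100):
        runtime = {frac.runtime_key: share}
        hits = sum(runtime_fraction_matches(frac, runtime, r) for r in range(100))
        print(share, hits)
```

Raising the runtime value step by step, for example 0, 10, 50, 100, moves a growing share of requests onto this route. Requests that fail the check fall through to the next route. This is the gradual traffic migration described in (2).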

```
{
  "prefix": "...",            # path prefix match
  "path": "...",              # exact path match
  "safe_regex": {             # the entire path (excluding the query string) must match
    "google_re2": "{...}",    # the specified regular expression
    "regex": "..."
  },
  "connect_matcher": "{...}",
  "case_sensitive": "{...}",
  "runtime_fraction": "{...}",
  "headers": [],
  "query_parameters": [],
  "grpc": "{...}",
  "tls_context": "{...}",
  "dynamic_metadata": []
}
```

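8.2 Header-based route matching (route.HeaderMatcher)

1) The router checks the request's headers against all headers specified in the route configuration:

(1) If every header specified in the route is present in the request with the same value, the route matches;

(2) If no value is specified for a header in the configuration, the check is based on whether the header is present;

2) For each header, the check can be defined by exactly one of exact_match, safe_regex_match, range_match, present_match, prefix_match, suffix_match, contains_match, and string_match;

```
# routes.match.headers
{
  "name": "...",
  "exact_match": "...",         # exact value match
  "safe_regex_match": "{...}",  # regular expression match
  "range_match": "{...}",       # range match: checks whether the header value falls within the specified range
  "present_match": "...",       # presence match: checks whether the header exists
  "prefix_match": "...",        # value prefix match
  "suffix_match": "...",        # value suffix match
  "contains_match": "...",      # checks whether the header value contains the specified string
  "string_match": "{...}",      # checks whether the header value matches the specified string matcher
  "invert_match": "..."         # whether to invert the result, i.e. treat a failed check as "true"; defaults to false
}
```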
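The header checks above combine as a logical AND across all configured headers. Below is a Python sketch of a subset of HeaderMatcher, under these assumptions:
- request header names are stored lowercase;
- string_match is left out;
- Python's re stands in for RE2;
- an absent header fails every check except an inverted presence check. This is our reading of Envoy's behavior in the v1.20 era, and newer releases may differ.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class HeaderMatcher:
    """Subset of route.HeaderMatcher; set at most one value matcher."""
    name: str
    exact_match: Optional[str] = None
    prefix_match: Optional[str] = None
    suffix_match: Optional[str] = None
    contains_match: Optional[str] = None
    safe_regex_match: Optional[str] = None
    range_match: Optional[Tuple[int, int]] = None  # [start, end)
    invert_match: bool = False

    def matches(self, headers: Dict[str, str]) -> bool:
        value = headers.get(self.name.lower())  # header dict keys assumed lowercase
        presence_only = all(v is None for v in (
            self.exact_match, self.prefix_match, self.suffix_match,
            self.contains_match, self.safe_regex_match, self.range_match))
        if value is None:
            # absent header: only an inverted presence check succeeds
            return self.invert_match and presence_only
        if self.exact_match is not None:
            result = value == self.exact_match
        elif self.prefix_match is not None:
            result = value.startswith(self.prefix_match)
        elif self.suffix_match is not None:
            result = value.endswith(self.suffix_match)
        elif self.contains_match is not None:
            result = self.contains_match in value
        elif self.safe_regex_match is not None:
            result = re.fullmatch(self.safe_regex_match, value) is not None
        elif self.range_match is not None:
            start, end = self.range_match
            try:
                result = start <= int(value) < end
            except ValueError:
                result = False  # non-integer values never fall in a range
        else:
            result = True  # no value given: presence check (present_match)
        return result != self.invert_match


def headers_match(matchers: List[HeaderMatcher], headers: Dict[str, str]) -> bool:
    """A route matches only if every configured header matcher matches."""
    return all(m.matches(headers) for m in matchers)
```

For example, `headers_match([HeaderMatcher("X-Canary", exact_match="true")], {"x-canary": "true"})` models the X-Canary route of the second lab.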

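8.3 Query-parameter-based route matching (route.QueryParameterMatcher)

The router checks the query string in the request's path header against all query parameters specified in the route configuration.

(1) Query parameter matching treats the query string of the request URL as a list of "key" or "key=value" elements separated by &;

(2) If query parameters are specified, all of them must match the query string in the URL;

(3) The match condition is specified as exactly one of value, regex, string_match, or present_match (the v3 API keeps only string_match and present_match; value and regex belonged to the older v2 API)

```yaml
# routes.match.query_parameters
query_parameters:
- name: "..."
  string_match:       # string match on the parameter value, using exactly one of the five checks below
    exact: "..."
    prefix: "..."
    suffix: "..."
    contains: "..."
    safe_regex: {...}
    ignore_case: "..."
  present_match: "..."
```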
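Below is a Python sketch of the query parameter check. It assumes no percent-decoding and that the first occurrence of a repeated key wins. `parse_query`, `query_params_match`, and the dataclasses are hypothetical helpers, and Python's re stands in for RE2. The username/vip_ matcher mirrors the second lab.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class StringMatch:
    """Subset of StringMatcher: set exactly one of the five checks."""
    exact: Optional[str] = None
    prefix: Optional[str] = None
    suffix: Optional[str] = None
    contains: Optional[str] = None
    safe_regex: Optional[str] = None
    ignore_case: bool = False  # not applied to safe_regex

    def matches(self, value: str) -> bool:
        if self.safe_regex is not None:
            return re.fullmatch(self.safe_regex, value) is not None
        fold = str.lower if self.ignore_case else (lambda s: s)
        v = fold(value)
        if self.exact is not None:
            return v == fold(self.exact)
        if self.prefix is not None:
            return v.startswith(fold(self.prefix))
        if self.suffix is not None:
            return v.endswith(fold(self.suffix))
        if self.contains is not None:
            return fold(self.contains) in v
        return False


@dataclass
class QueryParameterMatcher:
    name: str
    string_match: Optional[StringMatch] = None
    present_match: bool = False  # presence only: satisfied once the key exists


def parse_query(raw_path: str) -> Dict[str, str]:
    """Split the query string on "&" into "key" or "key=value" elements."""
    params: Dict[str, str] = {}
    query = raw_path.partition("?")[2]
    for element in query.split("&"):
        if not element:
            continue
        key, _, value = element.partition("=")
        params.setdefault(key, value)  # assumption: first occurrence wins
    return params


def query_params_match(matchers: List[QueryParameterMatcher], raw_path: str) -> bool:
    """Every configured query parameter must be present and match."""
    params = parse_query(raw_path)
    for m in matchers:
        if m.name not in params:
            return False
        if m.string_match is not None and not m.string_match.matches(params[m.name]):
            return False
    return True
```

For example, `query_params_match([QueryParameterMatcher("username", StringMatch(prefix="vip_"))], "/hostname?username=vip_mageedu")` holds. This corresponds to the requests answered by demoappv11 in the second lab.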

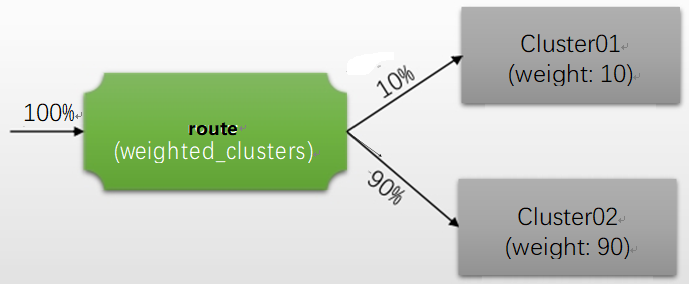

Matched traffic can be routed to one of the following three targets:

(1) cluster: route to the specified upstream cluster;

(2) cluster_header: route to the upstream cluster named by the value of the request header specified by cluster_header;

(3) weighted_clusters: route requests to multiple upstream clusters by weight, splitting the traffic;

Note: with weighted_clusters, the weights of all clusters must add up to 100%. In the v3 API, they must add up to total_weight, which defaults to 100.

```
{
  "cluster": "...",              # route to the specified target cluster
  "cluster_header": "...",       # route to the cluster named by the value of this request header
  "weighted_clusters": "{...}",  # route to multiple upstream clusters, split by weight
  "cluster_not_found_response_code": "...",
  "metadata_match": "{...}",
  "prefix_rewrite": "...",
  "regex_rewrite": "{...}",
  "host_rewrite_literal": "...",
  "auto_host_rewrite": "{...}",
  "host_rewrite_header": "...",
  "host_rewrite_path_regex": "{...}",
  "timeout": "{...}",
  "idle_timeout": "{...}",
  "retry_policy": "{...}",
  "request_mirror_policies": [],
  "priority": "...",
  "rate_limits": [],
  "include_vh_rate_limits": "{...}",
  "hash_policy": [],
  "cors": "{...}",
  "max_grpc_timeout": "{...}",
  "grpc_timeout_offset": "{...}",
  "upgrade_configs": [],
  "internal_redirect_policy": "{...}",
  "internal_redirect_action": "...",
  "max_internal_redirects": "{...}",
  "hedge_policy": "{...}",
  "max_stream_duration": "{...}"
}
```
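How a route action resolves to a single upstream cluster can be sketched as follows. The weighted selection draws a number in [0, total_weight) and walks the cumulative weights. `random_value` is passed in so the result is deterministic. The header name x-target-cluster and the 90/10 split are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class RouteAction:
    """Exactly one of cluster, cluster_header, weighted_clusters is used."""
    cluster: Optional[str] = None
    cluster_header: Optional[str] = None
    weighted_clusters: List[Tuple[str, int]] = field(default_factory=list)
    total_weight: int = 100  # the weights must add up to this value


def pick_cluster(action: RouteAction, headers: Dict[str, str],
                 random_value: int) -> Optional[str]:
    """Resolve the upstream cluster for one request.

    random_value stands in for the random number drawn per request.
    """
    if action.cluster is not None:
        return action.cluster
    if action.cluster_header is not None:
        # None means the header is missing; Envoy then answers with an error
        return headers.get(action.cluster_header.lower())
    if sum(w for _, w in action.weighted_clusters) != action.total_weight:
        raise ValueError("cluster weights must add up to total_weight")
    point = random_value % action.total_weight
    for name, weight in action.weighted_clusters:
        if point < weight:
            return name
        point -= weight
    raise AssertionError("unreachable when weights sum to total_weight")


if __name__ == "__main__":
    split = RouteAction(weighted_clusters=[("demoappv10", 90), ("demoappv11", 10)])
    picks = [pick_cluster(split, {}, r) for r in range(100)]
    print(picks.count("demoappv10"), picks.count("demoappv11"))
```

With a 90/10 split, values 0 to 89 (mod 100) land on demoappv10 and values 90 to 99 land on demoappv11. So over any run of 100 consecutive values, exactly 10 requests go to demoappv11. In Envoy the random value is drawn per request, so the split holds only on average.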

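1) By default, the route responds to the request with a 301, permanently redirecting it from one URL to another

2) Envoy supports the following redirect behaviors:

(1) Scheme redirect: https_redirect or scheme_redirect; only one of the two can be used;

(2) Host redirect: host_redirect;

(3) Port redirect: port_redirect;

(4) Path redirect: path_redirect;

(5) Path prefix redirect: prefix_rewrite;

(6) Redirect defined by a regular expression pattern: regex_rewrite;

(7) Response code: response_code, 301 by default;

(8) strip_query: whether to remove the query string from the URL during the redirect; false by default;

Of these, path_redirect, prefix_rewrite, and regex_rewrite are mutually exclusive.

```
{
  "https_redirect": "...",
  "scheme_redirect": "...",
  "host_redirect": "...",
  "port_redirect": "...",
  "path_redirect": "...",
  "prefix_rewrite": "...",
  "regex_rewrite": "{...}",
  "response_code": "...",
  "strip_query": "..."
}
```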
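Below is a sketch of how these fields combine into the Location header. It assumes the incoming host has no port, and it does not model regex_rewrite or Envoy's default-port handling. `build_redirect` is a hypothetical helper. For the first lab's regex route (path_redirect "/service/blue"), it produces the same Location that curl -I shows: http://www.ilinux.io/service/blue.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class RedirectAction:
    https_redirect: bool = False
    scheme_redirect: Optional[str] = None
    host_redirect: Optional[str] = None
    port_redirect: Optional[int] = None
    path_redirect: Optional[str] = None
    prefix_rewrite: Optional[str] = None
    response_code: int = 301       # MOVED_PERMANENTLY by default
    strip_query: bool = False


def build_redirect(action: RedirectAction, scheme: str, host: str,
                   raw_path: str, matched_prefix: str = "") -> Tuple[int, str]:
    """Return (status code, Location URL) for a redirect route.

    Assumes `host` carries no port; default-port handling is not modeled.
    """
    if action.https_redirect and action.scheme_redirect is not None:
        raise ValueError("https_redirect and scheme_redirect are mutually exclusive")
    if action.path_redirect is not None and action.prefix_rewrite is not None:
        raise ValueError("path_redirect and prefix_rewrite are mutually exclusive")

    new_scheme = "https" if action.https_redirect else (action.scheme_redirect or scheme)
    authority = action.host_redirect or host
    if action.port_redirect is not None:
        authority = f"{authority}:{action.port_redirect}"

    path, _, query = raw_path.partition("?")
    if action.path_redirect is not None:
        path = action.path_redirect
    elif action.prefix_rewrite is not None:
        path = action.prefix_rewrite + path[len(matched_prefix):]

    location = f"{new_scheme}://{authority}{path}"
    if query and not action.strip_query:
        location += "?" + query  # the query string is kept unless strip_query is set
    return action.response_code, location


if __name__ == "__main__":
    lab = RedirectAction(path_redirect="/service/blue")
    print(build_redirect(lab, "http", "www.ilinux.io", "/service/dark_blue"))
```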

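Envoy can also respond to requests directly:

```
{
  "status": "...",
  "body": "{...}"
}
```

2) status: the HTTP status code of the response; body: the response body, taken from exactly one of the following sources:

```
{
  "filename": "...",
  "inline_bytes": "...",
  "inline_string": "..."
}
```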


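IX. Summary

1. Basic route configuration

1) Specify the match condition simply with prefix, path, or safe_regex in match

2) Redirect the matched requests, respond to them directly, or route them to the specified target cluster

2. Advanced routing policies

1) Advanced matching

(1) Specify the match condition with prefix, path, or safe_regex in match, together with the advanced matching mechanisms below;

(2) Combine with headers to route on specified headers, for example on a cookie, grouping its values and routing each group to a different target;

(3) Combine with query_parameters to route on specified parameters, for example on a group parameter, grouping its values and routing each group to a different target;

(4) Tip: multiple conditions can be combined flexibly to build complex matching rules

2) Complex routing targets

(1) Route to the cluster named by the value of the request header specified in cluster_header

(2) weighted_clusters: split requests across target clusters according to their weights

(3) Configure advanced route attributes such as retries, timeouts, CORS, and rate limiting;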

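X. Lab exercises

1. httproute-simple-match

Lab environment

envoy: Front Proxy, at 172.31.50.10

7 backend services:

(1) light_blue and dark_blue: the blue cluster in Envoy

(2) light_red and dark_red: the red cluster in Envoy

(3) light_green and dark_green: the green cluster in Envoy

(4) gray: the gray cluster in Envoy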

front-envoy.yaml

```yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: vh_001
              domains: ["ilinux.io", "*.ilinux.io", "ilinux.*"]
              routes:
              - match:
                  path: "/service/blue"
                route:
                  cluster: blue
              - match:
                  safe_regex:
                    google_re2: {}
                    regex: "^/service/.*blue$"
                redirect:
                  path_redirect: "/service/blue"
              - match:
                  prefix: "/service/yellow"
                direct_response:
                  status: 200
                  body:
                    inline_string: "This page will be provided soon later.\n"
              - match:
                  prefix: "/"
                route:
                  cluster: red
            - name: vh_002
              domains: ["*"]
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: gray
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: blue
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    http2_protocol_options: {}
    load_assignment:
      cluster_name: blue
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: blue
                port_value: 80

  - name: red
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    http2_protocol_options: {}
    load_assignment:
      cluster_name: red
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: red
                port_value: 80

  - name: green
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    http2_protocol_options: {}
    load_assignment:
      cluster_name: green
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: green
                port_value: 80

  - name: gray
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    http2_protocol_options: {}
    load_assignment:
      cluster_name: gray
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: gray
                port_value: 80
```

docker-compose.yaml

```yaml
version: '3'

services:
  front-envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
      - ENVOY_UID=0
    volumes:
      - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.50.10
    expose:
      # Expose ports 80 (for general traffic) and 9901 (for the admin server)
      - "80"
      - "9901"

  light_blue:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        aliases:
          - light_blue
          - blue
    environment:
      - SERVICE_NAME=light_blue
    expose:
      - "80"

  dark_blue:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        aliases:
          - dark_blue
          - blue
    environment:
      - SERVICE_NAME=dark_blue
    expose:
      - "80"

  light_green:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        aliases:
          - light_green
          - green
    environment:
      - SERVICE_NAME=light_green
    expose:
      - "80"

  dark_green:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        aliases:
          - dark_green
          - green
    environment:
      - SERVICE_NAME=dark_green
    expose:
      - "80"

  light_red:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        aliases:
          - light_red
          - red
    environment:
      - SERVICE_NAME=light_red
    expose:
      - "80"

  dark_red:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        aliases:
          - dark_red
          - red
    environment:
      - SERVICE_NAME=dark_red
    expose:
      - "80"

  gray:
    image: ikubernetes/servicemesh-app:latest
    networks:
      envoymesh:
        aliases:
          - gray
          - grey
    environment:
      - SERVICE_NAME=gray
    expose:
      - "80"

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.50.0/24
```

Route configuration

```yaml
virtual_hosts:
- name: vh_001
  domains: ["ilinux.io", "*.ilinux.io", "ilinux.*"]
  routes:
  - match:
      path: "/service/blue"
    route:
      cluster: blue
  - match:
      safe_regex:
        google_re2: {}
        regex: "^/service/.*blue$"
    redirect:
      path_redirect: "/service/blue"
  - match:
      prefix: "/service/yellow"
    direct_response:
      status: 200
      body:
        inline_string: "This page will be provided soon later.\n"
  - match:
      prefix: "/"
    route:
      cluster: red
- name: vh_002
  domains: ["*"]
  routes:
  - match:
      prefix: "/"
    route:
      cluster: gray
```

Verification

docker-compose up

Testing domain matching

```shell
# First, use a domain that cannot match vh_001
root@test:~# curl -H "Host: www.magedu.com" http://172.31.50.10/service/a
Hello from App behind Envoy (service gray)! hostname: e5f05afa0a68 resolved hostname: 172.31.50.3
root@test:~# curl -v -H "Host: www.magedu.com" http://172.31.50.10/service/a
*   Trying 172.31.50.10:80...
* TCP_NODELAY set
* Connected to 172.31.50.10 (172.31.50.10) port 80 (#0)
> GET /service/a HTTP/1.1
> Host: www.magedu.com
> User-Agent: curl/7.68.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< content-type: text/html; charset=utf-8
< content-length: 98
< server: envoy
< date: Fri, 03 Dec 2021 07:04:02 GMT
< x-envoy-upstream-service-time: 3
<
Hello from App behind Envoy (service gray)! hostname: e5f05afa0a68 resolved hostname: 172.31.50.3
* Connection #0 to host 172.31.50.10 left intact
# vh_001 was not matched; vh_002 was

# Next, use a domain that matches vh_001
root@test:~# curl -H "Host: www.ilinux.io" http://172.31.50.10/service/a
Hello from App behind Envoy (service light_red)! hostname: 235f09398734 resolved hostname: 172.31.50.8
root@test:~# curl -H "Host: www.ilinux.io" http://172.31.50.10/service/a
Hello from App behind Envoy (service dark_red)! hostname: 171330891e5c resolved hostname: 172.31.50.2
# the response comes from the backend cluster of vh_001 (red)
```

Testing route matching

```shell
# First, request "/service/blue": the response comes straight from the cluster
root@test:~# curl -H "Host: www.ilinux.io" http://172.31.50.10/service/blue
Hello from App behind Envoy (service dark_blue)! hostname: cf263713476d resolved hostname: 172.31.50.5
root@test:~# curl -H "Host: www.ilinux.io" http://172.31.50.10/service/blue
Hello from App behind Envoy (service light_blue)! hostname: ce070602a111 resolved hostname: 172.31.50.6
root@test:~# curl -H "Host: www.ilinux.io" http://172.31.50.10/service/blue
# matched, so the backend cluster's response is returned directly

# Next, request "/service/dark_blue": a redirect is returned
root@test:~# curl -I -H "Host: www.ilinux.io" http://172.31.50.10/service/dark_blue
HTTP/1.1 301 Moved Permanently
location: http://www.ilinux.io/service/blue
date: Fri, 03 Dec 2021 07:08:35 GMT
server: envoy
transfer-encoding: chunked

# Then request "/service/yellow": Envoy answers directly without involving any backend cluster
root@test:~# curl -H "Host: www.ilinux.io" http://172.31.50.10/service/yellow
This page will be provided soon later.
```

2. httproute-headers-match

envoy: Front Proxy, at 172.31.52.10

5 backend services:

(1) demoapp-v1.0-1 and demoapp-v1.0-2: the demoappv10 cluster in Envoy

(2) demoapp-v1.1-1 and demoapp-v1.1-2: the demoappv11 cluster in Envoy

(3) demoapp-v1.2-1: the demoappv12 cluster in Envoy

front-envoy.yaml

```yaml
admin:
  profile_path: /tmp/envoy.prof
  access_log_path: /tmp/admin_access.log
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - name: listener_0
    address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          stat_prefix: ingress_http
          codec_type: AUTO
          route_config:
            name: local_route
            virtual_hosts:
            - name: vh_001
              domains: ["*"]
              routes:
              - match:
                  prefix: "/"
                  headers:
                  - name: X-Canary
                    exact_match: "true"
                route:
                  cluster: demoappv12
              - match:
                  prefix: "/"
                  query_parameters:
                  - name: "username"
                    string_match:
                      prefix: "vip_"
                route:
                  cluster: demoappv11
              - match:
                  prefix: "/"
                route:
                  cluster: demoappv10
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: demoappv10
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: demoappv10
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: demoappv10
                port_value: 80

  - name: demoappv11
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: demoappv11
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: demoappv11
                port_value: 80

  - name: demoappv12
    connect_timeout: 0.25s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: demoappv12
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: demoappv12
                port_value: 80
```

docker-compose.yaml

```yaml
version: '3'

services:
  front-envoy:
    image: envoyproxy/envoy-alpine:v1.20.0
    environment:
      - ENVOY_UID=0
    volumes:
      - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.52.10
    expose:
      # Expose ports 80 (for general traffic) and 9901 (for the admin server)
      - "80"
      - "9901"

  demoapp-v1.0-1:
    image: ikubernetes/demoapp:v1.0
    hostname: demoapp-v1.0-1
    networks:
      envoymesh:
        aliases:
          - demoappv10
    expose:
      - "80"

  demoapp-v1.0-2:
    image: ikubernetes/demoapp:v1.0
    hostname: demoapp-v1.0-2
    networks:
      envoymesh:
        aliases:
          - demoappv10
    expose:
      - "80"

  demoapp-v1.1-1:
    image: ikubernetes/demoapp:v1.1
    hostname: demoapp-v1.1-1
    networks:
      envoymesh:
        aliases:
          - demoappv11
    expose:
      - "80"

  demoapp-v1.1-2:
    image: ikubernetes/demoapp:v1.1
    hostname: demoapp-v1.1-2
    networks:
      envoymesh:
        aliases:
          - demoappv11
    expose:
      - "80"

  demoapp-v1.2-1:
    image: ikubernetes/demoapp:v1.2
    hostname: demoapp-v1.2-1
    networks:
      envoymesh:
        aliases:
          - demoappv12
    expose:
      - "80"

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.52.0/24
```

Routes used

```yaml
virtual_hosts:
- name: vh_001
  domains: ["*"]
  routes:
  - match:
      prefix: "/"
      headers:
      - name: X-Canary
        exact_match: "true"
    route:
      cluster: demoappv12
  - match:
      prefix: "/"
      query_parameters:
      - name: "username"
        string_match:
          prefix: "vip_"
    route:
      cluster: demoappv11
  - match:
      prefix: "/"
    route:
      cluster: demoappv10
```

Verification

docker-compose up

Testing from a cloned terminal window

```shell
# Without any special request attributes, the default demoappv10 answers
root@test:~# curl 172.31.52.10/hostname
ServerName: demoapp-v1.0-2
root@test:~# curl 172.31.52.10/hostname
ServerName: demoapp-v1.0-1
root@test:~# curl 172.31.52.10/hostname
ServerName: demoapp-v1.0-2
root@test:~# curl 172.31.52.10/hostname
ServerName: demoapp-v1.0-1
```

Testing requests with the "X-Canary: true" header

```shell
# A request carrying the specific header is answered by demoappv12
root@test:~# curl -H "X-Canary: true" 172.31.52.10/hostname
ServerName: demoapp-v1.2-1
```

Testing a specific query condition

```shell
# A request carrying the specific query parameter is answered by demoappv11
root@test:~# curl 172.31.52.10/hostname?username=vip_mageedu
ServerName: demoapp-v1.1-1
root@test:~# curl 172.31.52.10/hostname?username=vip_mageedu
ServerName: demoapp-v1.1-2
root@test:~# curl 172.31.52.10/hostname?username=vip_ilinux
ServerName: demoapp-v1.1-1
root@test:~# curl 172.31.52.10/hostname?username=vip_ilinux
ServerName: demoapp-v1.1-2
```