Setup

https://www.cnblogs.com/Rostov/p/13530423.html

https://blog.csdn.net/weixin_42831855/article/details/91980398

A walkthrough of the compose.yml file: https://www.cnblogs.com/Rostov/p/13528918.html

The yml file is attached below:

    version: '2'

    services:
      zoo1:
        image: zookeeper
        restart: always
        hostname: zoo1
        container_name: zoo1
        ports:
          - 2184:2181
        volumes:
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo1/data:/data"
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo1/datalog:/datalog"
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo1/conf:/conf"
        environment:
          ZOO_MY_ID: 1
          ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
        networks:
          zookeeper_kafka:
            ipv4_address: 172.19.0.11

      zoo2:
        image: zookeeper
        restart: always
        hostname: zoo2
        container_name: zoo2
        ports:
          - 2185:2181
        volumes:
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo2/data:/data"
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo2/datalog:/datalog"
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo2/conf:/conf"
        environment:
          ZOO_MY_ID: 2
          ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181
        networks:
          zookeeper_kafka:
            ipv4_address: 172.19.0.12

      zoo3:
        image: zookeeper
        restart: always
        hostname: zoo3
        container_name: zoo3
        ports:
          - 2186:2181
        volumes:
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo3/data:/data"
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo3/datalog:/datalog"
          - "/Users/sun9/dockerKafkaService/volume/zkcluster/zoo3/conf:/conf"
        environment:
          ZOO_MY_ID: 3
          ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
        networks:
          zookeeper_kafka:
            ipv4_address: 172.19.0.13

      kafka1:
        image: wurstmeister/kafka
        restart: always
        hostname: kafka1
        container_name: kafka1
        ports:
          - 9092:9092
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ADVERTISED_HOST_NAME: kafka1
          KAFKA_ADVERTISED_PORT: 9092
          KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka1:9092
          KAFKA_LISTENERS: PLAINTEXT://kafka1:9092
        volumes:
          - /Users/sun9/dockerKafkaService/volume/kfkluster/kafka1/logs:/kafka
        external_links:
          - zoo1
          - zoo2
          - zoo3
        networks:
          zookeeper_kafka:
            ipv4_address: 172.19.0.14
        depends_on:
          - zoo1
          - zoo2
          - zoo3

      kafka2:
        image: wurstmeister/kafka
        restart: always
        hostname: kafka2
        container_name: kafka2
        ports:
          - 9093:9093
        environment:
          KAFKA_BROKER_ID: 2
          KAFKA_ADVERTISED_HOST_NAME: kafka2
          KAFKA_ADVERTISED_PORT: 9093
          KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka2:9093
          KAFKA_LISTENERS: PLAINTEXT://kafka2:9093
        volumes:
          - /Users/sun9/dockerKafkaService/volume/kfkluster/kafka2/logs:/kafka
        external_links:
          - zoo1
          - zoo2
          - zoo3
        networks:
          zookeeper_kafka:
            ipv4_address: 172.19.0.15
        depends_on:
          - zoo1
          - zoo2
          - zoo3

      kafka3:
        image: wurstmeister/kafka
        restart: always
        hostname: kafka3
        container_name: kafka3
        ports:
          - 9094:9094
        environment:
          KAFKA_BROKER_ID: 3
          KAFKA_ADVERTISED_HOST_NAME: kafka3
          KAFKA_ADVERTISED_PORT: 9094
          KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka3:9094
          KAFKA_LISTENERS: PLAINTEXT://kafka3:9094
        volumes:
          - /Users/sun9/dockerKafkaService/volume/kfkluster/kafka3/logs:/kafka
        external_links:
          - zoo1
          - zoo2
          - zoo3
        networks:
          zookeeper_kafka:
            ipv4_address: 172.19.0.16
        depends_on:
          - zoo1
          - zoo2
          - zoo3

    networks:
      zookeeper_kafka:
        external:
          name: zookeeper_kafka
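
The three ZOO_SERVERS values in the file follow one pattern: each node lists itself as 0.0.0.0 and its peers by hostname. As a rough sketch of that pattern only, the helper below rebuilds the string for a given node. The function name `zoo_servers` is made up for illustration; the `zooN` hostnames and the `2888:3888;2181` ports are taken from the file.

```shell
# Print the ZOO_SERVERS value for node MY_ID in an ensemble of COUNT nodes.
# The node's own entry uses 0.0.0.0; every peer is addressed as zoo<N>.
zoo_servers() {
  my_id=$1
  count=$2
  out=""
  i=1
  while [ "$i" -le "$count" ]; do
    if [ "$i" -eq "$my_id" ]; then
      host="0.0.0.0"
    else
      host="zoo$i"
    fi
    # Join entries with a single space, without a leading separator.
    out="$out${out:+ }server.$i=$host:2888:3888;2181"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}
```

For example, `zoo_servers 2 3` prints the value used by zoo2 in the file.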

Network setup:

docker network create --subnet 172.19.0.0/16 --gateway 172.19.0.1 zookeeper_kafka
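
Because the compose file declares the network as external, it has to exist before the stack starts, and running the create command a second time fails because the name is already taken. A minimal sketch that makes this step repeatable, assuming only the standard `docker network inspect` and `docker network create` commands; `ensure_network` is a hypothetical helper name.

```shell
# Create the external network only if it does not exist yet.
ensure_network() {
  name=$1
  subnet=$2
  gateway=$3
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create --subnet "$subnet" --gateway "$gateway" "$name"
  fi
}
```

With the values from this post it would be called as `ensure_network zookeeper_kafka 172.19.0.0/16 172.19.0.1`.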

The nasty pitfalls I hit

1. ZooKeeper cluster: connection refused

java.net.ConnectException: Connection refused (Connection refused)

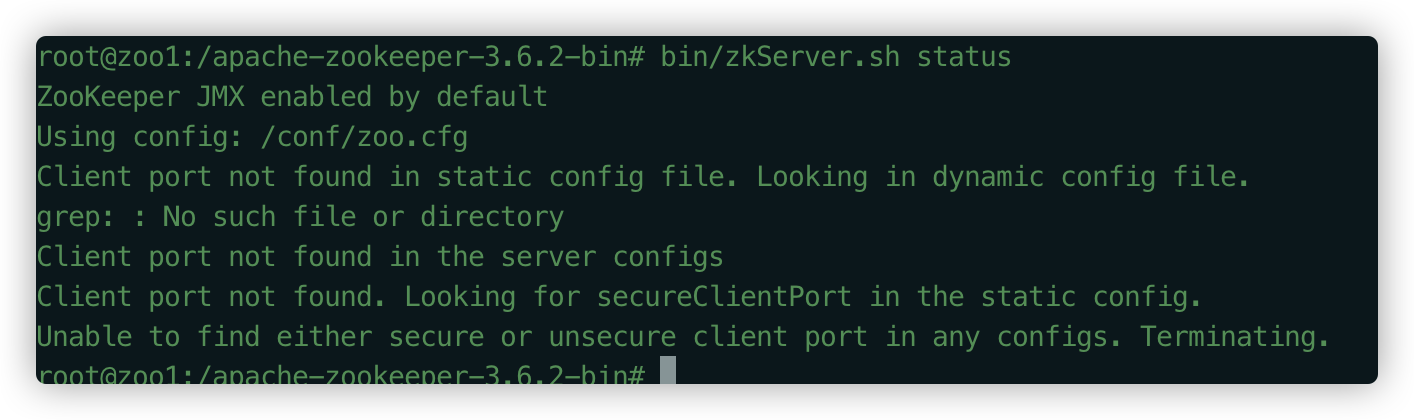

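I entered the container and ran zkServer.sh status to check the state, and found that the corresponding configuration could not be located.

In the end, by changing one variable at a time and debugging over and over, I finally tracked down the problem: something extra has to be added to the yml file.

The end result shows that the cluster can now start successfully.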
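
The status check described above can be run against every node without opening a shell in each container. This is only a sketch: `zk_status_all` is a hypothetical helper, and it assumes zkServer.sh is on the PATH inside the zookeeper image, as it was when I ran it by hand.

```shell
# Run `zkServer.sh status` in each named container and flag the ones that fail.
zk_status_all() {
  for c in "$@"; do
    echo "== $c =="
    docker exec "$c" zkServer.sh status || echo "$c: status check failed"
  done
}
```

Called as `zk_status_all zoo1 zoo2 zoo3`, a healthy ensemble should report one leader and two followers.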

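PS: when the container's /conf directory is also mapped to the host as a data volume, the log printed by zkServer.sh start is much cleaner.

I still don't know why. My first guess is that the configuration in the mounted volume takes priority, and other locations are searched only if that fails.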

2. Kafka cluster cannot connect to ZooKeeper

kafka.common.InconsistentClusterIdException: The Cluster ID kg34qdAcRIWxF77tuPLZ_w doesn’t match stored clusterId Some(RiWfOLDaR2KmNYeern_1ow) in meta.properties. The broker is trying to join the wrong cluster. Configured zookeeper.connect may be wrong.

Reference: https://lixiaogang5.blog.csdn.net/article/details/105679680?utm_medium=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.channel_param&depth_1-utm_source=distribute.pc_relevant_t0.none-task-blog-BlogCommendFromMachineLearnPai2-1.channel_param

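The main cause is that the host directory mounted as a volume was not cleaned up, so the meta.properties left over from the previous run still holds the old cluster ID. (This is one of the things I find really annoying about Docker's design: the container's lifecycle is already over, so why not delete its data along with it?)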

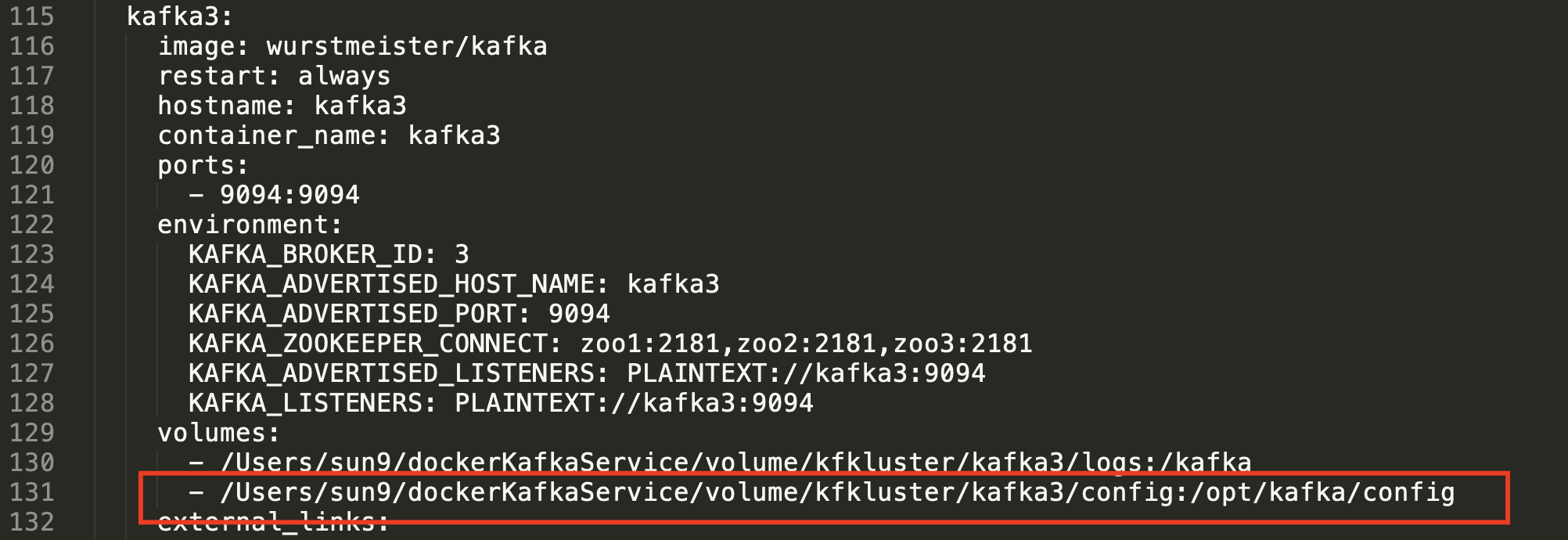

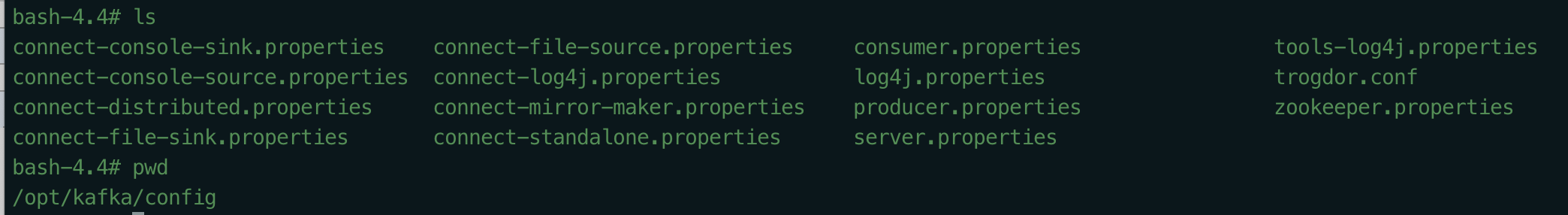
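
A rough sketch of the cleanup, to be run on the host while the brokers are stopped. Both function names are made up for illustration. It removes only the stale meta.properties files rather than the whole log directory; wiping the directory is the blunter alternative when the old data is not needed.

```shell
# Print the cluster ID recorded in a meta.properties file.
stored_cluster_id() {
  sed -n 's/^cluster\.id=//p' "$1"
}

# Delete every meta.properties under ROOT, printing each path that is removed.
clear_stale_meta() {
  find "$1" -type f -name meta.properties -print -exec rm -f {} +
}
```

For the setup in this post the root would be `/Users/sun9/dockerKafkaService/volume/kfkluster`. Checking `stored_cluster_id` first confirms that the file really holds the ID named in the exception.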

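3. Kafka cannot find its configuration files at startup

The trigger is the same kind of thing as above: I had mounted the config folder out to the host, and then found that the config folder, which used to be full of files, contained nothing but server.properties. Every other file was gone, and at first I could not find the reason for this either.

Normally it should look like this:

Update: I have found the cause. The official documentation explains it as follows.

If you use a bind mount on a directory that is non-empty inside the container, the container's directory contents are hidden by the host directory. As for why a lone server.properties remains, it is probably generated from the environment configuration after the mount has already taken over.

To avoid this, use a volume mount instead (https://docs.docker.com/storage/volumes/). This approach does not hide the existing files: when an empty volume is mounted, the container's existing contents are copied into it. The drawback is that the backing directory on the host is managed by Docker and not directly visible to the user, so it is only suitable for persisting data.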
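
A small sketch of the volume approach, using only the standard `docker volume create` and `docker volume inspect` commands. `ensure_volume` is a hypothetical helper; it creates the named volume if needed and prints where Docker keeps it, which partly eases the visibility problem.

```shell
# Create the named volume if it is missing, then print its mountpoint.
# Note: on Docker Desktop the mountpoint lives inside Docker's VM, not on the Mac itself.
ensure_volume() {
  name=$1
  docker volume inspect "$name" >/dev/null 2>&1 || docker volume create "$name" >/dev/null
  docker volume inspect --format '{{ .Mountpoint }}' "$name"
}
```

The volume is then mounted by name instead of by host path, for example `kafka_config:/opt/kafka/config`. Both the volume name and the container path here are assumptions for illustration and should be checked against the image actually in use.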