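6.1 Introduction to Image Recognition and Classic Datasets

CIFAR is a highly influential image classification dataset. It comes in two versions, CIFAR-10 and CIFAR-100, both of which are subsets of the roughly 80 million tiny images collected by the Visual Dictionary project. Every image in CIFAR is a 32×32 color image.

CIFAR-10 contains 60,000 images from 10 different classes. Every image has the same fixed size and contains an object from exactly one class. Compared with MNIST, the main differences are that the images are in color rather than grayscale and that classification is harder.

Compared with image recognition in real-world settings, both MNIST and CIFAR have two major limitations. First, real-world images have a much higher resolution than 32×32, and their resolution is not fixed. Second, the real world contains a great many object categories, so neither 10 nor 100 classes is anywhere near enough, and a single image often contains objects from more than one category.

ImageNet addresses both limitations to a large extent and is much closer to real-world image recognition.

ImageNet is a large image database built on WordNet. Nearly 15 million images are linked to roughly 20,000 noun synsets in WordNet. Each synset associated with ImageNet represents a real-world entity and can be treated as one class in a classification problem. A single image may contain entities from several synsets.

The ILSVRC2012 image classification dataset is a subset of ImageNet. It contains 1.2 million images from 1,000 classes, and each image belongs to exactly one class. The images were crawled directly from the web, so their file sizes range from a few kilobytes to several megabytes.

Top-N accuracy is the probability that the correct answer is among the first N answers given by an image recognition algorithm. Many papers on image classification compare methods by their top-N accuracy, with N usually set to 3 or 5.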
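To make the definition concrete, here is a minimal pure-Python sketch of top-N accuracy. The function name `top_n_accuracy` and the score table are made up for illustration; they do not come from any library or dataset.

```python
def top_n_accuracy(scores, labels, n):
    """Fraction of examples whose true label is among the n highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by score, highest first, cut off after n entries.
        top_n = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:n]
        if label in top_n:
            hits += 1
    return hits / len(labels)


# Hypothetical scores for 3 examples over 4 classes.
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true class 1 is ranked first
    [0.4, 0.3, 0.2, 0.1],  # true class 1 is ranked second
    [0.1, 0.2, 0.3, 0.4],  # true class 0 is ranked last
]
labels = [1, 1, 0]

print(top_n_accuracy(scores, labels, 1))  # one of three examples is a top-1 hit
print(top_n_accuracy(scores, labels, 2))  # two of three examples are top-2 hits
```

Top-N accuracy can only grow as N grows, which is why top-5 numbers in papers are always at least as high as top-1 numbers.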

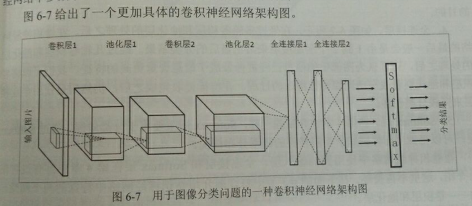

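6.2 Introduction to Convolutional Neural Networks

In a fully connected neural network, every pair of nodes in adjacent layers is connected by an edge, so the nodes of each fully connected layer are usually drawn as a single column to make the connections easy to show. In a convolutional neural network, only some of the nodes in adjacent layers are connected, and the nodes of each convolutional layer are usually organized as a three-dimensional matrix. Although the two look very different, their overall architecture is very similar, and their inputs, outputs and training procedure are essentially the same. The only difference lies in how adjacent layers are connected.

The biggest problem with using a fully connected network on images is that the fully connected layers have too many parameters. More parameters not only slow down computation but also make overfitting much more likely. Convolutional neural networks are designed to reduce the number of parameters.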
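A rough calculation shows how quickly the parameter count of a fully connected layer grows with image size. The hidden layer size of 500 below is an assumption chosen only for illustration; the image sizes are those of MNIST and CIFAR-10.

```python
def fully_connected_params(in_nodes, out_nodes):
    """Weights plus biases of one fully connected layer."""
    return in_nodes * out_nodes + out_nodes


HIDDEN_NODES = 500  # assumed size of the first hidden layer

mnist_params = fully_connected_params(28 * 28 * 1, HIDDEN_NODES)
cifar_params = fully_connected_params(32 * 32 * 3, HIDDEN_NODES)

print(mnist_params)  # 392500
print(cifar_params)  # 1536500
```

Under this assumption, moving from a 28×28 grayscale image to a 32×32 color image roughly quadruples the parameters of the first layer alone, and larger images make the problem far worse.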

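In the first few layers of a convolutional neural network, each node is connected to only some of the nodes in the previous layer.

A convolutional neural network is built from five kinds of layers:

1. Input layer: the pixel matrix of an image, height × width × depth (color channels).

2. Convolutional layer: the input of each node in a convolutional layer is only a small patch of the previous layer, commonly 3×3 or 5×5. A convolutional layer analyzes each small patch in more depth to extract more abstract features. In general, the depth of the node matrix increases after passing through a convolutional layer.

3. Pooling layer: it does not change the depth of the three-dimensional matrix but reduces its height and width. Pooling can be thought of as turning a high-resolution image into a lower-resolution one. A pooling layer further reduces the number of nodes that reach the final fully connected layers, and therefore the number of parameters in the whole network.

4. Fully connected layer: after several rounds of convolution and pooling, the information in the image can be regarded as having been abstracted into features with higher information content. Once the convolutional and pooling layers have extracted the image features automatically, fully connected layers are still needed to perform the classification.

5. Softmax layer: used for classification problems. The Softmax layer gives the probability distribution of the current example over the classes.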

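6.3 Common Structures in Convolutional Neural Networks

The most important part of a convolutional layer is the filter, also called the kernel. A filter transforms a sub-matrix of nodes in the current layer into a unit node matrix in the next layer, that is, a node matrix whose height and width are both 1 and whose depth is unrestricted.

The height and width of the node matrix that a filter processes are specified by hand, and this size is also called the filter size. Common sizes are 3×3 and 5×5. Because the depth of the matrix processed by the filter always equals the depth of the current layer's node matrix, only two dimensions need to be specified for the filter size even though the node matrix is three-dimensional.

The other filter setting that has to be specified by hand is the depth of the resulting unit node matrix, which is called the filter depth.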

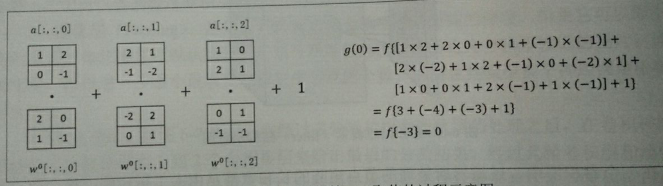

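(Local view) The forward pass of a filter computes the nodes of the unit node matrix on the right from the nodes of the small matrix on the left. As in a fully connected layer, each output node is a weighted sum of the inputs plus a bias term. See Figure 6-8.

(Global view) The forward pass of a convolutional layer moves a filter from the top-left corner of the current layer to its bottom-right corner and computes the corresponding unit matrix at every position. See Figure 6-10.

Each time the filter moves, it produces one value (or k values when the filter depth is k). Assembling these values into a new matrix completes the forward pass of the convolutional layer.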
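The sliding-window computation described above can be sketched in plain Python. This is a deliberately naive illustration with no zero padding, a stride of 1 and no activation function; the function name `conv_forward` and the toy data are assumptions, not part of TensorFlow.

```python
def conv_forward(inputs, weights, biases):
    """Naive convolution without padding and with stride 1.

    inputs:  [height][width][in_depth]
    weights: [f_height][f_width][in_depth][out_depth]
    biases:  [out_depth]
    returns: [out_height][out_width][out_depth]
    """
    height, width, in_depth = len(inputs), len(inputs[0]), len(inputs[0][0])
    f_height, f_width = len(weights), len(weights[0])
    out_depth = len(biases)
    output = []
    for top in range(height - f_height + 1):
        row = []
        for left in range(width - f_width + 1):
            unit = []  # the 1 x 1 x out_depth unit node matrix for this position
            for k in range(out_depth):
                total = biases[k]
                for i in range(f_height):
                    for j in range(f_width):
                        for d in range(in_depth):
                            total += inputs[top + i][left + j][d] * weights[i][j][d][k]
                unit.append(total)
            row.append(unit)
        output.append(row)
    return output


# A 3 x 3 x 1 input and one 2 x 2 filter of depth 1 whose weights are all 1, with bias 1.
inputs = [[[1], [2], [3]],
          [[4], [5], [6]],
          [[7], [8], [9]]]
weights = [[[[1]], [[1]]],
           [[[1]], [[1]]]]
biases = [1]

result = conv_forward(inputs, weights, biases)
print(result)  # [[[13], [17]], [[25], [29]]]
```

The same weights and bias are reused at every position, so the 2×2 output needs only 4 weights and 1 bias no matter how large the input is.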

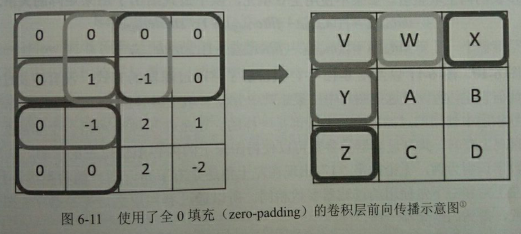

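When the filter size is not 1×1, the matrix produced by the forward pass of a convolutional layer is smaller than the matrix of the current layer.

To keep the size unchanged, zeros can be padded around the border of the current layer's matrix. See Figure 6-11.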

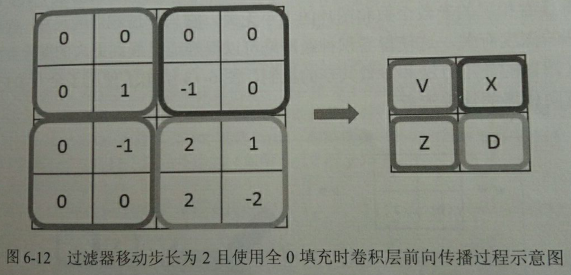

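Besides zero padding, the size of the resulting matrix can also be adjusted by setting the stride with which the filter moves. Figure 6-12 shows the forward pass of a convolutional layer with a stride of 2 and zero padding.

Determining the size of the output layer:

With zero padding (round up):

out_length = ⌈in_length / stride_length⌉

out_width = ⌈in_width / stride_width⌉

Without zero padding (round up):

out_length = ⌈(in_length - filter_length + 1) / stride_length⌉

out_width = ⌈(in_width - filter_width + 1) / stride_width⌉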
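The two cases can be checked with a small helper. This is a sketch of the formulas only; the name `conv_output_size` is made up for illustration, and the strings 'SAME' and 'VALID' follow the padding names used by TensorFlow for zero padding and no padding respectively.

```python
import math


def conv_output_size(in_size, filter_size, stride, padding):
    """Output length along one dimension, following the two formulas above."""
    if padding == 'SAME':    # zero padding
        return math.ceil(in_size / stride)
    if padding == 'VALID':   # no padding
        return math.ceil((in_size - filter_size + 1) / stride)
    raise ValueError('padding must be SAME or VALID')


# A 32 x 32 input with a 5 x 5 filter and stride 1.
print(conv_output_size(32, 5, 1, 'SAME'))   # 32
print(conv_output_size(32, 5, 1, 'VALID'))  # 28
# A 3 x 3 input with a 2 x 2 filter, stride 2 and zero padding.
print(conv_output_size(3, 2, 2, 'SAME'))    # 2
```

With zero padding the filter size drops out of the formula entirely, so only the stride determines the output size.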

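A very important property of convolutional neural networks is that, within a convolutional layer, the filter uses the same parameters at every position. This makes the result independent of where content appears in the image. Taking MNIST handwritten digit recognition as an example, the class of an image is the same whether the digit "1" appears in the top-left or the bottom-right corner. Sharing the filter parameters within each convolutional layer also greatly reduces the number of parameters in the network. Taking CIFAR-10 as an example, the input layer has dimensions 32×32×3. If the convolutional layer uses a 5×5 filter with a depth of 16, the layer has 5×5×3×16 + 16 = 1216 parameters (this can be pictured as a fully connected layer with 5×5×3 input nodes and 16 output nodes). Moreover, the number of parameters depends only on the filter size, the filter depth and the depth of the current layer's node matrix, not on the image size, which lets convolutional neural networks scale well to larger images.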
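The parameter count can be reproduced in a few lines. The fully connected comparison is an added illustration: it assumes a layer that maps the same 32×32×3 input to an output of the same 32×32×16 size, which is what the convolutional layer produces with zero padding and a stride of 1.

```python
def conv_layer_params(filter_height, filter_width, in_depth, out_depth):
    """Shared filter weights plus one bias per output channel."""
    return filter_height * filter_width * in_depth * out_depth + out_depth


def fully_connected_params(in_nodes, out_nodes):
    """Weights plus biases of one fully connected layer."""
    return in_nodes * out_nodes + out_nodes


conv_params = conv_layer_params(5, 5, 3, 16)
fc_params = fully_connected_params(32 * 32 * 3, 32 * 32 * 16)

print(conv_params)  # 1216
print(fc_params)    # 50348032
```

Note that `conv_layer_params` takes no image size at all, which is the point made in the text: the same 1216 parameters serve a 32×32 image and a much larger one.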

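The forward pass of a convolutional layer can be implemented in TensorFlow (1.x API) as follows:

```python
import tensorflow as tf

x = tf.placeholder(tf.float32, shape=[None, 32, 32, 3], name='x-input')
# Weight shape: [filter height, filter width, current-layer depth, filter depth].
filter_weight = tf.get_variable(
    'weights', shape=[5, 5, 3, 16], initializer=tf.truncated_normal_initializer(stddev=0.1)
)
# One bias per output channel, so the shape equals the filter depth.
biases = tf.get_variable(
    'biases', shape=[16], initializer=tf.constant_initializer(0.1)
)
# tf.nn.conv2d arguments:
#   1. The node matrix of the current layer. It is four-dimensional: the first
#      dimension indexes the examples in the input batch, the other three
#      describe one node matrix (height * width * depth).
#   2. The weights of the convolutional layer, i.e. the filter.
#   3. The strides along each dimension, an array of length 4. The first and
#      fourth entries must be 1 because the stride of a convolutional layer
#      only applies to the height and width of the matrix.
#   4. The padding, either SAME (zero padding) or VALID (no padding).
conv = tf.nn.conv2d(
    x, filter_weight, strides=[1, 1, 1, 1], padding='SAME'
)
# print(conv.shape)  # (?, 32, 32, 16): the depth becomes 16; by the formulas the
#                    # height and width are 32 with zero padding and 28 without.

# tf.nn.bias_add is used instead of a plain addition because every position of
# the output matrix needs the same bias added. In Figure 6-13, for example, the
# next layer is 2×2 but there is only a single bias value (the depth is 1), and
# each of the four values in the 2×2 matrix needs that bias.
bias = tf.nn.bias_add(conv, biases)
actived_conv = tf.nn.relu(bias)

# Take care to distinguish the four dimensions of the input, of the weights and of the strides.
```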

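6.3.2 Pooling Layer

A pooling layer is mainly used to reduce the size of the matrix and thus the number of parameters in the final fully connected layers. Using pooling layers both speeds up computation and helps prevent overfitting.

The forward pass of a pooling layer also works by moving a filter-like structure over the input. However, the pooling filter does not compute a weighted sum of the nodes. It uses a simpler maximum or average operation instead. A pooling layer that takes the maximum is called a max pooling layer, and one that takes the average is called an average pooling layer.

As with a convolutional filter, the size of the pooling filter, whether to use zero padding and the stride all have to be set by hand. The filters of the two layer types move in a similar way. The only difference is that a convolutional filter spans the entire depth, whereas a pooling filter only affects the nodes at a single depth. A pooling filter therefore has to move along the depth dimension as well as along the height and width.

Convolutional layer: with an input depth of 3, the three per-channel results are summed.

Pooling layer: with a depth of 2, each channel is processed separately.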
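The per-channel behaviour of pooling can be seen in a small pure-Python sketch of max pooling without padding. The function name `max_pool` and the toy input are assumptions for illustration and are unrelated to `tf.nn.max_pool`.

```python
def max_pool(inputs, size, stride):
    """Max pooling without padding on an input of shape [height][width][depth]."""
    height, width, depth = len(inputs), len(inputs[0]), len(inputs[0][0])
    output = []
    for top in range(0, height - size + 1, stride):
        row = []
        for left in range(0, width - size + 1, stride):
            unit = []
            for d in range(depth):
                # Each channel is reduced on its own; channels are never mixed.
                window = [inputs[top + i][left + j][d]
                          for i in range(size) for j in range(size)]
                unit.append(max(window))
            row.append(unit)
        output.append(row)
    return output


# A 2 x 2 input with depth 2, pooled by a 2 x 2 window.
inputs = [[[1, 8], [2, 7]],
          [[3, 6], [4, 5]]]

pooled = max_pool(inputs, size=2, stride=2)
print(pooled)  # [[[4, 8]]]
```

The output keeps the input depth of 2 while the height and width shrink to 1, matching the statement that pooling changes the size of the matrix but not its depth.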

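The forward pass of a pooling layer can be implemented in TensorFlow (1.x API) as follows:

```python
# actived_conv is the output of the convolutional layer from the previous listing.
# tf.nn.max_pool arguments:
#   1. The node matrix of the current layer (four-dimensional).
#   2. ksize, the filter size, an array of length 4. The first and fourth
#      entries must be 1, which means the filter cannot span different input
#      examples or different depths of the node matrix. The most common values
#      are [1, 2, 2, 1] and [1, 3, 3, 1]. This differs from a convolutional layer.
#   3. The strides, an array of length 4. The first and fourth entries must be
#      1, which means a pooling layer cannot reduce the depth of the node
#      matrix or the number of input examples.
#   4. The padding, either SAME or VALID.
pool = tf.nn.max_pool(actived_conv, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='SAME')
```

The biggest difference between the two layers lies in how the filter is specified: the convolution weights have shape [5, 5, 3, 16] (filter height, filter width, current-layer depth, filter depth), whereas the pooling ksize is [1, 3, 3, 1] (batch, height, width, depth).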

6.4 Classic Convolutional Network Models

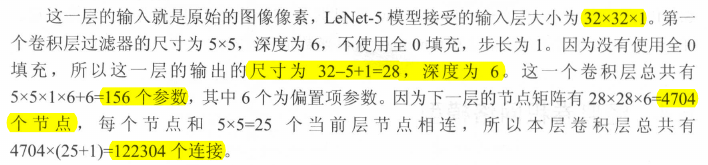

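6.4.1 The LeNet-5 Model

LeNet-5 was the first convolutional neural network successfully applied to digit recognition.

The LeNet-5 model takes a three-dimensional matrix (height × width × depth) as its input layer.

The number of parameters is far smaller than the number of connections. I was initially unsure why 1 is added when counting the connections of a convolutional layer; it appears to account for the bias term, since each output node is connected to every input node under the filter plus one bias.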
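The gap between parameters and connections can be worked out for one layer. The numbers below are an assumption taken from the first convolutional layer of the original LeNet-5 design (32×32×1 input, 5×5 filter, depth 6, no zero padding, stride 1), not from the MNIST code in these notes, which uses a 28×28 input and 32 filters.

```python
IN_SIZE, IN_DEPTH = 32, 1        # assumed input: 32 x 32 x 1
FILTER_SIZE, FILTER_DEPTH = 5, 6  # assumed filter: 5 x 5, depth 6

# Without zero padding and with stride 1 the output is (32 - 5 + 1) = 28 wide.
out_size = IN_SIZE - FILTER_SIZE + 1
out_nodes = out_size * out_size * FILTER_DEPTH

# Parameters are shared across positions: filter weights plus one bias per channel.
params = FILTER_SIZE * FILTER_SIZE * IN_DEPTH * FILTER_DEPTH + FILTER_DEPTH

# Each output node is wired to 5 * 5 * 1 input nodes plus 1 bias term.
connections = out_nodes * (FILTER_SIZE * FILTER_SIZE * IN_DEPTH + 1)

print(out_size, out_nodes)  # 28 4704
print(params)               # 156
print(connections)          # 122304
```

Under these assumptions the layer has 156 parameters but 122,304 connections, because the same 156 values are reused at each of the 28×28 output positions.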

Only the weights of the fully connected layers need regularization.

ReLU and dropout are not used in the last layer.

```python
# mnist_inference.py

import tensorflow as tf

IMAGE_SIZE = 28
NUM_CHANNELS = 1  # grayscale
NUM_LABELS = 10

# Filter size and depth of the first convolutional layer
CONV1_SIZE = 5
CONV1_DEEP = 32
# Filter size and depth of the second convolutional layer
CONV2_SIZE = 5
CONV2_DEEP = 64
# Number of nodes in the fully connected layer
FC_SIZE = 512


def get_weight_variable(shape, regularizer):
    weights = tf.get_variable('weight', shape, initializer=tf.truncated_normal_initializer(stddev=0.1))
    if regularizer:
        tf.add_to_collection('losses', regularizer(weights))
    return weights


def inference(input_tensor, train, regularizer):
    with tf.variable_scope('layer1-conv1'):
        # Input 28×28×1, filter 5×5, depth 32, stride 1, output 28×28×32
        conv1_weights = get_weight_variable([CONV1_SIZE, CONV1_SIZE, NUM_CHANNELS, CONV1_DEEP], None)
        conv1_biases = tf.get_variable('bias', [CONV1_DEEP], initializer=tf.constant_initializer(0.0))
        conv1 = tf.nn.conv2d(input_tensor, conv1_weights, strides=[1, 1, 1, 1], padding='SAME')
        relu1 = tf.nn.relu(tf.nn.bias_add(conv1, conv1_biases))

    with tf.name_scope('layer2-pool1'):
        # Output 14×14×32
        pool1 = tf.nn.max_pool(relu1, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    with tf.variable_scope('layer3-conv2'):
        # Filter 5×5, depth 64, output 14×14×64
        conv2_weights = get_weight_variable([CONV2_SIZE, CONV2_SIZE, CONV1_DEEP, CONV2_DEEP], None)
        conv2_biases = tf.get_variable('bias', [CONV2_DEEP], initializer=tf.constant_initializer(0.0))
        conv2 = tf.nn.conv2d(pool1, conv2_weights, strides=[1, 1, 1, 1], padding='SAME')
        relu2 = tf.nn.relu(tf.nn.bias_add(conv2, conv2_biases))

    with tf.name_scope('layer4-pool2'):
        # Output 7×7×64
        pool2 = tf.nn.max_pool(relu2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    # A fully connected layer takes a feature vector as input, so the
    # three-dimensional matrix has to be flattened into a one-dimensional vector.
    pool_shape = pool2.get_shape().as_list()  # includes the batch dimension
    nodes = pool_shape[1] * pool_shape[2] * pool_shape[3]  # 3136
    reshaped = tf.reshape(pool2, [pool_shape[0], nodes])

    # During training, dropout randomly sets the output of some nodes to 0.
    # It further improves the robustness of the model and helps prevent
    # overfitting. Dropout is only used during training.
    with tf.variable_scope('layer5-fc1'):
        # Only the weights of the fully connected layers are regularized
        fc1_weights = get_weight_variable([nodes, FC_SIZE], regularizer)
        fc1_biases = tf.get_variable('bias', shape=[FC_SIZE], initializer=tf.constant_initializer(0.1))
        fc1 = tf.nn.relu(tf.matmul(reshaped, fc1_weights) + fc1_biases)
        if train:
            fc1 = tf.nn.dropout(fc1, 0.5)

    with tf.variable_scope('layer6-fc2'):
        fc2_weights = get_weight_variable([FC_SIZE, NUM_LABELS], regularizer)
        fc2_biases = tf.get_variable('bias', shape=[NUM_LABELS], initializer=tf.constant_initializer(0.1))
        logit = tf.matmul(fc1, fc2_weights) + fc2_biases

    # ReLU and dropout are not used in the last layer. The cross entropy is
    # computed later with sparse_softmax_cross_entropy_with_logits.
    return logit
```

```python
# mnist_train.py

#!coding:utf8
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
import mnist_inference
import os
import numpy as np

BATCH_SIZE = 100

# A base rate of 0.8 is likely too large for this convolutional model;
# a much smaller value such as 0.01 may be needed for training to converge.
LEARNING_RATE_BASE = 0.8
LEARNING_RATE_DECAY = 0.99
REGULARIZATION_RATE = 0.0001  # lambda
TRAINING_STEPS = 30000
MOVING_AVERAGE_DACAY = 0.99

MODEL_SAVE_PATH = '/home/yangxl/files/save_model'
MODEL_NAME = 'conv2d.ckpt'


def train(mnist):
    # The reshape between the pooling layer and the fully connected layer
    # needs a known batch size, so shape[0] cannot be None.
    x = tf.placeholder(tf.float32, [BATCH_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.NUM_CHANNELS], 'x-input')
    y_ = tf.placeholder(tf.float32, [BATCH_SIZE, mnist_inference.NUM_LABELS], 'y-input')

    # Regularization
    from tensorflow.contrib.layers import l2_regularizer
    regularizer = l2_regularizer(REGULARIZATION_RATE)

    y = mnist_inference.inference(x, True, regularizer)

    global_step = tf.Variable(0, trainable=False)

    # Moving average
    variables_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DACAY, global_step)
    variables_averages_op = variables_averages.apply(tf.trainable_variables())
    # Mutually exclusive classification problem.
    # The labels are one-hot arrays of length 10, but this function expects the
    # index of the correct class, so tf.argmax is used to obtain the class index.
    cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
    cross_entropy_mean = tf.reduce_mean(cross_entropy)
    loss = cross_entropy_mean + tf.add_n(tf.get_collection('losses'))

    learning_rate = tf.train.exponential_decay(LEARNING_RATE_BASE, global_step, mnist.train.num_examples / BATCH_SIZE,
                                               LEARNING_RATE_DECAY, staircase=True)
    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step)
    with tf.control_dependencies([train_step, variables_averages_op]):
        train_op = tf.no_op(name='train')

    saver = tf.train.Saver()

    with tf.Session() as sess:
        tf.global_variables_initializer().run()

        for i in range(TRAINING_STEPS):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)  # xs.shape=(100, 784)
            reshaped_xs = np.reshape(xs, [BATCH_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.NUM_CHANNELS])
            _, loss_value, step = sess.run([train_op, loss, global_step], feed_dict={x: reshaped_xs, y_: ys})

            if i % 1000 == 0:
                print('after %d training steps, loss on training batch is %g ' % (i, loss_value))
                saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)


def main(argv=None):
    mnist = input_data.read_data_sets('/home/yangxl/files/mnist', one_hot=True)
    import time
    # print('start...', int(time.time()))
    train(mnist)
    # print(int(time.time()))


if __name__ == '__main__':
    tf.app.run()
```

```python
# mnist_eval.py

#!coding:utf8
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data
import mnist_inference
import mnist_train
import time
import numpy as np

# Load the latest model every EVAL_INTERVAL_SECS seconds and measure its
# accuracy on the test data.
EVAL_INTERVAL_SECS = 60


def evaluate(mnist):
    x = tf.placeholder(tf.float32, [mnist.test.num_examples, mnist_inference.IMAGE_SIZE, mnist_inference.IMAGE_SIZE, mnist_inference.NUM_CHANNELS], 'x-input')
    y_ = tf.placeholder(tf.float32, [mnist.test.num_examples, mnist_inference.NUM_LABELS], 'y-input')

    y = mnist_inference.inference(x, False, None)

    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    # Moving average
    variables_averages = tf.train.ExponentialMovingAverage(mnist_train.MOVING_AVERAGE_DACAY)
    variables_to_restore = variables_averages.variables_to_restore()

    # The moving-average variables must have been saved during training,
    # otherwise they cannot be loaded here.
    saver = tf.train.Saver(variables_to_restore)

    while True:
        with tf.Session() as sess:
            reshape_x = np.reshape(mnist.test.images, [-1, 28, 28, 1])
            validate_feed = {x: reshape_x, y_: mnist.test.labels}

            # Use the checkpoint file to find the name of the latest model in the directory
            ckpt = tf.train.get_checkpoint_state(mnist_train.MODEL_SAVE_PATH)
            if ckpt and ckpt.model_checkpoint_path:
                saver.restore(sess, ckpt.model_checkpoint_path)

                global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]
                accuracy_score = sess.run(accuracy, feed_dict=validate_feed)
                print('after %s training steps, validation accuracy = %g ' % (global_step, accuracy_score))
            else:
                print('No checkpoint file found')
                return
        time.sleep(EVAL_INTERVAL_SECS)


def main(argv=None):
    mnist = input_data.read_data_sets('/home/yangxl/files/mnist', one_hot=True)
    print('start...')
    evaluate(mnist)


if __name__ == '__main__':
    tf.app.run()
```

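The code runs without errors, but the loss does not settle and the accuracy is only about 0.117, when it should be around 99.4%. A likely cause is the base learning rate: LEARNING_RATE_BASE = 0.8 is probably far too large for this convolutional model, so training diverges and the accuracy stays near chance. Trying a much smaller value, such as 0.01, is a reasonable first step.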

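No single convolutional architecture can solve every problem. For example, LeNet-5 cannot handle a large image dataset such as ImageNet well.

The following regular-expression-style formula summarizes some classic convolutional architectures for image classification, where + means one or more repetitions and ? means zero or one: input layer --> (convolutional layer+ --> pooling layer?)+ --> fully connected layer+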
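The formula can be read literally as a regular expression. The sketch below encodes each layer as one letter (I for input, C for convolution, P for pooling, F for fully connected); this encoding and the example strings are assumptions made for illustration.

```python
import re

# input --> (conv+ --> pool?)+ --> fully connected+
ARCHITECTURE = re.compile(r'I(C+P?)+F+')


def follows_pattern(layers):
    """True if the whole layer string matches the architecture formula."""
    return ARCHITECTURE.fullmatch(layers) is not None


print(follows_pattern('ICPCPFF'))         # True: conv, pool, conv, pool, then two FC layers
print(follows_pattern('ICCPCCPCCCPFFF'))  # True: blocks of stacked convolutions
print(follows_pattern('IPCF'))            # False: pooling directly after the input
print(follows_pattern('ICP'))             # False: no fully connected layer
```

The first string corresponds to the layer order of the LeNet-5 style model in these notes: two convolution and pooling pairs followed by two fully connected layers.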

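Most convolutional neural networks use at most three convolutional layers in a row.

After several rounds of convolutional and pooling layers, a convolutional neural network usually passes through one or two fully connected layers before the output.

As for filter depth, most convolutional neural networks increase it layer by layer.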

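6.4.2 The Inception-v3 Model

In LeNet-5 the convolutional layers are connected in series, whereas in Inception-v3 the Inception module combines different convolutional layers in parallel.

Section 6.4.1 mentioned that a convolutional layer can use filters with a side length of 1, 3 or 5. How should one choose among them? The Inception module offers one answer: use filters of all these sizes at the same time and concatenate the resulting matrices.

Although the filter sizes differ, if every filter uses zero padding and a stride of 1, the height and width of each result matrix equal those of the input matrix. The result matrices produced by the different filters can therefore be concatenated into one deeper matrix.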
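The shape bookkeeping of such a module is simple enough to sketch without TensorFlow. The branch depths below are the final depths of the four branches of the Mixed_5b and Mixed_5c modules in the Inception-v3 listing in this section; the helper name `inception_module_shape` is made up for illustration.

```python
def inception_module_shape(in_height, in_width, branch_depths):
    """Output shape when every branch uses zero padding and stride 1.

    Each branch keeps the input height and width, so the branches can be
    concatenated along the depth axis and their depths simply add up.
    """
    return (in_height, in_width, sum(branch_depths))


# Branch depths of Mixed_5b: 1x1 conv, 5x5 conv, double 3x3 conv, pooling + 1x1 conv.
mixed_5b = inception_module_shape(35, 35, [64, 64, 96, 32])
# Mixed_5c differs only in the last branch, which has depth 64.
mixed_5c = inception_module_shape(35, 35, [64, 64, 96, 64])

print(mixed_5b)  # (35, 35, 256)
print(mixed_5c)  # (35, 35, 288)
```

These results agree with the shape comments in the listing (35 × 35 × 256 and 35 × 35 × 288).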

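The Inception-v3 model has 46 layers in total (the layers inside the boxes in the figure) and is made up of 11 Inception modules (the boxes in the figure). The model contains 96 convolutional layers.

Code for the Inception-v3 model, written with the slim library: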

```python
import tensorflow as tf
import tensorflow.contrib.slim as slim

trunc_normal = lambda stddev: tf.truncated_normal_initializer(0.0, stddev)


def inception_v3_base(inputs,
                      final_endpoint='Mixed_7c',
                      min_depth=16,
                      depth_multiplier=1.0,
                      scope=None):
    end_points = {}

    if depth_multiplier <= 0:
        raise ValueError('depth_multiplier is not greater than zero.')
    depth = lambda d: max(int(d * depth_multiplier), min_depth)

    with tf.variable_scope(scope, 'InceptionV3', [inputs]):
        # arg_scope sets default argument values for the listed functions
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1,
                            padding='VALID'):
            # 299 × 299 × 3
            end_point = 'Conv2d_1a_3x3'  # letters, digits and underscores only; 'x' stands in for the multiplication sign
            # No zero padding
            net = slim.conv2d(inputs, depth(32), [3, 3], stride=2, scope=end_point)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
            # 149 × 149 × 32
            end_point = 'Conv2d_2a_3x3'
            # No zero padding, stride 1
            net = slim.conv2d(net, depth(32), [3, 3], scope=end_point)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
            # 147 × 147 × 32
            end_point = 'Conv2d_2b_3x3'
            net = slim.conv2d(net, depth(64), [3, 3], padding='SAME', scope=end_point)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
            # 147 × 147 × 64
            end_point = 'MaxPool_3a_3x3'
            net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
            # 73 × 73 × 64
            end_point = 'Conv2d_3b_1x1'
            net = slim.conv2d(net, depth(80), [1, 1], scope=end_point)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
            # 73 × 73 × 80
            end_point = 'Conv2d_4a_3x3'
            net = slim.conv2d(net, depth(192), [3, 3], scope=end_point)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
            # 71 × 71 × 192
            end_point = 'MaxPool_5a_3x3'
            net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
            # 35 × 35 × 192

        # Inception blocks
        with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                            stride=1,
                            padding='SAME'):
            # mixed: 35 × 35 × 256
            end_point = 'Mixed_5b'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(64), [5, 5], scope='Conv2d_0b_1x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0b_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0c_1x1')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(32), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_1: 35 × 35 × 288
            end_point = 'Mixed_5c'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0b_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(64), [5, 5], scope='Conv_1_0c_1x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(64), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_2: 35 × 35 × 288
            end_point = 'Mixed_5d'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(48), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(64), [5, 5], scope='Conv2d_0b_5x5')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = slim.conv2d(branch_2, depth(96), [3, 3], scope='Conv2d_0c_3x3')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(64), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_3: 17 × 17 × 768
            end_point = 'Mixed_6a'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(384), [3, 3], stride=2, padding='VALID', scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(64), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(96), [3, 3], scope='Conv2d_0b_3x3')
                    branch_1 = slim.conv2d(branch_1, depth(96), [3, 3], stride=2, padding='VALID', scope='Conv2d_1a_1x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID', scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_4: 17 x 17 x 768.
            end_point = 'Mixed_6b'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(128), [1, 1], scope='Conv2d_0a_1x1')
                    # The output size is unchanged even though the filter's height and width differ
                    branch_1 = slim.conv2d(branch_1, depth(128), [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(128), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(128), [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(128), [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, depth(128), [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_5: 17 x 17 x 768.
            end_point = 'Mixed_6c'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(160), [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(160), [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_6: 17 x 17 x 768.
            end_point = 'Mixed_6d'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(160), [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(160), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(160), [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, depth(160), [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_7: 17 x 17 x 768.
            end_point = 'Mixed_6e'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(192), [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(192), [7, 1], scope='Conv2d_0b_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0c_1x7')
                    branch_2 = slim.conv2d(branch_2, depth(192), [7, 1], scope='Conv2d_0d_7x1')
                    branch_2 = slim.conv2d(branch_2, depth(192), [1, 7], scope='Conv2d_0e_1x7')
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_8: 8 x 8 x 1280.
            end_point = 'Mixed_7a'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                    branch_0 = slim.conv2d(branch_0, depth(320), [3, 3], stride=2, padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(192), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = slim.conv2d(branch_1, depth(192), [1, 7], scope='Conv2d_0b_1x7')
                    branch_1 = slim.conv2d(branch_1, depth(192), [7, 1], scope='Conv2d_0c_7x1')
                    branch_1 = slim.conv2d(branch_1, depth(192), [3, 3], stride=2, padding='VALID', scope='Conv2d_1a_3x3')
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.max_pool2d(net, [3, 3], stride=2, padding='VALID', scope='MaxPool_1a_3x3')
                net = tf.concat([branch_0, branch_1, branch_2], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_9: 8 x 8 x 2048.
            end_point = 'Mixed_7b'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(320), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(384), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat(
                        [
                            slim.conv2d(branch_1, depth(384), [1, 3], scope='Conv2d_0b_1x3'),
                            slim.conv2d(branch_1, depth(384), [3, 1], scope='Conv2d_0b_3x1')
                        ],
                        3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(448), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(384), [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat(
                        [
                            slim.conv2d(branch_2, depth(384), [1, 3], scope='Conv2d_0c_1x3'),
                            slim.conv2d(branch_2, depth(384), [3, 1], scope='Conv2d_0d_3x1')
                        ],
                        3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points

            # mixed_10: 8 x 8 x 2048.
            end_point = 'Mixed_7c'
            with tf.variable_scope(end_point):
                with tf.variable_scope('Branch_0'):
                    branch_0 = slim.conv2d(net, depth(320), [1, 1], scope='Conv2d_0a_1x1')
                with tf.variable_scope('Branch_1'):
                    branch_1 = slim.conv2d(net, depth(384), [1, 1], scope='Conv2d_0a_1x1')
                    branch_1 = tf.concat(
                        [
                            slim.conv2d(branch_1, depth(384), [1, 3], scope='Conv2d_0b_1x3'),
                            slim.conv2d(branch_1, depth(384), [3, 1], scope='Conv2d_0c_3x1')
                        ],
                        3)
                with tf.variable_scope('Branch_2'):
                    branch_2 = slim.conv2d(net, depth(448), [1, 1], scope='Conv2d_0a_1x1')
                    branch_2 = slim.conv2d(branch_2, depth(384), [3, 3], scope='Conv2d_0b_3x3')
                    branch_2 = tf.concat(
                        [
                            slim.conv2d(branch_2, depth(384), [1, 3], scope='Conv2d_0c_1x3'),
                            slim.conv2d(branch_2, depth(384), [3, 1], scope='Conv2d_0d_3x1')
                        ],
                        3)
                with tf.variable_scope('Branch_3'):
                    branch_3 = slim.avg_pool2d(net, [3, 3], scope='AvgPool_0a_3x3')
                    branch_3 = slim.conv2d(branch_3, depth(192), [1, 1], scope='Conv2d_0b_1x1')
                net = tf.concat([branch_0, branch_1, branch_2, branch_3], 3)
            end_points[end_point] = net
            if end_point == final_endpoint:
                return net, end_points
        raise ValueError('Unknown final endpoint %s' % final_endpoint)


# In the original source file this helper is defined after the function that
# calls it. That works because Python looks a name up when the call executes,
# not when the calling function is defined.
def _reduced_kernel_size_for_small_input(input_tensor, kernel_size):
    '''
    Define kernel size which is automatically reduced for small input.

    If the shape of the input images is unknown at graph construction time this
    function assumes that the input images are large enough.

    '''
    shape = input_tensor.get_shape().as_list()  # [?, 5, 5, 128]
    if shape[1] is None or shape[2] is None:
        kernel_size_out = kernel_size
    else:
        kernel_size_out = [min(shape[1], kernel_size[0]), min(shape[2], kernel_size[1])]
    return kernel_size_out


def inception_v3(inputs,
                 num_classes=1000,
                 is_training=True,
                 dropout_keep_prob=0.8,
                 min_depth=16,
                 depth_multiplier=1.0,
                 prediction_fn=slim.softmax,
                 spatial_squeeze=True,
                 reuse=None,
                 scope='InceptionV3'):
    if depth_multiplier <= 0:
        raise ValueError('depth_multiplier is not greater than zero.')
    depth = lambda d: max(int(d * depth_multiplier), min_depth)

    with tf.variable_scope(scope, 'InceptionV3', [inputs, num_classes], reuse=reuse) as scope:
        with slim.arg_scope([slim.batch_norm, slim.dropout], is_training=is_training):
            net, end_points = inception_v3_base(inputs, scope=scope, min_depth=min_depth, depth_multiplier=depth_multiplier)
            # Auxiliary head logits: an extra classifier attached to an
            # intermediate layer (Mixed_6e) whose loss can be added during training.
            with slim.arg_scope([slim.conv2d, slim.max_pool2d, slim.avg_pool2d],
                                stride=1,
                                padding='SAME'):
                aux_logits = end_points['Mixed_6e']  # mixed_7: 17 x 17 x 768
                with tf.variable_scope('AuxLogits'):
                    # 5 × 5 × 768
                    aux_logits = slim.avg_pool2d(aux_logits, [5, 5], stride=3, padding='VALID', scope='AvgPool_1a_5x5')
                    # 5 × 5 × 128
                    aux_logits = slim.conv2d(aux_logits, depth(128), [1, 1], scope='Conv2d_1b_1x1')

                    # shape of feature map before the final layer.
                    kernel_size = _reduced_kernel_size_for_small_input(aux_logits, [5, 5])
                    # 1 × 1 × 768: the input size equals the filter size, which agrees with the output-size formula
                    aux_logits = slim.conv2d(aux_logits, depth(768), kernel_size, padding='VALID', weights_initializer=trunc_normal(0.01), scope='Conv2d_2a_{}x{}'.format(*kernel_size))
                    # 1 × 1 × 1000
                    aux_logits = slim.conv2d(aux_logits, num_classes, [1, 1], activation_fn=None, normalizer_fn=None, weights_initializer=trunc_normal(0.001), scope='Conv2d_2b_1x1')
                    if spatial_squeeze:
                        # (?, 1000)
                        aux_logits = tf.squeeze(aux_logits, [1, 2], name='SpatialSqueeze')
                    end_points['AuxLogits'] = aux_logits

            # final pooling and prediction
            with tf.variable_scope('Logits'):
                kernel_size = _reduced_kernel_size_for_small_input(net, [8, 8])
                # 1 × 1 × 2048
                net = slim.avg_pool2d(net, kernel_size, padding='VALID', scope='AvgPool_1a_{}x{}'.format(*kernel_size))
                # 1 × 1 × 2048
                # Dropout is applied here, just before the final classification layer.
                net = slim.dropout(net, keep_prob=dropout_keep_prob, scope='Dropout_1b')
                end_points['PreLogits'] = net
                # 1 × 1 × 1000. The result must be assigned; the notes originally
                # discarded it and squeezed `net` instead.
                logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None, normalizer_fn=None, scope='Conv2d_1c_1x1')
                if spatial_squeeze:
                    # (?, 1000)
                    logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze')
            end_points['Logits'] = logits
            end_points['Predictions'] = prediction_fn(logits, scope='Predictions')

    return logits, end_points


# Used when defining the model for transfer learning
def inception_v3_arg_scope(weight_decay=0.00004,
                           batch_norm_var_collection='moving_vars',
                           batch_norm_decay=0.9997,
                           batch_norm_epsilon=0.001,
                           updates_collections=tf.GraphKeys.UPDATE_OPS,
                           use_fused_batchnorm=True):
    """Defines the default InceptionV3 arg scope.
    Returns:
        An `arg_scope` to use for the inception v3 model.
    """
    batch_norm_params = {
        # Decay for the moving averages.
        'decay': batch_norm_decay,
        # epsilon to prevent 0s in variance.
        'epsilon': batch_norm_epsilon,
        # collection containing update_ops.
        'updates_collections': updates_collections,
        # Use fused batch norm if possible.
        'fused': use_fused_batchnorm,
        # collection containing the moving mean and moving variance.
        'variables_collections': {
            'beta': None,
            'gamma': None,
            'moving_mean': [batch_norm_var_collection],
            'moving_variance': [batch_norm_var_collection],
        }
    }

    # Set weight_decay for weights in Conv and FC layers.
    with slim.arg_scope([slim.conv2d, slim.fully_connected], weights_regularizer=slim.l2_regularizer(weight_decay)):
        with slim.arg_scope(
                [slim.conv2d],
                weights_initializer=slim.variance_scaling_initializer(),
                activation_fn=tf.nn.relu,
                normalizer_fn=slim.batch_norm,
                normalizer_params=batch_norm_params) as sc:
            return sc


inputs = tf.placeholder(tf.float32, shape=[None, 299, 299, 3], name='X')
# inception_v3_base(inputs)
inception_v3(inputs)
```

The input layer is a three-dimensional matrix of size 299 × 299 × 3.
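The shape comments in the listing above (for example 1 × 1 × 2048 after the 8 × 8 VALID average pooling) follow from the usual output-size rule. The helper below is a minimal sketch of that rule as TensorFlow applies it; the function name `output_size` and the stride values are illustrative assumptions, not part of the original code.

```python
import math


def output_size(input_size, filter_size, stride, padding):
    """Spatial output size of a convolution or pooling layer along one dimension."""
    if padding == 'VALID':
        # No zero padding: count only the positions where the filter fits entirely.
        return math.ceil((input_size - filter_size + 1) / stride)
    if padding == 'SAME':
        # Zero padding is added, so only the stride shrinks the output.
        return math.ceil(input_size / stride)
    raise ValueError('padding must be "VALID" or "SAME"')


# A 299-wide input with a 3-wide filter, stride 2 (assumed for illustration), VALID padding.
first_conv = output_size(299, 3, 2, 'VALID')
# An 8-wide feature map with an 8-wide pooling window, VALID padding:
# the input is the same size as the filter, so a single output position remains.
final_pool = output_size(8, 8, 1, 'VALID')
print(first_conv, final_pool)
```

When the input and the filter have the same size and the padding is VALID, the formula always yields 1, which is why the auxiliary 5 × 5 convolution and the final 8 × 8 pooling both produce 1 × 1 feature maps.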

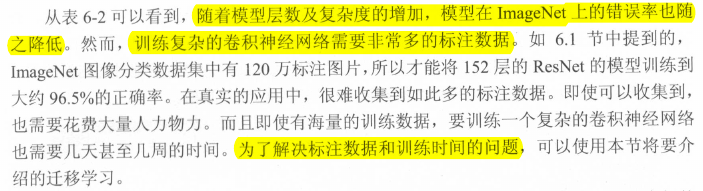

6.5 Transfer Learning with Convolutional Neural Networks

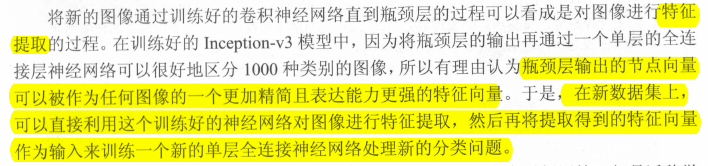

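Transfer learning means taking a model trained on one problem and, with simple adjustments, making it suitable for a new problem.

Here an Inception-v3 model trained on the ImageNet dataset is used to solve a new image classification problem. The parameters of all convolutional layers in the trained Inception-v3 model can be kept as they are; only the final fully connected layer is replaced. The layer immediately before this final fully connected layer is called the bottleneck layer. Note that the bottleneck refers to a single layer.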
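The idea of keeping the pretrained layers fixed and training only a new final layer can be illustrated on a toy scale. The sketch below is not Inception-v3: `bottleneck_features` is a hypothetical stand-in for the frozen pretrained layers, and the data, learning rate and epoch count are made up for the example. Only the weights of the new final layer are updated.

```python
import math


def bottleneck_features(x):
    """Stand-in for the pretrained layers up to the bottleneck; nothing in here is trained."""
    return [x[0] + x[1], x[0] - x[1], 1.0]  # the constant 1.0 acts as a bias input


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def head_scores(weights, features):
    """The new final layer: one weight row per class."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def train_new_head(samples, labels, n_classes, learning_rate=0.1, epochs=300):
    """Train only the new final layer with plain gradient descent on softmax cross-entropy."""
    n_features = len(bottleneck_features(samples[0]))
    weights = [[0.0] * n_features for _ in range(n_classes)]
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            features = bottleneck_features(x)  # frozen part: forward pass only
            probs = softmax(head_scores(weights, features))
            for c in range(n_classes):
                grad = probs[c] - (1.0 if c == y else 0.0)
                for j in range(n_features):
                    weights[c][j] -= learning_rate * grad * features[j]
    return weights


def predict(weights, x):
    scores = head_scores(weights, bottleneck_features(x))
    return scores.index(max(scores))


samples = [(-1.0, -1.0), (-2.0, 0.5), (1.0, 1.0), (2.0, -0.5), (-0.5, -1.5), (0.5, 1.5)]
labels = [0, 0, 1, 1, 0, 1]
new_head = train_new_head(samples, labels, n_classes=2)
predictions = [predict(new_head, x) for x in samples]
print(predictions)
```

Because the feature extractor never changes, its outputs could also be computed once and cached, which is part of what makes this style of transfer learning cheap.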

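Generally speaking, when enough data is available, transfer learning does not perform as well as training the whole network from scratch.

Preprocessing for transfer learning

Sample script for preprocessing the image files. It needs a machine with at least 2 cores and 8 GB of memory to run.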

import os
import glob
import tensorflow as tf
import numpy as np

INPUT_DATA = '/home/yangxl/flower_photos'  # input directory
OUTPUT_DATA = '/home/yangxl/flower_processed_data.npy'  # output file

VALIDATION_PERCENTAGE = 10
TEST_PERCENTAGE = 10

def create_image_lists(sess, testing_percentage, validation_percentage):
    sub_dirs = [x[0] for x in os.walk(INPUT_DATA)]  # the root directory followed by its subdirectories
    # print(sub_dirs)
    is_root_dir = True

    # Initialize each dataset
    training_images = []
    training_labels = []
    testing_images = []
    testing_labels = []
    validation_images = []
    validation_labels = []
    current_labels = 0

    # Walk through every subdirectory
    for sub_dir in sub_dirs:
        if is_root_dir:  # skip the first entry, which is the root directory itself
            is_root_dir = False
            continue

        # Collect all image files in this subdirectory
        extensions = ['jpg', 'jpeg', 'JPG', 'JPEG']
        file_list = []
        dir_name = os.path.basename(sub_dir)  # the part after the last '/'
        print(dir_name)
        for extension in extensions:
            file_glob = os.path.join(INPUT_DATA, dir_name, '*.' + extension)
            # glob.glob returns the list of paths matching the pattern; glob and os are used together for file handling
            file_list.extend(glob.glob(file_glob))
        if not file_list:
            continue

        # Process the image data
        for file_name in file_list:
            image_raw_data = tf.gfile.GFile(file_name, 'rb').read()  # raw bytes
            image = tf.image.decode_jpeg(image_raw_data)  # tensor, dtype=uint8, e.g. 333×500×3, channel values 0~255
            if image.dtype != tf.float32:
                image = tf.image.convert_image_dtype(image, dtype=tf.float32)  # channel values 0~1
            image = tf.image.resize_images(image, [299, 299])
            image_value = sess.run(image)  # numpy.ndarray

            # Randomly assign the image to one of the datasets
            chance = np.random.randint(100)
            if chance < validation_percentage:
                validation_images.append(image_value)
                validation_labels.append(current_labels)
            elif chance < validation_percentage + testing_percentage:
                testing_images.append(image_value)
                testing_labels.append(current_labels)
            else:
                training_images.append(image_value)
                training_labels.append(current_labels)
        # One label per subdirectory
        current_labels += 1

    # Shuffle the training data for better training results. Replaying the same random
    # state keeps training_images and training_labels aligned.
    state = np.random.get_state()
    np.random.shuffle(training_images)
    np.random.set_state(state)
    np.random.shuffle(training_labels)

    print("it's time to return")
    # The six lists have different lengths, so they are stored in an object array
    return np.asarray([training_images, training_labels,
                       validation_images, validation_labels,
                       testing_images, testing_labels], dtype=object)

def main():
    with tf.Session() as sess:
        processed_data = create_image_lists(sess, TEST_PERCENTAGE, VALIDATION_PERCENTAGE)
        # Save the processed data in NumPy format
        np.save(OUTPUT_DATA, processed_data)

if __name__ == '__main__':
    main()
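The script above does two things that are easy to get wrong: it maps a random integer to a dataset, and it shuffles images and labels while keeping them paired. The sketch below isolates both steps. It uses the standard library's `random` module instead of NumPy, and shuffles a shared index permutation rather than replaying the random state; `pick_split` and `shuffle_together` are illustrative names, not functions from the original script.

```python
import random


def pick_split(chance, validation_percentage, testing_percentage):
    """Map an integer in [0, 100) to a dataset name, mirroring the if/elif chain in the script."""
    if chance < validation_percentage:
        return 'validation'
    if chance < validation_percentage + testing_percentage:
        return 'testing'
    return 'training'


def shuffle_together(images, labels, seed=None):
    """Shuffle two equally long lists with one shared permutation so pairs stay aligned."""
    order = list(range(len(images)))
    random.Random(seed).shuffle(order)
    return [images[i] for i in order], [labels[i] for i in order]


images = ['img_a', 'img_b', 'img_c', 'img_d']
labels = [0, 1, 2, 3]
shuffled_images, shuffled_labels = shuffle_together(images, labels, seed=0)
print(list(zip(shuffled_images, shuffled_labels)))
```

With 10% each for validation and testing, values 0 to 9 go to validation, 10 to 19 to testing, and the remaining 80 values to training.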

A more concise way to compute the cross-entropy loss:

tf.losses.softmax_cross_entropy(tf.one_hot(labels, N_CLASSES), logits, weights=1.0)

train_step = tf.train.RMSPropOptimizer(LEARNING_RATE).minimize(tf.losses.get_total_loss())
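To make clear what the loss call above computes, here is a plain-Python sketch of softmax cross-entropy with integer labels converted to one-hot targets. It assumes the batch losses are averaged, which is my understanding of the default reduction when `weights=1.0`; the helper names are illustrative and this is not TensorFlow's implementation.

```python
import math


def one_hot(label, n_classes):
    """Turn an integer class label into a one-hot vector."""
    return [1.0 if i == label else 0.0 for i in range(n_classes)]


def log_softmax(logits):
    """Numerically stable log(softmax(logits))."""
    m = max(logits)
    log_total = math.log(sum(math.exp(v - m) for v in logits))
    return [v - m - log_total for v in logits]


def softmax_cross_entropy(labels, batch_logits, n_classes):
    """Mean over the batch of -sum(one_hot(label) * log_softmax(logits))."""
    losses = []
    for label, logits in zip(labels, batch_logits):
        target = one_hot(label, n_classes)
        log_probs = log_softmax(logits)
        losses.append(-sum(t * lp for t, lp in zip(target, log_probs)))
    return sum(losses) / len(losses)


# Uniform logits over 3 classes: the loss equals log(3) whatever the label is.
uniform_loss = softmax_cross_entropy([1], [[0.0, 0.0, 0.0]], n_classes=3)
# A confident, correct prediction gives a loss close to 0.
confident_loss = softmax_cross_entropy([0], [[20.0, 0.0, 0.0]], n_classes=3)
print(uniform_loss, confident_loss)
```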

Transfer learning example:

# coding: utf-8

import tensorflow as tf
import numpy as np
import tensorflow.contrib.slim as slim

# Load the Inception-v3 model definition
import tensorflow.contrib.slim.python.slim.nets.inception_v3 as inception_v3

INPUT_DATA = '/home/yangxl/files/flower_processed_data.npy'

TRAIN_FILE = '/home/yangxl/files/save_model'
CKPT_FILE = '/home/yangxl/files/inception_v3.ckpt'

LEARNING_RATE = 0.0001
STEPS = 300
BATCH = 32
N_CLASSES = 5  # 5 kinds of flowers

CHECKPOINT_EXCLUDE_SCOPES = 'InceptionV3/Logits,InceptionV3/AuxLogits'
TRAINABLE_SCOPES = 'InceptionV3/Logits,InceptionV3/AuxLogits'

# Collect all variables that should be loaded from the pretrained model
def get_tuned_variables():
    exclusions = [scope.strip() for scope in CHECKPOINT_EXCLUDE_SCOPES.split(',')]
    variables_to_restore = []

    # Filter the variables
    for var in slim.get_model_variables():  # variables exist only after the Inception-v3 model has been defined
        excluded = False
        for exclusion in exclusions:
            if var.op.name.startswith(exclusion):
                excluded = True
                break
        if not excluded:
            variables_to_restore.append(var)
    return variables_to_restore

# Collect the list of variables that need to be trained
def get_trainable_variables():
    scopes = [scope.strip() for scope in TRAINABLE_SCOPES.split(',')]
    variables_to_train = []
    for scope in scopes:
        # scope is matched against variable names as a regular-expression prefix
        variables = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope)
        variables_to_train.extend(variables)
    return variables_to_train

def main(arg=None):
    # The saved array holds Python lists, so pickling must be allowed when loading
    processed_data = np.load(INPUT_DATA, allow_pickle=True)
    training_images = processed_data[0]
    n_training_example = len(training_images)
    training_labels = processed_data[1]
    validation_images = processed_data[2]
    validation_labels = processed_data[3]
    testing_images = processed_data[4]
    testing_labels = processed_data[5]
    print('%d training examples, %d validation examples and %d testing examples.'
          % (n_training_example, len(validation_labels), len(testing_labels)))

    images = tf.placeholder(tf.float32, [None, 299, 299, 3], name='input_images')
    labels = tf.placeholder(tf.int64, [None], name='labels')  # 5 kinds of flowers

    # Define the Inception-v3 model. Google's checkpoint only provides parameter values,
    # so the Inception-v3 structure has to be defined in this code.
    with slim.arg_scope(inception_v3.inception_v3_arg_scope()):
        # inception_v3.inception_v3_arg_scope() is a dict with two keys. The dicts returned
        # by nested arg_scope calls are merged together.
        # Layers called inside inception_v3.inception_v3 may use the arguments in this dict.
        logits, _ = inception_v3.inception_v3(images, num_classes=N_CLASSES)

    # Get the variables that need to be trained
    trainable_variables = get_trainable_variables()
    # print('==', len(trainable_variables), trainable_variables)
    '''
    [<tf.Variable 'InceptionV3/Logits/Conv2d_1c_1x1/weights:0' shape=(1, 1, 2048, 5) dtype=float32_ref>,
     <tf.Variable 'InceptionV3/Logits/Conv2d_1c_1x1/biases:0' shape=(5,) dtype=float32_ref>,
     <tf.Variable 'InceptionV3/AuxLogits/Conv2d_1b_1x1/weights:0' shape=(1, 1, 768, 128) dtype=float32_ref>,
     <tf.Variable 'InceptionV3/AuxLogits/Conv2d_1b_1x1/BatchNorm/beta:0' shape=(128,) dtype=float32_ref>,
     <tf.Variable 'InceptionV3/AuxLogits/Conv2d_2a_5x5/weights:0' shape=(5, 5, 128, 768) dtype=float32_ref>,
     <tf.Variable 'InceptionV3/AuxLogits/Conv2d_2a_5x5/BatchNorm/beta:0' shape=(768,) dtype=float32_ref>,
     <tf.Variable 'InceptionV3/AuxLogits/Conv2d_2b_1x1/weights:0' shape=(1, 1, 768, 5) dtype=float32_ref>,
     <tf.Variable 'InceptionV3/AuxLogits/Conv2d_2b_1x1/biases:0' shape=(5,) dtype=float32_ref>]
    '''
    # Define the loss. The regularization losses were already added to the loss collection
    # when the model was defined.
    tf.losses.softmax_cross_entropy(tf.one_hot(labels, N_CLASSES), logits, weights=1.0)

    # Define the training step. Only the variables under TRAINABLE_SCOPES are updated;
    # omitting var_list would train every variable in the network.
    train_step = tf.train.RMSPropOptimizer(LEARNING_RATE).minimize(
        tf.losses.get_total_loss(), var_list=trainable_variables)

    # Compute the accuracy
    with tf.name_scope('evaluation'):
        correct_prediction = tf.equal(tf.argmax(logits, 1), labels)
        evaluation_step = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    # Define the function that loads the model. It returns a callback; calling callback(sess)
    # loads the variables listed by get_tuned_variables() into the current graph.
    load_fn = slim.assign_from_checkpoint_fn(CKPT_FILE, get_tuned_variables(), ignore_missing_vars=True)

    # Define the saver for the newly trained model
    saver = tf.train.Saver()

    with tf.Session() as sess:
        # Initialize the variables that are not loaded from the checkpoint. This must happen
        # before loading the model, otherwise initialization would overwrite the loaded values.
        tf.global_variables_initializer().run()
        # Load the pretrained model
        print('Loading tuned variables from %s' % CKPT_FILE)
        load_fn(sess)

        start = 0
        end = BATCH
        for i in range(STEPS):
            sess.run(train_step, feed_dict={
                images: training_images[start: end],
                labels: training_labels[start: end]
            })

            # Log progress
            if i % 30 == 0 or i + 1 == STEPS:
                saver.save(sess, TRAIN_FILE, global_step=i)
                validation_accuracy = sess.run(evaluation_step, feed_dict={
                    images: validation_images, labels: validation_labels
                })
                print('Step %d: Validation accuracy = %.1f%%' % (i, validation_accuracy * 100.0))

            # Move to the next batch, wrapping around at the end of the training set
            start = end
            if start == n_training_example:
                start = 0
            end = start + BATCH
            if end > n_training_example:
                end = n_training_example
        test_accuracy = sess.run(evaluation_step, feed_dict={
            images: testing_images, labels: testing_labels
        })
        print('Final test accuracy = %.1f%%' % (test_accuracy * 100.0))

if __name__ == '__main__':
    tf.app.run()
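The two scope strings in the script decide which variables are restored from the checkpoint and which are trained. The sketch below reproduces that prefix filtering on plain strings, without TensorFlow. The variable names in `names` are illustrative examples in the style of the listing above, and the helper functions are hypothetical.

```python
def split_scopes(scopes):
    """Split a comma-separated scope string into a list of prefixes."""
    return [scope.strip() for scope in scopes.split(',')]


def names_to_restore(variable_names, exclude_scopes):
    """Keep every variable whose name does not start with an excluded scope."""
    exclusions = split_scopes(exclude_scopes)
    return [name for name in variable_names
            if not any(name.startswith(prefix) for prefix in exclusions)]


def names_to_train(variable_names, trainable_scopes):
    """Keep every variable whose name starts with one of the trainable scopes."""
    prefixes = split_scopes(trainable_scopes)
    return [name for name in variable_names
            if any(name.startswith(prefix) for prefix in prefixes)]


names = [
    'InceptionV3/Conv2d_1a_3x3/weights',
    'InceptionV3/Mixed_5b/Branch_0/Conv2d_0a_1x1/weights',
    'InceptionV3/AuxLogits/Conv2d_2b_1x1/weights',
    'InceptionV3/Logits/Conv2d_1c_1x1/weights',
    'InceptionV3/Logits/Conv2d_1c_1x1/biases',
]
scopes = 'InceptionV3/Logits,InceptionV3/AuxLogits'
restored = names_to_restore(names, scopes)
trained = names_to_train(names, scopes)
print(restored)
print(trained)
```

Since the same scopes are used for exclusion and for training, the two lists are complementary: the pretrained layers are restored and left alone, and the new output layers start from fresh initial values and are trained.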

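Execution notes:

The script ran for 12 hours, yet the TIME+ column in `top` showed only a little over 300 minutes. TIME+ counts the CPU time consumed by the process rather than elapsed wall-clock time, so the gap suggests the process spent most of the run waiting rather than computing.

During the run the `load average` was quite high, while the CPU and MEM usage of the process itself stayed low. One possible explanation, not verified here, is that the machine was busy moving pages between memory and swap.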
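The difference between elapsed time and CPU time can be reproduced on a small scale. The sketch below is a generic illustration, not a diagnosis of the run described above: it times a task that only waits, using `time.perf_counter` for wall-clock time and `time.process_time` for CPU time. The helper name `measure` is made up for the example.

```python
import time


def measure(task):
    """Return (wall-clock seconds, CPU seconds) spent running task()."""
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    task()
    wall_seconds = time.perf_counter() - wall_start
    cpu_seconds = time.process_time() - cpu_start
    return wall_seconds, cpu_seconds


# Sleeping stands in for any kind of waiting (disk, swap, network):
# the clock on the wall advances, but the process uses almost no CPU.
wall_seconds, cpu_seconds = measure(lambda: time.sleep(0.2))
print('wall: %.2fs, cpu: %.2fs' % (wall_seconds, cpu_seconds))
```

A process that waits a lot shows the same pattern in `top`: its accumulated CPU time grows much more slowly than the time it has been running.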