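1. If several MongoDB instances are installed on the same server, how should MongoDB be configured in telegraf.conf? The relevant settings are in the [[inputs.mongodb]] section under INPUT PLUGINS.

Single-instance configuration: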

servers = ["mongodb://UID:PWD@XXX.XXX.XXX.124:27218"]

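Incorrect multi-instance configuration (two instances in this example):

servers = ["mongodb://UID:PWD@XXX.XXX.XXX.124:27218"]

servers = ["mongodb://UID:PWD@XXX.XXX.XXX.124:27213"]

After restarting the service and checking its status, the following error is reported: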

Failed to start The plugin-driven server agent for reporting metrics into InfluxDB.

The correct configuration lists all instances in a single servers array:

servers = ["mongodb://UID:PWD@XXX.XXX.XXX.124:27213","mongodb://UID:PWD@XXX.XXX.XXX.124:27218"]

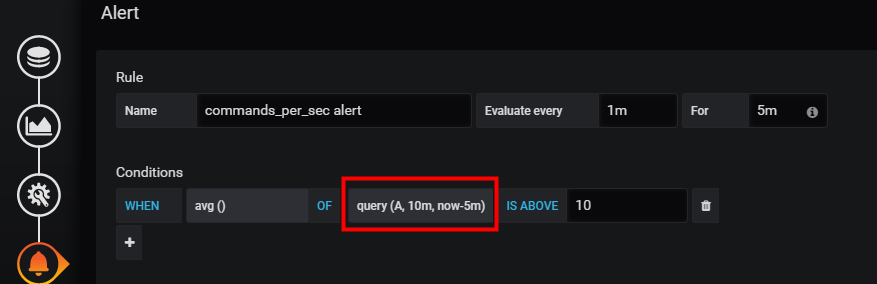

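2. After a Grafana alert rule was configured, it notified only once when the alert fired and once more on recovery; no notifications were sent while the problem persisted.

The fix is a setting under [Alerting] -> [Notification channels] -> [Send reminders].

For example, with reminders enabled and the interval set to 5 minutes, the alert notification is re-sent every 5 minutes while the alert remains active.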

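3. The alert check reports no data.

There are two possible causes:

(1) The agent that collects the metrics is not working.

(2) The agent is working, but it reports data late.

For the second case, the agent's collection frequency can be adjusted; if real-time requirements are not strict, the time range that the alert rule checks can be adjusted instead.

For example, change the check from the window between 5 minutes ago and 1 minute ago to the window between 10 minutes ago and 5 minutes ago. The corresponding settings are: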

Before (for a query with refId A): query(A, 5m, now-1m)

After: query(A, 10m, now-5m)
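A minimal sketch of why moving the window helps, assuming (hypothetically) that the newest data point arrives about 6 minutes late. The function name and the 6-minute figure are illustrative only and are not Grafana internals.

```python
def in_window(age_s, window_from_s, window_to_s):
    """True if a point that is age_s seconds old lies between now - window_from_s and now - window_to_s."""
    return window_to_s <= age_s <= window_from_s


# Hypothetical delay: the newest available point is 6 minutes (360 s) old.
NEWEST_POINT_AGE_S = 360

if __name__ == "__main__":
    # Window from 5 minutes ago to 1 minute ago: the point is too old, so the check sees no data.
    print(in_window(NEWEST_POINT_AGE_S, 300, 60))
    # Window from 10 minutes ago to 5 minutes ago: the point falls inside the window.
    print(in_window(NEWEST_POINT_AGE_S, 600, 300))
```

The trade-off is that the alert evaluates older data, so it reacts later by roughly the amount the window was shifted.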

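4. As the number of monitored items grows, collection inevitably takes longer, so the collection interval has to be increased.

Otherwise an error like the following is logged: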

telegraf[2908]: 2019-03-01T02:40:46Z E! Error in plugin [inputs.mysql]: took longer to collect than collection interval (10s)

Solution: increase the interval parameter in the [agent] section of telegraf.conf.

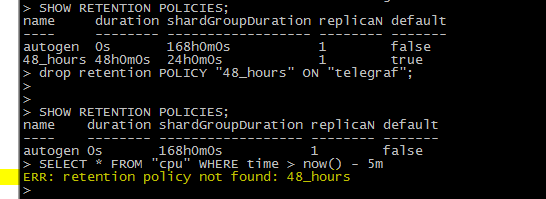

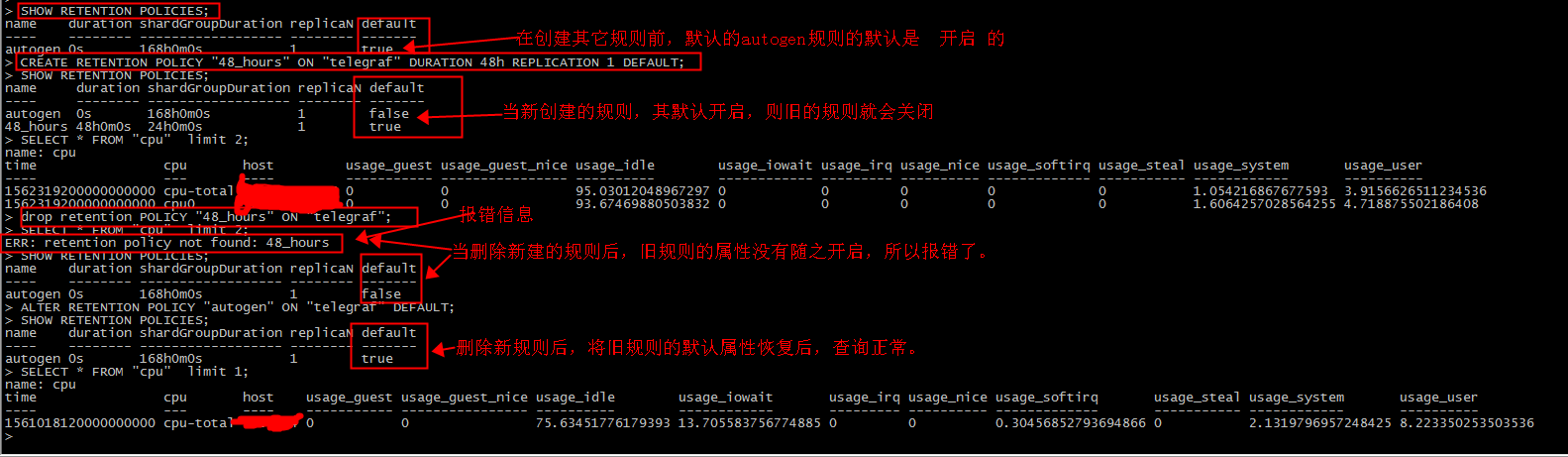
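To see which plugins overrun the interval before changing it, the log can be scanned for this message. The sketch below assumes the log lines have the same wording as the error shown above; the function name slow_plugins is hypothetical, and other Telegraf versions may phrase the message differently.

```python
import re

# Matches the error wording shown above; other Telegraf versions may differ.
OVERRUN = re.compile(
    r"Error in plugin \[(?P<plugin>[^\]]+)\]: "
    r"took longer to collect than collection interval \((?P<interval>[^)]+)\)"
)


def slow_plugins(lines):
    """Map each plugin that overran its interval to the interval reported in the log."""
    found = {}
    for line in lines:
        match = OVERRUN.search(line)
        if match:
            found[match["plugin"]] = match["interval"]
    return found


if __name__ == "__main__":
    sample = [
        "telegraf[2908]: 2019-03-01T02:40:46Z E! Error in plugin [inputs.mysql]: "
        "took longer to collect than collection interval (10s)",
    ]
    print(slow_plugins(sample))
```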

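5. Deleting an existing InfluxDB retention policy is not recommended; after the deletion described here, both queries and writes failed.

For example, suppose the following policy was created: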

CREATE RETENTION POLICY "48_hours" ON "telegraf" DURATION 48h REPLICATION 1 DEFAULT;

To list the policies:

SHOW RETENTION POLICIES;

Then run the delete command:

DROP RETENTION POLICY "48_hours" ON "telegraf";

Querying data now fails with the following error:

ERR: retention policy not found: 48_hours

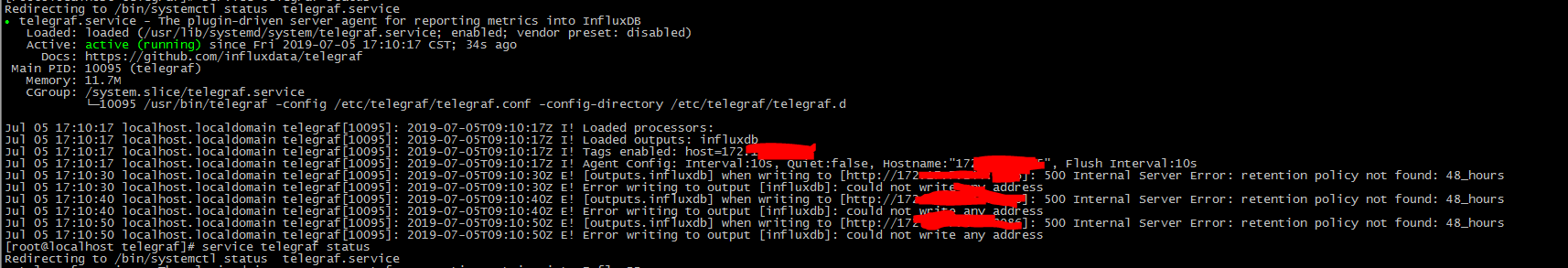

The telegraf collectors also start reporting errors.

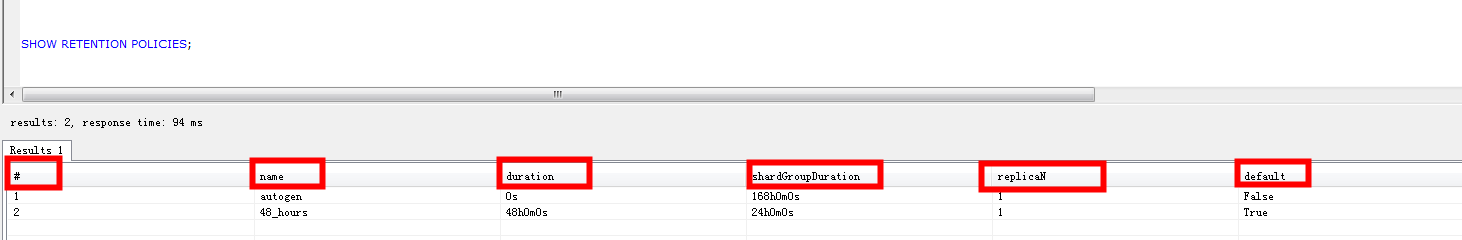

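Background: fields shown by SHOW RETENTION POLICIES

| Field | Description |
| --- | --- |
| name | Policy name; autogen in this example |
| duration | How long data is kept; 0 means unlimited |
| shardGroupDuration | Time span covered by each shard group, a basic storage structure in InfluxDB; queries on data older than this span are presumably somewhat slower |
| replicaN | Short for REPLICATION; the number of replicas |
| default | Whether this is the default policy |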

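Solution:

The newly created policy had been made the default (it was created with DEFAULT). Once it was deleted, the database had no default policy, so one of the remaining policies must be set as the default.

In this example the original autogen policy is made the default again, for instance with ALTER RETENTION POLICY "autogen" ON "telegraf" DEFAULT; the complete test session follows.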

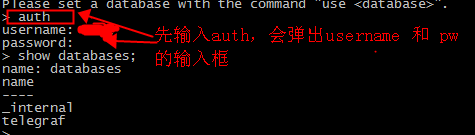
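The lesson can be turned into a small pre-check: refuse to drop a policy while it is still the default. This is only a sketch; the function name can_drop and the list-of-pairs input are hypothetical, with the pairs standing in for the name and default columns read from SHOW RETENTION POLICIES.

```python
def can_drop(policies, name):
    """Return True if the named policy exists and is not the default.

    policies: list of (policy_name, is_default) pairs, copied by hand or by a
    script from the output of SHOW RETENTION POLICIES.
    """
    for policy_name, is_default in policies:
        if policy_name == name:
            return not is_default
    raise KeyError(f"retention policy not found: {name}")


if __name__ == "__main__":
    # State of the telegraf database before the deletion in this example.
    policies = [("autogen", False), ("48_hours", True)]
    print(can_drop(policies, "48_hours"))  # the default policy, so dropping is unsafe
    print(can_drop(policies, "autogen"))
```

If the check fails, make another policy the default first and only then drop the old one.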

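6. How to authenticate after login accounts have been enabled in InfluxDB.

After connecting, you must authenticate; otherwise commands fail with the following error: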

ERR: unable to parse authentication credentials

Run the auth command, then enter the username and password when prompted.

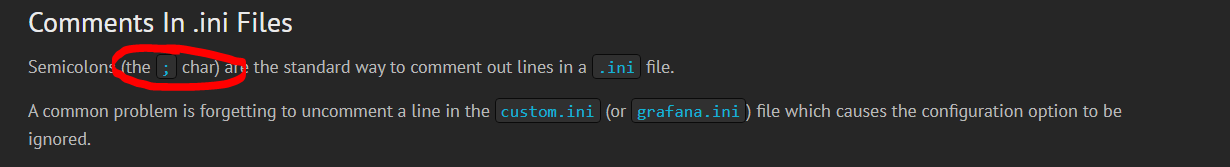

7. Grafana alert email configuration

(1) SMTP had been configured, yet the following error appeared:

"Failed to send alert notification email" logger=alerting.notifier.email error="SMTP not configured, check your grafana.ini config file's [smtp] section"

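The cause: in grafana.ini many lines are commented out with a semicolon (;), so a setting has no effect until its leading ; is removed.

(2) Unlike the mail service configuration on a Linux system, the host must include the port (for example, 25); otherwise the following error appears: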

"Failed to send alert notification email" logger=alerting.notifier.email error="address ygmail.yiguo.com: missing port in address"

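(3) Unless there is a specific need, set skip_verify to true (this skips verification of the mail server's certificate); otherwise the following error appears: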

"Failed to send alert notification email" logger=alerting.notifier.email error="x509: certificate is valid for XXXXXX"

The mail-related part of grafana.ini therefore looks like this:

    #################################### SMTP / Emailing ##########################
    [smtp]
    enabled = true
    host = <mail server address>:<port>
    user = <username>
    # If the password contains # or ; you have to wrap it with triple quotes. Ex """#password;"""
    password = XXXXXXX
    ;cert_file =
    ;key_file =
    skip_verify = true
    from_address = <alert sender address>
    from_name = Grafana

    [emails]
    ;welcome_email_on_sign_up = false

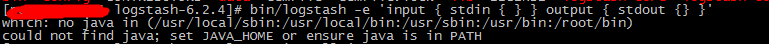
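The first two pitfalls can be checked mechanically. The sketch below uses Python's standard configparser, which also treats lines starting with ; or # as comments. The function name smtp_problems is hypothetical, and the sketch assumes every active key in the file sits under a section header.

```python
import configparser


def smtp_problems(ini_text):
    """Return a list of likely problems in the [smtp] section of a grafana.ini text."""
    # interpolation=None keeps '%' in passwords from being treated specially.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ini_text)
    if not parser.has_section("smtp"):
        return ["no [smtp] section"]
    smtp = parser["smtp"]
    problems = []
    # A line such as ';enabled = true' is a comment, so the key is simply absent.
    if smtp.get("enabled", "false").strip().lower() != "true":
        problems.append("enabled is not true (is the line still commented out with ';'?)")
    # Grafana expects host:port in the host value.
    if ":" not in smtp.get("host", ""):
        problems.append("host has no port")
    return problems


if __name__ == "__main__":
    sample = "[smtp]\n;enabled = true\nhost = mail.example.com\n"
    for problem in smtp_problems(sample):
        print(problem)
```

Here mail.example.com is a placeholder host; in practice the text would be read from the real grafana.ini file.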

8. While building a log collection system, we downloaded Logstash and the verification run failed.

Verification command:

bin/logstash -e 'input { stdin { } } output { stdout {} }'

The run fails because Java cannot be found:

    which: no java in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin)
    could not find java; set JAVA_HOME or ensure java is in PATH

Solution:

yum install java
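Before rerunning Logstash, a quick check can confirm that a java executable is now discoverable. The sketch follows the hint in the error message (JAVA_HOME or PATH); the function name find_java and the lookup order are assumptions, not Logstash's actual startup logic.

```python
import os
import shutil


def find_java(env=None, which=shutil.which):
    """Return the path of a java executable, or None if none is found.

    Looks at JAVA_HOME/bin/java first, then falls back to a PATH lookup.
    env and which are injectable so the function can be exercised without Java installed.
    """
    env = os.environ if env is None else env
    java_home = env.get("JAVA_HOME")
    if java_home:
        candidate = os.path.join(java_home, "bin", "java")
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return which("java")


if __name__ == "__main__":
    path = find_java()
    print(path if path else "java not found; set JAVA_HOME or add java to PATH")
```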

Verify again:

    [root@QQWeiXin-0081 logstash-6.2.4]# bin/logstash -e 'input { stdin { } } output { stdout {} }'
    Sending Logstash's logs to /data/logstash/logstash-6.2.4/logs which is now configured via log4j2.properties
    [2018-09-23T17:29:46,228][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"fb_apache", :directory=>"/data/logstash/logstash-6.2.4/modules/fb_apache/configuration"}
    [2018-09-23T17:29:46,243][INFO ][logstash.modules.scaffold] Initializing module {:module_name=>"netflow", :directory=>"/data/logstash/logstash-6.2.4/modules/netflow/configuration"}
    [2018-09-23T17:29:46,335][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.queue", :path=>"/data/logstash/logstash-6.2.4/data/queue"}
    [2018-09-23T17:29:46,342][INFO ][logstash.setting.writabledirectory] Creating directory {:setting=>"path.dead_letter_queue", :path=>"/data/logstash/logstash-6.2.4/data/dead_letter_queue"}
    [2018-09-23T17:29:46,661][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
    [2018-09-23T17:29:46,702][INFO ][logstash.agent ] No persistent UUID file found. Generating new UUID {:uuid=>"c9e6fd92-0171-4a2b-87e5-36b98c21db16", :path=>"/data/logstash/logstash-6.2.4/data/uuid"}
    [2018-09-23T17:29:47,274][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.2.4"}
    [2018-09-23T17:29:47,607][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
    [2018-09-23T17:29:49,568][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>40, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}
    [2018-09-23T17:29:49,739][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#<Thread:0x718a7b78 sleep>"}
    The stdin plugin is now waiting for input:
    [2018-09-23T17:29:49,815][INFO ][logstash.agent ] Pipelines running {:count=>1, :pipelines=>["main"]}
    { "message" => "", "@version" => "1", "@timestamp" => 2018-09-23T09:30:24.535Z, "host" => "QQWeiXin-0081" }
    { "message" => "", "@version" => "1", "@timestamp" => 2018-09-23T09:30:24.969Z, "host" => "QQWeiXin-0081" }
    { "message" => "", "@version" => "1", "@timestamp" => 2018-09-23T09:30:25.189Z, "host" => "QQWeiXin-0081" }