Download the lyrics of an English song or an English article

# Paste the downloaded text between the triple quotes.
news=''' '''

Generate the word frequency counts

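# Punctuation marks to replace with spaces
sep=''',.;:''""'''
for c in sep:
    news=news.replace(c,' ')

# Lowercase the text and split it into words
wordlist=news.lower().split()

# Method 1: walk the list and count each word as it appears
wordDict={}
for w in wordlist:
    wordDict[w]=wordDict.get(w,0)+1

'''
# Method 2: count each distinct word with list.count
wordSet=set(wordlist)
for w in wordSet:
    wordDict[w]=wordlist.count(w)
'''

for w in wordDict:
    print(w, wordDict[w])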

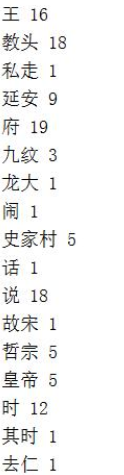

部分演示效果如下图所示:
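The same counting step can also be written with `collections.Counter` from the standard library. The following is a minimal sketch, not the method used in this exercise: the helper name `count_words` and the sample sentence are assumptions made for illustration, and the separator string holds the same six characters as `sep` above.

```python
from collections import Counter

def count_words(text, sep=",.;:'\""):
    """Replace each separator with a space, lowercase, split, and count."""
    for c in sep:
        text = text.replace(c, ' ')
    return Counter(text.lower().split())

# Illustrative sample text, standing in for the downloaded lyrics or article
sample = "The cat sat. The dog sat, too; the end."
counts = count_words(sample)
print(counts['the'], counts['sat'])
```

A `Counter` returns 0 for a word it has never seen, so no `get(w,0)` default is needed when looking up counts.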

Sorting

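# Count each distinct word
wordSet=set(wordlist)
for w in wordSet:
    wordDict[w]=wordlist.count(w)

# Convert to a list of (word, count) pairs and sort by count, descending
dictList=list(wordDict.items())
dictList.sort(key=lambda x:x[1],reverse=True)
print(dictList)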

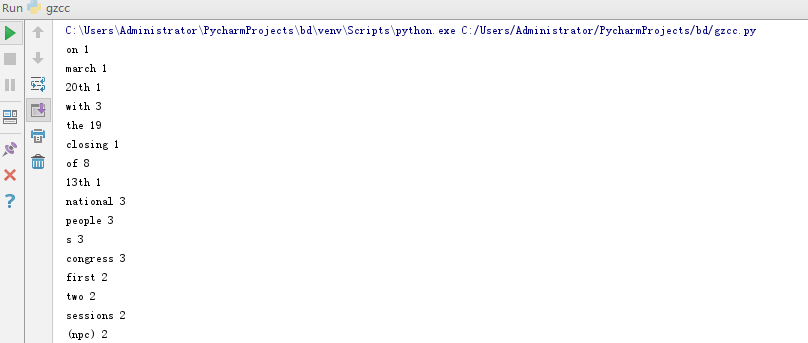

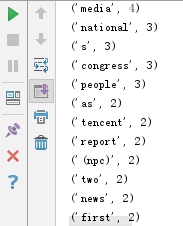

效果演示如下图所示:
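`list.sort` is stable, so words with equal counts keep whatever order they had before sorting. If a reproducible order is wanted, a compound key can break ties alphabetically. A minimal sketch with made-up counts (`word_counts` and `ranked` are illustrative names, not part of the exercise):

```python
# Made-up counts for illustration only
word_counts = {'love': 3, 'you': 5, 'baby': 3, 'oh': 1}

# Sort by count descending (negated), then by word ascending to break ties
ranked = sorted(word_counts.items(), key=lambda kv: (-kv[1], kv[0]))
print(ranked)
```

Negating the count lets a single ascending sort order the counts from high to low while still ordering tied words from a to z.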

Exclude function words: pronouns, articles, conjunctions

exclude={'the','a','an','and','of','with','to','by','am','are','is','which','on'}

# Start from an empty dict so the excluded words counted earlier are dropped
wordDict={}
wordSet=set(wordlist)-exclude
for w in wordSet:
    wordDict[w]=wordlist.count(w)

dictList=list(wordDict.items())
dictList.sort(key=lambda x:x[1],reverse=True)
print(dictList)

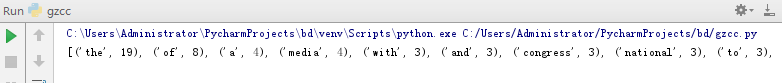

效果演示如下图所示:
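Calling `wordlist.count(w)` rescans the whole list once for every distinct word, which becomes slow on long texts. One alternative is to skip the excluded words during a single pass over the list. A minimal sketch, assuming a hypothetical helper `count_without` and a short made-up sentence:

```python
def count_without(words, exclude):
    """Count words in one pass, skipping any word found in exclude."""
    counts = {}
    for w in words:
        if w not in exclude:
            counts[w] = counts.get(w, 0) + 1
    return counts

# Illustrative input only
words = "the sun and the moon and the stars".split()
filtered = count_without(words, {'the', 'and'})
print(filtered)
```

Because the excluded words never enter the dictionary, nothing has to be deleted afterwards.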

Output the 20 most frequent words (TOP 20)

# Slicing avoids an IndexError when there are fewer than 20 distinct words
for item in dictList[:20]:
    print(item)

The output is shown in the figure below:

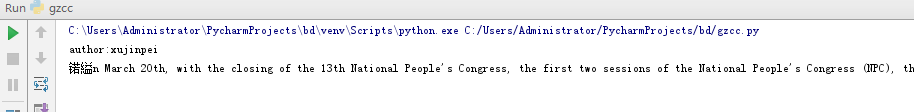

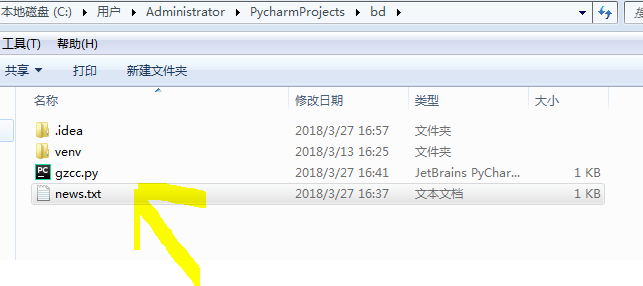
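When only the top few entries are needed, `heapq.nlargest` from the standard library can select them without sorting the whole list first. This is a sketch of an alternative rather than the approach used above; the helper name `top_n` and the sample counts are assumptions for illustration.

```python
import heapq

def top_n(counts, n=20):
    """Return up to n (word, count) pairs with the highest counts."""
    return heapq.nlargest(n, counts.items(), key=lambda kv: kv[1])

# Made-up counts for illustration only
sample_counts = {'x': 1, 'y': 4, 'z': 2}
print(top_n(sample_counts, 2))
print(len(top_n(sample_counts)))  # fewer than 20 words: returns all of them
```

Like slicing, this returns a shorter list instead of raising an error when the text has fewer than `n` distinct words.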

Save the text to be analyzed as a UTF-8 encoded file, then read that file to obtain the content for word frequency analysis.

print('author:xujinpei')

# The file is saved as UTF-8, so state the encoding explicitly when opening it
with open('news.txt','r',encoding='utf-8') as f:
    news=f.read()

print(news)

The output is shown in the figure below:

Chinese word frequency statistics: download a long Chinese article.

import jieba

# Open the file
with open("gzccnews.txt",'r',encoding="utf-8") as file:
    notes = file.read()

# Replace punctuation marks with spaces
sep = ''':。,?!;∶ ...“”'''
for i in sep:
    notes = notes.replace(i,' ')

# Segment the text into words
notes_list = list(jieba.cut(notes))

# Words to exclude
exclude =[' ','\n','你','我','他','和','但','了','的','来','是','去','在','上','高']

# Method 2: iterate over the list
notes_dict={}
for w in notes_list:
    notes_dict[w] = notes_dict.get(w,0)+1

# Remove the unwanted words; pop with a default does not fail if a word is absent
for w in exclude:
    notes_dict.pop(w,None)

for w in notes_dict:
    print(w,notes_dict[w])

# Sort in descending order of frequency
dictList = list(notes_dict.items())
dictList.sort(key=lambda x:x[1],reverse=True)
print(dictList)

# Output the 20 most frequent words (TOP 20)
for item in dictList[:20]:
    print(item)

# Save the result to a file (append mode, so repeated runs add to the file)
with open("top20.txt","a",encoding="utf-8") as outfile:
    for word,count in dictList[:20]:
        outfile.write(word+" "+str(count)+"\n")

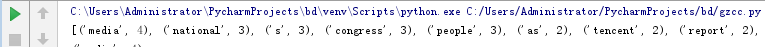

效果演示如下图所示:
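The step that saves the results can be checked by writing the pairs to a file and reading them back. The sketch below uses a temporary directory so it leaves nothing behind; the helper name `save_top`, the file name `top20_sample.txt`, and the three sample pairs are assumptions for illustration and are not output from the article analyzed above.

```python
import os
import tempfile

def save_top(pairs, path, n=20):
    """Write up to n (word, count) pairs, one per line, as UTF-8."""
    with open(path, 'w', encoding='utf-8') as out:
        for word, count in pairs[:n]:
            out.write(f"{word} {count}\n")

# Made-up (word, count) pairs, already sorted in descending order
ranked_sample = [('学院', 12), ('学生', 9), ('活动', 4)]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'top20_sample.txt')
    save_top(ranked_sample, path)
    # Read the file back with the same encoding to confirm the contents
    with open(path, encoding='utf-8') as f:
        lines = f.read().splitlines()

print(lines)
```

Passing `encoding='utf-8'` on both the write and the read keeps Chinese words intact regardless of the platform's default encoding. Opening with `'w'` overwrites the file on each run, whereas the `'a'` mode used above appends to it.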