KNN

Overview:

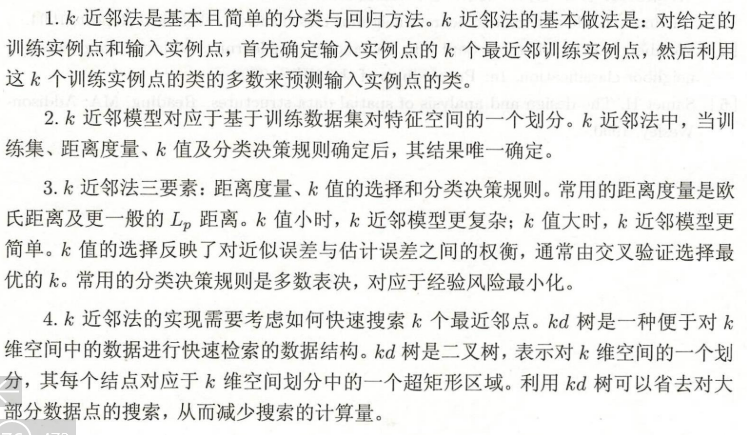

K-nearest neighbors (KNN): assign an input sample to the class that occurs most often among its K nearest training samples.
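The voting step in this definition can be sketched on its own, separate from the distance computation. The function name `majority_vote` and the sample labels are illustrative assumptions, not part of the original notes.

```python
from collections import Counter


def majority_vote(neighbor_labels):
    """Return the most frequent label among the K nearest neighbors."""
    # most_common(1) returns a one-element list holding (label, count)
    # for the label that occurs most often.
    return Counter(neighbor_labels).most_common(1)[0][0]


# Hypothetical labels of the K = 5 nearest training samples
print(majority_vote(["A", "B", "A", "C", "A"]))  # A
```

An odd K is a common choice for two-class problems because it avoids tied votes.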

Three basic elements: the choice of K, the definition of distance, and the classification decision rule.
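Of the three elements, the distance definition is the easiest to show in code. A minimal sketch, assuming the Minkowski family of distances (a common choice, though the notes do not name one here); the function name `minkowski` is hypothetical.

```python
import numpy as np


def minkowski(a, b, p=2):
    """Minkowski distance: p=1 is Manhattan, p=2 is Euclidean."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sum(np.abs(a - b) ** p) ** (1.0 / p))


a, b = [0.0, 0.0], [3.0, 4.0]
print(minkowski(a, b, p=1))  # 7.0
print(minkowski(a, b, p=2))  # 5.0
```

Changing `p` changes which training samples count as "nearest", so the same K can give different predictions under different metrics.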

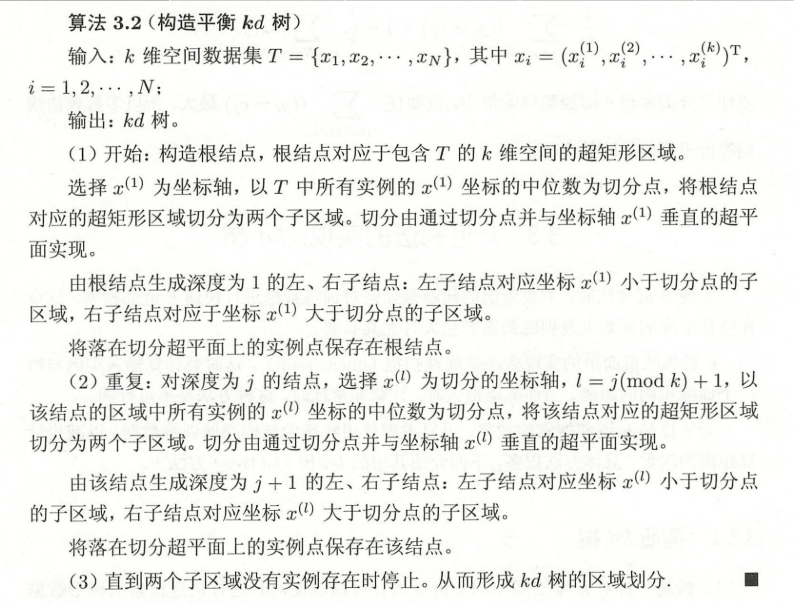

Implementing KNN (building it by hand is the best way to understand the algorithm): the kd-tree

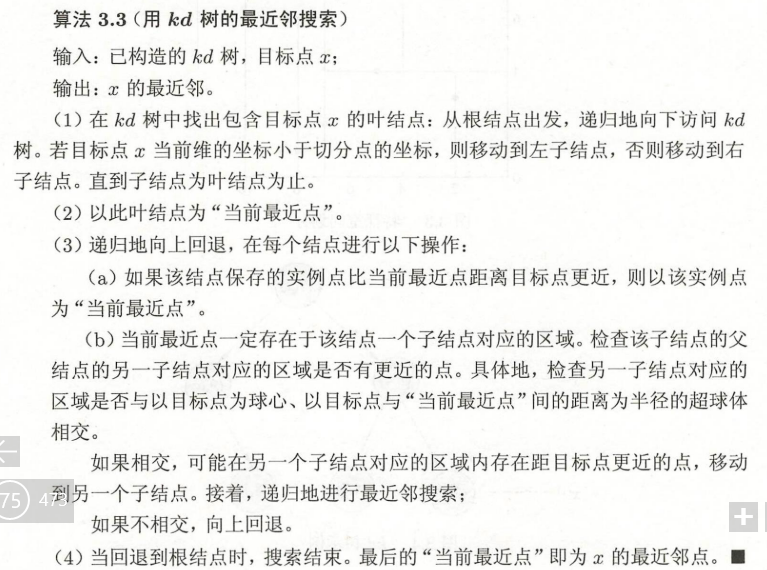
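As a rough sketch of how a kd-tree might be built, the code below splits on the median point and cycles through the axes by depth. The `Node` layout, the function name `build_kdtree`, and the sample points are assumptions for illustration; the figure above may use a different construction.

```python
from collections import namedtuple

# Hypothetical node layout: splitting point, split axis, and two subtrees
Node = namedtuple("Node", ["point", "axis", "left", "right"])


def build_kdtree(points, depth=0):
    """Recursively build a kd-tree by splitting on the median point."""
    if not points:
        return None
    axis = depth % len(points[0])  # cycle through the dimensions
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2  # index of the median along this axis
    return Node(
        point=points[mid],
        axis=axis,
        left=build_kdtree(points[:mid], depth + 1),
        right=build_kdtree(points[mid + 1:], depth + 1),
    )


tree = build_kdtree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
print(tree.point)  # (7, 2)
```

Sorting at every level keeps the sketch short; a production implementation would typically select the median without a full sort.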

Nearest-neighbor search with a kd-tree:
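One way to sketch the search: descend into the side of the splitting plane that contains the target first, then visit the other side only if the plane is closer than the best match found so far. The helper names `build` and `nearest`, the tuple node layout, and the sample points are assumptions made for this example.

```python
import math


def build(points, depth=0):
    """Compact kd-tree builder returning (point, axis, left, right) tuples."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return (points[mid], axis,
            build(points[:mid], depth + 1),
            build(points[mid + 1:], depth + 1))


def nearest(node, target, best=None):
    """Return (distance, point) for the stored point closest to target."""
    if node is None:
        return best
    point, axis, left, right = node
    d = math.dist(point, target)  # Euclidean distance (Python 3.8+)
    if best is None or d < best[0]:
        best = (d, point)
    diff = target[axis] - point[axis]
    near, far = (left, right) if diff < 0 else (right, left)
    best = nearest(near, target, best)
    # Visit the far side only if the splitting plane is closer than the best match
    if abs(diff) < best[0]:
        best = nearest(far, target, best)
    return best


tree = build([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
print(nearest(tree, (9, 2))[1])  # (8, 1)
```

The pruning test is what saves work compared with brute force: whole subtrees are skipped when they cannot contain a closer point.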

Implementing knn_classify:

    #%% How it works (from scratch)
    from collections import Counter  # used for majority voting

    import numpy as np
    from sklearn import datasets
    from sklearn.model_selection import train_test_split

    # Load the iris data
    iris = datasets.load_iris()
    X = iris.data
    y = iris.target

    # help(train_test_split)
    # random_state acts as a random seed: it makes every run produce the same
    # train/test split. Otherwise the same model would score differently
    # on different splits.
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, random_state=20, test_size=0.3)


    def dis(instance1, instance2):
        """Euclidean distance."""
        # Square each difference first, then sum, then take the square root
        return np.sqrt(np.sum((instance1 - instance2) ** 2))


    def class_knn(X, y, instance, k):
        """The result changes with k.

        Compute the distance from instance to every sample in X, then return
        the most common class among the k nearest samples.
        """
        distance = [dis(x, instance) for x in X]
        neighbors = np.argsort(distance)[:k]  # indices of the k smallest distances
        count = Counter(y[neighbors])
        # most_common() lists (label, count) pairs, most frequent first
        return count.most_common()[0][0]


    # Notes:
    # np.argsort(-x) sorts in descending order
    # x[np.argsort(x)] is the array sorted via the index order

    predictions = np.array([class_knn(X_train, y_train, data, 3) for data in X_test])
    np.vstack((y_test, predictions))  # stack the two for side-by-side comparison
    correct = np.count_nonzero(predictions == y_test)
    print("Accuracy is: %.3f" % (correct / len(X_test)))

    #%% Using the library
    import numpy as np
    from sklearn import datasets
    from sklearn.metrics import accuracy_score
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier

    iris = datasets.load_iris()
    x = iris.data
    y = iris.target
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3)

    # Define the model
    knn = KNeighborsClassifier(n_neighbors=3)  # k=3
    knn.fit(x_train, y_train)  # fit the model
    knn.predict(x_test)
    knn.classes_
    knn.get_params()

    # Compute the accuracy on this cell's own split (x_test, not X_test)
    correct = np.count_nonzero(knn.predict(x_test) == y_test)
    # Equivalent: accuracy_score(y_test, knn.predict(x_test))
    print("Accuracy is: %.3f" % (correct / len(x_test)))
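The `class_knn` docstring notes that the result changes with k. One possible way to pick k is to compare cross-validated accuracy over a few candidates. This is a sketch, not the method used in these notes: the candidate list and the 5-fold setting are assumptions.

```python
from sklearn import datasets
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
candidate_ks = [1, 3, 5, 7, 9, 11]  # hypothetical search range

# Mean 5-fold cross-validated accuracy for each candidate k
mean_scores = {
    k: cross_val_score(KNeighborsClassifier(n_neighbors=k),
                       iris.data, iris.target, cv=5).mean()
    for k in candidate_ks
}

best_k = max(mean_scores, key=mean_scores.get)
for k, score in mean_scores.items():
    print("k=%d  mean accuracy=%.3f" % (k, score))
print("best k:", best_k)
```

Cross-validation averages over several splits, so the comparison between k values depends less on one particular train/test split.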

KNN: performance in regression (learning)