Python爬虫视频教程零基础小白到scrapy爬虫高手-轻松入门

https://item.taobao.com/item.htm?spm=a1z38n.10677092.0.0.482434a6EmUbbW&id=564564604865

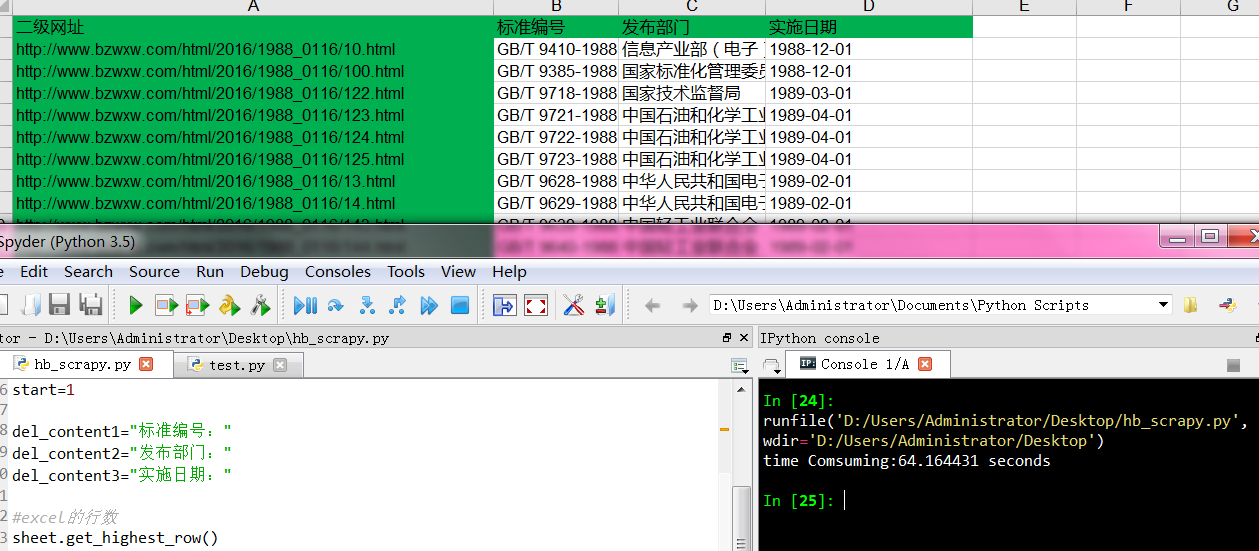

顺利100网站64秒

200网站570秒就搞不懂了,差距太大了。。

# -*- coding: utf-8 -*-

"""

Created on Tue Mar 15 08:53:08 2016

采集化工标准补录项目

@author: Administrator

"""

import requests,bs4,openpyxl,time

from openpyxl.cell import get_column_letter,column_index_from_string

#开始时间

timeBegin=time.clock()

excelName="hb_sites.xlsx"

sheetName="Sheet1"

wb1=openpyxl.load_workbook(excelName)

sheet=wb1.get_sheet_by_name(sheetName)

start=1

del_content1="标准编号:"

del_content2="发布部门:"

del_content3="实施日期:"

#excel的行数

sheet.get_highest_row()

#excel的列数

sheet.get_highest_column()

requests.codes.ok

#每个网站爬取相应数据

def Craw(site):

content_list=[]

res=requests.get(site)

res.encoding = 'gbk'

soup1=bs4.BeautifulSoup(res.text,"lxml")

StandardCode=soup1.select('h5')

for i in StandardCode:

content=i.getText()

content_list.append(content)

for i in content_list:

if "标准编号" in i:

i=i.strip(del_content1)

sheet['B'+str(row)].value=i

if "发布部门" in i:

i=i.strip(del_content2)

sheet['C'+str(row)].value=i

if "实施日期" in i:

i=i.strip(del_content3)

sheet['D'+str(row)].value=i

def TimeCount():

timeComsuming=timeEnd-timeBegin

print ("time Comsuming:%f seconds" % timeComsuming)

return timeComsuming

for row in range(2,200+1):

site=sheet['A'+str(row)].value

try:

Craw(site)

except:

continue

wb1.save(excelName)

#结束时间

timeEnd=time.clock()

timeComsuming=TimeCount()