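1. System environment

The system is a 64-bit CentOS 7 instance on Alibaba Cloud.

2. Installation method

There are two ways to install Hadoop: unpack a prebuilt binary package, or build it yourself from source. This post builds from source, because the binary package downloaded from the official site (hadoop-2.7.4.tar.gz) was built for 32-bit systems while our system is 64-bit, so it is best to build a 64-bit package ourselves. A 64-bit package built by someone else would also work.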
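Before choosing between the two approaches, it can help to confirm that the machine really is 64-bit. The following is a minimal sketch: the function name `is_64bit_arch` is made up for illustration, and the list of architecture names is an assumption covering common 64-bit values printed by `uname -m`, not an exhaustive list.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): succeeds when the given
# machine name, as printed by `uname -m`, is a common 64-bit architecture.
is_64bit_arch() {
    case "$1" in
        x86_64|amd64|aarch64|arm64|ppc64le|s390x) return 0 ;;
        *) return 1 ;;
    esac
}

# Report the result for the current machine.
if is_64bit_arch "$(uname -m)"; then
    echo "64-bit system: $(uname -m)"
else
    echo "not recognized as 64-bit: $(uname -m)"
fi
```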

三、安装依赖

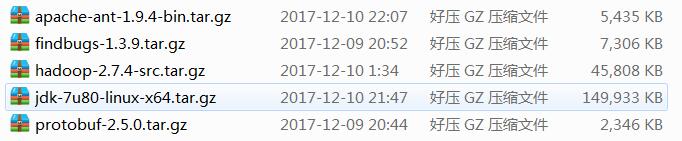

在编译Hadoop之前需要安装一些依赖软件,主要是Jdk、ant、findbugs和protobuf等,加上从官网下载的Hadoop源码包共需要5个安装包:

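3.1 Create the directories for the environment

1) Create three directories:

- tools: holds the downloaded installation packages

- softwares: where the software is installed

- data: data storage

I created the three directories under /home.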

    [root@hadoop home]# mkdir tools
    [root@hadoop home]# mkdir softwares
    [root@hadoop home]# mkdir data
    [root@hadoop home]# ll
    total 12
    drwxr-xr-x 2 root root 4096 Dec 11 11:22 data
    drwxr-xr-x 9 root root 4096 Dec 11 11:05 softwares
    drwxr-xr-x 2 root root 4096 Dec 10 22:14 tools
    [root@hadoop home]#
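The same layout can be created in one step that is safe to repeat. This is only a sketch: `create_layout` is a hypothetical helper name, and the base directory is passed in as an argument rather than hard-coded.

```shell
#!/bin/sh
# Hypothetical helper: create the tools/softwares/data layout under a base directory.
create_layout() {
    base_dir="$1"
    # -p creates missing parents and does not fail if a directory already exists.
    mkdir -p "$base_dir/tools" "$base_dir/softwares" "$base_dir/data"
}

# Example: the article uses /home as the base directory.
# create_layout /home
```

Because of `mkdir -p`, running it a second time leaves the existing directories untouched.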

2) Install the JDK, Ant, and FindBugs: upload the corresponding packages shown in the figure above to the tools directory and extract them.

    [root@hadoop tools]# tar -zxvf apache-ant-1.9.4-bin.tar.gz -C /home/softwares/
    [root@hadoop tools]# tar -zxvf findbugs-1.3.9.tar.gz -C /home/softwares/
    [root@hadoop tools]# tar -zxvf jdk-7u80-linux-x64.tar.gz -C /home/softwares/
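Since every package is unpacked the same way, the extraction can be written as a loop. A minimal sketch, assuming all archives in the source directory are `.tar.gz` files that should land in the same destination; `extract_all` is a hypothetical name. Note that it extracts every archive it finds, so the protobuf and Hadoop source packages would be unpacked too if they are already in the directory.

```shell
#!/bin/sh
# Hypothetical helper: extract every .tar.gz archive found in SRC_DIR into DEST_DIR.
extract_all() {
    src_dir="$1"
    dest_dir="$2"
    mkdir -p "$dest_dir" || return 1
    for archive in "$src_dir"/*.tar.gz; do
        # If nothing matched, the pattern stays literal; skip it.
        [ -f "$archive" ] || continue
        echo "extracting $archive"
        tar -zxf "$archive" -C "$dest_dir" || return 1
    done
}

# Example with the article's paths:
# extract_all /home/tools /home/softwares
```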

Configure the environment variables (edit /etc/profile):

    export JAVA_HOME=/home/softwares/jdk1.7.0_80
    export ANT_HOME=/home/softwares/apache-ant-1.9.4
    export FINDBUGS_HOME=/home/softwares/findbugs-1.3.9
    export PATH=$PATH:$FINDBUGS_HOME/bin:$ANT_HOME/bin:$JAVA_HOME/bin

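Apply the configuration and verify it. Note that java -version below reports OpenJDK 1.8.0_151 rather than the JDK 1.7.0_80 that was just unpacked: the new directories are appended to the end of PATH, so a java that already exists earlier on PATH takes precedence. If the unpacked JDK should be the one found on the command line, put $JAVA_HOME/bin ahead of $PATH.

    [root@hadoop tools]# source /etc/profile
    [root@hadoop tools]# java -version
    openjdk version "1.8.0_151"
    OpenJDK Runtime Environment (build 1.8.0_151-b12)
    OpenJDK 64-Bit Server VM (build 25.151-b12, mixed mode)
    [root@hadoop tools]# ant -version
    Apache Ant(TM) version 1.9.4 compiled on April 29 2014
    [root@hadoop tools]# findbugs -version
    1.3.9
    [root@hadoop tools]#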
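The java -version output above shows an OpenJDK build rather than the JDK under JAVA_HOME, which is what happens when another java sits earlier on PATH. One way to make a directory win the lookup is to move it to the front. The sketch below is an illustration only; `prepend_dir` is a hypothetical helper that works on a path string and prints the result, so it does not change the environment by itself.

```shell
#!/bin/sh
# Hypothetical helper: print PATH_STRING with DIR placed first.
# Usage: prepend_dir DIR PATH_STRING
prepend_dir() {
    dir="$1"
    rest=""
    # Split the path string on ':' and drop empty entries and old copies of DIR.
    old_ifs="$IFS"
    IFS=":"
    for entry in $2; do
        if [ -n "$entry" ] && [ "$entry" != "$dir" ]; then
            rest="${rest:+$rest:}$entry"
        fi
    done
    IFS="$old_ifs"
    echo "${dir}${rest:+:$rest}"
}

# Example for /etc/profile, after JAVA_HOME has been exported:
# PATH="$(prepend_dir "$JAVA_HOME/bin" "$PATH")"; export PATH
```

After sourcing the profile again, `command -v java` shows which binary is picked up.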

3) Install the remaining dependencies (requires network access)

    [root@hadoop tools]# yum -y install maven svn ncurses-devel gcc* lzo-devel zlib-devel autoconf automake libtool cmake openssl-devel

4) Install protobuf: upload the corresponding package shown in the figure above to the tools directory and extract it.

    [root@hadoop tools]# tar -zxvf protobuf-2.5.0.tar.gz -C /home/softwares/

Build, install, and verify protobuf:

    [root@hadoop tools]# cd /home/softwares/protobuf-2.5.0/
    [root@hadoop protobuf-2.5.0]# ./configure
    [root@hadoop protobuf-2.5.0]# make && make install
    [root@hadoop protobuf-2.5.0]# protoc --version
    libprotoc 2.5.0
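The version printed by protoc matters: as far as I know, the Hadoop 2.7 build expects protoc 2.5.0 specifically, so it is worth checking the string before starting a long build. A small sketch, with `protoc_version_ok` as a hypothetical helper name and 2.5.0 as the default it compares against:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if VERSION_LINE (the output of
# `protoc --version`) reports exactly the expected version.
# Usage: protoc_version_ok VERSION_LINE [EXPECTED_VERSION]
protoc_version_ok() {
    version_line="$1"
    expected="${2:-2.5.0}"
    [ "$version_line" = "libprotoc $expected" ]
}

# Example:
# protoc_version_ok "$(protoc --version)" || echo "unexpected protoc version" >&2
```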

At this point, all dependencies are installed.
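A quick way to double-check this before starting the build is to look for each command on PATH. This is a sketch rather than a complete dependency check: `missing_tools` is a hypothetical helper, and the list of commands is my own selection from the steps above (it does not cover the -devel libraries).

```shell
#!/bin/sh
# Hypothetical helper: print each named command that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
        fi
    done
}

# Commands that the build steps in this article rely on.
missing="$(missing_tools java ant mvn gcc make cmake protoc)"
if [ -n "$missing" ]; then
    # Left unquoted on purpose so the names print on one line.
    echo "missing tools:" $missing
else
    echo "all tools found"
fi
```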

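4. Building the Hadoop source

4.1 Run the build

Change into the extracted Hadoop source directory and start the build. The dist and native profiles (-Pdist,native) build the distribution together with the native libraries, -DskipTests skips the unit tests, and -Dtar also produces a .tar.gz package.

    [root@hadoop protobuf-2.5.0]# cd /home/softwares/hadoop-2.7.4-src
    [root@hadoop hadoop-2.7.4-src]# mvn package -Pdist,native -DskipTests -Dtar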

Then comes a long wait...

    [INFO] ------------------------------------------------------------------------
    [INFO] Reactor Summary:
    [INFO]
    [INFO] Apache Hadoop Main ................................. SUCCESS [ 3.533 s]
    [INFO] Apache Hadoop Project POM .......................... SUCCESS [ 2.023 s]
    [INFO] Apache Hadoop Annotations .......................... SUCCESS [ 3.679 s]
    [INFO] Apache Hadoop Assemblies ........................... SUCCESS [ 0.275 s]
    [INFO] Apache Hadoop Project Dist POM ..................... SUCCESS [ 2.875 s]
    [INFO] Apache Hadoop Maven Plugins ........................ SUCCESS [ 4.856 s]
    [INFO] Apache Hadoop MiniKDC .............................. SUCCESS [ 4.340 s]
    [INFO] Apache Hadoop Auth ................................. SUCCESS [ 4.534 s]
    [INFO] Apache Hadoop Auth Examples ........................ SUCCESS [ 5.398 s]
    [INFO] Apache Hadoop Common ............................... SUCCESS [03:02 min]
    [INFO] Apache Hadoop NFS .................................. SUCCESS [ 11.653 s]
    [INFO] Apache Hadoop KMS .................................. SUCCESS [ 24.501 s]
    [INFO] Apache Hadoop Common Project ....................... SUCCESS [ 0.112 s]
    [INFO] Apache Hadoop HDFS ................................. SUCCESS [07:28 min]
    [INFO] Apache Hadoop HttpFS ............................... SUCCESS [ 41.608 s]
    [INFO] Apache Hadoop HDFS BookKeeper Journal .............. SUCCESS [ 10.673 s]
    [INFO] Apache Hadoop HDFS-NFS ............................. SUCCESS [ 7.225 s]
    [INFO] Apache Hadoop HDFS Project ......................... SUCCESS [ 0.057 s]
    [INFO] hadoop-yarn ........................................ SUCCESS [ 0.110 s]
    [INFO] hadoop-yarn-api .................................... SUCCESS [03:36 min]
    [INFO] hadoop-yarn-common ................................. SUCCESS [ 45.418 s]
    [INFO] hadoop-yarn-server ................................. SUCCESS [ 0.164 s]
    [INFO] hadoop-yarn-server-common .......................... SUCCESS [ 12.942 s]
    [INFO] hadoop-yarn-server-nodemanager ..................... SUCCESS [ 19.200 s]
    [INFO] hadoop-yarn-server-web-proxy ....................... SUCCESS [ 3.315 s]
    [INFO] hadoop-yarn-server-applicationhistoryservice ....... SUCCESS [ 7.855 s]
    [INFO] hadoop-yarn-server-resourcemanager ................. SUCCESS [ 24.347 s]
    [INFO] hadoop-yarn-server-tests ........................... SUCCESS [ 6.439 s]
    [INFO] hadoop-yarn-client ................................. SUCCESS [ 6.393 s]
    [INFO] hadoop-yarn-server-sharedcachemanager .............. SUCCESS [ 3.445 s]
    [INFO] hadoop-yarn-applications ........................... SUCCESS [ 0.075 s]
    [INFO] hadoop-yarn-applications-distributedshell .......... SUCCESS [ 2.304 s]
    [INFO] hadoop-yarn-applications-unmanaged-am-launcher ..... SUCCESS [ 2.026 s]
    [INFO] hadoop-yarn-site ................................... SUCCESS [ 0.155 s]
    [INFO] hadoop-yarn-registry ............................... SUCCESS [ 7.255 s]
    [INFO] hadoop-yarn-project ................................ SUCCESS [ 11.871 s]
    [INFO] hadoop-mapreduce-client ............................ SUCCESS [ 0.254 s]
    [INFO] hadoop-mapreduce-client-core ....................... SUCCESS [ 26.029 s]
    [INFO] hadoop-mapreduce-client-common ..................... SUCCESS [ 25.002 s]
    [INFO] hadoop-mapreduce-client-shuffle .................... SUCCESS [ 3.792 s]
    [INFO] hadoop-mapreduce-client-app ........................ SUCCESS [ 7.797 s]
    [INFO] hadoop-mapreduce-client-hs ......................... SUCCESS [ 5.143 s]
    [INFO] hadoop-mapreduce-client-jobclient .................. SUCCESS [ 6.771 s]
    [INFO] hadoop-mapreduce-client-hs-plugins ................. SUCCESS [ 1.837 s]
    [INFO] Apache Hadoop MapReduce Examples ................... SUCCESS [ 4.513 s]
    [INFO] hadoop-mapreduce ................................... SUCCESS [ 6.842 s]
    [INFO] Apache Hadoop MapReduce Streaming .................. SUCCESS [ 4.355 s]
    [INFO] Apache Hadoop Distributed Copy ..................... SUCCESS [ 14.910 s]
    [INFO] Apache Hadoop Archives ............................. SUCCESS [ 2.844 s]
    [INFO] Apache Hadoop Rumen ................................ SUCCESS [ 6.931 s]
    [INFO] Apache Hadoop Gridmix .............................. SUCCESS [ 3.937 s]
    [INFO] Apache Hadoop Data Join ............................ SUCCESS [ 2.499 s]
    [INFO] Apache Hadoop Ant Tasks ............................ SUCCESS [ 2.268 s]
    [INFO] Apache Hadoop Extras ............................... SUCCESS [ 2.739 s]
    [INFO] Apache Hadoop Pipes ................................ SUCCESS [ 5.793 s]
    [INFO] Apache Hadoop OpenStack support .................... SUCCESS [ 4.444 s]
    [INFO] Apache Hadoop Amazon Web Services support .......... SUCCESS [ 4.258 s]
    [INFO] Apache Hadoop Azure support ........................ SUCCESS [ 47.689 s]
    [INFO] Apache Hadoop Client ............................... SUCCESS [ 19.524 s]
    [INFO] Apache Hadoop Mini-Cluster ......................... SUCCESS [ 0.305 s]
    [INFO] Apache Hadoop Scheduler Load Simulator ............. SUCCESS [ 5.581 s]
    [INFO] Apache Hadoop Tools Dist ........................... SUCCESS [ 25.708 s]
    [INFO] Apache Hadoop Tools ................................ SUCCESS [ 0.281 s]
    [INFO] Apache Hadoop Distribution ......................... SUCCESS [01:53 min]
    [INFO] ------------------------------------------------------------------------
    [INFO] BUILD SUCCESS
    [INFO] ------------------------------------------------------------------------
    [INFO] Total time: 24:49 min
    [INFO] Finished at: 2015-12-11T20:29:45+08:00
    [INFO] Final Memory: 110M/493M
    [INFO] ------------------------------------------------------------------------

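When the build finishes, the output looks like the above. (I no longer have my own log, so this one was found online; my own build took more than an hour.)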
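To avoid losing the log of a build this long, the output can be written to a file while the command runs. A minimal sketch, where `run_logged` and the log path in the example are hypothetical names chosen for illustration:

```shell
#!/bin/sh
# Hypothetical helper: run a command, save its stdout and stderr to LOG_FILE,
# and return the command's own exit status.
# Usage: run_logged LOG_FILE COMMAND [ARGS...]
run_logged() {
    log_file="$1"
    shift
    "$@" >"$log_file" 2>&1
}

# Example with the article's build command, run from the source directory:
# run_logged /home/data/hadoop-build.log mvn package -Pdist,native -DskipTests -Dtar
# Progress can be followed from another terminal with:
# tail -f /home/data/hadoop-build.log
```

Redirecting to a file instead of piping through `tee` keeps the exit status of the build command intact in plain `sh`.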

The build output is in /home/softwares/hadoop-2.7.4-src/hadoop-dist/target/:

    [root@hadoop target]# pwd
    /home/softwares/hadoop-2.7.4-src/hadoop-dist/target
    [root@hadoop target]# ll
    total 591308
    drwxr-xr-x 2 root root 4096 Dec 11 10:59 antrun
    drwxr-xr-x 3 root root 4096 Dec 11 10:59 classes
    -rw-r--r-- 1 root root 1872 Dec 11 10:59 dist-layout-stitching.sh
    -rw-r--r-- 1 root root 645 Dec 11 10:59 dist-tar-stitching.sh
    drwxr-xr-x 9 root root 4096 Dec 11 10:59 hadoop-2.7.4
    -rw-r--r-- 1 root root 201372886 Dec 11 10:59 hadoop-2.7.4.tar.gz
    -rw-r--r-- 1 root root 26520 Dec 11 10:59 hadoop-dist-2.7.4.jar
    -rw-r--r-- 1 root root 403999148 Dec 11 11:00 hadoop-dist-2.7.4-javadoc.jar
    -rw-r--r-- 1 root root 24048 Dec 11 10:59 hadoop-dist-2.7.4-sources.jar
    -rw-r--r-- 1 root root 24048 Dec 11 10:59 hadoop-dist-2.7.4-test-sources.jar
    drwxr-xr-x 2 root root 4096 Dec 11 10:59 javadoc-bundle-options
    drwxr-xr-x 2 root root 4096 Dec 11 10:59 maven-archiver
    drwxr-xr-x 3 root root 4096 Dec 11 10:59 maven-shared-archive-resources
    drwxr-xr-x 3 root root 4096 Dec 11 10:59 test-classes
    drwxr-xr-x 2 root root 4096 Dec 11 10:59 test-dir
    [root@hadoop target]#

At this point, Hadoop has been built.
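Since the whole point of building from source was to get 64-bit binaries, it is worth checking the native library that came out of the build. The sketch below reads the ELF header directly: the first four bytes identify an ELF file, and the fifth byte is 1 for 32-bit and 2 for 64-bit objects. `is_elf64` is a hypothetical helper, and the library path in the example is my assumption about where the native library ends up, not something shown in the listing above.

```shell
#!/bin/sh
# Hypothetical helper: succeed if FILE is an ELF binary whose class byte
# marks it as a 64-bit object.
is_elf64() {
    # The first four bytes of an ELF file are 0x7f 'E' 'L' 'F'.
    magic="$(od -An -tx1 -N4 "$1" 2>/dev/null | tr -d ' \n')"
    [ "$magic" = "7f454c46" ] || return 1
    # The fifth byte (EI_CLASS) is 1 for 32-bit and 2 for 64-bit objects.
    class="$(od -An -tu1 -j4 -N1 "$1" | tr -d ' \n')"
    [ "$class" = "2" ]
}

# Example (assumed path of the native library inside the build output):
# lib=/home/softwares/hadoop-2.7.4-src/hadoop-dist/target/hadoop-2.7.4/lib/native/libhadoop.so.1.0.0
# is_elf64 "$lib" && echo "64-bit native library"
```

Where the `file` command is installed, running it on the same library prints the same information in readable form.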

5. Hadoop installation, configuration, and startup (to be continued...)

6. Pitfalls I ran into

7. Blogs referenced

http://blog.csdn.net/miracle_yan/article/details/78288552

http://blog.csdn.net/young_kim1/article/details/50269501