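1. Download ZooKeeper from the official website: http://zookeeper.apache.org/

Or download it with wget: wget http://mirrors.hust.edu.cn/apache/zookeeper/zookeeper-3.4.10/zookeeper-3.4.10.tar.gz (mirrors normally keep only current releases; older versions remain available under archive.apache.org)

2. Install it. Extracting the archive is all that is needed: tar -zxvf zookeeper-3.4.10.tar.gz -C /usr/local/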

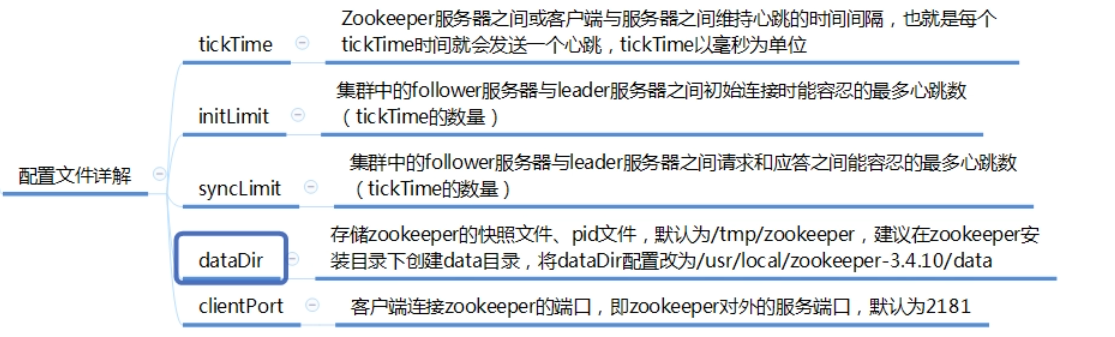

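3. Configuration: in ZooKeeper's conf directory, copy zoo_sample.cfg to a new file named zoo.cfg (cp zoo_sample.cfg zoo.cfg). At startup, ZooKeeper reads zoo.cfg as its default configuration file.

The configuration file explained, and what to change: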

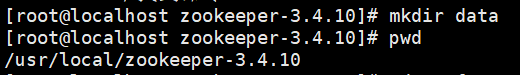

First create the data directory, data.

Then set dataDir in zoo.cfg to the path of that directory, save, and exit.
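The configuration in the screenshot is not reproduced in the text. The Java sketch below renders a standalone zoo.cfg so the keys can be read as text. It assumes the defaults shipped in zoo_sample.cfg (tickTime=2000, initLimit=10, syncLimit=5, clientPort=2181) and changes only dataDir. The path /usr/local/zookeeper-3.4.10/data is a hypothetical example; use the data directory you actually created.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Minimal sketch: renders a standalone zoo.cfg.
// Assumption: values mirror zoo_sample.cfg; only dataDir is changed.
public class StandaloneZooCfg {

    static String render(String dataDir) {
        Map<String, String> cfg = new LinkedHashMap<>();
        cfg.put("tickTime", "2000");   // basic time unit in milliseconds
        cfg.put("initLimit", "10");    // ticks a follower may take to connect and sync (cluster only)
        cfg.put("syncLimit", "5");     // ticks a follower may lag behind the leader (cluster only)
        cfg.put("dataDir", dataDir);   // snapshots and, unless dataLogDir is set, transaction logs
        cfg.put("clientPort", "2181"); // port that clients such as zkCli.sh connect to
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, String> e : cfg.entrySet()) {
            sb.append(e.getKey()).append('=').append(e.getValue()).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Hypothetical path; replace it with the data directory you created
        System.out.print(render("/usr/local/zookeeper-3.4.10/data"));
    }
}
```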

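4. Start (from the bin directory of the installation): ./zkServer.sh start

5. Stop (from the bin directory of the installation): ./zkServer.sh stop

To connect a client, run this from the bin directory: ./zkCli.sh -server localhost:2181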

Setting up a ZooKeeper cluster

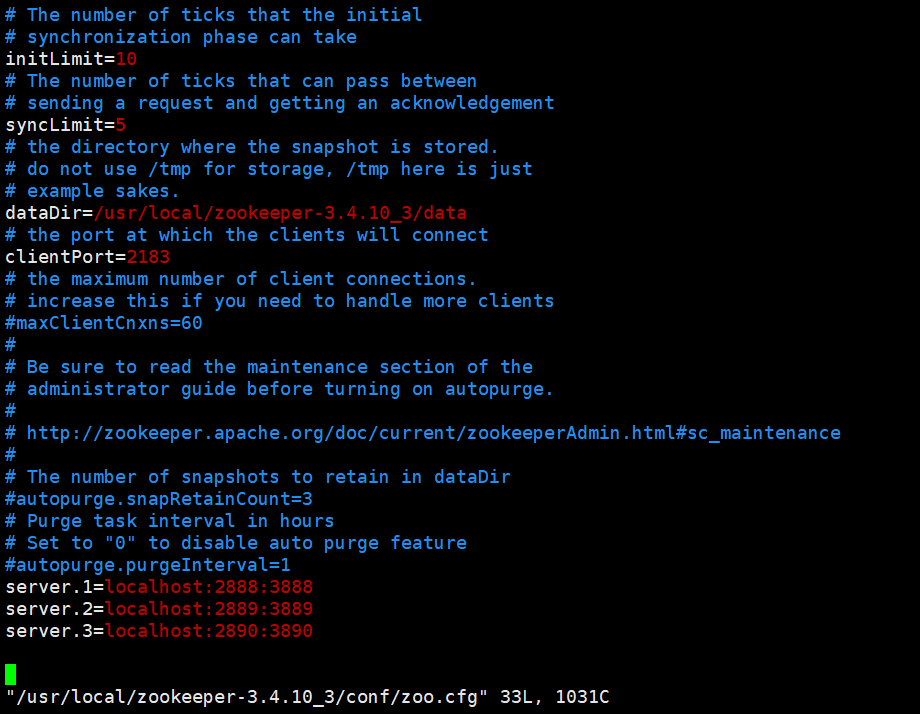

- Rename the downloaded zookeeper-3.4.10 directory to zookeeper-3.4.10_1, then make two more copies of it for the cluster:

cp -rf zookeeper-3.4.10_1 zookeeper-3.4.10_2

cp -rf zookeeper-3.4.10_1 zookeeper-3.4.10_3

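- In each of zookeeper-3.4.10_1, zookeeper-3.4.10_2 and zookeeper-3.4.10_3, create a data directory, and inside data create a file named myid. The file contains the X of the matching server.X entry in zoo.cfg: 1 for the first instance, 2 for the second, and 3 for the third.

- Then go into each conf directory and copy zoo_sample.cfg to zoo.cfg (command: cp zoo_sample.cfg zoo.cfg). Configure the three instances as follows:

- Start all three instances: ./zkServer.sh start

- Check the cluster status with ./zkServer.sh status. As the figures below show, instance 1 is the leader, and instances 2 and 3 are followers.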

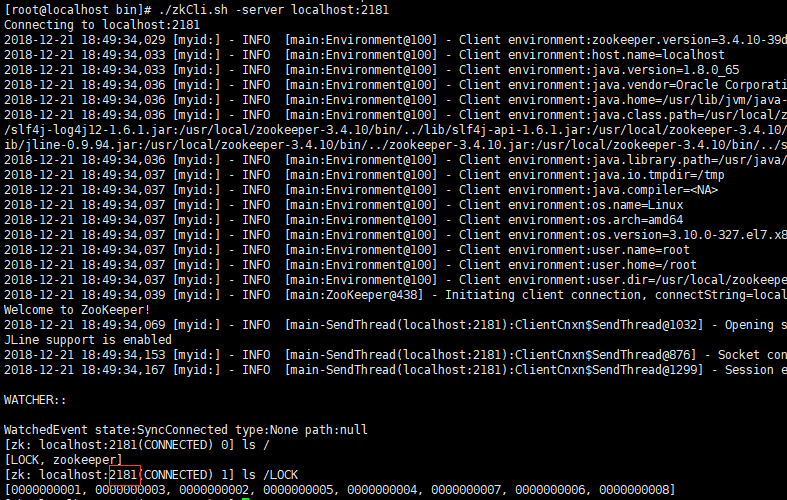

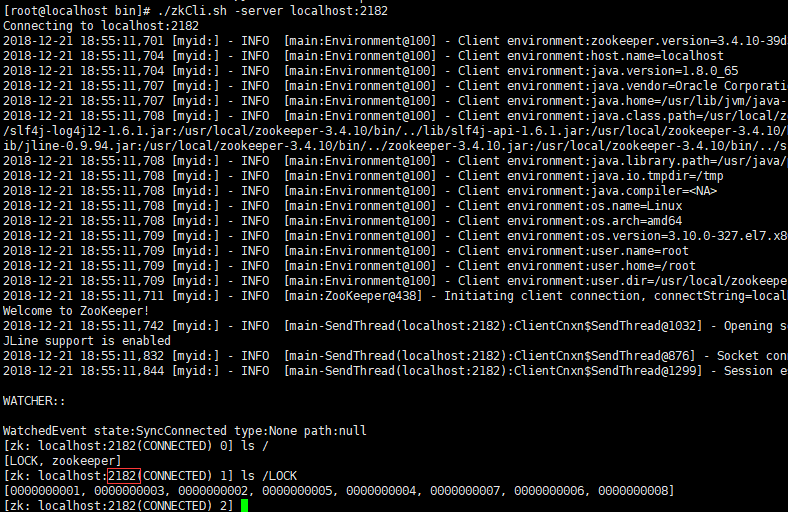

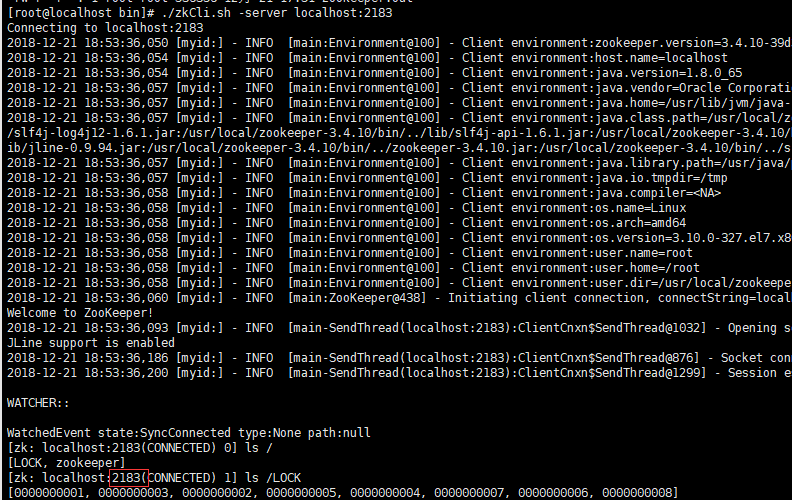
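The three-instance configuration appears only in the screenshots. The Java sketch below renders each instance's zoo.cfg and myid content so the relationship between them can be read as text. It makes several assumptions:

- All three instances run on the single host 192.168.227.129, the address used in the lock code later.
- The client ports are 2181, 2182 and 2183.
- The quorum/election ports (2888/3888 plus one per instance) and the dataDir paths are illustrative only. On one host, each instance simply needs its own free ports.

```java
// Sketch: per-instance zoo.cfg and myid for a 3-node pseudo-cluster on one host.
// Assumptions: host, ports and dataDir layout are illustrative only.
public class ClusterZooCfg {
    static final String HOST = "192.168.227.129";
    static final int SIZE = 3;

    // myid holds exactly the X of this instance's server.X line
    static String myid(int id) {
        return id + "\n";
    }

    static String render(int id) {
        StringBuilder sb = new StringBuilder();
        sb.append("tickTime=2000\n");
        sb.append("initLimit=10\n");
        sb.append("syncLimit=5\n");
        // Hypothetical layout: each copy keeps its own data directory (where myid lives)
        sb.append("dataDir=/usr/local/zookeeper-3.4.10_").append(id).append("/data\n");
        // A distinct client port per instance: 2181, 2182, 2183
        sb.append("clientPort=").append(2180 + id).append('\n');
        // The same server list in all three files: server.X=host:quorumPort:electionPort
        for (int x = 1; x <= SIZE; x++) {
            sb.append("server.").append(x).append('=').append(HOST)
              .append(':').append(2887 + x).append(':').append(3887 + x).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        for (int id = 1; id <= SIZE; id++) {
            System.out.println("# zookeeper-3.4.10_" + id + "/conf/zoo.cfg");
            System.out.print(render(id));
            System.out.println("# zookeeper-3.4.10_" + id + "/data/myid contains " + myid(id).trim());
            System.out.println();
        }
    }
}
```

All three files share the same server.X list and differ only in clientPort and dataDir. Each instance learns which X it is from its own myid file.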

Consistency characteristics of cluster nodes

Connecting a client to the server

./zkCli.sh -server localhost:2181

Creating nodes

create /n 100 creates a persistent node n under the root node /, with the value 100

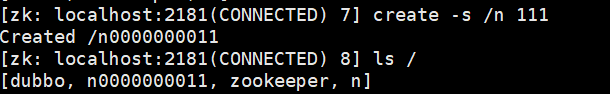

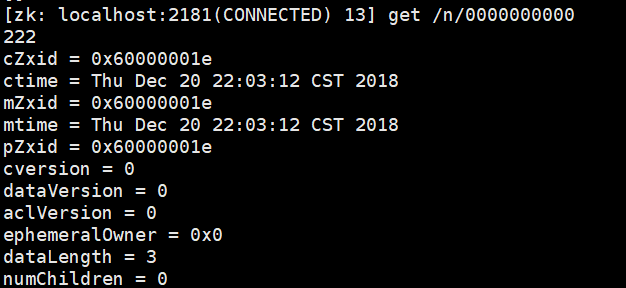

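create -s /n 111 creates a persistent sequential node under the root node /. Its name starts with n followed by a 10-digit sequence number (such as n0000000011), and its value is 111.

create -s /n/ 222 creates a persistent sequential node under node n. Its name is just the 10-digit sequence number (such as 0000000001), and its value is 222.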
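The suffix is a 10-digit, zero-padded counter maintained by the parent node (ZooKeeper takes it from the parent's child version, cversion) and appended to the requested path. The small Java sketch below shows only the naming rule. The counter values are examples, not taken from a real server.

```java
// Sketch of how a sequential node's final path is formed:
// requested path + the parent's counter formatted as 10 zero-padded digits.
public class SequentialName {
    static String name(String requestedPath, int parentCounter) {
        return requestedPath + String.format("%010d", parentCounter);
    }

    public static void main(String[] args) {
        System.out.println(name("/n", 11)); // like create -s /n ...  -> /n0000000011
        System.out.println(name("/n/", 1)); // like create -s /n/ ... -> /n/0000000001
    }
}
```

Because of the zero padding, lexicographic order equals numeric order. That is why a plain Collections.sort on child names is enough in the distributed lock code later.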

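ZooKeeper has four kinds of nodes: persistent, persistent sequential, ephemeral, and ephemeral sequential.

A non-zero ephemeralOwner means the node is ephemeral (the value is the session ID of the node's owner). For a persistent node it is 0x0.

create -e creates an ephemeral node and -s creates a sequential node. The two flags can be combined.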
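The Java sketch below shows how the -e and -s flags combine into the four node types, and how the ephemeralOwner rule from the stat output can be checked. The enum reuses the constant names of org.apache.zookeeper.CreateMode, but it is a standalone illustration, not that class. The sample owner value is the one that appears later in this article for Dubbo's provider node.

```java
// Sketch: the four node types as combinations of -e and -s,
// plus the ephemeralOwner rule (non-zero owner session => ephemeral).
// The enum mirrors CreateMode's constant names but is not that class.
public class NodeKinds {
    enum NodeType { PERSISTENT, PERSISTENT_SEQUENTIAL, EPHEMERAL, EPHEMERAL_SEQUENTIAL }

    // -e -> ephemeral, -s -> sequential; no flags -> persistent
    static NodeType fromFlags(boolean ephemeral, boolean sequential) {
        if (ephemeral) {
            return sequential ? NodeType.EPHEMERAL_SEQUENTIAL : NodeType.EPHEMERAL;
        }
        return sequential ? NodeType.PERSISTENT_SEQUENTIAL : NodeType.PERSISTENT;
    }

    // ephemeralOwner is printed as hex, e.g. 0x268f9c912ca0005; 0x0 means persistent
    static boolean isEphemeral(String ephemeralOwner) {
        String hex = ephemeralOwner.startsWith("0x") ? ephemeralOwner.substring(2) : ephemeralOwner;
        // Session IDs may use the top bit, so parse as unsigned
        return Long.parseUnsignedLong(hex, 16) != 0L;
    }

    public static void main(String[] args) {
        System.out.println(fromFlags(true, true));             // EPHEMERAL_SEQUENTIAL
        System.out.println(isEphemeral("0x268f9c912ca0005")); // true
        System.out.println(isEphemeral("0x0"));               // false
    }
}
```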

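rmr deletes a node together with all of its children (delete removes only a node that has no children).

Commands for modifying node data: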

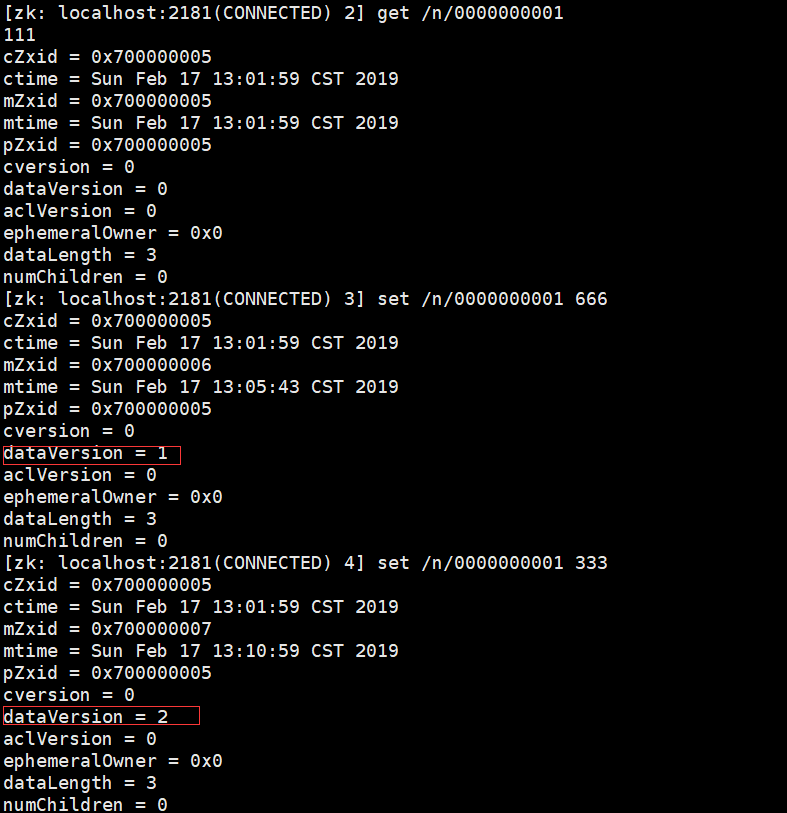

set path data [version]

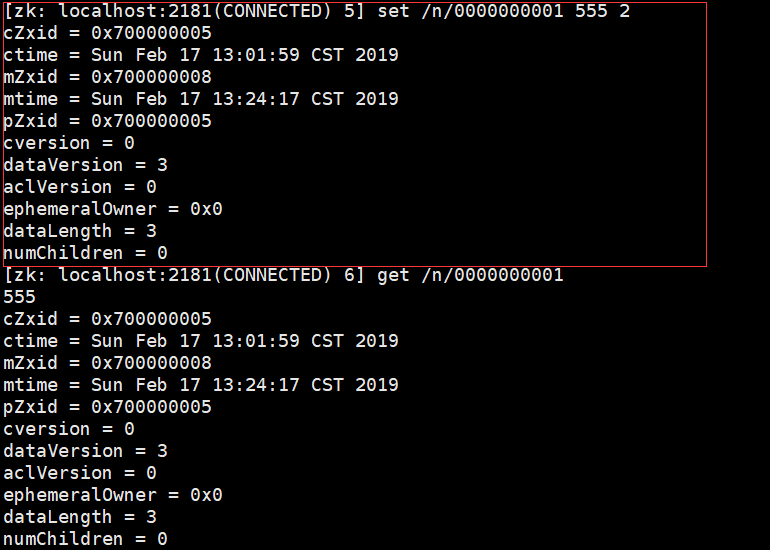

If we modify the node several times, dataVersion (the data version) keeps increasing by one with every update.

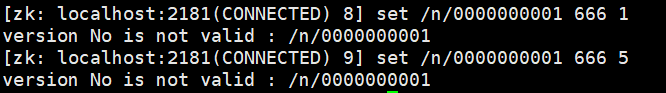

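If we pass a version number to set explicitly, it must match the dataVersion returned by the last query. Otherwise the command fails with an error.

This lets us make sure that nobody else changed the node's data before our update: if someone did, our update is rejected.

In the example above, after the second update changed the value of node 0000000001 to 333, its dataVersion is 2. If the next set on this node specifies a dataVersion that does not match the current one, an error is reported.

If the specified dataVersion is correct, the update succeeds.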
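The rule described above is optimistic concurrency control. In the Java client, the same check is done by ZooKeeper.setData(path, data, version). That call throws KeeperException.BadVersionException on a mismatch, and a version of -1 skips the check. The in-memory class below is a simplified model of the rule, not ZooKeeper's implementation. The starting value 222 comes from the create example earlier; the first update's value is made up.

```java
// Simplified model of the check behind `set path data [version]`:
// an update succeeds only if the expected version equals the current
// dataVersion (-1 skips the check); each successful update bumps it by one.
public class VersionedNode {
    private String data;
    private int dataVersion = 0;

    VersionedNode(String data) {
        this.data = data;
    }

    synchronized boolean set(String newData, int expectedVersion) {
        if (expectedVersion != -1 && expectedVersion != dataVersion) {
            return false; // someone else changed the node since we read it
        }
        data = newData;
        dataVersion++;
        return true;
    }

    synchronized int getDataVersion() { return dataVersion; }

    synchronized String getData() { return data; }

    public static void main(String[] args) {
        VersionedNode node = new VersionedNode("222");
        node.set("300", -1); // unconditional update (illustrative value), dataVersion 0 -> 1
        node.set("333", 1);  // version matches, dataVersion 1 -> 2
        System.out.println(node.set("444", 1)); // false: stale version
        System.out.println(node.set("444", 2)); // true: dataVersion 2 -> 3
    }
}
```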

Dubbo's providers node in ZooKeeper

```
[zk: localhost:2183(CONNECTED) 12] ls /
[LOCK, dubbo, n0000000011, zookeeper, n]
[zk: localhost:2183(CONNECTED) 13] ls /dubbo
[com.zxp.dubbo.HelloService]
[zk: localhost:2183(CONNECTED) 14] ls /dubbo/com.zxp.dubbo.HelloService
[consumers, configurators, routers, providers]
[zk: localhost:2183(CONNECTED) 15] ls /dubbo/com.zxp.dubbo.HelloService/providers
[dubbo%3A%2F%2F192.168.220.1%3A20880%2Fcom.zxp.dubbo.HelloService%3Fanyhost%3Dtrue%26application%3Ddubboprovider%26dubbo%3D2.6.0%26generic%3Dfalse%26interface%3Dcom.zxp.dubbo.HelloService%26methods%3DgetMsg%2CgetHashMap%26pid%3D10916%26revision%3D0.0.1-SNAPSHOT%26side%3Dprovider%26timestamp%3D1550503289819]
```

After URL decoding, the provider node name listed above becomes:

`dubbo://192.168.220.1:20880/com.zxp.dubbo.HelloService?anyhost=true&application=dubboprovider&dubbo=2.6.0&generic=false&interface=com.zxp.dubbo.HelloService&methods=getMsg,getHashMap&pid=10916&revision=0.0.1-SNAPSHOT&side=provider&timestamp=1550503289819`
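The decoding can be reproduced with java.net.URLDecoder. The percent-encoding keeps the URL's '/' characters from being read as ZooKeeper path separators, so the whole provider URL fits in a single node name. The sketch below decodes a shortened node name (a subset of the parameters above) and splits the query string into a map. The class and method names are hypothetical.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch: decode a Dubbo registry node name and split its query parameters.
public class DubboUrlDecode {

    static String decode(String nodeName) {
        try {
            return URLDecoder.decode(nodeName, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    // Parse "k1=v1&k2=v2" after the '?' into an insertion-ordered map
    static Map<String, String> params(String decodedUrl) {
        Map<String, String> result = new LinkedHashMap<>();
        int q = decodedUrl.indexOf('?');
        if (q < 0) {
            return result;
        }
        for (String pair : decodedUrl.substring(q + 1).split("&")) {
            int eq = pair.indexOf('=');
            if (eq > 0) {
                result.put(pair.substring(0, eq), pair.substring(eq + 1));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Shortened version of the provider node name listed above
        String node = "dubbo%3A%2F%2F192.168.220.1%3A20880%2Fcom.zxp.dubbo.HelloService"
                + "%3Fanyhost%3Dtrue%26application%3Ddubboprovider%26side%3Dprovider";
        String url = decode(node);
        System.out.println(url);
        System.out.println(params(url));
    }
}
```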

Looking further at this node's data and stat, ephemeralOwner is non-zero, so it is an ephemeral node:

```
[zk: localhost:2183(CONNECTED) 18] get /dubbo/com.zxp.dubbo.HelloService/providers/dubbo%3A%2F%2F192.168.220.1%3A20880%2Fcom.zxp.dubbo.HelloService%3Fanyhost%3Dtrue%26application%3Ddubboprovider%26dubbo%3D2.6.0%26generic%3Dfalse%26interface%3Dcom.zxp.dubbo.HelloService%26methods%3DgetMsg%2CgetHashMap%26pid%3D10916%26revision%3D0.0.1-SNAPSHOT%26side%3Dprovider%26timestamp%3D1550503289819
null
cZxid = 0x700000074
ctime = Sun Feb 17 14:55:45 CST 2019
mZxid = 0x700000074
mtime = Sun Feb 17 14:55:45 CST 2019
pZxid = 0x700000074
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x268f9c912ca0005
dataLength = 0
numChildren = 0
```

If the Dubbo provider disconnects, the node is deleted automatically.

Querying the same path as before now gives:

```
[zk: localhost:2183(CONNECTED) 25] get /dubbo/com.zxp.dubbo.HelloService/providers/dubbo%3A%2F%2F192.168.220.1%3A20880%2Fcom.zxp.dubbo.HelloService%3Fanyhost%3Dtrue%26application%3Ddubboprovider%26dubbo%3D2.6.0%26generic%3Dfalse%26interface%3Dcom.zxp.dubbo.HelloService%26methods%3DgetMsg%2CgetHashMap%26pid%3D10916%26revision%3D0.0.1-SNAPSHOT%26side%3Dprovider%26timestamp%3D1550503289819
Node does not exist: /dubbo/com.zxp.dubbo.HelloService/providers/dubbo%3A%2F%2F192.168.220.1%3A20880%2Fcom.zxp.dubbo.HelloService%3Fanyhost%3Dtrue%26application%3Ddubboprovider%26dubbo%3D2.6.0%26generic%3Dfalse%26interface%3Dcom.zxp.dubbo.HelloService%26methods%3DgetMsg%2CgetHashMap%26pid%3D10916%26revision%3D0.0.1-SNAPSHOT%26side%3Dprovider%26timestamp%3D1550503289819
```
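The node disappears because an ephemeral node is bound to the session that created it. When the provider's session is closed, or expires after the session timeout if the process dies abruptly, the server removes every ephemeral node that session owns. The in-memory model below illustrates only that rule and is not ZooKeeper's code. The session ID is the ephemeralOwner shown above, and the provider path is a hypothetical shortened name.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified model (not ZooKeeper's code): ephemeral nodes remember the
// session that created them, and closing or expiring that session removes them.
public class EphemeralModel {
    // path -> owner session id (0 means persistent, as with ephemeralOwner = 0x0)
    private final Map<String, Long> owners = new HashMap<>();

    void create(String path, long ownerSession) {
        owners.put(path, ownerSession);
    }

    boolean exists(String path) {
        return owners.containsKey(path);
    }

    // Called when a session is closed or expires
    void closeSession(long sessionId) {
        if (sessionId == 0L) {
            return; // 0 is not a real session; never drop persistent nodes
        }
        owners.values().removeIf(owner -> owner == sessionId);
    }

    public static void main(String[] args) {
        EphemeralModel model = new EphemeralModel();
        long session = 0x268f9c912ca0005L; // ephemeralOwner from the stat above
        String provider = "/dubbo/com.zxp.dubbo.HelloService/providers/provider-node"; // hypothetical name
        model.create("/dubbo", 0L);
        model.create(provider, session);
        model.closeSession(session);
        System.out.println(model.exists(provider)); // false
        System.out.println(model.exists("/dubbo")); // true
    }
}
```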

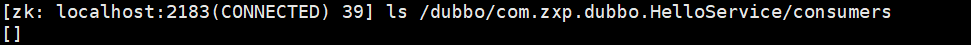

Dubbo's consumers node in ZooKeeper is also an ephemeral node:

```
[zk: localhost:2183(CONNECTED) 37] ls /dubbo/com.zxp.dubbo.HelloService/consumers
[consumer%3A%2F%2F192.168.220.1%2Fcom.zxp.dubbo.HelloService%3Fapplication%3Ddubboconsumer%26category%3Dconsumers%26check%3Dfalse%26dubbo%3D2.6.0%26interface%3Dcom.zxp.dubbo.HelloService%26methods%3DgetMsg%2CgetHashMap%26pid%3D7924%26revision%3D0.0.1-SNAPSHOT%26side%3Dconsumer%26timestamp%3D1550504306650]
[zk: localhost:2183(CONNECTED) 38] ls /dubbo/com.zxp.dubbo.HelloService/providers
```

After URL decoding, the consumer node name becomes:

`consumer://192.168.220.1/com.zxp.dubbo.HelloService?application=dubboconsumer&category=consumers&check=false&dubbo=2.6.0&interface=com.zxp.dubbo.HelloService&methods=getMsg,getHashMap&pid=7924&revision=0.0.1-SNAPSHOT&side=consumer&timestamp=1550504306650`

When the consumer disconnects, its node is also deleted automatically.

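Implementing a distributed lock with ZooKeeper

Each client creates an ephemeral sequential node under /LOCK. The client whose node is the smallest holds the lock. Every other client watches the node just before its own and tries again when that node is deleted. The example uses the zkclient library: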

```xml
<dependency>
    <groupId>com.101tec</groupId>
    <artifactId>zkclient</artifactId>
    <version>0.10</version>
</dependency>
```

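```java
import java.text.SimpleDateFormat;
import java.util.Date;

public class OrderCodeGenerator {
    // Auto-increment sequence. Not thread-safe on its own: in the test below,
    // calls are serialized by the distributed lock.
    private static int i = 0;

    // Build an order code following the rule "year-month-day-hour-minute-second-sequence"
    public String getOrderCode() {
        Date now = new Date();
        SimpleDateFormat sdf = new SimpleDateFormat("yyyyMMddHHmmss");
        return sdf.format(now) + ++i;
    }
}
```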

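```java
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.Lock;

import org.I0Itec.zkclient.IZkDataListener;
import org.I0Itec.zkclient.ZkClient;
import org.I0Itec.zkclient.exception.ZkNodeExistsException;
import org.I0Itec.zkclient.serialize.SerializableSerializer;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class ZookeeperLock implements Lock {
    private static final Logger logger = LoggerFactory.getLogger(ZookeeperLock.class);
    private static final String ZOOKEEPER_IP_PORT = "192.168.227.129:2181,192.168.227.129:2182,192.168.227.129:2183";
    private static final String LOCK_PATH = "/LOCK";

    // One ZkClient (one ZooKeeper session) per lock instance
    private final ZkClient client = new ZkClient(ZOOKEEPER_IP_PORT, 3000, 3000, new SerializableSerializer());
    private String beforePath;  // the node immediately before the current request's node
    private String currentPath; // the node created by the current request

    // Create the /LOCK parent node if it does not exist yet
    public ZookeeperLock() {
        if (!client.exists(LOCK_PATH)) {
            try {
                client.createPersistent(LOCK_PATH);
            } catch (ZkNodeExistsException e) {
                // another client created it in the meantime, which is fine
            }
        }
    }

    @Override
    public void lock() {
        // Failed to acquire: watch the predecessor node, then try again once it is deleted
        while (!tryLock()) {
            waitForLock();
        }
        System.out.println(Thread.currentThread().getName() + "-->acquired the distributed lock!");
    }

    @Override
    public boolean tryLock() {
        // First attempt: create an ephemeral sequential node under /LOCK with value "lock".
        // currentPath is the created path, e.g. /LOCK/0000000180
        if (currentPath == null || currentPath.isEmpty()) {
            currentPath = client.createEphemeralSequential(LOCK_PATH + '/', "lock");
            System.out.println(Thread.currentThread().getName() + " registered path-->" + currentPath);
        }
        // Names of all children of /LOCK (e.g. 0000000100), sorted in ascending order
        List<String> children = client.getChildren(LOCK_PATH);
        Collections.sort(children);
        // The request whose node is the smallest holds the lock
        if (currentPath.equals(LOCK_PATH + '/' + children.get(0))) {
            return true;
        }
        // Otherwise remember the node just before ours in beforePath
        int index = Collections.binarySearch(children, currentPath.substring(LOCK_PATH.length() + 1));
        beforePath = LOCK_PATH + '/' + children.get(index - 1);
        return false;
    }

    // Wait for the lock: block until the predecessor node (beforePath) is deleted
    private void waitForLock() {
        // Create the latch before subscribing so a delete event cannot be missed
        final CountDownLatch latch = new CountDownLatch(1);
        IZkDataListener listener = new IZkDataListener() {
            @Override
            public void handleDataDeleted(String dataPath) throws Exception {
                logger.info(Thread.currentThread().getName() + ": caught DataDelete event!--");
                latch.countDown();
            }

            @Override
            public void handleDataChange(String dataPath, Object data) throws Exception {
            }
        };
        client.subscribeDataChanges(beforePath, listener);
        try {
            // If the predecessor is already gone, there is nothing to wait for
            if (client.exists(beforePath)) {
                latch.await();
            }
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            client.unsubscribeDataChanges(beforePath, listener);
        }
    }

    // Release the lock after the business logic: delete our node, then close the session.
    // Each instance owns its own ZkClient, so create a new ZookeeperLock for the next use.
    @Override
    public void unlock() {
        if (currentPath != null) {
            client.delete(currentPath);
            currentPath = null;
        }
        client.close();
    }

    // ===== Not supported by this example =====
    @Override
    public void lockInterruptibly() throws InterruptedException {
        throw new UnsupportedOperationException();
    }

    @Override
    public boolean tryLock(long time, TimeUnit unit) throws InterruptedException {
        return false;
    }

    @Override
    public Condition newCondition() {
        throw new UnsupportedOperationException();
    }
}
```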
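The core decision in tryLock() does not depend on a server. The rule is to sort the children of /LOCK: the smallest node holds the lock, and every other node waits on the node just before it. The pure-Java sketch below isolates that rule so it can be checked offline; the class name is hypothetical. Watching only the predecessor, rather than the smallest node, means each deletion wakes exactly one waiter instead of all of them (the "herd effect").

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Sketch of the ordering rule used by tryLock(): returns null when ownNode is
// the smallest child (lock acquired), otherwise the name of the node to watch.
public class LockOrder {
    static String predecessor(List<String> children, String ownNode) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted); // zero-padded names sort in creation order
        int index = Collections.binarySearch(sorted, ownNode);
        if (index < 0) {
            // e.g. our session expired and the ephemeral node is gone
            throw new IllegalStateException("own node missing: " + ownNode);
        }
        return index == 0 ? null : sorted.get(index - 1);
    }

    public static void main(String[] args) {
        List<String> children = Arrays.asList("0000000182", "0000000180", "0000000181");
        System.out.println(predecessor(children, "0000000180")); // null: holds the lock
        System.out.println(predecessor(children, "0000000182")); // 0000000181
    }
}
```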

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.locks.Lock;

public class Test {
    private static final OrderCodeGenerator ong = new OrderCodeGenerator();
    // Number of concurrent threads
    private static final int NUM = 100;
    // Countdown latch initialized to the thread count, so all threads start together
    private static final CountDownLatch cdl = new CountDownLatch(NUM);

    public static void main(String[] args) {
        for (int i = 0; i < NUM; i++) {
            new Thread(() -> {
                Lock lock = new ZookeeperLock();
                try {
                    cdl.await();
                    lock.lock();
                    String orderCode = ong.getOrderCode();
                    // ... business code
                    System.out.println(Thread.currentThread().getName() + " got id:==>" + orderCode);
                } catch (Exception e) {
                    e.printStackTrace();
                } finally {
                    lock.unlock();
                }
            }).start();
            cdl.countDown();
        }
    }
}
```
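The test above needs the three-node cluster running. To see offline what it is meant to demonstrate, the sketch below swaps in java.util.concurrent.locks.ReentrantLock as a single-JVM stand-in for ZookeeperLock; this substitution is an assumption made only for local runs. It then checks that every generated order code is unique while calls to the non-thread-safe counter are serialized. The class name is hypothetical, and the counter copies the logic of OrderCodeGenerator.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Offline check: with access to the counter serialized by a lock, all codes are distinct.
// ReentrantLock stands in for ZookeeperLock here (single JVM only).
public class UniqueCodeCheck {
    private static int i = 0; // same non-thread-safe counter as OrderCodeGenerator

    static String nextCode() {
        return new SimpleDateFormat("yyyyMMddHHmmss").format(new Date()) + ++i;
    }

    // Runs `threads` threads that each take the lock once and generate one code;
    // returns the number of distinct codes produced
    static int run(int threads, Lock lock) {
        Set<String> codes = ConcurrentHashMap.newKeySet();
        CountDownLatch start = new CountDownLatch(1);
        CountDownLatch done = new CountDownLatch(threads);
        for (int t = 0; t < threads; t++) {
            new Thread(() -> {
                try {
                    start.await();
                    lock.lock();
                    try {
                        codes.add(nextCode());
                    } finally {
                        lock.unlock();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();
                }
            }).start();
        }
        start.countDown();
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return codes.size();
    }

    public static void main(String[] args) {
        System.out.println(run(100, new ReentrantLock())); // 100
    }
}
```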