Hadoop 2.7.4

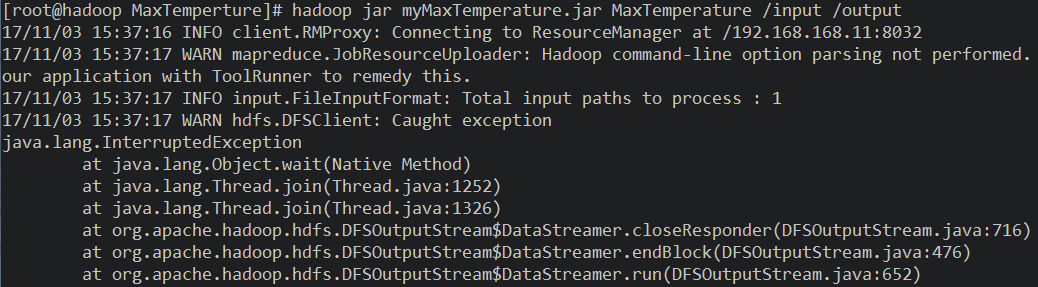

The reason is this: originally, DataStreamer::closeResponder always prints a warning about InterruptedException; since HDFS-9812, DFSOutputStream::closeImpl always forces threads to close, which causes InterruptedException.

A simple fix is to use debug level log instead of warning level.

In HDFS-9794, it has solved problem of that stream thread leak if failure happens when closing the striped outputstream. And in DFSOutputStream, it also exists the same problem in DFSOutputStream#closeImpl. If failures happen when flushing data blocks, the streamer threads will also not be closed.

When closing the DFSStripedOutputStream, if failures happen while flushing out the data/parity blocks, the streamer threads will not be closed.

这个是Hadoop的一个bug,大概的意思是:

首先是HDFS 9794的bug:

当关闭DFSStripedOutputStream的时候,如果在向data/parity块刷回数据失败的时候,streamer线程不会被关闭。同时在DFSOutputStream#closeImpl中也存在这个问题。

DFSOutputStream#closeImpl总是会强制性地关闭线程,会引起InterruptedException。

解决的方法是:将日志的级别从warning改成debug。