I. Configure hosts

Set the hostname

[root@localhost kubernetes]# hostnamectl set-hostname master69

Kubernetes is installed on four servers: one master node and three worker nodes.

master69 172.28.18.69 master69.kubernetes.blockchain.hl95.com

node120 172.28.5.120 node120.kubernetes.blockchain.hl95.com

node124 172.28.5.124 node124.kubernetes.blockchain.hl95.com

node125 172.28.5.125 node125.kubernetes.blockchain.hl95.com

[root@localhost hl95-network]# vim /etc/hosts

172.28.18.69 master69.kubernetes.blockchain.hl95.com
172.28.5.120 node120.kubernetes.blockchain.hl95.com
172.28.5.124 node124.kubernetes.blockchain.hl95.com
172.28.5.125 node125.kubernetes.blockchain.hl95.com
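The same four entries presumably have to be present on every machine. As a minimal sketch, the helper below appends them only when they are missing, so it can be re-run safely. add_cluster_hosts is a hypothetical name, and the hosts file is passed as an argument so the function can be tried on a scratch copy first.

```shell
# Hypothetical helper: append the cluster host entries to a hosts file,
# skipping any entry that is already present so re-running is harmless.
add_cluster_hosts() {
  local hosts_file="$1" entry
  while IFS= read -r entry; do
    # -q quiet, -s no error if the file is missing, -x whole line, -F literal dots
    grep -qsxF -- "$entry" "$hosts_file" || printf '%s\n' "$entry" >> "$hosts_file"
  done <<'EOF'
172.28.18.69 master69.kubernetes.blockchain.hl95.com
172.28.5.120 node120.kubernetes.blockchain.hl95.com
172.28.5.124 node124.kubernetes.blockchain.hl95.com
172.28.5.125 node125.kubernetes.blockchain.hl95.com
EOF
}
```

Usage on each node, as root: add_cluster_hosts /etc/hosts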

II. Start the clock synchronization service

[root@redis-02 ~]# systemctl start chronyd

To keep it running after a reboot, also run systemctl enable chronyd.

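III. Stop the firewall service

[root@redis-02 ~]# systemctl stop firewalld

Also run systemctl disable firewalld so that it does not start again after a reboot; otherwise the kubeadm preflight check warns that firewalld is active, as in the kubeadm init output below.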

IV. Disable SELinux

[root@redis-02 ~]# setenforce 0
setenforce: SELinux is disabled

[root@redis-02 ~]# vim /etc/selinux/config

SELINUX=disabled
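setenforce 0 only affects the running system, while /etc/selinux/config is read at boot. A non-interactive equivalent of the vim edit above, as a minimal sketch; disable_selinux_config is a hypothetical helper name:

```shell
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# The file is only read at boot; setenforce 0 handles the running system.
disable_selinux_config() {
  local config_file="$1"
  # Rewrite only the SELINUX= line; SELINUXTYPE= does not match "^SELINUX="
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config_file"
}
```

Usage: disable_selinux_config /etc/selinux/config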

V. Disable swap

[root@redis-02 ~]# swapoff -a
[root@redis-02 ~]# free -m
              total        used        free      shared  buff/cache   available
Mem:          32124        9966        9391         121       12766       21459
Swap:             0           0           0
[root@redis-02 ~]#

Make it permanent

Edit /etc/fstab and comment out the swap line:

[root@redis-02 ~]# vim /etc/fstab

/dev/mapper/centos-root / xfs defaults 0 0
UUID=a9fdc7b9-2613-4a87-a250-3fabafa3ff5e /boot xfs defaults 0 0
/dev/mapper/centos-home /home xfs defaults 0 0
#/dev/mapper/centos-swap swap swap defaults 0 0
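swapoff -a only lasts until the next reboot, which is why the fstab entry must be commented out. A sed-based sketch of the same edit, assuming a standard whitespace-separated fstab whose third field is the filesystem type; comment_out_swap is a hypothetical helper name:

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Matches lines whose third whitespace-separated field (the filesystem type)
# is "swap". Lines starting with "#" or with whitespace are not matched, so
# running it twice does not add a second "#".
comment_out_swap() {
  local fstab="$1"
  sed -ri '/^[^#[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/s/^/#/' "$fstab"
}
```

Usage: cp /etc/fstab /etc/fstab.bak && comment_out_swap /etc/fstab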

VI. Prepare the IPVS environment

[root@localhost kubernetes]# modprobe ip_vs
[root@localhost kubernetes]# modprobe ip_vs_rr
[root@localhost kubernetes]# modprobe ip_vs_wrr
[root@localhost kubernetes]# modprobe ip_vs_sh
[root@localhost kubernetes]# modprobe nf_conntrack_ipv4

[root@localhost kubernetes]# lsmod |grep ip_vs
ip_vs_sh               12688  0
ip_vs_wrr              12697  0
ip_vs_rr               12600  0
ip_vs                 145497  6 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack          139224  9 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6
libcrc32c              12644  4 xfs,ip_vs,nf_nat,nf_conntrack
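Modules loaded with modprobe are gone after a reboot. One way to make them persistent, assuming a systemd-based system such as CentOS 7, is a file under /etc/modules-load.d/, which systemd-modules-load reads at boot. The file name ipvs.conf and the helper name write_ipvs_modules_conf are illustrative choices, not part of the original setup:

```shell
# Hypothetical helper: list the IPVS-related modules in a modules-load.d file
# (one module name per line) so systemd-modules-load loads them at boot.
# nf_conntrack_ipv4 exists on the CentOS 7 3.10 kernel; on kernels 4.19 and
# later it was merged into nf_conntrack, so use that name there instead.
write_ipvs_modules_conf() {
  local conf_file="$1"
  cat > "$conf_file" <<'EOF'
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack_ipv4
EOF
}
```

Usage: write_ipvs_modules_conf /etc/modules-load.d/ipvs.conf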

VII. Enable Docker at boot and set its cgroup driver to systemd

[root@localhost kubernetes]# vim /etc/docker/daemon.json

The file must contain a JSON object, so the setting goes inside braces:

{
  "exec-opts": ["native.cgroupdriver=systemd"]
}

Restart Docker and check the cgroup driver:

[root@localhost kubernetes]# systemctl restart docker

[root@localhost kubernetes]# docker info|grep Cgroup
Cgroup Driver: systemd
[root@localhost kubernetes]#

[root@redis-02 kubernetes]# systemctl enable docker

VIII. Configure kubernetes.repo

Using the Alibaba Cloud mirror:

[root@redis-02 ~]# vim /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=kubernetes Repo
enabled=1
gpgcheck=1
repo_gpgcheck=1
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

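IX. Install kubelet, kubeadm and kubectl version 1.18.5

Version 1.18.5 is pinned here. Without a version, yum installs the latest release (1.20.1 at the time of writing), which did not pass testing on CentOS 7.7.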

[root@redis-02 ~]# yum install kubelet-1.18.5 kubeadm-1.18.5 kubectl-1.18.5 -y

Make sure the br_netfilter module is loaded before the sysctl step that follows. Check with lsmod | grep br_netfilter; to load it explicitly, run modprobe br_netfilter.

[root@redis-02 ~]# lsmod|grep br_netfilter
br_netfilter           22256  0
bridge                146976  1 br_netfilter

[root@redis-02 ~]# modprobe br_netfilter
[root@redis-02 ~]#

X. Configure /etc/sysctl.d/k8s.conf

[root@redis-02 ~]# vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1

[root@redis-02 ~]# sysctl --system

XI. Enable ip_forward

[root@redis-02 ~]# vim /etc/sysctl.conf

net.ipv4.ip_forward=1

Then apply it with sysctl -p.
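The steps above write the bridge settings to /etc/sysctl.d/k8s.conf and ip_forward to /etc/sysctl.conf. As an alternative sketch, all three parameters can live in the one drop-in file, with br_netfilter also listed under /etc/modules-load.d/ so the net.bridge.* keys are available at boot (they only exist once that module is loaded). The helper name and the br_netfilter.conf file name are assumptions:

```shell
# Hypothetical helper: keep every kernel parameter this guide sets in one
# sysctl drop-in, and list br_netfilter for loading at boot so the
# net.bridge.* keys exist when the settings are applied.
write_k8s_kernel_settings() {
  local sysctl_file="$1" modules_file="$2"
  cat > "$sysctl_file" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
  # modules-load.d format: one module name per line
  printf 'br_netfilter\n' > "$modules_file"
}
```

Usage: write_k8s_kernel_settings /etc/sysctl.d/k8s.conf /etc/modules-load.d/br_netfilter.conf && modprobe br_netfilter && sysctl --system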

XII. Enable kubelet at boot

[root@redis-02 ~]# systemctl enable kubelet
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
[root@redis-02 ~]#

XIII. List the required images and pull them

[root@redis-02 ~]# kubeadm config images list
k8s.gcr.io/kube-apiserver:v1.18.5
k8s.gcr.io/kube-controller-manager:v1.18.5
k8s.gcr.io/kube-scheduler:v1.18.5
k8s.gcr.io/kube-proxy:v1.18.5
k8s.gcr.io/pause:3.2
k8s.gcr.io/etcd:3.4.3-0
k8s.gcr.io/coredns:1.6.7
[root@redis-02 ~]#

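Pull the following images on the master node 172.28.18.69.

The k8s.gcr.io images cannot be pulled by default, so pull them from Docker Hub (the mirrorgcrio repositories) instead: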

[root@redis-02 ~]# docker pull mirrorgcrio/kube-apiserver:v1.18.5

[root@redis-02 ~]# docker pull mirrorgcrio/kube-controller-manager:v1.18.5
[root@redis-02 ~]# docker pull mirrorgcrio/kube-scheduler:v1.18.5
[root@redis-02 ~]# docker pull mirrorgcrio/kube-proxy:v1.18.5
[root@redis-02 ~]# docker pull mirrorgcrio/pause:3.2
[root@redis-02 ~]# docker pull mirrorgcrio/etcd:3.4.3-0
[root@redis-02 ~]# docker pull mirrorgcrio/coredns:1.6.7

Retag the downloaded images with their k8s.gcr.io names:

[root@localhost blockchain-explorer]# docker tag mirrorgcrio/kube-apiserver:v1.18.5 k8s.gcr.io/kube-apiserver:v1.18.5
[root@localhost blockchain-explorer]# docker tag mirrorgcrio/kube-controller-manager:v1.18.5 k8s.gcr.io/kube-controller-manager:v1.18.5
[root@localhost blockchain-explorer]# docker tag mirrorgcrio/kube-scheduler:v1.18.5 k8s.gcr.io/kube-scheduler:v1.18.5
[root@localhost blockchain-explorer]# docker tag mirrorgcrio/kube-proxy:v1.18.5 k8s.gcr.io/kube-proxy:v1.18.5
[root@localhost blockchain-explorer]# docker tag mirrorgcrio/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0
[root@localhost blockchain-explorer]# docker tag mirrorgcrio/coredns:1.6.7 k8s.gcr.io/coredns:1.6.7
[root@localhost blockchain-explorer]# docker tag mirrorgcrio/pause:3.2 k8s.gcr.io/pause:3.2

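Remove the mirror tags. Each image is still tagged k8s.gcr.io/..., so docker rmi on the mirrorgcrio tag only removes that tag and leaves the image itself in place: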

[root@localhost blockchain-explorer]# docker rmi -f mirrorgcrio/kube-apiserver:v1.18.5

[root@localhost blockchain-explorer]# docker rmi -f mirrorgcrio/kube-controller-manager:v1.18.5

[root@localhost blockchain-explorer]# docker rmi -f mirrorgcrio/kube-scheduler:v1.18.5

[root@localhost blockchain-explorer]# docker rmi -f mirrorgcrio/kube-proxy:v1.18.5

[root@localhost blockchain-explorer]# docker rmi -f mirrorgcrio/etcd:3.4.3-0

[root@localhost blockchain-explorer]# docker rmi -f mirrorgcrio/coredns:1.6.7

[root@localhost blockchain-explorer]# docker rmi -f mirrorgcrio/pause:3.2
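The pull, tag and remove sequence repeats the same three commands for every image. A loop-based sketch, assuming every image exists under the mirrorgcrio namespace on Docker Hub with the same name and tag (as it does for the seven images used here); mirror_k8s_images, run and the DRY_RUN switch are hypothetical names:

```shell
# Print the command instead of running it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: for each k8s.gcr.io image given as an argument, pull the
# same name:tag from the mirrorgcrio namespace on Docker Hub, retag it as
# k8s.gcr.io/..., then remove the mirror tag.
mirror_k8s_images() {
  local image mirror
  for image in "$@"; do
    # k8s.gcr.io/kube-proxy:v1.18.5 -> mirrorgcrio/kube-proxy:v1.18.5
    mirror="mirrorgcrio/${image#k8s.gcr.io/}"
    run docker pull "$mirror"
    run docker tag "$mirror" "$image"
    # The image keeps its k8s.gcr.io tag, so this only removes the mirror tag
    run docker rmi "$mirror"
  done
}
```

Usage: preview with DRY_RUN=1 mirror_k8s_images $(kubeadm config images list --kubernetes-version v1.18.5), then run it again without DRY_RUN=1.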

Final image list:

[root@localhost kubernetes]# docker images|grep k8s.gcr.io

k8s.gcr.io/kube-proxy v1.18.5 a1daed4e2b60 6 months ago 117MB

k8s.gcr.io/kube-apiserver v1.18.5 08ca24f16874 6 months ago 173MB

k8s.gcr.io/kube-controller-manager v1.18.5 8d69eaf196dc 6 months ago 162MB

k8s.gcr.io/kube-scheduler v1.18.5 39d887c6621d 6 months ago 95.3MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 10 months ago 683kB

k8s.gcr.io/coredns 1.6.7 67da37a9a360 11 months ago 43.8MB

k8s.gcr.io/etcd 3.4.3-0 303ce5db0e90 14 months ago 288MB

Worker nodes only need the kube-proxy, coredns and pause images:

[root@redis-02 ~]# docker images|grep k8s.gcr.io
k8s.gcr.io/pause        3.2       80d28bedfe5d   10 months ago   683kB
k8s.gcr.io/kube-proxy   v1.20.0   cb2b1b8da1eb   4 years ago     166MB
k8s.gcr.io/coredns      1.6.7     67da37a9a360   11 months ago   43.8MB
[root@redis-02 ~]#

XIV. Export the default cluster initialization configuration

[root@localhost kubernetes]# kubeadm config print init-defaults>kubeadm.yaml

Modify the relevant settings:

[root@localhost kubernetes]# vim kubeadm.yaml

advertiseAddress: 172.28.18.69

dnsDomain: master69.kubernetes.blockchain.hl95.com

podSubnet: 10.244.0.0/16

serviceSubnet: 10.96.0.0/12

kubernetesVersion: v1.18.5
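The settings above belong to different objects in the generated file: advertiseAddress sits under localAPIEndpoint in InitConfiguration, while kubernetesVersion and the networking block sit in ClusterConfiguration. A minimal sketch of a config containing only those fields, assuming the kubeadm.k8s.io/v1beta2 API that kubeadm 1.18 uses. networking.dnsDomain is the DNS suffix for in-cluster Service names (kubeadm's default is cluster.local), and podSubnet 10.244.0.0/16 matches the default network in kube-flannel.yml. The last document, which selects IPVS mode for kube-proxy at init time, is an optional alternative to editing the kube-proxy ConfigMap after installation. write_kubeadm_config is a hypothetical helper:

```shell
# Hypothetical helper: write a minimal kubeadm config holding only the fields
# changed in this step, placed where the v1beta2 API expects them.
write_kubeadm_config() {
  local out="$1"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.28.18.69
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.5
networking:
  dnsDomain: master69.kubernetes.blockchain.hl95.com
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
---
# Optional: run kube-proxy in IPVS mode from the start
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

Usage: write_kubeadm_config kubeadm.yaml && kubeadm init --config=kubeadm.yaml. With this minimal file kubeadm generates a random bootstrap token instead of the abcdef.0123456789abcdef token from init-defaults.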

XV. Initialize the cluster

Run on the master node:

[root@localhost kubernetes]# kubeadm init --config=kubeadm.yaml

W0103 21:14:22.685612 1255 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.5

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [localhost kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.28.18.69]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost localhost] and IPs [172.28.18.69 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost localhost] and IPs [172.28.18.69 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0103 21:14:36.238987 1255 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0103 21:14:36.240883 1255 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 175.091427 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node localhost as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node localhost as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.28.18.69:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:bb92e76902047bab95904938f94684430c282fd7091c67139bcf998984e00208

Run these commands as instructed:

[root@localhost ~]# mkdir -p $HOME/.kube
[root@localhost ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@localhost ~]# chown $(id -u):$(id -g) $HOME/.kube/config

If initialization fails and you need to initialize the cluster again, first run kubeadm reset -f to clear the cluster data:

[root@localhost blockchain-explorer]# kubeadm reset -f

Then run kubeadm init again.

XVI. Check the nodes

[root@localhost kubernetes]# kubectl get nodes
NAME        STATUS     ROLES    AGE    VERSION
localhost   NotReady   master   4m8s   v1.18.5

There is now one master node, and it is NotReady because no network plugin has been installed yet.

Check the status of the cluster components:

[root@localhost kubernetes]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
etcd-0               Healthy   {"health":"true"}
scheduler            Healthy   ok
controller-manager   Healthy   ok
[root@localhost kubernetes]#

Check the system pods:

[root@localhost kubernetes]# kubectl get pods -n kube-system
NAME                                READY   STATUS    RESTARTS   AGE
coredns-66bff467f8-6xkqw            0/1     Pending   0          5m40s
coredns-66bff467f8-lhrf2            0/1     Pending   0          5m40s
etcd-localhost                      1/1     Running   0          5m48s
kube-apiserver-localhost            1/1     Running   0          5m48s
kube-controller-manager-localhost   1/1     Running   0          5m48s
kube-proxy-2qmxm                    1/1     Running   0          5m40s
kube-scheduler-localhost            1/1     Running   0          5m48s

XVII. Install the flannel network plugin

Download kube-flannel.yml and install it with kubectl:

[root@localhost kubernetes]# curl -k -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If it fails with:

  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:--  0:00:05 --:--:--     0
curl: (7) Failed connect to raw.githubusercontent.com:443; Connection refused

add a resolution entry for the domain to /etc/hosts:

[root@localhost kubernetes]# vim /etc/hosts
199.232.96.133 raw.githubusercontent.com

[root@localhost kubernetes]# curl -k -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4821 100 4821 0 0 4600 0 0:00:01 0:00:01 --:--:-- 4600

[root@localhost kubernetes]#

Install flannel:

[root@localhost kubernetes]# kubectl apply -f kube-flannel.yml
podsecuritypolicy.policy/psp.flannel.unprivileged created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
[root@localhost kubernetes]#

Check the nodes again:

[root@localhost etcd]# kubectl get nodes
NAME        STATUS   ROLES    AGE   VERSION
localhost   Ready    master   19m   v1.18.5

The node is now Ready. Check the system pods:

[root@localhost etcd]# kubectl get pods -n kube-system
NAME                                READY   STATUS    RESTARTS   AGE
coredns-66bff467f8-6xkqw            1/1     Running   0          19m
coredns-66bff467f8-lhrf2            1/1     Running   0          19m
etcd-localhost                      1/1     Running   0          19m
kube-apiserver-localhost            1/1     Running   0          19m
kube-controller-manager-localhost   1/1     Running   0          19m
kube-flannel-ds-fctp9               1/1     Running   0          5m26s
kube-proxy-2qmxm                    1/1     Running   0          19m
kube-scheduler-localhost            1/1     Running   0          19m

kube-flannel is running, and all pods are in the Running state.

The master node is now fully configured.

XVIII. Prepare the kube-proxy and pause images on the worker nodes

[root@redis-03 ~]# docker images|grep k8s
k8s.gcr.io/kube-proxy   v1.18.5   a1daed4e2b60   6 months ago    117MB
k8s.gcr.io/pause        3.2       80d28bedfe5d   10 months ago   683kB

XIX. Copy /run/flannel/subnet.env from the master node to each worker node

[root@localhost kubernetes]# scp -P25601 /run/flannel/subnet.env root@172.28.5.120:/run/flannel/
[root@localhost kubernetes]# scp -P25601 /run/flannel/subnet.env root@172.28.5.124:/run/flannel/
[root@localhost kubernetes]# scp -P25601 /run/flannel/subnet.env root@172.28.5.125:/run/flannel/

XX. Add the worker nodes

On each worker node, run the kubeadm join command printed at the end of initialization; this joins the node to the cluster:

[root@redis-01 kubernetes]# kubeadm join 172.28.18.69:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:bb92e76902047bab95904938f94684430c282fd7091c67139bcf998984e00208
W0104 15:47:23.782612 34832 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
[preflight] Running pre-flight checks
[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
[WARNING Hostname]: hostname "redis-01.hlqxt" could not be reached
[WARNING Hostname]: hostname "redis-01.hlqxt": lookup redis-01.hlqxt on 202.106.0.20:53: no such host
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

After running kubeadm join on all three worker nodes, check the node status on the master:

[root@localhost kubernetes]# kubectl get nodes
NAME             STATUS   ROLES    AGE     VERSION
localhost        Ready    master   5h6m    v1.18.5
redis-01.hlqxt   Ready    <none>   48m     v1.18.5
redis-02.hlqxt   Ready    <none>   2m8s    v1.18.5
redis-03.hlqxt   Ready    <none>   2m30s   v1.18.5

Check the system pods on the master:

[root@localhost kubernetes]# kubectl get pods -n kube-system
NAME                                READY   STATUS    RESTARTS   AGE
coredns-66bff467f8-95n7v            1/1     Running   0          5h6m
coredns-66bff467f8-vhg9r            1/1     Running   0          5h6m
etcd-localhost                      1/1     Running   0          5h7m
kube-apiserver-localhost            1/1     Running   0          5h7m
kube-controller-manager-localhost   1/1     Running   0          5h7m
kube-flannel-ds-8bx7f               1/1     Running   0          49m
kube-flannel-ds-drfqk               1/1     Running   0          2m53s
kube-flannel-ds-hx4tk               1/1     Running   0          3m15s
kube-flannel-ds-spl2l               1/1     Running   0          5h4m
kube-proxy-72bhk                    1/1     Running   0          2m53s
kube-proxy-jft52                    1/1     Running   0          3m15s
kube-proxy-lpt8q                    1/1     Running   0          49m
kube-proxy-r9hwc                    1/1     Running   0          5h6m
kube-scheduler-localhost            1/1     Running   0          5h7m

Each worker node runs three containers: kube-proxy, kube-flannel and k8s.gcr.io/pause:3.2.

XXI. Enable IPVS mode in kube-proxy

[root@localhost kubernetes]# kubectl edit configmap kube-proxy -n kube-system

Change mode: "" to mode: "ipvs":

mode: "ipvs"

Save and exit, then delete the kube-proxy pods in kube-system; the kube-proxy DaemonSet recreates them automatically with the new configuration:

[root@localhost kubernetes]# kubectl delete pod $(kubectl get pods -n kube-system|grep kube-proxy|awk '{print $1}') -n kube-system
pod "kube-proxy-72bhk" deleted
pod "kube-proxy-jft52" deleted
pod "kube-proxy-lpt8q" deleted
pod "kube-proxy-r9hwc" deleted

[root@localhost kubernetes]# kubectl get pods -n kube-system|grep kube-proxy
kube-proxy-2564b   1/1     Running   0          13s
kube-proxy-jltvv   1/1     Running   0          20s
kube-proxy-kdv62   1/1     Running   0          16s
kube-proxy-slgcq

Check the kube-proxy log:

[root@localhost kubernetes]# kubectl logs -f kube-proxy-2564b -n kube-system
I0106 01:36:04.729433 1 node.go:136] Successfully retrieved node IP: 172.28.5.120
I0106 01:36:04.729498 1 server_others.go:259] Using ipvs Proxier.
W0106 01:36:04.729899 1 proxier.go:429] IPVS scheduler not specified, use rr by default
I0106 01:36:04.730189 1 server.go:583] Version: v1.18.5
I0106 01:36:04.730753 1 conntrack.go:52] Setting nf_conntrack_max to 131072
I0106 01:36:04.731044 1 config.go:133] Starting endpoints config controller
I0106 01:36:04.731084 1 shared_informer.go:223] Waiting for caches to sync for endpoints config
I0106 01:36:04.731095 1 config.go:315] Starting service config controller
I0106 01:36:04.731110 1 shared_informer.go:223] Waiting for caches to sync for service config
I0106 01:36:04.831234 1 shared_informer.go:230] Caches are synced for endpoints config
I0106 01:36:04.831262 1 shared_informer.go:230] Caches are synced for service config

The line "Using ipvs Proxier" shows that IPVS is in use; check the kube-proxy log on each of the other nodes the same way.

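The Kubernetes cluster is now up. Next, create an nginx demo: a Deployment with 2 replicas, so the scheduler places two nginx containers on worker nodes and keeps both instances running.

Deployment, ReplicaSet and Pod are nested: a Deployment owns ReplicaSets, and each ReplicaSet owns Pods. All of these resources belong to a namespace, as shown in the figure below.

1. Create the Deployment and Pods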

[root@redis-02 kubernetes]# mkdir nginx-demo

[root@localhost kubernetes]# vim nginx-demo/nginx-demo-deployment.yaml

apiVersion: apps/v1          # API version
kind: Deployment
metadata:
  labels:
    app: nginx-k8s-demo
  name: nginx-demo-deployment
spec:
  replicas: 2                # number of replicas
  selector:
    matchLabels:
      app: nginx-k8s-demo
  template:                  # Pod template
    metadata:
      labels:
        app: nginx-k8s-demo
    spec:
      containers:
      - image: docker.io/nginx:latest
        name: nginx-k8s-demo
        ports:
        - containerPort: 80  # port exposed to the Service

Create it:

[root@localhost kubernetes]# kubectl create -f nginx-demo/nginx-demo-deployment.yaml
deployment.apps/nginx-demo-deployment created
[root@localhost kubernetes]#

2. View the Deployment

[root@localhost nginx-demo]# kubectl get deployment -n default -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-demo-deployment 2/2 2 2 5h11m nginx-k8s-demo docker.io/nginx:latest app=nginx-k8s-demo

3. View the ReplicaSet

[root@localhost log]# kubectl get replicaset -o wide
NAME                               DESIRED   CURRENT   READY   AGE   CONTAINERS       IMAGES                   SELECTOR
nginx-demo-deployment-59fbc48594   2         2         2       9h    nginx-k8s-demo   docker.io/nginx:latest   app=nginx-k8s-demo,pod-template-hash=59fbc48594

4. View the Pods

[root@localhost nginx-demo]# kubectl get pods -n default -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-demo-deployment-59fbc48594-2dr7k 1/1 Running 0 5h9m 10.244.3.2 redis-03.hlqxt <none> <none>

nginx-demo-deployment-59fbc48594-xw6bq 1/1 Running 0 5h9m 10.244.4.2 redis-02.hlqxt <none> <none>

Both pods are Running, on two different worker nodes.

5. View pod details

[root@localhost log]# kubectl describe pods nginx-demo-deployment-59fbc48594-2x26z
Name:         nginx-demo-deployment-59fbc48594-2x26z
Namespace:    default
Priority:     0
Node:         redis-01.hlqxt/172.28.5.120
Start Time:   Mon, 04 Jan 2021 23:55:28 +0800
Labels:       app=nginx-k8s-demo
              pod-template-hash=59fbc48594
Annotations:  <none>
Status:       Running
IP:           10.244.2.2
IPs:
  IP:           10.244.2.2
Controlled By:  ReplicaSet/nginx-demo-deployment-59fbc48594
Containers:
  nginx-k8s-demo:
    Container ID:   docker://a932b856f0d6422fb8c22e1702f5640e5dc333a32576c57d80a4de3a4507fa54
    Image:          docker.io/nginx:latest
    Image ID:       docker-pullable://nginx@sha256:4cf620a5c81390ee209398ecc18e5fb9dd0f5155cd82adcbae532fec94006fb9
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Mon, 04 Jan 2021 23:55:47 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-b9k9b (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-b9k9b:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-b9k9b
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:          <none>

6. Create the Service

[root@localhost kubernetes]# vim nginx-demo/nginx-demo-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx-demo-service
spec:
  selector:
    app: nginx-k8s-demo
  ports:
  - name: http
    protocol: TCP
    port: 81          # Service port on the ClusterIP
    targetPort: 80    # nginx container port
    nodePort: 30001   # port opened on every node
  type: NodePort

[root@localhost nginx-demo]# kubectl create -f nginx-demo-service.yaml
service/nginx-demo-service created
[root@localhost nginx-demo]#

7. View Service details

[root@localhost nginx-demo]# kubectl describe service nginx-demo-service
Name:                     nginx-demo-service
Namespace:                default
Labels:                   <none>
Annotations:
Selector:                 app=nginx-k8s-demo
Type:                     NodePort
IP:                       10.107.6.219
Port:                     http  81/TCP
TargetPort:               80/TCP
NodePort:                 http  30001/TCP
Endpoints:                10.244.2.2:80,10.244.4.3:80
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>

8. List the Services

[root@localhost nginx-demo]# kubectl get svc -n default -o wide
NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE     SELECTOR
kubernetes           ClusterIP   10.96.0.1       <none>        443/TCP        11h     <none>
nginx-demo-service   NodePort    10.106.28.201   <none>        81:30001/TCP   4m59s   app=nginx-k8s-demo

You can view the Service's IPVS load-balancing rules with ipvsadm -Ln | grep 10.106.28.201 -A 3 (install the tool first with yum install -y ipvsadm if it is missing):

[root@master69 etcd-v3.4.14-linux-amd64]# ipvsadm -Ln|grep 10.106.28.201 -A 3
TCP  10.106.28.201:81 rr
  -> 10.244.3.2:80                Masq    1      0          0
  -> 10.244.4.2:80                Masq    1      0          0

From another server, access port 30001 on the master node's IP (a NodePort Service listens on that port on every node):

[root@redis-01 kubernetes]# curl 172.28.18.69:30001
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

nginx is reachable.

9. Update the application

There are two ways.

Method 1: edit the YAML file and apply it with kubectl. For example, change the Deployment's replica count to 3:

[root@localhost nginx-demo]# vim nginx-demo-deployment.yaml
  replicas: 3                # number of replicas

Apply the updated Deployment:

[root@localhost nginx-demo]# kubectl apply -f nginx-demo-deployment.yaml
deployment.apps/nginx-demo-deployment configured

Check the ReplicaSet:

[root@localhost nginx-demo]# kubectl get replicaset
NAME                               DESIRED   CURRENT   READY   AGE
nginx-demo-deployment-59fbc48594   3         3         3       9h

It now has 3 replicas. List the pods; there are 3:

[root@localhost nginx-demo]# kubectl get pods -n default
NAME                                     READY   STATUS    RESTARTS   AGE
nginx-demo-deployment-59fbc48594-mhjx8   1/1     Running   0          25m
nginx-demo-deployment-59fbc48594-wrqv6   1/1     Running   0          60s
nginx-demo-deployment-59fbc48594-zwpb5   1/1     Running   0          24m

Method 2: edit the live resource in the cluster with kubectl edit. For example, edit the Deployment and set the replica count back to 2:

[root@localhost nginx-demo]# kubectl edit deployment nginx-demo-deployment

            f:terminationGracePeriodSeconds: {}
    manager: kubectl
    operation: Update
    time: "2021-01-05T01:43:02Z"
  name: nginx-demo-deployment
  namespace: default
  resourceVersion: "194731"
  selfLink: /apis/apps/v1/namespaces/default/deployments/nginx-demo-deployment
  uid: e87087a3-c091-4fea-94a0-36a2ab793877
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: nginx-k8s-demo
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-k8s-demo
    spec:
      containers:
      - image: docker.io/nginx:latest
        imagePullPolicy: Always
        name: nginx-k8s-demo
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}

In the editor, change replicas: 3 to replicas: 2 (the excerpt above already shows the new value), then save and exit:

"/tmp/kubectl-edit-jn880.yaml" 159L, 4857C written
deployment.apps/nginx-demo-deployment edited
[root@localhost nginx-demo]#

Check the ReplicaSet:

[root@localhost nginx-demo]# kubectl get replicaset
NAME                               DESIRED   CURRENT   READY   AGE
nginx-demo-deployment-59fbc48594   2         2         2       9h

The change has taken effect.

10. Delete the Service

[root@localhost nginx-demo]# kubectl delete -f nginx-demo-service.yaml
service "nginx-demo-service" deleted
[root@localhost nginx-demo]#

11. Delete the Deployment

[root@localhost nginx-demo]# kubectl delete -f nginx-demo-deployment.yaml
deployment.apps "nginx-demo-deployment" deleted

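12. Delete a pod

[root@localhost log]# kubectl delete pods nginx-demo-deployment-59fbc48594-2x26z
pod "nginx-demo-deployment-59fbc48594-2x26z" deleted

If the pod belongs to a Deployment that still exists, its ReplicaSet immediately creates a replacement pod; delete the Deployment to remove its pods for good.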

13. List all namespaces in the cluster

[root@localhost log]# kubectl get ns
NAME              STATUS   AGE
default           Active   20h
kube-node-lease   Active   20h
kube-public       Active   20h
kube-system       Active   20h

14. View the container logs of a pod

[root@localhost nginx-demo]# kubectl logs nginx-demo-deployment-59fbc48594-mhjx8
/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration
/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/
/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh
10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf
10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf
/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh
/docker-entrypoint.sh: Configuration complete; ready for start up
10.244.0.0 - - [05/Jan/2021:01:21:04 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
10.244.0.0 - - [05/Jan/2021:01:21:29 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
10.244.0.0 - - [05/Jan/2021:01:21:37 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
10.244.0.0 - - [05/Jan/2021:01:21:37 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"
10.244.0.0 - - [05/Jan/2021:01:21:38 +0000] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"

15. Open a shell in a pod's container

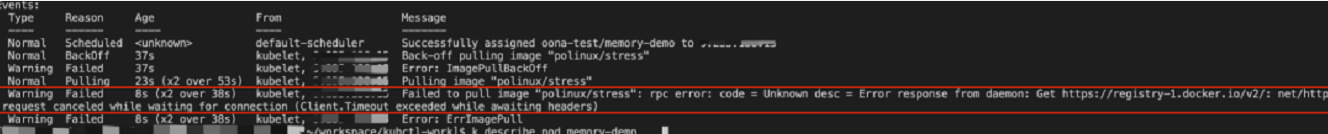

[root@localhost nginx-demo]# kubectl exec -it nginx-demo-deployment-59fbc48594-mhjx8 -- /bin/bash

Everything after -- is the command run inside the container; the older form without -- is deprecated in this kubectl version.

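16. Troubleshooting problems when deploying pods, Deployments or Services

Run kubectl describe service (or deployment, or pods) <name>.

Scroll to the Events section at the end of the output; it shows the key log entries Kubernetes recorded during the deployment. In this example, pulling the image failed.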

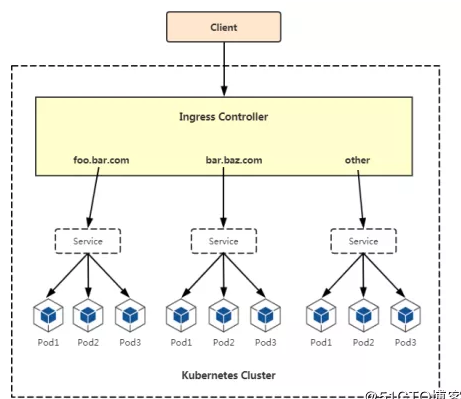

17. Kubernetes cluster architecture diagram

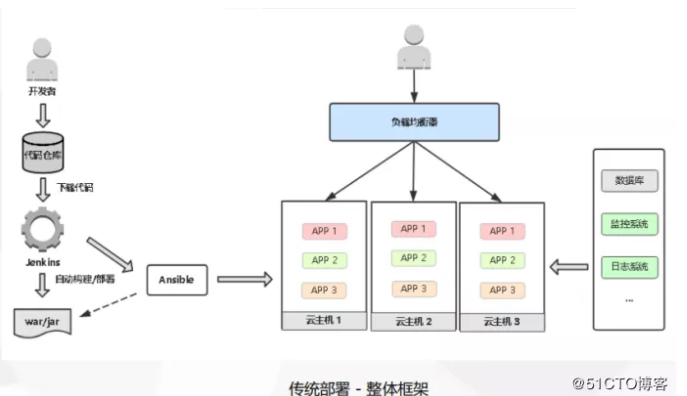

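18. Traditional project deployment architecture

Developers first push code to a code repository, typically Git or GitLab. After a commit, a CI/CD platform pulls, compiles and builds the code into a WAR package, which Ansible then ships to cloud hosts or physical machines. A load balancer exposes the application, and databases, monitoring and logging systems provide the supporting services.

19. Project deployment architecture based on a Kubernetes cluster

Developers again put the code in a code repository, and Jenkins pulls the code, compiles it, and uploads the result to our image registry.

Here the code is packaged into an image rather than an executable WAR or JAR. The image contains both the project's runtime environment and its code, so it can run on any Docker host and be accessed there. First make sure the image deploys correctly on plain Docker, then deploy it to Kubernetes. Built images are stored in the image registry so they can be managed centrally.

Because dozens or even hundreds of images may be produced every day, they have to be managed through a registry. A script may then connect to the Kubernetes master to deploy, Kubernetes schedules the pods according to the deployment, and the application is published to users through an Ingress. Each Ingress is associated with a group of pods: a Service load-balances across that group and identifies which pods, on whichever nodes, belong to it.

The database sits outside the cluster. The monitoring and logging systems can run either inside or outside the Kubernetes cluster. We run them inside, because they are not particularly sensitive, are used mainly for operations and development debugging, and do not affect the business, so we deploy them in Kubernetes first.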