I. Offline installation

https://cloud.tencent.com/developer/article/1445946

https://github.com/liul85/sealos provides the offline kube1.16.0.tar.gz package

https://sealyun.oss-cn-beijing.aliyuncs.com/37374d999dbadb788ef0461844a70151-1.16.0/kube1.16.0.tar.gz

https://sealyun.oss-cn-beijing.aliyuncs.com/7b6af025d4884fdd5cd51a674994359c-1.18.0/kube1.18.0.tar.gz

https://sealyun.oss-cn-beijing.aliyuncs.com/a4f6fa2b1721bc2bf6fe3172b72497f2-1.17.12/kube1.17.12.tar.gz

Installing with sealos produced the following:

1. [root@host-10-14-69-125 kubernetes]# kubectl version

Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.0", GitCommit:"9e991415386e4cf155a24b1da15becaa390438d8", GitTreeState:"clean", BuildDate:"2020-03-25T14:58:59Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}

Error from server (InternalError): an error on the server ("") has prevented the request from succeeding

(To be completed.)

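II. Online installation

1. Prerequisites

1) Operating system: CentOS 7.6; see "Linux Study Notes (1): Installing CentOS 7.6"

2) The servers on which Kubernetes will be installed must have Internet access

3) Prepare two servers: one as the k8s master node, the other as the k8s worker node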

2. Preparation

(1) Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

(2) Change the hostname and make it resolvable via /etc/hosts

hostnamectl set-hostname k8s-2   # k8s-2 is the hostname of the master server

echo "127.0.0.1 k8s-2" >> /etc/hosts
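Appending with `>>` adds a duplicate line every time this step is rerun. Below is a minimal sketch of an idempotent alternative, assuming bash and awk; the function name `add_host_entry` is hypothetical, and the optional third argument lets you try it on a scratch file before touching /etc/hosts:

```bash
#!/usr/bin/env bash
# Hypothetical helper: add "IP HOSTNAME" to a hosts file unless a
# non-comment line already maps that hostname. Defaults to /etc/hosts.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 only if some non-comment line lists $name in a hostname column
  if awk -v n="$name" '
      !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file" 2>/dev/null; then
    echo "skip: $name is already mapped in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $ip $name"
  fi
}
```

For example, `add_host_entry 127.0.0.1 k8s-2` covers this step, and `add_host_entry 199.232.28.133 raw.githubusercontent.com` covers the flannel workaround in step 8.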

(3) Synchronize the system clock (using Aliyun's NTP server)

yum install -y ntp

ntpdate ntp1.aliyun.com

(4) Install common tools

yum install -y wget

3. Install Docker

# Install prerequisite packages

yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker yum repository

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Refresh the yum cache and install Docker CE

yum makecache fast

yum -y install docker-ce

# Start Docker

service docker start

# Start Docker automatically at boot

systemctl enable docker

# Check the Docker service status

systemctl status docker

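4. Configure Docker

mkdir -p /etc/docker

# "registry-mirrors": use an Aliyun image accelerator as the registry mirror
# "exec-opts": change Docker's cgroup driver from the default cgroupfs to systemd; kubeadm recommends systemd, and Docker and the kubelet must use the same driver
# JSON does not allow comments, so they are kept outside the file

tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://obww7jh1.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

# Reload systemd's configuration

systemctl daemon-reload

# Restart Docker so that it reads the new daemon.json

systemctl restart docker

# Show information about the installed Docker (the "Cgroup Driver" line should now read systemd)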

docker info
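The accelerator address above (`https://obww7jh1.mirror.aliyuncs.com`) appears to be tied to one Aliyun account, so you will probably want your own. Below is a minimal sketch, assuming bash; `render_daemon_json` is a hypothetical helper that prints the same two settings for a mirror URL you pass in, keeping the output valid JSON (no comments inside):

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a daemon.json containing a registry mirror and
# the systemd cgroup driver. JSON has no comment syntax, so comments stay here.
render_daemon_json() {
  local mirror="$1"
  if [ -z "$mirror" ]; then
    echo "usage: render_daemon_json <mirror-url>" >&2
    return 1
  fi
  cat <<EOF
{
  "registry-mirrors": ["${mirror}"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Usage, with your own accelerator address substituted: `render_daemon_json https://<your-id>.mirror.aliyuncs.com | tee /etc/docker/daemon.json`, then `systemctl daemon-reload` and `systemctl restart docker` as above.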

5. Install Kubernetes

# Add the Kubernetes yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# List the available versions (this install uses 1.17.12-0)

yum --showduplicates list kubelet | expand

# Install kubelet, kubeadm and kubectl

yum install -y kubelet-1.17.12-0 kubeadm-1.17.12-0 kubectl-1.17.12-0
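This step pins 1.17.12-0 by hand. To pick the newest patch release of a given minor version instead, a small filter over the `yum --showduplicates list kubelet` output is enough. A sketch, assuming bash, GNU `sort -V`, and the usual three-column yum output (package, version, repo); `latest_patch` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `yum --showduplicates list kubelet` output on
# stdin and print the highest kubelet version that starts with the given
# minor version, e.g. "latest_patch 1.17".
latest_patch() {
  local minor="$1"
  # index(...) == 1 keeps versions that begin with "<minor>."
  awk -v m="$minor." '$1 ~ /^kubelet\./ && index($2, m) == 1 { print $2 }' |
    sort -V | tail -n 1
}
```

For example, `v=$(yum --showduplicates list kubelet | latest_patch 1.17)` followed by `yum install -y kubelet-$v kubeadm-$v kubectl-$v`, assuming the three packages are published with matching version strings, as they normally are in this repository.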

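6. Configure Kubernetes

# Put SELinux into permissive mode: setenforce for the running system, the config file for later boots

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Let iptables see bridged traffic and enable IP forwarding

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Apply k8s.conf (the br_netfilter module must be loaded first)

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

# Disable swap: open /etc/fstab with vi and comment out the swap entry, for example

# /dev/mapper/centos-swap swap swap defaults 0 0

# After saving and quitting vi, run

swapoff -a

# Start the kubelet automatically at boot

systemctl enable kubelet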
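Editing /etc/fstab by hand in vi works, but is easy to get wrong when repeated on several machines. A non-interactive sketch, assuming GNU sed; `comment_out_swap` is a hypothetical helper that prefixes `#` to every uncommented line containing a whitespace-delimited `swap` field and keeps a `.bak` copy of the original:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out swap entries in an fstab-style file.
# Keeps the original as <file>.bak; defaults to /etc/fstab.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; on the others, prefix "#" if the
  # line has a whitespace-delimited "swap" field (the filesystem type column).
  sed -i.bak '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}
```

Usage: `comment_out_swap && swapoff -a`. Running it twice is harmless, because lines that are already commented are skipped.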

7. Initialize the master node of the k8s cluster

kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository=registry.aliyuncs.com/google_containers

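--pod-network-cidr: the pod network range, required for installing flannel later; it must be 10.244.0.0/16, the network that flannel's manifest uses by default

--image-repository: the registry from which kubeadm pulls the control-plane images (here Aliyun's mirror of the Google container images)

Running the command above produces the following log: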

[root@k8s-2 opt]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository=registry.aliyuncs.com/google_containers
W1013 10:38:56.543641 19539 version.go:101] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
W1013 10:38:56.543871 19539 version.go:102] falling back to the local client version: v1.17.12
W1013 10:38:56.544488 19539 validation.go:28] Cannot validate kube-proxy config - no validator is available
W1013 10:38:56.544515 19539 validation.go:28] Cannot validate kubelet config - no validator is available
[init] Using Kubernetes version: v1.17.12
[preflight] Running pre-flight checks
[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [k8s-2 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.149.133]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [k8s-2 localhost] and IPs [192.168.149.133 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [k8s-2 localhost] and IPs [192.168.149.133 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
W1013 10:42:48.939526 19539 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-scheduler"
W1013 10:42:48.941281 19539 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 17.010651 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node k8s-2 as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node k8s-2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: 9od4xd.15l09jrrxa7qo3ny
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.149.133:6443 --token 9od4xd.15l09jrrxa7qo3ny --discovery-token-ca-cert-hash sha256:fb23ab81f7b95b36595dfb44ee7aab865aac7671a416b57f9cb2461f45823ea1

Run the commands highlighted in red:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

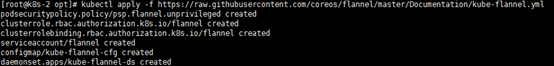
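The `kubeadm join` line at the end of the init output is what the log says to run on each worker node as root, and it is easy to lose. A sketch, assuming you saved the output with something like `kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository=registry.aliyuncs.com/google_containers | tee kubeadm-init.log`; `extract_join_command` is a hypothetical helper that also joins the `\` line continuation kubeadm may print. If the bootstrap token has already expired (24 hours by default), `kubeadm token create --print-join-command` on the master prints a fresh join command instead.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first "kubeadm join ..." command found in
# saved kubeadm init output (file arguments or stdin), merging lines that
# end in a "\" continuation into a single command line.
extract_join_command() {
  awk '
    /kubeadm join/ { grab = 1 }
    grab {
      line = $0
      cont = sub(/\\[ \t]*$/, "", line)          # 1 if a trailing "\" was removed
      gsub(/^[ \t]+|[ \t]+$/, "", line)          # trim surrounding whitespace
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; found = 1; exit }
    }
    END { exit !found }                          # non-zero if nothing was found
  ' "$@"
}
```

Usage: `extract_join_command kubeadm-init.log`, then run the printed command on the worker node.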

8. Deploy a pod network to the cluster (flannel is used here)

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If the command fails because raw.githubusercontent.com cannot be resolved, map the host in /etc/hosts directly:

echo "199.232.28.133 raw.githubusercontent.com" >> /etc/hosts

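PS: additional notes

The actual IP address of raw.githubusercontent.com can be looked up at https://site.ip138.com/raw.githubusercontent.com/. A US address is recommended (the Japanese and Hong Kong addresses did not work in my tests).

Run the kubectl apply command above again; this time it succeeds.

Check the cluster status: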

kubectl cluster-info

9. Verify the installation

# List all nodes

kubectl get nodes

# List the pods in all namespaces

kubectl get pods --all-namespaces
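To script this check rather than reading the table by eye, here is a sketch assuming bash and awk. `not_ready_nodes` is a hypothetical helper that reads `kubectl get nodes` output on stdin, prints the names of nodes whose STATUS column is not exactly `Ready`, and returns non-zero if there are any (a cordoned node shows `Ready,SchedulingDisabled` and is reported too). As a built-in alternative that blocks until the nodes are ready, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` can be used.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output (stdin or files) and
# print every node whose STATUS (column 2) is not exactly "Ready".
# Returns 1 if at least one such node was found, 0 otherwise.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }' "$@"
}
```

Usage: `kubectl get nodes | not_ready_nodes && echo "all nodes Ready"`.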

If something goes wrong during initialization, reset with the following commands:

kubeadm reset

rm -rf /var/lib/cni/

rm -f $HOME/.kube/config

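10. Initialize the worker node of the k8s cluster

This example mainly deploys a single master node; to add worker nodes, see the articles in the references below.

(1) Install Kubernetes on the worker node following steps 5 and 6.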

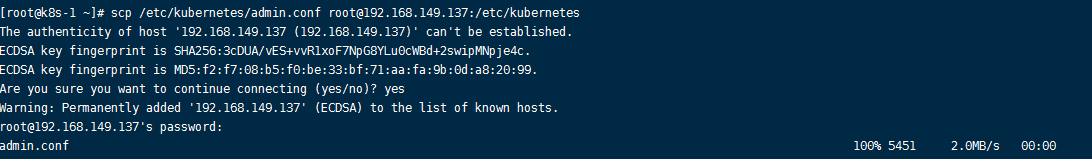

(2) Copy /etc/kubernetes/admin.conf from the master node to the same directory on the worker node.

(3) On the worker node, run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

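(4) Perform step 8.

(5) Verify as described in step 9.

11. Problems encountered during deployment

(1) After installing Docker I did not change its cgroup driver, so the kubelet failed to start when deploying Kubernetes (check the service status with systemctl status kubelet).

The docker info command showed that the default driver was cgroupfs.

So be sure to perform step 4 (Configure Docker) above; otherwise you will have to go back and change Docker's driver.

Add the following to /etc/docker/daemon.json:

{ "exec-opts": ["native.cgroupdriver=systemd"] }

Then restart Docker:

systemctl restart docker

After restarting Docker, run docker info again and confirm that the Cgroup Driver is now systemd.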
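To check the driver without reading the whole `docker info` output, here is a sketch assuming bash and awk. `cgroup_driver` and `require_systemd_driver` are hypothetical helpers that pull the value from the `Cgroup Driver:` line. On Docker versions that support `--format`, `docker info --format '{{.CgroupDriver}}'` prints the same value directly.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of the "Cgroup Driver:" line from
# `docker info` output (stdin or file arguments).
cgroup_driver() {
  awk -F': *' '/Cgroup Driver/ { print $2; exit }' "$@"
}

# Hypothetical check: succeed only when Docker reports the systemd driver.
require_systemd_driver() {
  local driver
  driver=$(docker info 2>/dev/null | cgroup_driver)
  if [ "$driver" != "systemd" ]; then
    echo "Docker cgroup driver is '${driver:-unknown}', expected systemd" >&2
    return 1
  fi
}
```

Running `require_systemd_driver` before `kubeadm init` would catch the mismatch described above before the kubelet fails to start.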

(2) If kubectl get nodes shows a worker node as NotReady, run tail -f /var/log/messages to find the cause.

References:

https://www.cnblogs.com/bluersw/p/11713468.html

https://www.jianshu.com/p/832bcd89bc07

III. Uninstalling and cleaning up Kubernetes

kubeadm reset -f

modprobe -r ipip

lsmod

rm -rf ~/.kube/

rm -rf /etc/kubernetes/

rm -rf /etc/systemd/system/kubelet.service.d

rm -rf /etc/systemd/system/kubelet.service

rm -rf /usr/bin/kube*

rm -rf /etc/cni

rm -rf /opt/cni

rm -rf /var/lib/etcd
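Because these commands delete configuration irreversibly, it can help to review them before running them. A sketch assuming bash; `run` and `k8s_cleanup` are hypothetical names, and the wrapper only prints each command unless `DRY_RUN=0` is set:

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: print the command instead of running it unless
# DRY_RUN=0 is set in the environment (dry run is the default).
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  else
    printf '[dry-run] %s\n' "$*"
  fi
}

# The destructive cleanup steps from this section, routed through run().
k8s_cleanup() {
  run kubeadm reset -f
  run modprobe -r ipip
  run rm -rf "$HOME/.kube/" /etc/kubernetes/
  run rm -rf /etc/systemd/system/kubelet.service.d /etc/systemd/system/kubelet.service
  run rm -rf /usr/bin/kube*
  run rm -rf /etc/cni /opt/cni /var/lib/etcd
}
```

Run `k8s_cleanup` first to see what would be removed, then `DRY_RUN=0 k8s_cleanup` as root to actually do it.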

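IV. Installing Kubernetes on CentOS 8

The installation steps are essentially the same as above. The differences are:

1. Manually edit the docker-ce repo file

vi /etc/yum.repos.d/docker-ce.repo

Change the OS version in it from CentOS 7 to 8.

After the change, the file reads: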

[docker-ce-stable]
name=Docker CE Stable - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/$basearch/stable
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-debuginfo]
name=Docker CE Stable - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/debug-$basearch/stable
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-source]
name=Docker CE Stable - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/source/stable
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge]
name=Docker CE Edge - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/$basearch/edge
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge-debuginfo]
name=Docker CE Edge - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/debug-$basearch/edge
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-edge-source]
name=Docker CE Edge - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/source/edge
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test]
name=Docker CE Test - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/$basearch/test
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-debuginfo]
name=Docker CE Test - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/debug-$basearch/test
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-source]
name=Docker CE Test - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/source/test
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly]
name=Docker CE Nightly - $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/$basearch/nightly
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-debuginfo]
name=Docker CE Nightly - Debuginfo $basearch
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/debug-$basearch/nightly
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-source]
name=Docker CE Nightly - Sources
baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/8/source/nightly
enabled=0
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg
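Instead of editing every `baseurl` in vi, the version segment can be rewritten with sed. A sketch assuming GNU sed; `set_docker_repo_release` is a hypothetical helper that replaces whatever follows `/linux/centos/` in each URL (for example `7` or `$releasever`) with the release you pass, leaves the `gpgkey` URL alone, and keeps a `.bak` copy:

```bash
#!/usr/bin/env bash
# Hypothetical helper: point every docker-ce baseurl at a given CentOS release.
# ".../linux/centos/<segment>/..." becomes ".../linux/centos/<release>/...";
# ".../linux/centos/gpg" has no further "/" after it and is left unchanged.
set_docker_repo_release() {
  local file="${1:-/etc/yum.repos.d/docker-ce.repo}" release="${2:-8}"
  sed -i.bak -E "s#(/linux/centos/)[^/]+/#\\1${release}/#" "$file"
}
```

Usage: `set_docker_repo_release /etc/yum.repos.d/docker-ce.repo 8`, then compare the result with the file contents listed above.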

2. When installing Docker, after adding the corresponding yum repository, run yum makecache instead of the original yum makecache fast.
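If the same script has to run on both CentOS 7 and CentOS 8, the choice between the two commands can be made from /etc/os-release. A sketch assuming bash; `makecache_cmd` is a hypothetical helper that reads `VERSION_ID` and prints `yum makecache fast` for release 7 and `yum makecache` otherwise:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the yum cache command suited to this OS release.
# Reads VERSION_ID from an os-release file (default /etc/os-release).
makecache_cmd() {
  local os_release="${1:-/etc/os-release}" major
  # Source the file in a subshell so its variables do not leak, and keep
  # only the major version ("8.2" -> "8").
  major=$( . "$os_release" && printf '%s' "${VERSION_ID%%.*}" )
  if [ "$major" = "7" ]; then
    echo "yum makecache fast"
  else
    echo "yum makecache"
  fi
}
```

Usage: `$(makecache_cmd) && yum -y install docker-ce`.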