一、下载地址

http://apache.fayea.com/zookeeper

二、安装

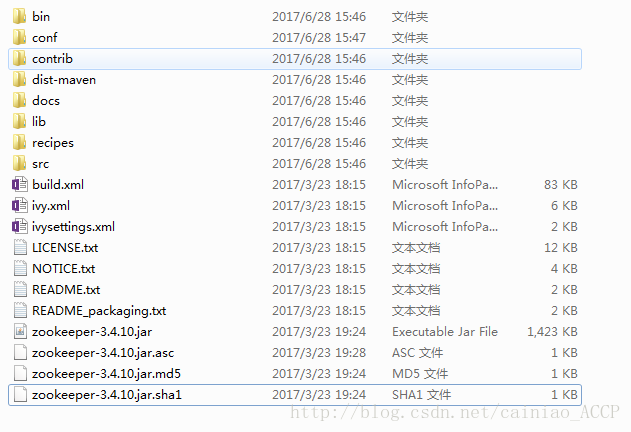

下载解压后如图

三、单机配置

1. 修改 config 下的配置文件

打开 conf 目录下 zoo_sample.cfg 将其名字改为 zoo.cfg,对其进行如下修改,如下

# The number of milliseconds of each tick tickTime=2000 # The number of ticks that the initial # synchronization phase can take initLimit=10 # The number of ticks that can pass between # sending a request and getting an acknowledgement syncLimit=5 # the directory where the snapshot is stored. # do not use /tmp for storage, /tmp here is just # example sakes. # 修改的地方 dataDir=D:/JAVA/zookeeper-3.4.10/data dataLogDir=D:/JAVA/zookeeper-3.4.10/logs # the port at which the clients will connect clientPort=2181 # the maximum number of client connections. # increase this if you need to handle more clients #maxClientCnxns=60 # # Be sure to read the maintenance section of the # administrator guide before turning on autopurge. # # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance # # The number of snapshots to retain in dataDir #autopurge.snapRetainCount=3 # Purge task interval in hours # Set to "0" to disable auto purge feature #autopurge.purgeInterval=1

2. 参数说明

- tickTime:基本事件单元,以毫秒为单位,用来控制心跳和超时,默认情况超时的时间为两倍的tickTime

- dataDir:数据目录.可以是任意目录.

- dataLogDir:log目录, 同样可以是任意目录. 如果没有设置该参数, 将使用和dataDir相同的设置.

- clientPort:监听client连接的端口号.

- maxClientCnxns:限制连接到zookeeper的客户端数量,并且限制并发连接数量,它通过ip区分不同的客户端。

- minSessionTimeout和maxSessionTimeout:最小会话超时时间和最大的会话超时时间,在默认情况下,最小的超时时间为2倍的tickTime时间,最大的会话超时时间为20倍的会话超时时间,系统启动时会显示相应的信息。默认为-1

- initLimit:参数设定了允许所有跟随者与领导者进行连接并同步的时间,如果在设定的时间段内,半数以上的跟随者未能完成同步,领导者便会宣布放弃领导地位,进行另一次的领导选举。如果zk集群环境数量确实很大,同步数据的时间会变长,因此这种情况下可以适当调大该参数。默认为10

- syncLimit:参数设定了允许一个跟随者与一个领导者进行同步的时间,如果在设定的时间段内,跟随者未完成同步,它将会被集群丢弃。所有关联到这个跟随者的客户端将连接到另外一个跟随着。

3. 启动 Zookeeper

在bin目录下,双击zkServer.cmd即可启动Zookeeper。

如果启动闪退,可能是配置文件有问题,可以使用默认配置文件看是否可以启动。

四、伪集群模式

所谓伪集群, 是指在单台机器中启动多个zookeeper进程, 并组成一个集群. 以启动3个zookeeper进程为例.

将zookeeper的目录拷贝2份:

|–zookeeper-3.4.10-0

|–zookeeper-3.4.10-1

|–zookeeper-3.4.10-2

1. 配置数据和日志存放路径

与单机配置类似,只是3个zookeeper不能配置同一个目录下,配置如下

zookeeper0

# The number of milliseconds of each tick tickTime=2000 # The number of ticks that the initial # synchronization phase can take initLimit=10 # The number of ticks that can pass between # sending a request and getting an acknowledgement syncLimit=5 # the directory where the snapshot is stored. # do not use /tmp for storage, /tmp here is just # example sakes. dataDir=D:/JAVA/zookeeper-3.4.10-0/data dataLogDir=D:/JAVA/zookeeper-3.4.10-0/logs # the port at which the clients will connect clientPort=2181 # the maximum number of client connections. # increase this if you need to handle more clients #maxClientCnxns=60 # # Be sure to read the maintenance section of the # administrator guide before turning on autopurge. # # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance # # The number of snapshots to retain in dataDir #autopurge.snapRetainCount=3 # Purge task interval in hours # Set to "0" to disable auto purge feature #autopurge.purgeInterval=1 server.0=127.0.0.1:2888:3888 server.1=127.0.0.1:2889:3889 server.2=127.0.0.1:2890:3890

zookeeper-3.4.10-1 、zookeeper-3.4.10-2与zookeeper-3.4.10-0类似,只有dataDir和dataLogDir配置到不同的目录,clientPort在zookeeper-3.4.10-0中是2181,在zookeeper-3.4.10-1中是 2182,在 zookeeper-3.4.10-2中为 2183

在之前设置的dataDir中新建myid文件, 写入一个数字, 该数字表示这是第几号server. 该数字必须和zoo.cfg文件中的server.X中的X一一对应.

D:/JAVA/zookeeper-3.4.10-0/data/myid文件中写入0,zookeeper-3.4.10-1为1,zookeeper-3.4.10-2为2

server.A=B:C:D:其中

A 是一个数字,表示这个是第几号服务器;

B 是这个服务器的 ip 地址;

C 表示的是这个服务器与集群中的 Leader 服务器交换信息的端口;

D 表示的是万一集群中的 Leader 服务器挂了,需要一个端口来重新进行选举,选出一个新的 Leader,而这个端口就是用来执行选举时服务器相互通信的端口。

如果是伪集群的配置方式,由于 B 都是一样,所以不同的 Zookeeper 实例通信端口号不能一样,所以要给它们分配不同的端口号。

2. 启动

我这里的启动顺序为zookeeper-3.4.10-0>zookeeper-3.4.10-1>zookeeper-3.4.10-2

在启动第一个的时候会报如下错误

ion@600] - Notification: 1 (message format version), 0 (n.leader), 0x0 (n.zxid), 0x1 (n.round), LOOKING (n.state), 0 (n.sid), 0x0 (n.peerEpoch) LOOKING (my stat e) 2017-06-28 16:39:17,306 [myid:0] - WARN [WorkerSender[myid=0]:QuorumCnxManager@ 588] - Cannot open channel to 1 at election address /127.0.0.1:3889 java.net.ConnectException: Connection refused: connect at java.net.DualStackPlainSocketImpl.waitForConnect(Native Method) at java.net.DualStackPlainSocketImpl.socketConnect(DualStackPlainSocketI mpl.java:85) at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.ja va:350) at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocket Impl.java:206) at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java :188) at java.net.PlainSocketImpl.connect(PlainSocketImpl.java:172) at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392) at java.net.Socket.connect(Socket.java:589) at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(Quorum CnxManager.java:562) at org.apache.zookeeper.server.quorum.QuorumCnxManager.toSend(QuorumCnxM anager.java:538) at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$Worke rSender.process(FastLeaderElection.java:452) at org.apache.zookeeper.server.quorum.FastLeaderElection$Messenger$Worke rSender.run(FastLeaderElection.java:433) at java.lang.Thread.run(Thread.java:748) 2017-06-28 16:39:17,314 [myid:0] - INFO [WorkerSender[myid=0]:QuorumPeer$Quorum Server@167] - Resolved hostname: 127.0.0.1 to address: /127.0.0.1 2017-06-28 16:39:18,327 [myid:0] - WARN [QuorumPeer[myid=0]/0:0:0:0:0:0:0:0:218 1:QuorumCnxManager@588] - Cannot open channel to 1 at election address /127.0.0. 1:3889 java.net.ConnectException: Connection refused: connect at java.net.DualStackPlainSocketImpl.waitForConnect(Native Method) at java.net.DualStackPlainSocketImpl.socketConnect(DualStackPlainSocketI mpl.java:85) at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.ja va:350) at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocket Impl.java:206) at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java :188) at java.net.PlainSocketImpl.connect(PlainSocketImpl.java:172) at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392) at java.net.Socket.connect(Socket.java:589) at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectOne(Quorum CnxManager.java:562) at org.apache.zookeeper.server.quorum.QuorumCnxManager.connectAll(Quorum CnxManager.java:614) at org.apache.zookeeper.server.quorum.FastLeaderElection.lookForLeader(F astLeaderElection.java:843) at org.apache.zookeeper.server.quorum.QuorumPeer.run(QuorumPeer.java:913 )

上面的错误是由于ZooKeeper集群启动的时候,每个结点都试图去连接集群中的其它结点,先启动的肯定连不上后面还没启动的,所以上面日志前面部分的异常是可以忽略的。通过后面部分可以看到,集群在选出一个Leader后,最后稳定了。

五、集群模式

集群模式的配置和伪集群基本一致.

由于集群模式下, 各server部署在不同的机器上, 因此各server的conf/zoo.cfg文件可以完全一样.基于Linux配置。

tickTime=2000 initLimit=5 syncLimit=2 dataDir=/home/zookeeper/data dataLogDir=/home/zookeeper/logs clientPort=4180 server.43=10.1.39.43:2888:3888 server.47=10.1.39.47:2888:3888 server.48=10.1.39.48:2888:3888 部署了3台zookeeper server, 分别部署在10.1.39.43, 10.1.39.47, 10.1.39.48上. 需要注意的是, 各server的dataDir目录下的myid文件中的数字必须不同. 10.1.39.43 server的myid为43, 10.1.39.47 server的myid为47, 10.1.39.48 server的myid为48.

10.1.39.43 server的myid为43, 10.1.39.47 server的myid为47, 10.1.39.48 server的myid为48.

部署完成后即可分别启动。

启动后要检查 Zookeeper 是否已经在服务,可以通过 netstat -at|grep 2181 命令查看是否有 clientPort 端口号在监听服务。

原文出处:

https://blog.csdn.net/cainiao_ACCP/article/details/73850904