System environment: CentOS 6.3 + JDK 1.7

Software deployed: hadoop-2.2.0, zookeeper-3.4.5

集群规划:

主机名 IP 安装的软件 运行的进程

reagina01 192.168.8.201 jdk,hadoop NameNode,DFSZKFailoverController

reagina02 192.168.8.202 jdk,hadoop NameNode,DFSZKFailoverController

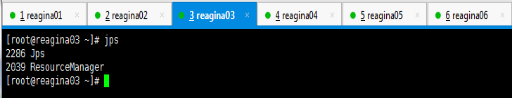

reagina03 192.168.8.203 jdk,hadoop ResourceManager

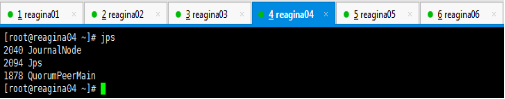

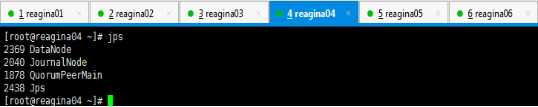

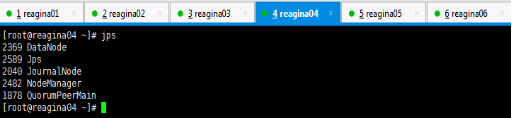

reagina04 192.168.8.204 jdk,hadoop,zookeeper DataNode,NodeManager,JournalNode,QuorumPeerMain

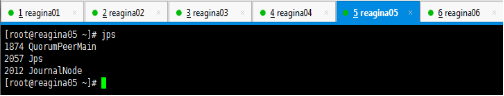

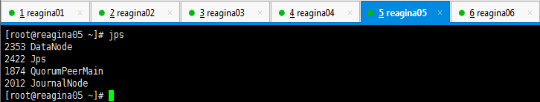

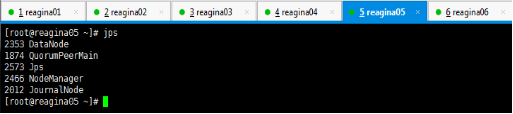

reagina05 192.168.8.205 jdk,hadoop,zookeeper DataNode,NodeManager,JournalNode,QuorumPeerMain

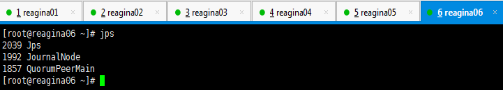

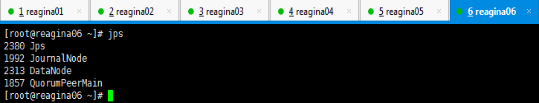

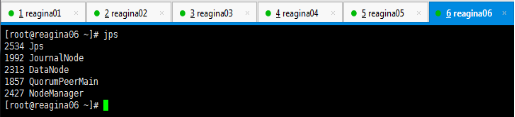

reagina06 192.168.8.206 jdk,hadoop,zookeeper DataNode,NodeManager,JournalNode,QuorumPeerMain
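The SSH and scp steps later in this post address the nodes by hostname, so every node has to resolve reagina01 to reagina06. The post does not show how this is done; the following is a minimal sketch, assuming resolution through /etc/hosts rather than DNS, that turns the IP/hostname pairs from the plan above into hosts-file lines. `HOSTS_OUT` is a hypothetical staging file, not something the original uses.

```shell
#!/bin/sh
# Sketch: generate /etc/hosts lines for the six nodes in the cluster plan.
# ASSUMPTION: names resolve via /etc/hosts rather than DNS. HOSTS_OUT is a
# hypothetical staging file; review it before appending it to /etc/hosts.
set -eu

HOSTS_OUT="${HOSTS_OUT:-$(mktemp)}"

# reagina0N lives at 192.168.8.20N, for N = 1..6 (from the plan table)
for n in 1 2 3 4 5 6; do
  printf '192.168.8.20%s\treagina0%s\n' "$n" "$n"
done > "$HOSTS_OUT"

cat "$HOSTS_OUT"
```

After reviewing the output, append it to /etc/hosts on each of the six nodes (as root), for example with `cat "$HOSTS_OUT" >> /etc/hosts`.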

Extract Hadoop on reagina01:

[root@reagina01 ~]# tar -zxvf hadoop-2.2.0-64bit.tar.gz -C /reagina/

Edit the configuration files (in Hadoop 2.x they live in /reagina/hadoop-2.2.0/etc/hadoop/):

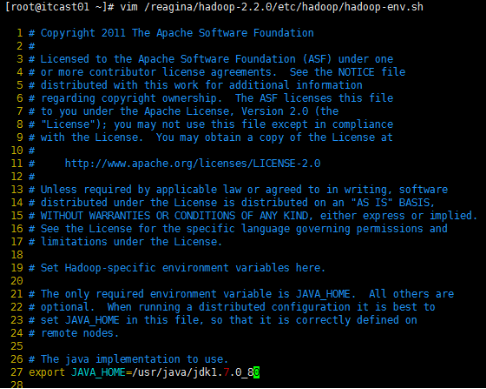

1. hadoop-env.sh

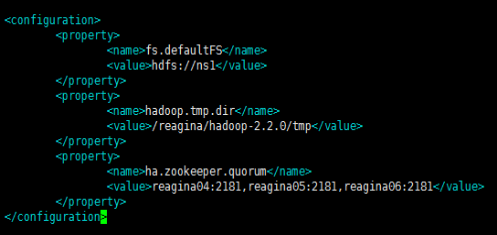
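The screenshot for this step is not reproduced as text. In Hadoop 2.x the usual change is to hard-code `JAVA_HOME`, because the start scripts launch daemons over SSH, where the login profile that normally exports it may not be loaded. A minimal sketch, assuming a hypothetical JDK 1.7 path (`JDK_PATH`) and writing into a scratch directory unless `CONF_DIR` is set:

```shell
#!/bin/sh
# Sketch: hard-code JAVA_HOME in hadoop-env.sh.
# ASSUMPTION: JDK_PATH is a hypothetical JDK 1.7 location; use your real one.
set -eu

CONF_DIR="${CONF_DIR:-$(mktemp -d)}"
JDK_PATH="${JDK_PATH:-/usr/java/jdk1.7.0_45}"
ENV_FILE="$CONF_DIR/hadoop-env.sh"

# Outside a real install, seed the stock line so the edit has something to change.
[ -f "$ENV_FILE" ] || echo 'export JAVA_HOME=${JAVA_HOME}' > "$ENV_FILE"

# Replace the existing JAVA_HOME line with an absolute path,
# or append one if the file has no such line.
sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$JDK_PATH|" "$ENV_FILE"
grep -q '^export JAVA_HOME=' "$ENV_FILE" || echo "export JAVA_HOME=$JDK_PATH" >> "$ENV_FILE"

grep '^export JAVA_HOME=' "$ENV_FILE"
```

To edit the real file, run it with `CONF_DIR=/reagina/hadoop-2.2.0/etc/hadoop` and your actual `JDK_PATH`.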

2. core-site.xml

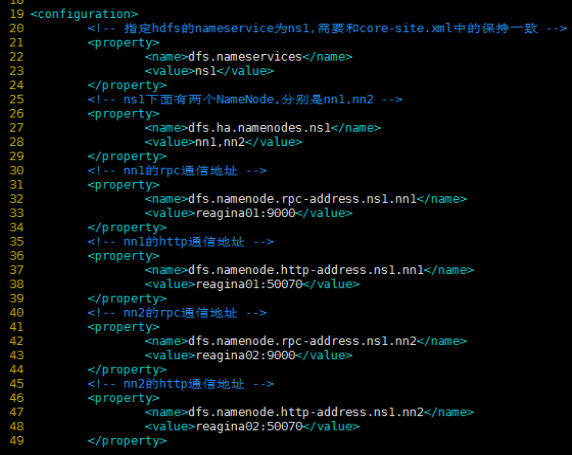

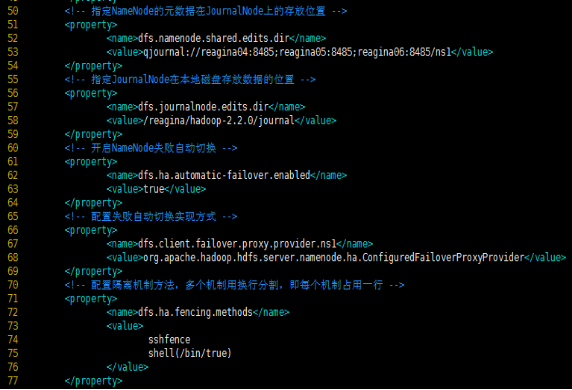

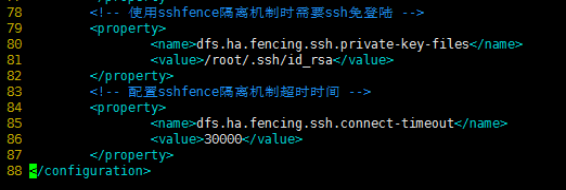
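The core-site.xml values are only in the screenshots. As a sketch of what an HA setup like this one typically contains, the script below writes a core-site.xml whose `hadoop.tmp.dir` matches the /reagina/hadoop-2.2.0/tmp directory that the post later copies to reagina02. The nameservice ID `ns1` and ZooKeeper port 2181 are assumptions, not values read from the images.

```shell
#!/bin/sh
# Sketch of a core-site.xml for this layout.
# ASSUMPTIONS (not taken from the screenshots): nameservice ID "ns1",
# ZooKeeper client port 2181 on reagina04-06.
set -eu
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Clients address the HA pair through the logical nameservice, not a host -->
  <property><name>fs.defaultFS</name><value>hdfs://ns1</value></property>
  <!-- Base directory for NameNode metadata; copied to reagina02 after formatting -->
  <property><name>hadoop.tmp.dir</name><value>/reagina/hadoop-2.2.0/tmp</value></property>
  <!-- ZooKeeper ensemble used by the ZKFCs for automatic failover -->
  <property><name>ha.zookeeper.quorum</name><value>reagina04:2181,reagina05:2181,reagina06:2181</value></property>
</configuration>
EOF
echo "wrote $CONF_DIR/core-site.xml"
```

Set `CONF_DIR=/reagina/hadoop-2.2.0/etc/hadoop` to write the file in place instead of into a scratch directory.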

3. hdfs-site.xml

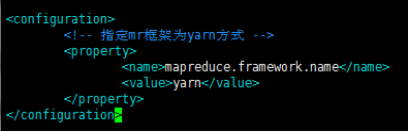
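hdfs-site.xml is where the NameNode pair, the JournalNode quorum, and failover behaviour are defined. The sketch below shows the properties a Quorum Journal Manager HA setup with automatic failover generally needs, mapped onto the cluster plan (NameNodes on reagina01/02, JournalNodes on reagina04 to 06). The IDs `ns1`, `nn1`, `nn2`, RPC port 9000, and the journal and key paths are assumptions; 50070 and 8485 are the Hadoop 2.x defaults for the NameNode web UI and the JournalNode.

```shell
#!/bin/sh
# Sketch of an hdfs-site.xml for QJM-based HA with automatic failover.
# ASSUMPTIONS (not read from the screenshots): nameservice "ns1", NameNode
# IDs nn1/nn2, RPC port 9000, and the journal/key paths below.
set -eu
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Logical name of the NameNode pair, and the IDs of its two members -->
  <property><name>dfs.nameservices</name><value>ns1</value></property>
  <property><name>dfs.ha.namenodes.ns1</name><value>nn1,nn2</value></property>
  <!-- nn1 on reagina01, nn2 on reagina02 -->
  <property><name>dfs.namenode.rpc-address.ns1.nn1</name><value>reagina01:9000</value></property>
  <property><name>dfs.namenode.http-address.ns1.nn1</name><value>reagina01:50070</value></property>
  <property><name>dfs.namenode.rpc-address.ns1.nn2</name><value>reagina02:9000</value></property>
  <property><name>dfs.namenode.http-address.ns1.nn2</name><value>reagina02:50070</value></property>
  <!-- Shared edit log on the JournalNodes (reagina04, reagina05, reagina06) -->
  <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://reagina04:8485;reagina05:8485;reagina06:8485/ns1</value></property>
  <property><name>dfs.journalnode.edits.dir</name><value>/reagina/hadoop-2.2.0/journal</value></property>
  <!-- Let the ZKFCs fail over automatically; clients find the active NN via this proxy -->
  <property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>
  <property><name>dfs.client.failover.proxy.provider.ns1</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
  <!-- Fence the old active NN over SSH, hence the reagina02 to reagina01 key -->
  <property><name>dfs.ha.fencing.methods</name><value>sshfence</value></property>
  <property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/root/.ssh/id_rsa</value></property>
</configuration>
EOF
echo "wrote $CONF_DIR/hdfs-site.xml"
```

The `sshfence` method is one plausible reason the post later asks for passwordless SSH from reagina02 to reagina01: the NameNode taking over logs into the other host to make sure the old active process is dead.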

4. mapred-site.xml

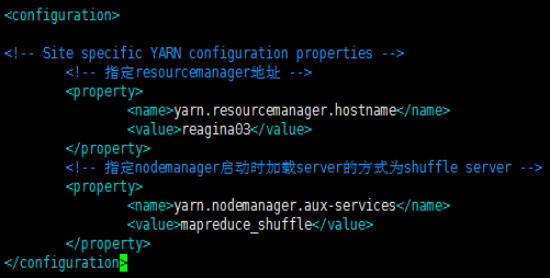
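Hadoop 2.2.0 ships only mapred-site.xml.template, so this file is normally created by copying or renaming the template. The one property a YARN cluster like this needs is the framework name; a minimal sketch, assuming the rest of the template can stay empty:

```shell
#!/bin/sh
# Sketch of a mapred-site.xml that sends MapReduce jobs to YARN.
# ASSUMPTION: no other MapReduce settings are required for this walkthrough.
set -eu
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Run MapReduce jobs on YARN instead of the local job runner -->
  <property><name>mapreduce.framework.name</name><value>yarn</value></property>
</configuration>
EOF
echo "wrote $CONF_DIR/mapred-site.xml"
```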

5. yarn-site.xml

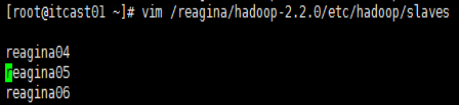
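yarn-site.xml tells every node where the ResourceManager runs (reagina03 in the plan) and enables the shuffle service that MapReduce jobs need on the NodeManagers. A sketch with illustrative values, not copied from the screenshot:

```shell
#!/bin/sh
# Sketch of a yarn-site.xml: ResourceManager on reagina03, per the cluster plan.
# ASSUMPTION: values are illustrative, not copied from the post's screenshot.
set -eu
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- NodeManagers and clients locate the ResourceManager here -->
  <property><name>yarn.resourcemanager.hostname</name><value>reagina03</value></property>
  <!-- Shuffle service for MapReduce (2.2.0 spells it mapreduce_shuffle) -->
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
</configuration>
EOF
echo "wrote $CONF_DIR/yarn-site.xml"
```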

6. slaves (the DataNode/NodeManager hosts from the plan: reagina04, reagina05, reagina06)
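The slaves file is plain text with one worker hostname per line. start-dfs.sh and start-yarn.sh read it to decide where to start DataNodes and NodeManagers, and hadoop-daemons.sh (used for the JournalNodes later in this post) reads it too. A minimal sketch that writes it from the cluster plan:

```shell
#!/bin/sh
# Sketch: write the slaves file, one worker host per line.
set -eu
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

printf '%s\n' reagina04 reagina05 reagina06 > "$CONF_DIR/slaves"
cat "$CONF_DIR/slaves"
```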

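Set up passwordless SSH login:

First, configure passwordless login from reagina01 to reagina01, reagina02, reagina03, reagina04, reagina05 and reagina06 (start-dfs.sh on reagina01 starts the HDFS daemons on these hosts over SSH):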

[root@reagina01 ~]# ssh-keygen -t rsa

[root@reagina01 ~]# ssh-copy-id reagina01

[root@reagina01 ~]# ssh-copy-id reagina02

[root@reagina01 ~]# ssh-copy-id reagina03

[root@reagina01 ~]# ssh-copy-id reagina04

[root@reagina01 ~]# ssh-copy-id reagina05

[root@reagina01 ~]# ssh-copy-id reagina06

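Then configure passwordless login from reagina03 to reagina03, reagina04, reagina05 and reagina06 (start-yarn.sh runs on reagina03 and starts the NodeManagers over SSH):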

[root@reagina03 ~]# ssh-keygen -t rsa

[root@reagina03 ~]# ssh-copy-id reagina03

[root@reagina03 ~]# ssh-copy-id reagina04

[root@reagina03 ~]# ssh-copy-id reagina05

[root@reagina03 ~]# ssh-copy-id reagina06

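Note: the two NameNodes must be able to SSH to each other without a password (this is needed for SSH-based fencing during failover), so also configure passwordless login from reagina02 to reagina01: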

[root@reagina02 ~]# ssh-keygen -t rsa

[root@reagina02 ~]# ssh-copy-id reagina01
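The three rounds of key distribution above follow one pattern: generate a key on the source node, then copy it to each target. A sketch of that pattern as a single script to run on reagina01, reagina03 and reagina02 in turn; `targets_for` and `run` are hypothetical helpers, and `DRY_RUN` defaults to 1 so the script only prints the commands until you set `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: passwordless-SSH setup from the steps above, run on each source node.
# DRY_RUN defaults to 1 (print only); set DRY_RUN=0 to execute.
# targets_for() and run() are hypothetical helpers, not part of Hadoop.
set -eu

# Which hosts each source node must reach without a password.
targets_for() {
  case "$1" in
    reagina01) echo "reagina01 reagina02 reagina03 reagina04 reagina05 reagina06" ;;  # start-dfs.sh
    reagina03) echo "reagina03 reagina04 reagina05 reagina06" ;;                      # start-yarn.sh
    reagina02) echo "reagina01" ;;                                                    # NameNode pair
    *)         echo "" ;;
  esac
}

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

THIS_HOST="${THIS_HOST:-$(uname -n)}"
THIS_HOST="${THIS_HOST%%.*}"   # drop any domain suffix
TARGETS="$(targets_for "$THIS_HOST")"

if [ -n "$TARGETS" ]; then
  # -N '' gives an empty passphrase, like pressing Enter at the ssh-keygen prompts
  [ -f "$HOME/.ssh/id_rsa" ] || run ssh-keygen -t rsa -N '' -f "$HOME/.ssh/id_rsa"
  for target in $TARGETS; do
    run ssh-copy-id "$target"
  done
else
  echo "$THIS_HOST: no passwordless-SSH targets in this plan"
fi
```

Running it with `DRY_RUN=1` first shows exactly which ssh-copy-id calls will happen on the current host; each call still asks once for the target's root password.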

Copy the configured Hadoop installation to the other nodes:

[root@reagina01 ~]# scp -r /reagina/ reagina02:/

[root@reagina01 ~]# scp -r /reagina/ reagina03:/

[root@reagina01 ~]# scp -r /reagina/hadoop-2.2.0/ root@reagina04:/reagina/

[root@reagina01 ~]# scp -r /reagina/hadoop-2.2.0/ root@reagina05:/reagina/

[root@reagina01 ~]# scp -r /reagina/hadoop-2.2.0/ root@reagina06:/reagina/
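The five scp commands above can be expressed as two loops run from reagina01. This sketch keeps the post's split: reagina02 and reagina03 receive the whole /reagina tree, while the ZooKeeper nodes receive only the Hadoop directory, copied into /reagina, which must already exist there. `push_hadoop` and `run` are hypothetical helpers; by default the script only prints the commands.

```shell
#!/bin/sh
# Sketch: the scp steps above as loops, run from reagina01.
# DRY_RUN defaults to 1 (print only); DRY_RUN=0 really copies.
# push_hadoop() and run() are hypothetical helpers.
set -eu

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

push_hadoop() {
  # reagina02/03: the whole /reagina tree, as in the post
  for h in reagina02 reagina03; do
    run scp -r /reagina/ "$h:/"
  done
  # reagina04-06: only the Hadoop directory, into their existing /reagina
  for h in reagina04 reagina05 reagina06; do
    run scp -r /reagina/hadoop-2.2.0/ "root@$h:/reagina/"
  done
}

push_hadoop
```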

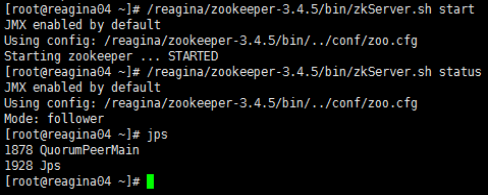

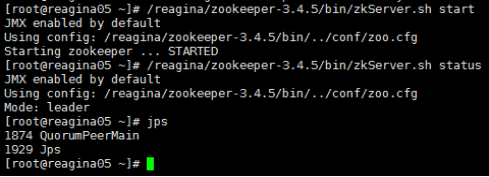

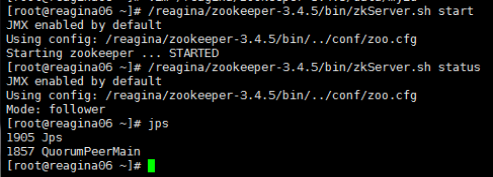

Set up the ZooKeeper cluster on reagina04, reagina05 and reagina06 as described in:

http://www.cnblogs.com/reagina/p/6366363.html

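Start the ZooKeeper cluster: run zkServer.sh start on reagina04, reagina05 and reagina06 (see the post linked above).

Start all JournalNodes. The command below was run on reagina03; because hadoop-daemons.sh starts a JournalNode on every host listed in the slaves file, running it on reagina01 works as well: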

[root@reagina03 ~]# /reagina/hadoop-2.2.0/sbin/hadoop-daemons.sh start journalnode

Format HDFS (on reagina01):

[root@reagina01 ~]# /reagina/hadoop-2.2.0/bin/hdfs namenode -format

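Formatting creates the directory configured by hadoop.tmp.dir in core-site.xml. Copy that directory to reagina02 so that the second NameNode starts from the same metadata: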

[root@reagina01 ~]# scp -r /reagina/hadoop-2.2.0/tmp/ reagina02:/reagina/hadoop-2.2.0/
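The copy above hard-codes the tmp path. If core-site.xml is changed later, the path can instead be read from the file itself. The sketch below uses a small awk lookup, `get_prop` (a hypothetical helper, not a Hadoop tool), which assumes each `<name>` is followed by its `<value>` on the same or a later line; it is not a real XML parser. Without `CORE_SITE` set it runs against a throwaway sample file and prints the scp command rather than running it.

```shell
#!/bin/sh
# Sketch: look up hadoop.tmp.dir in core-site.xml before copying it to reagina02.
# ASSUMPTION: simple formatting (<name> then <value>, no comments in between).
set -eu

# get_prop FILE NAME: print the <value> of property NAME in a Hadoop *-site.xml
get_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, ""); sub(/<\/value>.*/, "")
      print; exit
    }
  ' "$1"
}

# Demo on a sample file; on reagina01 set CORE_SITE to the real core-site.xml.
CORE_SITE="${CORE_SITE:-}"
if [ -z "$CORE_SITE" ]; then
  CORE_SITE="$(mktemp)"
  cat > "$CORE_SITE" <<'EOF'
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/reagina/hadoop-2.2.0/tmp</value>
  </property>
</configuration>
EOF
fi

TMP_DIR="$(get_prop "$CORE_SITE" hadoop.tmp.dir)"
echo "scp -r $TMP_DIR/ reagina02:$(dirname "$TMP_DIR")/"
```

With the sample file this prints `scp -r /reagina/hadoop-2.2.0/tmp/ reagina02:/reagina/hadoop-2.2.0/`, the same command the post runs.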

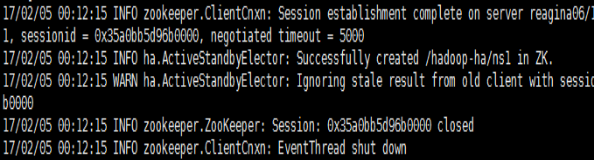

Initialize the HA state in ZooKeeper (on reagina01):

[root@reagina01 ~]# /reagina/hadoop-2.2.0/bin/hdfs zkfc -formatZK

Start HDFS (on reagina01):

[root@reagina01 ~]# /reagina/hadoop-2.2.0/sbin/start-dfs.sh

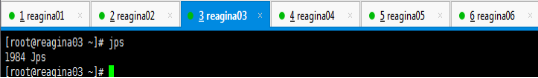

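Start YARN. Note: start-yarn.sh is run on reagina03, not reagina01. The NameNode and the ResourceManager are placed on separate machines for performance reasons, since both consume a lot of resources; because they are separated, each has to be started on its own machine: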

[root@reagina03 ~]# /reagina/hadoop-2.2.0/sbin/start-yarn.sh

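On the NameNode hosts (reagina01 and reagina02), start the ZKFC daemons, which run the ZooKeeper-based election of the active NameNode. When automatic failover is enabled, start-dfs.sh normally launches them already, so this step matters only if they are not running: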

[root@reagina01 ~]# /reagina/hadoop-2.2.0/sbin/hadoop-daemon.sh start zkfc

starting zkfc, logging to /reagina/hadoop-2.2.0/logs/hadoop-root-zkfc-reagina01.out

[root@reagina02 ~]# /reagina/hadoop-2.2.0/sbin/hadoop-daemon.sh start zkfc

starting zkfc, logging to /reagina/hadoop-2.2.0/logs/hadoop-root-zkfc-reagina02.out

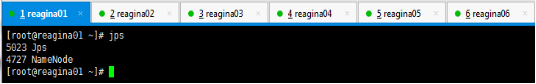

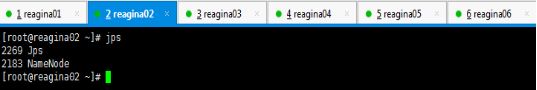

[root@reagina02 ~]# jps

2631 DFSZKFailoverController

2691 Jps

2183 NameNode

[root@reagina02 ~]#
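The jps listings in this post are checked by eye. A small helper can compare a node's jps output with the processes the cluster plan lists for that node. `check_daemons` is a hypothetical name; the demo feeds it the reagina02 output shown above instead of calling jps.

```shell
#!/bin/sh
# Sketch: check that a node runs the daemons the cluster plan expects.
# check_daemons is a hypothetical helper; it reads `jps` output on stdin.
set -eu

# check_daemons NAME... < jps-output : print one line per NAME, return 1 if any is missing
check_daemons() {
  procs="$(awk '{print $2}')"   # jps prints "PID Name"; keep the names
  missing=0
  for name in "$@"; do
    if printf '%s\n' "$procs" | grep -qxF "$name"; then
      echo "ok      $name"
    else
      echo "MISSING $name"
      missing=1
    fi
  done
  return "$missing"
}

# Demo with the reagina02 output above; on a live node use:
#   jps | check_daemons NameNode DFSZKFailoverController
printf '2631 DFSZKFailoverController\n2691 Jps\n2183 NameNode\n' |
  check_daemons NameNode DFSZKFailoverController
```

The demo prints `ok      NameNode` and `ok      DFSZKFailoverController`. On reagina04 to reagina06 the expected list would be `DataNode NodeManager JournalNode QuorumPeerMain`.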

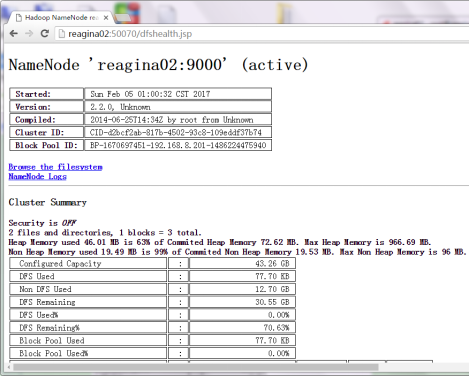

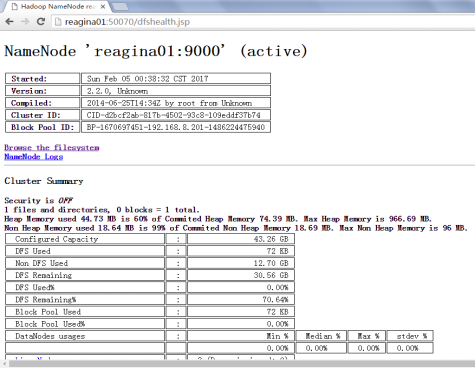

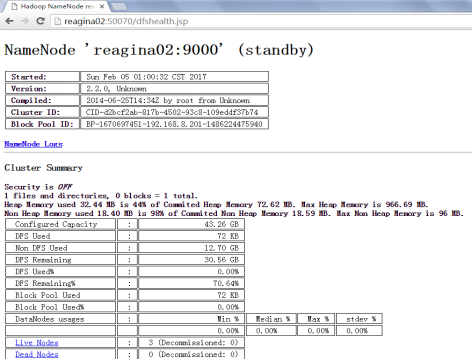

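At this point Hadoop 2.2.0 is configured, and the cluster can be inspected in a browser (the NameNode web UI listens on port 50070 by default, e.g. http://192.168.8.201:50070):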

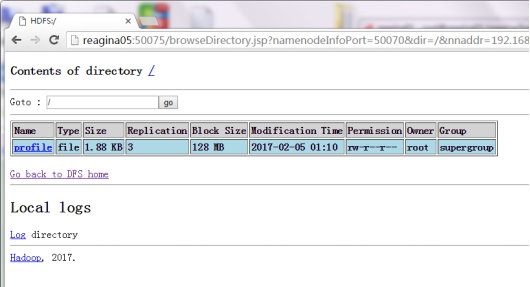

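Verify HDFS HA: upload a file, then kill the NameNode process on reagina01 (PID 4727 in the jps output). The NameNode on reagina02 should become active, and the uploaded file should still be listed by hadoop fs -ls /.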

[root@reagina01 sbin]# hadoop fs -put /etc/profile /profile

[root@reagina01 sbin]# hadoop fs -ls /

-rw-r--r-- 3 root supergroup 1924 2017-02-05 01:10 /profile

[root@reagina01 sbin]# jps

4727 NameNode

5260 DFSZKFailoverController

5491 Jps

[root@reagina01 sbin]# kill -9 4727
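After the `kill -9`, the standby needs a few seconds to take over. `hdfs haadmin -getServiceState` reports `active` or `standby` for a NameNode ID, so a short poll can confirm the failover before the file listing is repeated. The NameNode ID `nn2` (reagina02) is an assumption about the dfs.ha.namenodes setting in the screenshots, and `wait_for_active` is a hypothetical helper; `HAADMIN` can be overridden, for example to test the loop without a cluster.

```shell
#!/bin/sh
# Sketch: poll until a NameNode reports "active" after the old active one is killed.
# ASSUMPTIONS: NameNode IDs nn1 (reagina01) / nn2 (reagina02);
# HAADMIN defaults to "hdfs haadmin" and is deliberately left unquoted.
set -eu

# wait_for_active NN_ID [TIMEOUT_SECONDS]: return 0 once NN_ID is active
wait_for_active() {
  nn="$1"; timeout="${2:-60}"; waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # A dead or unreachable NameNode makes haadmin fail; treat that as "not active"
    state="$(${HAADMIN:-hdfs haadmin} -getServiceState "$nn" 2>/dev/null || true)"
    if [ "$state" = active ]; then
      echo "$nn is active after ${waited}s"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$nn did not become active within ${timeout}s" >&2
  return 1
}
```

On reagina01, once the definitions are loaded, `wait_for_active nn2 && hadoop fs -ls /` should then list /profile again, served by the NameNode on reagina02.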