The code is as follows:

    from urllib.request import urlopen
    from bs4 import BeautifulSoup
    import re
    import datetime
    import random
    import pymysql.cursors

    # Connect to the database
    connection = pymysql.connect(host='127.0.0.1',
                                 port=3306,
                                 user='root',
                                 password='your_database_password',
                                 db='scraping',
                                 charset='utf8mb4',
                                 cursorclass=pymysql.cursors.DictCursor)
    cur = connection.cursor()
    # random.seed() rejects datetime objects on recent Python versions, so pass a float
    random.seed(datetime.datetime.now().timestamp())

    def store(title, content):
        # Leave the %s placeholders unquoted; the driver escapes and quotes the values
        cur.execute("INSERT INTO pages (title, content) VALUES (%s, %s)", (title, content))
        cur.connection.commit()

    def getLinks(articleUrl):
        html = urlopen("http://en.wikipedia.org" + articleUrl)
        bsObj = BeautifulSoup(html, "html.parser")
        # Store the page title and the first paragraph of the article body
        title = bsObj.find("h1").get_text()
        print(title)
        content = bsObj.find("div", {"id": "mw-content-text"}).find("p").get_text()
        print(content)
        store(title, content)
        # Return only internal article links (/wiki/... with no colon)
        return bsObj.find("div", {"id": "bodyContent"}).findAll(
            "a", href=re.compile("^(/wiki/)((?!:).)*$"))

    links = getLinks("/wiki/Kevin_Bacon")
    try:
        # Follow a random article link until a page has none left
        while len(links) > 0:
            newArticle = links[random.randint(0, len(links) - 1)].attrs["href"]
            # print(newArticle)
            links = getLinks(newArticle)
    finally:
        cur.close()
        connection.close()

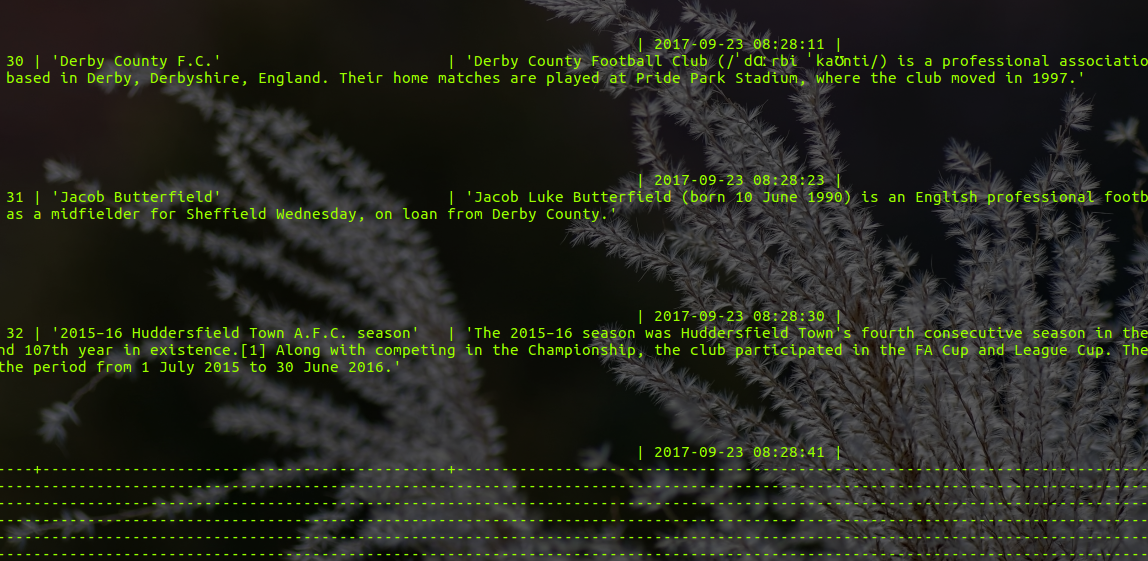

Screenshot of the results
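The link filter in `getLinks` is easy to check on its own. Below is a minimal sketch that applies the same regular expression to a handful of sample `href` values; the sample values and the helper name `is_article_link` are made up for illustration and do not come from the original code.

```python
import re

# Same pattern as in getLinks: paths that start with /wiki/ and contain no colon.
ARTICLE_LINK = re.compile(r"^(/wiki/)((?!:).)*$")

# Hypothetical sample hrefs, chosen only for illustration.
sample_hrefs = [
    "/wiki/Kevin_Bacon",
    "/wiki/Category:American_actors",
    "/wiki/File:Kevin_Bacon.jpg",
    "//en.wikipedia.org/wiki/Main_Page",
    "#cite_note-1",
]

def is_article_link(href):
    """Return True if href looks like an internal article link."""
    return ARTICLE_LINK.match(href) is not None

article_links = [h for h in sample_hrefs if is_article_link(h)]
print(article_links)
```

The negative lookahead `(?!:)` is what rejects namespaced pages such as `Category:` or `File:`, which are usually not articles.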

Note:

Wikipedia pages contain all kinds of characters, so it is best to run the following four statements to make the database support Unicode:

    ALTER DATABASE scraping CHARACTER SET = utf8mb4 COLLATE = utf8mb4_unicode_ci;
    ALTER TABLE pages CONVERT TO CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
    ALTER TABLE pages CHANGE title title VARCHAR(200) CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
    ALTER TABLE pages CHANGE content content VARCHAR(10000) CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;
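To see why `utf8mb4` matters, it may help to look at how many bytes different characters take in UTF-8. The sketch below is only an illustration: the sample characters and the helper name `utf8_len` are my own choices. MySQL's older `utf8` character set stores at most three bytes per character, so any character that needs four bytes cannot be stored in it.

```python
def utf8_len(ch):
    """Number of bytes the character occupies when encoded as UTF-8."""
    return len(ch.encode("utf-8"))

# Hypothetical sample characters: ASCII, accented Latin, CJK, emoji.
samples = ["A", "\u00e9", "\u4e2d", "\U0001F600"]

byte_lengths = [utf8_len(ch) for ch in samples]

for ch, n in zip(samples, byte_lengths):
    # Characters that need 4 bytes require utf8mb4 in MySQL.
    print(repr(ch), n, "needs utf8mb4" if n > 3 else "fits in 3 bytes")
```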