```python
import numpy as np
from matplotlib import pyplot as plt

########################## Model parameters ##########################

"""
Training epochs: 500
Input-layer nodes: 3
Hidden-layer nodes: 4
Output-layer nodes: 1
Learning rate: 0.005
"""

epochs = 500
hidden_size, output_size = 4, 1
lr = 0.005

############################ Data preparation ##########################

"""
Input data: X
Targets: target
"""

X = np.array([[ 1,  1, -1, 1],
              [ 1, -1, -1, 1],
              [-1,  1, -1, 1],
              [-1, -1, -1, 1]])

target = np.array([1, 0, 0, 1]).reshape(4, 1)
```
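Reading the data row by row, the target is 1 exactly when the first two columns of `X` agree, i.e. an XNOR (equivalence) of the first two inputs, while the third and fourth columns hold the same value in every row. A quick check of that reading, a small sketch that only re-uses the arrays above:

```python
import numpy as np

# Same data as in the listing
X = np.array([[ 1,  1, -1, 1],
              [ 1, -1, -1, 1],
              [-1,  1, -1, 1],
              [-1, -1, -1, 1]])
target = np.array([1, 0, 0, 1]).reshape(4, 1)

# Target is 1 when the first two inputs are equal (XNOR), 0 otherwise
xnor = (X[:, 0] == X[:, 1]).astype(int).reshape(4, 1)
print(np.array_equal(xnor, target))  # True

# Columns 3 and 4 take a single value across all rows
print(np.unique(X[:, 2]), np.unique(X[:, 3]))  # [-1] [1]
```

Since XNOR is not linearly separable, a hidden layer is needed to fit it.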

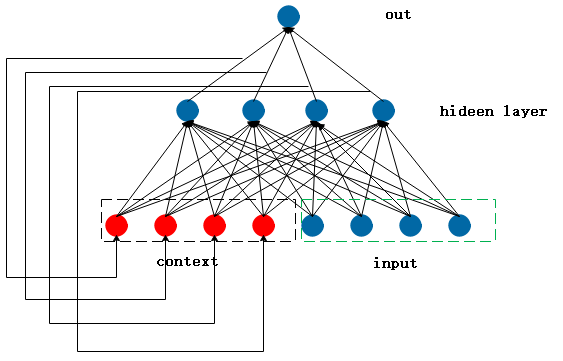

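```python
# Initialise the context layer to zeros: one row per sample, one column per hidden unit
context = np.zeros((X.shape[0], hidden_size))

# The network input is the context layer concatenated with the external input X
input = np.hstack((context, X))

# Initialise the weights
# w_input maps (hidden_size + n_features) inputs to hidden_size hidden units
w_input = np.random.rand(hidden_size, hidden_size + X.shape[1])
# w_out maps hidden_size hidden units to output_size outputs
w_out = np.random.rand(hidden_size, output_size)
```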
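The context layer is what makes this an Elman-style recurrent network: after each step the hidden activations are copied into the context and fed back alongside the external input. The sketch below uses a hypothetical helper `elman_step` (not part of the original program) and deliberately unequal sizes (5 samples, 4 input columns, 3 hidden units) so that each dimension in the weight and activation shapes can be told apart.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def elman_step(x, context, w_in, w_out):
    """One step of a minimal Elman network (hypothetical helper, for illustration).

    x:       (n_samples, n_features)   external input
    context: (n_samples, hidden_size)  previous hidden activations
    w_in:    (hidden_size, hidden_size + n_features)
    w_out:   (hidden_size, output_size)
    """
    z = np.hstack((context, x))      # context columns first, then the input
    hidden = sigmoid(z @ w_in.T)     # (n_samples, hidden_size)
    out = hidden @ w_out             # linear output, (n_samples, output_size)
    return hidden, out               # hidden becomes the next context

rng = np.random.default_rng(0)
n_samples, n_features, hidden_size, output_size = 5, 4, 3, 1  # deliberately unequal
x = rng.standard_normal((n_samples, n_features))
context = np.zeros((n_samples, hidden_size))                 # context starts at zero
w_in = rng.random((hidden_size, hidden_size + n_features))
w_out = rng.random((hidden_size, output_size))

# Feed the same batch repeatedly, carrying the context from step to step
for step in range(3):
    context, out = elman_step(x, context, w_in, w_out)

print(context.shape, out.shape)  # (5, 3) (5, 1)
```

With square shapes (4 samples and 4 hidden units, as in the listing), a weight sized by the number of samples instead of the number of hidden units would go unnoticed; unequal sizes expose such mistakes immediately.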

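```python
############################ Model ##########################

# Activation function
def sigmoid(x):
    return 1 / (1 + np.exp(-x))

# Forward pass
def forward(x, w1, w2):
    xw = np.dot(x, w1.T)        # (samples, hidden)
    context = sigmoid(xw)       # hidden activations, also the next context
    out = np.dot(context, w2)   # linear output, (samples, 1)
    return context, out

# Backward pass: dout is the gradient of the loss with respect to out
def backward(dout, contxt, w1, w2, x):
    d_contxt = np.dot(dout, w2.T)               # gradient w.r.t. hidden activations
    dw2 = np.dot(contxt.T, dout)                # (hidden, 1)
    dxw = d_contxt * (1.0 - contxt) * contxt    # back through the sigmoid
    dw1 = np.dot(dxw.T, x)                      # (hidden, hidden + features)
    return dw1, dw2
```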
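One way to confirm that `backward` returns the right gradients is a finite-difference check. The sketch below re-defines `forward` and `backward` so that it runs on its own, assumes a mean-squared-error loss (matching the training loop), and uses non-square shapes so that a missing transpose would raise a shape error instead of silently passing. `mse` and `numeric_grad` are hypothetical helpers introduced only for this check.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def forward(x, w1, w2):
    context = sigmoid(x @ w1.T)
    return context, context @ w2

def backward(dout, contxt, w1, w2, x):
    d_contxt = dout @ w2.T
    dw2 = contxt.T @ dout
    dxw = d_contxt * (1.0 - contxt) * contxt
    dw1 = dxw.T @ x
    return dw1, dw2

def mse(x, w1, w2, target):
    _, out = forward(x, w1, w2)
    return np.mean(np.square(target - out))

rng = np.random.default_rng(1)
n, d, h = 5, 7, 3                      # samples, inputs, hidden units (all different)
x = rng.standard_normal((n, d))
target = rng.standard_normal((n, 1))
w1 = rng.standard_normal((h, d))
w2 = rng.standard_normal((h, 1))

# Analytic gradients from backward()
context, out = forward(x, w1, w2)
dout = 2 * (out - target) / n          # dL/d(out) for the mean squared error
dw1, dw2 = backward(dout, context, w1, w2, x)

def numeric_grad(w, eps=1e-6):
    """Central-difference estimate of dL/dw; perturbs w (w1 or w2) in place."""
    g = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        old = w[idx]
        w[idx] = old + eps
        plus = mse(x, w1, w2, target)
        w[idx] = old - eps
        minus = mse(x, w1, w2, target)
        w[idx] = old                   # restore the original weight
        g[idx] = (plus - minus) / (2 * eps)
    return g

print(np.allclose(dw1, numeric_grad(w1), atol=1e-6))  # True
print(np.allclose(dw2, numeric_grad(w2), atol=1e-6))  # True
```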

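```python
for i in range(epochs):
    # Predict
    context, out = forward(input, w_input, w_out)

    # Mean squared error, and its gradient with respect to the output
    loss = np.mean(np.square(target - out))
    dout = 2 * (out - target) / X.shape[0]

    # Backpropagation
    dw1, dw2 = backward(dout, context, w_input, w_out, input)

    # Gradient-descent update
    w_input -= lr * dw1
    w_out -= lr * dw2

    # Copy the hidden activations into the context layer for the next epoch
    input = np.hstack((context, X))

    # Report progress
    print('-----------------------Epoch is :[{}/{}]-----------------------'.format(i + 1, epochs))
    print("out: {}\nLoss: {}".format(out.T, loss))
```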
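For reference, the gradients computed by `backward` and the `dout` term in the training loop follow from the chain rule. Writing $X$ for `input` ($N$ samples), $C$ for the hidden activations, $o$ for `out`, $t$ for `target`, $W_1$ and $W_2$ for `w_input` and `w_out`, taking the loss to be the mean squared error, and using $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$:

$$
\begin{aligned}
L &= \frac{1}{N}\sum_{n=1}^{N}(t_n - o_n)^2, &
\frac{\partial L}{\partial o} &= \frac{2}{N}(o - t),\\
C &= \sigma\!\left(X W_1^\top\right),\quad o = C W_2, &
\frac{\partial L}{\partial W_2} &= C^\top \frac{\partial L}{\partial o},\\
\frac{\partial L}{\partial C} &= \frac{\partial L}{\partial o}\, W_2^\top, &
\frac{\partial L}{\partial W_1} &= \left(\frac{\partial L}{\partial C}\odot C\odot(1 - C)\right)^{\!\top} X.
\end{aligned}
$$

The gradient is taken through the current step only; the context fed back from the previous epoch is treated as a fixed input, which is the usual simplification in Elman networks.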

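This program is only a simple framework; I hope it gives readers a useful way of thinking about how to structure such a network in code.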