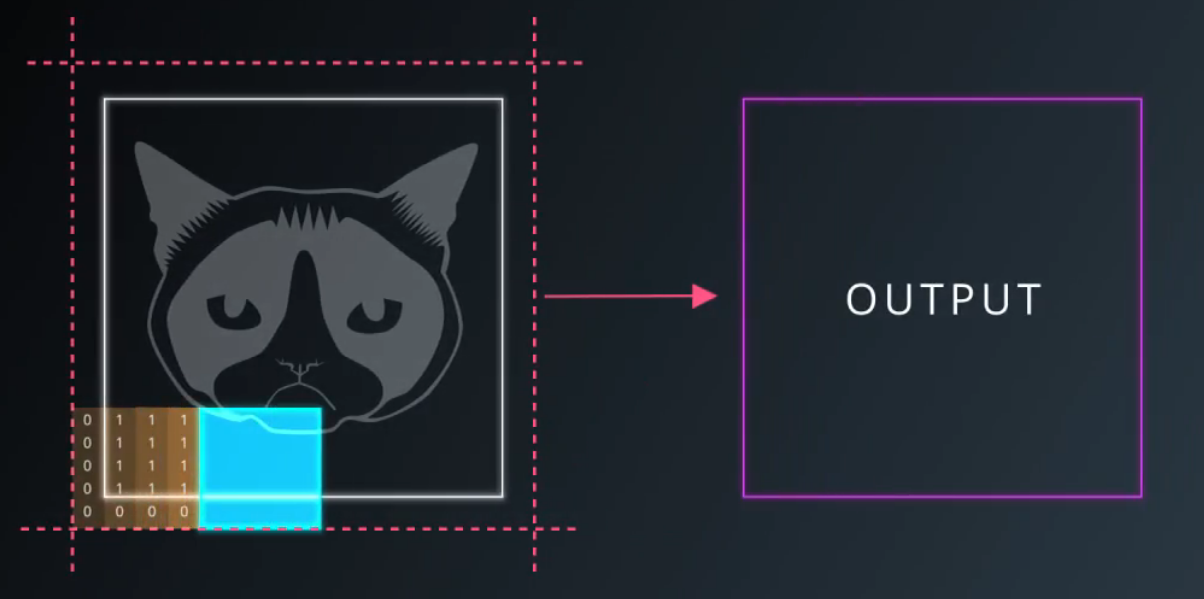

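The convolution operation in a convolutional neural network can handle the borders of the input image in two ways:

- Valid padding

- Same padding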

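Valid padding: the filter's sampling window never extends past the image border. With strides=1, the output feature map's height is input_height - filter_height + 1 (the width is computed the same way).

Same padding: the filter's sampling window may extend past the border, and the part outside the image is filled with zeros. With strides=1, the output feature map has the same size as the input, i.e. output_height = input_height.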
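Both stride-1 formulas come from one counting rule: a window of width k moving with stride s over an input of length n, after pad_total zeros have been added, fits in (n + pad_total - k) // s + 1 positions. The minimal sketch below assumes that rule; `output_size` is a hypothetical helper, not a TensorFlow function. Valid padding adds no zeros, while same padding at stride 1 adds k - 1 zeros in total.

```python
def output_size(n, k, s=1, pad_total=0):
    """Hypothetical helper: number of positions a k-wide window with stride s
    can take on an input of length n after pad_total zeros are added."""
    return (n + pad_total - k) // s + 1

n, k = 11, 4
# Valid padding: no zeros are added, so the window must stay inside the input.
valid = output_size(n, k, pad_total=0)      # 11 - 4 + 1 = 8
# Same padding at stride 1: k - 1 zeros in total keep the size unchanged.
same = output_size(n, k, pad_total=k - 1)   # 11
print(valid, same)
```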

[Figures: valid padding; same padding]

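How does TensorFlow compute the output feature-map size for each of these two modes?

Denote the input shape as [input_height, input_width, input_depth].

With same padding:

When strides is set to 2, the output feature map has shape [input_height/2, input_width/2, output_depth].

Note: the division rounds up, so when input_height = 11, input_height/2 = 6.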

```python
>>> import tensorflow as tf
>>> aa = tf.truncated_normal([100,11,11,3])
>>> aa.shape
TensorShape([Dimension(100), Dimension(11), Dimension(11), Dimension(3)])
>>> bb = tf.layers.conv2d(aa, 3, 4, 2,padding='same')
>>> bb.shape
TensorShape([Dimension(100), Dimension(6), Dimension(6), Dimension(3)])
```
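Rounding up can be done with integer arithmetic alone, which avoids floating-point surprises. A minimal sketch, assuming the same-padding output length is ceil(n / s) as the example shows; `same_output_size` is a hypothetical helper. Per the TensorFlow convolution documentation, the kernel size does not enter this formula at all.

```python
import math

def same_output_size(n, s):
    # 'same' padding: output length is ceil(n / s), independent of kernel size.
    # -(-n // s) is integer ceiling division (Python's // rounds toward -infinity).
    return -(-n // s)

for n in (10, 11, 12):
    # Compare the integer version with math.ceil on a float division
    print(n, same_output_size(n, 2), math.ceil(n / 2))
```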

When strides is set to 3, the output feature map has shape [input_height/3, input_width/3, output_depth].

Likewise, when input_height = 11, input_height/3 = 4 (rounded up).

```python
>>> import tensorflow as tf
>>> aa = tf.truncated_normal([100,11,11,3])
>>> aa.shape
TensorShape([Dimension(100), Dimension(11), Dimension(11), Dimension(3)])
>>> bb = tf.layers.conv2d(aa, 3, 4, 3,padding='same')
>>> bb.shape
TensorShape([Dimension(100), Dimension(4), Dimension(4), Dimension(3)])
```

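Strides can also differ per dimension. Each spatial dimension is then divided by its own stride and rounded up, so strides=(2, 3) gives a 6 × 4 output:

```python
>>> bb = tf.layers.conv2d(aa, 3, 4, (2,3),padding='same')
>>> bb.shape
TensorShape([Dimension(100), Dimension(6), Dimension(4), Dimension(3)])
```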
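To keep the output at ceil(n / s), same padding adds just enough zeros for the last window to fit. The sketch below follows the padding rule described in TensorFlow's convolution documentation: pad_total = max((out - 1) * s + k - n, 0), with any odd extra zero placed after (bottom/right). `same_padding` is a hypothetical helper; each dimension is computed independently, which is why strides=(2, 3) gives 6 × 4.

```python
def same_padding(n, k, s):
    """Hypothetical helper: output length and (before, after) zero padding
    for 'same' padding along one dimension."""
    out = -(-n // s)                           # ceil(n / s)
    pad_total = max((out - 1) * s + k - n, 0)  # zeros needed so the last window fits
    pad_before = pad_total // 2                # an odd extra zero goes after
    return out, pad_before, pad_total - pad_before

# 11x11 input, 4x4 kernel, strides=(2, 3): height and width are handled separately.
for name, s in (("height", 2), ("width", 3)):
    print(name, same_padding(11, 4, s))
```

For the height, 3 zeros are added (1 before, 2 after), and (11 + 3 - 4) // 2 + 1 = 6 windows fit, matching the TensorFlow output.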

With valid padding:

When strides is set to 1, the output feature map has shape [input_height - kernel_size + 1, input_width - kernel_size + 1, output_depth].

```python
>>> import tensorflow as tf
>>> aa = tf.truncated_normal([100,11,11,3])  # create an 11*11*3 tensor (the leading 100 is the batch size and can be ignored)
>>> bb = tf.layers.conv2d(aa, 3, 4, 1,padding='valid')  # strides set to 1
>>> bb.shape
TensorShape([Dimension(100), Dimension(8), Dimension(8), Dimension(3)])  # output size is 8*8*3
>>> bb = tf.layers.conv2d(aa, 3, 3, 1,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(9), Dimension(9), Dimension(3)])
```

When strides is set to k, the output feature map has shape [(input_height - kernel_size + 1)/k, (input_width - kernel_size + 1)/k, output_depth].

Note: the division rounds up.

```python
>>> bb = tf.layers.conv2d(aa, 3, 4, 2,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(4), Dimension(4), Dimension(3)])
>>> bb = tf.layers.conv2d(aa, 3, 4, 3,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(3), Dimension(3), Dimension(3)])
>>> bb = tf.layers.conv2d(aa, 3, 4, 4,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(2), Dimension(2), Dimension(3)])
```
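The round-up rule for valid padding is the same as counting where a window can start: positions 0, s, 2s, ... whose window [start, start + k) still fits inside the input. The minimal sketch below compares the formula with a brute-force count and with the equivalent floor form (n - k) // s + 1; both helper names are hypothetical.

```python
def valid_output_size(n, k, s):
    # 'valid' padding: ceil((n - k + 1) / s), using integer ceiling division
    return -(-(n - k + 1) // s)

def count_windows(n, k, s):
    # Brute force: start positions 0, s, 2s, ... with start + k <= n
    return len(range(0, n - k + 1, s))

for s in (1, 2, 3, 4):
    # All three ways of counting agree for the 11-wide input and 4-wide kernel
    assert valid_output_size(11, 4, s) == count_windows(11, 4, s) == (11 - 4) // s + 1
    print(s, valid_output_size(11, 4, s))
```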

The following cases, using a 3 × 3 kernel, further confirm this rule:

```python
>>> bb = tf.layers.conv2d(aa, 3, 3, 2,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(5), Dimension(5), Dimension(3)])
>>> bb = tf.layers.conv2d(aa, 3, 3, 3,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(3), Dimension(3), Dimension(3)])
>>> bb = tf.layers.conv2d(aa, 3, 3, 4,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(3), Dimension(3), Dimension(3)])
>>> bb = tf.layers.conv2d(aa, 3, 3, 5,padding='valid')
>>> bb.shape
TensorShape([Dimension(100), Dimension(2), Dimension(2), Dimension(3)])
```
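The REPL sessions above use TensorFlow 1.x APIs (`tf.truncated_normal`, `tf.layers.conv2d`). The sketch below instead predicts the same shapes in plain Python from the arguments those calls received, and checks every case shown in this post. `conv2d_output_shape` is a hypothetical helper that assumes NHWC layout and only the two rules stated above.

```python
def conv2d_output_shape(input_shape, filters, kernel_size, strides, padding):
    """Hypothetical helper: predicts the NHWC output shape of a 2-D convolution
    from the arguments passed to tf.layers.conv2d in the examples above."""
    batch, height, width, _ = input_shape
    # A scalar kernel size or stride applies to both spatial dimensions
    kh, kw = (kernel_size, kernel_size) if isinstance(kernel_size, int) else kernel_size
    sh, sw = (strides, strides) if isinstance(strides, int) else strides
    if padding == 'same':
        # ceil(input / stride)
        out_h, out_w = -(-height // sh), -(-width // sw)
    elif padding == 'valid':
        # ceil((input - kernel + 1) / stride)
        out_h, out_w = -(-(height - kh + 1) // sh), -(-(width - kw + 1) // sw)
    else:
        raise ValueError("padding must be 'same' or 'valid'")
    return (batch, out_h, out_w, filters)

# (kernel_size, strides, padding, spatial output reported by TensorFlow above)
cases = [
    (4, 2, 'same', (6, 6)), (4, 3, 'same', (4, 4)), (4, (2, 3), 'same', (6, 4)),
    (4, 1, 'valid', (8, 8)), (3, 1, 'valid', (9, 9)),
    (4, 2, 'valid', (4, 4)), (4, 3, 'valid', (3, 3)), (4, 4, 'valid', (2, 2)),
    (3, 2, 'valid', (5, 5)), (3, 3, 'valid', (3, 3)),
    (3, 4, 'valid', (3, 3)), (3, 5, 'valid', (2, 2)),
]
for k, s, pad, expected in cases:
    shape = conv2d_output_shape((100, 11, 11, 3), 3, k, s, pad)
    assert shape == (100, *expected, 3), (k, s, pad, shape)
print("all", len(cases), "cases match")
```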