Abstract

This paper presents a new open-sourced gradients boosting library that successfully handles categorical features and outperforms other gradient boosting algorithms based on CUPs and GPUs. Initally, the reason that this paper proposed is to handle categotical features.

Introduction

Why we need to pay attention to categorical features and transform them?

- categorical feature is a feature having a discrete set of values that are not comparable with each other (e.g., user ID or name of a city). So we need to transform into valueable feature.

This paper lists the problems about how we deal with categorical features and proposes:

- A new schema for calculating leaf values when selecting the tree structure, which helps reduce overfitting.

- Catboost has both GPU and CPU implementations. The GPU implementation allows for faster training and the CPU implement allows for faster scoring.

Categotical Features

-

one-hot encoding

The most widely used technique which is usually applied to low-cardinality categotical features is one-hot encoding. However, there are two issues:

- When it comes to high-cardinality categorical features, the sparse martrix will be so huge that the computation cost soaring.

- For the high important categorical features, the tree will grow the very unbalance tree and need to grow very deeply to achieve the accurancy. It will cause overfitting.

What we need to notice is that one-hot encoding can be done during the preprocessing phase or during the training, the latter can be implemented more efficiently in terms of training time and is implemented in Catboost.

-

Greedy Target-based Statistics (Greedy TBS)

Another way to deal with categorical features is to compute some statistics using the label values of the example. The simplest way is to substitue the category with the average label value on the whole training set.

[hat{x}_k^i=frac{sum_{j=1}^{n}[x_{j,k}=x_{i,k}]cdot Y_i}{sum_{j=1}^{n}[x_{j,k}=x_{i,k}]} ]Problem:

- overfitting

- bias gradients

-

Improved version of Greedy TBS

[hat{x}_k^i=frac{sum_{j=1}^{p-1}[x_{sigma _j,k}=x_{sigma _p,k}]Y_{sigma _j}+acdot P}{sum_{j=1}^{p-1}[x_{sigma _j,k}=x_{sigma _p,k}]+a} ]Adding prior (P) is a common practice and it helps to reduce the noise obtained from low-frequency categories.

Regression Task:

- calculating prior is to take the average label value in the dataset.

Binary Classification:

- prior is usually an priori probability of encountering a postive class.

Problem:

- overfitting

Feature combinations

Note that any combination of several categorical features could be considered as a new one (e.g., user ID and music genre). If we just use one-hot encoding or label encoding, we loose a new powerful information. So, we need to combine some features.

However, the number of combinations grows exponentially with the number of categorical featuees in dataset and it is not possible to consider all of them in the algorithm.

CatBoost method

CatBoost considers combination in a greedy way.

- No combinations are considered for the first split in the tree.

- For the next splits CatBoost combines all combinations and categorical features present in the current tree with all categorical features in dataset.

- Combination values are converted to numbers on the fly.

Notice:

CatBoost also generates combinations of numberical and categorical features in the following way: all the splits selected in the tree are considered as categorical with two values and used in combinations in the same way as categotical ones.

count encoding

Caboost also uses a number that calculates number of appearances of this category in the dataset.

Fighting Gradient Bias

why gradients have gradients bias?

what problem will cause by gradient bias?

how to fix it?

All classical boosting algorithms suffer from overfitting caused by the problem of biased pointwise gradient estimates. Gradients used same data points to estimate each step. This leads to a shift of the distribution of estimated gradients in any domain of feature space in comparison with the true distribution of gradients in this domain, which leads to overfitting.

In many GBDTs (e.g., XGBoost, LightGBM) building trees with two steps:

- Choosing the tree structure and setting values in leafts after the tree structure is fixed.

- To choose the best tree structure, the algorithm enumerates through different splits, builds trees with theses splits, set values in the obtained leafts, scores the trees and selects the best split.

CatBoost modified the first phase and hold the same second phase.

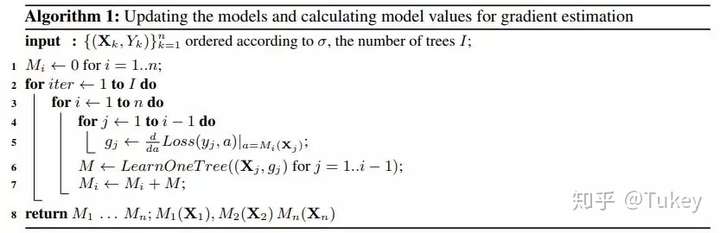

我们运用下面这个技巧来处理这个问题:对于每一个样本 ,我们训练一个单独的模型(M),且该模型从不使用基于该样本的梯度估计进行更新。我们使用(g_i)来估计 上的梯度,并使用这个估计对结果树进行评分。

参考:CatBoost详解

预测偏移和排序提升

预测偏移

对于学习预测偏移的内容,我提出了两个问题:

什么是预测偏移?

用什么办法解决预测偏移问题?

预测偏移(Prediction shift)是由梯度偏差造成的。在GDBT的每一步迭代中, 损失函数使用相同的数据集求得当前模型的梯度, 然后训练得到基学习器, 但这会导致梯度估计偏差, 进而导致模型产生过拟合的问题。CatBoost通过采用排序提升 (Ordered boosting) 的方式替换传统算法中梯度估计方法,进而减轻梯度估计的偏差,提高模型的泛化能力。下面我们对预测偏移进行详细的描述和分析。

首先来看下GBDT的整体迭代过程:

GBDT算法是通过一组分类器的串行迭代,最终得到一个强学习器,以此来进行更高精度的分类。它使用了前向分布算法,弱学习器使用分类回归树(CART)。

假设前一轮迭代得到的强学习器是, 损失函数是 ,则本轮迭代的目的是找到一个CART回归树模型的弱学习器 ,让本轮的损失函数最小。式(1)表示的是本轮迭代的目标函数 。

GBDT使用损失函数的负梯度来拟合每一轮的损失的近似值,式(2)中 表示的是上述梯度。

通常用式(3)近似拟合 。

最终得到本轮的强学习器,如式(4)所示:

在这个过程当中,偏移是这样发生的:

根据进行随机计算的条件分布 与测试集的分布 发生偏移,这样由公式(3)定义的基学习器 与公式(1)定义的产生偏差,最后影响模型 的泛化能力。

排序提升

为了克服预测偏移问题,CatBoost提出了一种新的叫做Ordered boosting的算法。

由上图的Ordered boosting算法可知,为了得到无偏梯度估计, CatBoost对每一个样本都会训练一个单独的模型 ,模型 由使用不包含样本的训练集训练得到。我们使用 来得到关于样本的梯度估计,并使用该梯度来训练基学习器并得到最终的模型。

Ordered boosting算法好是好,但是在大部分的实际任务当中都不具备使用价值,因为需要训练个不同的模型,大大增加的内存消耗和时间复杂度。在CatBoost当中,我们以决策树为基学习器的梯度提升算法的基础上,对该算法进行了改进。

前面提到过,在传统的GBDT框架当中,构建下一棵树分为两个阶段:选择树结构和在树结构固定后计算叶子节点的值。CatBoost主要在第一阶段进行优化。在建树的阶段,CatBoost有两种提升模式,Ordered和Plain。Plain模式是采用内建的ordered TS对类别型特征进行转化后的标准GBDT算法。Ordered则是对Ordered boosting算法的优化。两种提升模式的具体介绍可以翻看论文《CatBoost: unbiased boosting with categorical features》。