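In the previous post I scraped WeChat articles through a proxy pool maintained in Redis. It worked well at first, but later runs kept failing with a 501 error. The same pages opened normally in a browser, and many rounds of debugging did not fix the problem. Since that approach kept failing, I switched to Selenium, which drives a real browser to load the Sogou WeChat search result pages. The page source is still parsed with pyquery to extract the URLs of the WeChat articles, and each URL is then used to fetch the article itself.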
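To make the parsing step concrete, here is a minimal sketch that pulls article links out of a results page. It assumes, without checking Sogou's current markup, that each result's title link sits inside an `<h3>`. The sample HTML, its class names and the `example.com` URLs are made up. The post uses pyquery, where the same selection would be roughly `pq(page_source)('h3 a')`. The sketch uses the standard library's `html.parser` instead so it runs without extra packages.

```python
from html.parser import HTMLParser


class ArticleLinkParser(HTMLParser):
    """Collect href values of <a> tags nested inside <h3> elements.

    Assumption: each search result's title link is inside an <h3>.
    This is illustrative, not a verified copy of Sogou's markup.
    """

    def __init__(self):
        super().__init__()
        self.h3_depth = 0  # > 0 while we are inside an <h3>
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == 'h3':
            self.h3_depth += 1
        elif tag == 'a' and self.h3_depth:
            href = dict(attrs).get('href')
            if href:  # skip anchors without an href
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == 'h3' and self.h3_depth:
            self.h3_depth -= 1


def extract_article_urls(page_source):
    """Return the article links found in a results page's HTML."""
    parser = ArticleLinkParser()
    parser.feed(page_source)
    parser.close()
    return parser.links


sample = """
<ul class="news-list">
  <li><div class="txt-box"><h3><a href="https://example.com/a1">Title 1</a></h3></div></li>
  <li><div class="txt-box"><h3><a href="https://example.com/a2">Title 2</a></h3></div></li>
</ul>
<a id="sogou_next" href="?page=2">next</a>
"""
print(extract_article_urls(sample))  # prints ['https://example.com/a1', 'https://example.com/a2']
```

The `#sogou_next` pagination link is ignored because it is not inside an `<h3>`.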

The code is as follows:

```python
from selenium import webdriver
from selenium.common.exceptions import TimeoutException, WebDriverException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

from weixin.weixin.weixin_article import WeixinArticle

CHROMEDRIVER_PATH = "C:/codeapp/seleniumDriver/chrome/chromedriver.exe"


class SeleniumWeixinArticle(WeixinArticle):
    """Use Selenium to drive a browser that fetches Sogou WeChat search pages.

    Inherits from WeixinArticle; get_proxy, proxy_pool_url, max_count and
    run() come from the parent class.
    """

    proxy = None

    def __init__(self):
        """Start the browser and an explicit wait bound to it."""
        self._start_browser()
        super().__init__()

    def _start_browser(self, proxy=None):
        """(Re)start Chrome, optionally routed through an HTTP proxy.

        A running ChromeDriver session cannot change its proxy, so a new
        browser is launched whenever the IP has to change.
        """
        options = webdriver.ChromeOptions()
        if proxy:
            options.add_argument('--proxy-server=http://' + proxy)  # switch IP
        self.browser = webdriver.Chrome(service=Service(CHROMEDRIVER_PATH), options=options)
        self.wait = WebDriverWait(self.browser, 10)

    def _switch_proxy(self):
        """Fetch a new proxy from the pool and restart the browser with it."""
        self.proxy = self.get_proxy(self.proxy_pool_url)
        if self.proxy:
            self.browser.quit()
            self._start_browser(self.proxy)
        return self.proxy

    def get_html(self, url, count=1):
        """Override WeixinArticle.get_html: load the page in a real browser."""
        if not url:
            return None
        # Stop after max_count attempts to prevent infinite recursion
        if count >= self.max_count:
            print('tried too many times')
            return None
        print('crawling url', url)
        print('crawling count', count)
        try:
            # get() returns None; read the rendered HTML from page_source
            self.browser.get(url)
            # Wait for the "next page" link so the result list has rendered
            self.wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, '#sogou_next')))
            if self.browser.current_url == url:
                return self.browser.page_source
            # Redirected (e.g. to an anti-spider page): change IP and retry
            print('must change ip proxy')
            if self._switch_proxy():
                return self.get_html(url, count + 1)
            print('failed to get a proxy')
            return None
        except (TimeoutException, WebDriverException):
            # Page did not load or #sogou_next never appeared: retry via a new proxy
            self._switch_proxy()
            return self.get_html(url, count + 1)


if __name__ == "__main__":
    weixin_article = SeleniumWeixinArticle()
    weixin_article.run()
```
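The recursive retry in `get_html` is easy to get wrong: every retry must carry `count` forward, or the limit never triggers. As a sketch of the same retry-and-rotate idea, the loop below makes the attempt limit explicit. `fetch`, `get_proxy` and `FetchError` are hypothetical stand-ins. `fetch(url, proxy)` plays the role of the Selenium page load and returns the final URL plus the HTML, and `FetchError` stands in for Selenium's `TimeoutException`/`WebDriverException`.

```python
from typing import Callable, Optional, Tuple


class FetchError(Exception):
    """Hypothetical stand-in for Selenium's TimeoutException / WebDriverException."""


# fetch(url, proxy) -> (final_url, html); raises FetchError when the page fails to load
Fetch = Callable[[str, Optional[str]], Tuple[str, str]]


def get_html_with_retries(url: str, fetch: Fetch,
                          get_proxy: Callable[[], Optional[str]],
                          max_count: int = 5) -> Optional[str]:
    """Return the page HTML, rotating proxies until it loads without a redirect.

    Counting starts at 1 and stops once count reaches max_count,
    so at most max_count - 1 loads are attempted.
    """
    proxy = None  # the first attempt goes out without a proxy
    for count in range(1, max_count):
        print('crawling url', url, 'count', count, 'proxy', proxy)
        try:
            final_url, html = fetch(url, proxy)
            if final_url == url:
                return html  # not redirected: this is the real results page
            print('redirected to', final_url, 'so changing proxy')
        except FetchError as exc:
            print('load failed:', exc)
        proxy = get_proxy()  # redirected or failed: ask the pool for a new IP
        if proxy is None:
            print('failed to get a proxy')
            return None
    print('tried too many times')
    return None
```

A loop keeps the call stack flat and makes the number of attempts visible in one place, instead of relying on every recursive call passing `count + 1`.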

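The program is fairly simple. It mainly overrides the get_html method of WeixinArticle and leaves the rest of the logic unchanged. This is one of the benefits of object-oriented programming: only the way pages are fetched changes, while everything else is inherited from the parent class.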
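The pattern at work is plain method overriding. The parent's pipeline calls `self.get_html(...)`, so a subclass that redefines only that method changes how pages are fetched without touching anything else. Here is a minimal sketch with hypothetical classes (`Crawler` and `BrowserCrawler` are illustrative, not the post's `WeixinArticle`), where string formatting stands in for real network and browser calls:

```python
class Crawler:
    """Hypothetical base class: run() drives the pipeline, get_html() fetches."""

    def get_html(self, url):
        # Stand-in for a requests-based download
        return '<html>fetched by requests: %s</html>' % url

    def parse(self, html):
        # Stand-in for the pyquery parsing step
        return html.replace('<html>', '').replace('</html>', '')

    def run(self, url):
        html = self.get_html(url)  # dispatches to the subclass override, if any
        return self.parse(html) if html else None


class BrowserCrawler(Crawler):
    """Only the fetching step changes; run() and parse() are inherited."""

    def get_html(self, url):
        # Stand-in for a Selenium page load
        return '<html>fetched by a browser: %s</html>' % url


print(Crawler().run('u'))         # prints fetched by requests: u
print(BrowserCrawler().run('u'))  # prints fetched by a browser: u
```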

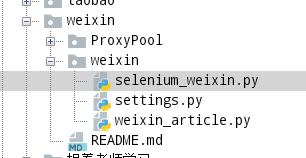

The program's structure is as follows: