- Environment

- Win10

- VMware 15.0.2

- Ubuntu 18.10

- Software versions

- hadoop-2.7.7.tar.gz

- jdk-8u212-linux-x64.tar.gz

- sbt-1.2.8.tgz

- scala-2.12.8.tgz

- spark-2.4.3-bin-hadoop2.7.tgz

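- Download the archives above and extract them. Then add the environment variables: open `~/.bashrc` with `vi ~/.bashrc` and append the following at the end: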

Environment variables (`~/.bashrc`)

```bash
# set hadoop env
export HADOOP_HOME=/home/gemsuser/install/hadoop-2.7.7
export PATH=${HADOOP_HOME}/bin:${PATH}

# set java env
export JAVA_HOME=/home/gemsuser/install/jdk1.8.0_212
export JRE_HOME=${JAVA_HOME}/jre
export CLASSPATH=${JAVA_HOME}/lib:${JRE_HOME}/lib
export PATH=${JAVA_HOME}/bin:${PATH}

# set scala env
export SCALA_HOME=/home/gemsuser/install/scala-2.12.8
export PATH=${SCALA_HOME}/bin:${PATH}

# set sbt env
export SBT_HOME=/home/gemsuser/install/sbt
export PATH=${SBT_HOME}/bin:${PATH}

# set spark env
export SPARK_HOME=/home/gemsuser/install/spark-2.4.3-bin-hadoop2.7
export PATH=${SPARK_HOME}/bin:${PATH}
```
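The new variables only take effect in new shells or after `source ~/.bashrc`. The sketch below shows one way to sanity-check them. `check_homes` is a hypothetical helper name, and the check assumes every `*_HOME` directory contains a `bin/` subdirectory. That holds for the Hadoop, JDK, Scala, sbt and Spark archives listed above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that each named *_HOME variable is set
# and points at a directory that has a bin/ subdirectory.
check_homes() {
  local status=0 name dir
  for name in "$@"; do
    dir="${!name:-}"   # indirect expansion: value of the variable named by $name
    if [ -z "$dir" ]; then
      echo "$name is not set" >&2
      status=1
    elif [ ! -d "$dir/bin" ]; then
      echo "$name=$dir has no bin/ directory" >&2
      status=1
    fi
  done
  return "$status"
}
```

As an example, run `source ~/.bashrc && check_homes HADOOP_HOME JAVA_HOME SCALA_HOME SBT_HOME SPARK_HOME`. Then run `java -version`, `scala -version`, `hadoop version` and `spark-submit --version` to confirm the binaries are found on `PATH`.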

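- Adjust the log level

- `log4j.properties`: copy it from the template file (`log4j.properties.template`), then change `log4j.rootCategory=INFO, console` to `log4j.rootCategory=WARN, console` to lower the log printing level.

- `spark-env.sh`: copy it from the template file (`spark-env.sh.template`), then configure the environment variables Spark needs at runtime.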
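Both template copies and the log-level edit can be scripted. The following is a minimal sketch that assumes the templates sit in `$SPARK_HOME/conf` and that GNU `sed` is available (the Ubuntu default). `prepare_spark_conf` is a hypothetical helper name. The helper does not overwrite files that already exist, so an edited `spark-env.sh` is preserved.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create log4j.properties and spark-env.sh from the
# templates in Spark's conf directory (existing files are not overwritten),
# then lower the root logger from INFO to WARN.
prepare_spark_conf() {
  local conf_dir="${1:-$SPARK_HOME/conf}"
  local f
  for f in log4j.properties spark-env.sh; do
    if [ ! -f "$conf_dir/$f" ]; then
      cp "$conf_dir/$f.template" "$conf_dir/$f"
    fi
  done
  # GNU sed in-place edit of the root logger line only.
  sed -i 's/^log4j\.rootCategory=INFO, console/log4j.rootCategory=WARN, console/' \
    "$conf_dir/log4j.properties"
}
```

Running `prepare_spark_conf` once, after `SPARK_HOME` is set, leaves `spark-env.sh` ready for the settings below.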

- Configure Spark's config files

Spark master config (`spark-env.sh`)

```bash
export JAVA_HOME=/home/gemsuser/install/jdk1.8.0_212
export SCALA_HOME=/home/gemsuser/install/scala-2.12.8
export SPARK_HOME=/home/gemsuser/install/spark-2.4.3-bin-hadoop2.7
# Hadoop's own config directory (core-site.xml, hdfs-site.xml) in Hadoop 2.x
export HADOOP_CONF_DIR=/home/gemsuser/install/hadoop-2.7.7/etc/hadoop
export SPARK_LOG_DIR=/home/gemsuser/install/spark-2.4.3-bin-hadoop2.7/logs
export SPARK_PID_DIR=/home/gemsuser/install/spark-2.4.3-bin-hadoop2.7/pid
# SPARK_MASTER_IP is deprecated since Spark 2.0; SPARK_MASTER_HOST replaces it
export SPARK_MASTER_IP=localhost
export SPARK_MASTER_HOST=localhost
export SPARK_LOCAL_IP=localhost
# total memory each worker may hand out to executors on its machine
export SPARK_WORKER_MEMORY=1G
```

Spark slaves config (`conf/slaves`)

```text
master
slave1
slave2
```
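The slaves file lists one worker hostname per line. The sketch below assumes that the hostnames `master`, `slave1` and `slave2` resolve on every node, for example through `/etc/hosts` entries. It also assumes the file lives in Spark's conf directory. `write_slaves_file` and `list_workers` are hypothetical helper names. If workers run on other machines, note that `SPARK_MASTER_HOST` would need to be a name those machines can reach, not `localhost`.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for Spark's conf/slaves file.

# Write one worker hostname per argument, replacing any previous content.
write_slaves_file() {
  local conf_dir="$1"; shift
  printf '%s\n' "$@" > "$conf_dir/slaves"
}

# Print the hosts that will get a Worker: strip # comments and blank lines,
# roughly as Spark's sbin/slaves.sh does before contacting each host over SSH.
list_workers() {
  sed 's/#.*$//; /^[[:space:]]*$/d' "$1/slaves"
}
```

For example, `write_slaves_file "$SPARK_HOME/conf" master slave1 slave2` writes the file shown above. `list_workers "$SPARK_HOME/conf"` then prints the three hostnames.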

- Configure passwordless SSH login from the master to the slaves

- `ssh-keygen -t rsa`

- `cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys`

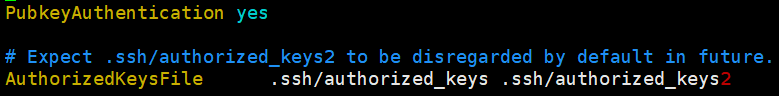
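The two commands above authorize the key on the master itself. Every worker also needs the master's public key. The sketch below assumes OpenSSH's `ssh-copy-id` is installed and that each host in the slaves file accepts password login once. `setup_passwordless_ssh` and `run` are hypothetical helper names. Setting `DRY_RUN=1` only prints the commands.

```bash
#!/usr/bin/env bash
# Hypothetical helper: generate an RSA key pair on the master if none exists,
# then copy the public key to every host in the Spark slaves file.

# Execute a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_passwordless_ssh() {
  local slaves_file="$1"
  local key="$HOME/.ssh/id_rsa"
  run mkdir -p "$HOME/.ssh"
  run chmod 700 "$HOME/.ssh"
  # Empty passphrase (-N "") so the launch scripts can log in non-interactively.
  if [ ! -f "$key" ]; then
    run ssh-keygen -t rsa -N "" -f "$key"
  fi
  local host
  # A for loop (not while-read) keeps ssh-copy-id from consuming the host list on stdin.
  for host in $(sed 's/#.*$//; /^[[:space:]]*$/d' "$slaves_file"); do
    # Appends the key to ~/.ssh/authorized_keys on $host (asks for its password once).
    run ssh-copy-id -i "$key.pub" "$host"
  done
}
```

Because `master` is itself listed in the slaves file, running `setup_passwordless_ssh "$SPARK_HOME/conf/slaves"` also covers the local `authorized_keys` step. Afterwards, `ssh slave1` should log in without a password.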

- Edit `/etc/ssh/sshd_config`:

- Start the Spark processes:
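One option is Spark's `sbin/start-all.sh` from the standalone launch scripts shipped with Spark 2.4.3. It starts a Master on the current machine and, over SSH, a Worker on each host in `conf/slaves`. The sketch below is a hypothetical wrapper, `start_spark_standalone`, that calls the script by its full path. Only `bin/` directories were added to `PATH` earlier, so the full path also avoids confusion with Hadoop's own `sbin/start-all.sh`.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper: run Spark's start-all.sh from an explicit SPARK_HOME
# so Hadoop's script of the same name is never picked up by mistake.
start_spark_standalone() {
  local spark_home="${1:-${SPARK_HOME:-}}"
  local script="$spark_home/sbin/start-all.sh"
  if [ ! -x "$script" ]; then
    echo "cannot find executable $script" >&2
    return 1
  fi
  "$script"
}
```

If the cluster comes up, `jps` should list a `Master` process on the master and a `Worker` process on each worker. The master's web UI is served on port 8080 by default.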