1. Crawling the blog

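jsoup can download pages and parse the HTML with jQuery-style CSS selectors. Open http://blog.csdn.net/liaomin416100569 and view the page source (right-click, View Source). The category panel looks like this: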

Disclaimer: this content is for personal study only and is not used for any other purpose. In case of infringement, please contact 416100569@qq.com.

<div id="panel_Category" class="panel">
    <ul class="panel_head"><span>文章分类</span></ul>
    <ul class="panel_body">
        <li>
            <a href="/liaomin416100569/article/category/744627" onclick="_gaq.push(['_trackEvent','function', 'onclick', 'blog_articles_wenzhangfenlei']); ">activeMQ</a><span>(3)</span>
        </li>
        <li>
            <a href="/liaomin416100569/article/category/643417" onclick="_gaq.push(['_trackEvent','function', 'onclick', 'blog_articles_wenzhangfenlei']); ">ant</a><span>(3)</span>
        </li>
        <!-- ... -->
    </ul>
</div>

To get all categories, the selector #panel_Category a returns every link in the panel. text() gives the category name, attr("href") gives the category URL, and the <span> right after each link holds the article count.
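The href values in this panel are root-relative, for example /liaomin416100569/article/category/744627. The crawler below turns them into full URLs by plain string concatenation with a root that already ends in "/". A minimal sketch of a safer join uses the JDK's java.net.URI.resolve, which avoids producing a double slash. CategoryUrls is a hypothetical helper name and is not part of the original code. jsoup can also do this directly: for a document fetched with Jsoup.connect, el.absUrl("href") returns the absolute URL.

```java
import java.net.URI;

/**
 * Hypothetical helper, not part of the original crawler: turns the
 * relative href of a category link into an absolute URL.
 */
final class CategoryUrls {
    private CategoryUrls() {}

    // Resolves an href such as "/user/article/category/1" against the blog
    // root. A root-relative href replaces the root's path, so a trailing
    // slash on the root does not produce "//" in the result.
    static String absolute(String blogRoot, String href) {
        return URI.create(blogRoot).resolve(href).toString();
    }
}
```

For example, CategoryUrls.absolute("http://blog.csdn.net/", "/liaomin416100569/article/category/744627") yields http://blog.csdn.net/liaomin416100569/article/category/744627.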

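In the same way, fetch the title, description, content and last-updated time of every article in each category. Then build an index with Lucene. The code is as follows: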

》Category entity class

package cn.et.spilder;

public class Category {
    private String name;
    private String count;
    private String url;

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public String getCount() {
        return count;
    }

    public void setCount(String count) {
        this.count = count;
    }

    public String getUrl() {
        return url;
    }

    public void setUrl(String url) {
        this.url = url;
    }
}
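Category.count stores the raw text of the <span> next to each link, such as "(3)". You may need a numeric count, for example to skip empty categories. A minimal sketch keeps only the digits of that label. CategoryCount is a hypothetical name and does not appear in the original code.

```java
/**
 * Hypothetical helper, not part of the original crawler: turns the count
 * label stored in Category.count (for example "(3)") into a number.
 */
final class CategoryCount {
    private CategoryCount() {}

    // Keeps only the digits of the label; returns 0 when there are none.
    static int parse(String label) {
        if (label == null) {
            return 0;
        }
        String digits = label.replaceAll("\\D", "");
        return digits.isEmpty() ? 0 : Integer.parseInt(digits);
    }
}
```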

》Article entity class

package cn.et.spilder;

public class Arcticle {
    private String title;
    private String description;
    private String url;
    private String createTime;
    private String filePath;

    public String getTitle() {
        return title;
    }

    public void setTitle(String title) {
        this.title = title;
    }

    public String getDescription() {
        return description;
    }

    public void setDescription(String description) {
        this.description = description;
    }

    public String getUrl() {
        return url;
    }

    public void setUrl(String url) {
        this.url = url;
    }

    public String getCreateTime() {
        return createTime;
    }

    public void setCreateTime(String createTime) {
        this.createTime = createTime;
    }

    public String getFilePath() {
        return filePath;
    }

    public void setFilePath(String filePath) {
        this.filePath = filePath;
    }
}
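createTime is kept as a String. The crawler's saveFile method decides whether to re-download an article by comparing these strings with String.compareTo. That works only while the dates are fixed-width and zero-padded. Lexicographically, "2017-6-9" sorts after "2017-6-10".

The following minimal sketch parses both values with java.time before comparing them. It assumes the post-date text has the form yyyy-MM-dd HH:mm, which is an assumption about CSDN's markup. PostTimes is a hypothetical helper and needs Java 8.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/**
 * Hypothetical helper, not part of the original crawler.
 * Assumes the ".link_postdate" text looks like "2017-06-29 10:42".
 */
final class PostTimes {
    private PostTimes() {}

    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm");

    // Returns true when the crawled time is later than the saved one,
    // i.e. the article was edited and should be downloaded again.
    static boolean isNewer(String saved, String crawled) {
        LocalDateTime before = LocalDateTime.parse(saved.trim(), FORMAT);
        LocalDateTime after = LocalDateTime.parse(crawled.trim(), FORMAT);
        return after.isAfter(before);
    }
}
```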

》Lucene utility class

package cn.et.index;

import java.io.File;
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;

import org.apache.commons.io.FileUtils;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.TextField;
import org.apache.lucene.document.Field.Store;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.queryparser.classic.MultiFieldQueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.search.highlight.Highlighter;
import org.apache.lucene.search.highlight.QueryScorer;
import org.apache.lucene.search.highlight.SimpleHTMLFormatter;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.store.RAMDirectory;
import org.apache.lucene.util.Version;
import org.jsoup.Jsoup;
import org.wltea.analyzer.lucene.IKAnalyzer;

import cn.et.spilder.Arcticle;
import cn.et.spilder.CsdnSpilder;

/**
 * Lucene operations class
 * Time: 2017-6-30 09:14:48 AM
 * Author: LM
 * Contact: 973465719@qq.com
 */
public class LuceneUtils {
    // IK analyzer (Chinese word segmentation)
    static Analyzer analyzer = new IKAnalyzer();
    // maximum number of documents buffered in memory per batch
    final static int dealCount = 50;
    // HTML formatter: wraps every matched keyword in a red font tag
    static SimpleHTMLFormatter htmlFormatter = new SimpleHTMLFormatter("<font color=\"red\">", "</font>");

    /**
     * Close the writer
     * @param iw
     * @throws IOException
     */
    public static void close(IndexWriter iw) throws IOException {
        iw.close();
    }

    public static IndexWriter getRamWriter(RAMDirectory ramDirectory) throws IOException {
        IndexWriterConfig iwc = new IndexWriterConfig(Version.LUCENE_47, analyzer);
        return new IndexWriter(ramDirectory, iwc);
    }

    public static IndexWriter getWriter(String dir) throws IOException {
        // Lucene 5+ API: FSDirectory fsDirectory = FSDirectory.open(Paths.get(dir));
        FSDirectory fsDirectory = FSDirectory.open(new File(dir));
        IndexWriterConfig iwc = new IndexWriterConfig(Version.LUCENE_47, analyzer);
        return new IndexWriter(fsDirectory, iwc);
    }

    public static List<Arcticle> search(String indexDir, String keys) throws Exception {
        FSDirectory fsDirectory = FSDirectory.open(new File(indexDir));
        DirectoryReader reader = DirectoryReader.open(fsDirectory);
        try {
            IndexSearcher searcher = new IndexSearcher(reader);
            MultiFieldQueryParser qp = new MultiFieldQueryParser(Version.LUCENE_47, new String[]{"title", "content"}, analyzer);
            Query query = qp.parse(keys);
            // initialize the highlighter
            Highlighter high = new Highlighter(htmlFormatter, new QueryScorer(query));
            TopDocs td = searcher.search(query, 10);
            List<Arcticle> arcList = new ArrayList<Arcticle>();
            for (ScoreDoc ss : td.scoreDocs) {
                Document doc = searcher.doc(ss.doc);
                Arcticle arc = new Arcticle();
                arc.setTitle(doc.getField("title").stringValue());
                String desc = doc.get("desc");
                TokenStream tokenStream = analyzer.tokenStream("desc", new StringReader(desc));
                String str = high.getBestFragment(tokenStream, desc);
                // getBestFragment returns null when only the title or content matched
                arc.setDescription(str != null ? str : desc);
                arc.setUrl(CsdnSpilder.rootDir + doc.getField("url").stringValue());
                arc.setCreateTime(doc.getField("createTime").stringValue());
                arcList.add(arc);
            }
            return arcList;
        } finally {
            // release the index files after every search
            reader.close();
            fsDirectory.close();
        }
    }

    /**
     * Optimization: buffer documents in an in-memory index first, and merge
     * each full batch into the on-disk index
     * @param dir
     * @param arc
     * @throws IOException
     */
    public static void indexs(String dir, List<Arcticle> arc) throws IOException {
        new File(dir).mkdirs();
        // the index files live in the directory; IndexWriter is only the object that writes them
        IndexWriter iw = getWriter(dir);
        RAMDirectory ramDirectory = new RAMDirectory();
        IndexWriter ramWriter = getRamWriter(ramDirectory);
        for (int i = 1; i <= arc.size(); i++) {
            // index the current article first, so none is skipped at a batch boundary
            index(ramWriter, arc.get(i - 1));
            if (i % dealCount == 0 || i == arc.size()) {
                // the RAM writer must be closed before its directory can be merged into another writer
                ramWriter.commit();
                ramWriter.close();
                iw.addIndexes(ramDirectory);
                // start the next batch in a fresh RAMDirectory so merged documents are not added twice
                ramDirectory = new RAMDirectory();
                ramWriter = getRamWriter(ramDirectory);
            }
        }
        ramWriter.close();
        iw.commit();
        iw.close();
    }

    /**
     * Build a Document from an article and add it to the given writer
     * @param writer
     * @param arc
     * @return the document that was added
     * @throws IOException
     */
    public static Document index(IndexWriter writer, Arcticle arc) throws IOException {
        Document doc = new Document();
        doc.add(new TextField("title", arc.getTitle(), Store.YES));
        doc.add(new TextField("url", arc.getUrl(), Store.YES));
        doc.add(new TextField("createTime", arc.getCreateTime(), Store.YES));
        doc.add(new TextField("desc", arc.getDescription(), Store.YES));
        // the page was saved as UTF-8, so read it back as UTF-8 and index only its text
        String html = FileUtils.readFileToString(new File(arc.getFilePath()), "UTF-8");
        doc.add(new TextField("content", Jsoup.parse(html).text(), Store.YES));
        writer.addDocument(doc);
        return doc;
    }
}
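indexs buffers articles in a RAMDirectory and merges every batch of dealCount documents into the on-disk index with addIndexes. The order of "add" and "flush" in such a loop matters. If the batch-full check and the add are alternatives in one if/else, the item that completes each batch, and the last item, are never indexed.

The following stdlib-only sketch shows the add-then-flush order, independent of Lucene. Batches.split is a hypothetical helper. In the indexer, each returned batch would correspond to one RAM writer whose directory is merged into the disk writer.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper illustrating batch buffering; not Lucene code. */
final class Batches {
    private Batches() {}

    // Splits items into consecutive batches of at most batchSize elements.
    // Every item lands in exactly one batch, including the item that fills
    // a batch and the last item of the list.
    static <T> List<List<T>> split(List<T> items, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be > 0");
        }
        List<List<T>> batches = new ArrayList<List<T>>();
        List<T> current = new ArrayList<T>();
        for (T item : items) {
            current.add(item);                 // add first ...
            if (current.size() == batchSize) {
                batches.add(current);          // ... then flush a full batch
                current = new ArrayList<T>();  // and start an empty one
            }
        }
        if (!current.isEmpty()) {
            batches.add(current);              // flush the remainder
        }
        return batches;
    }
}
```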

》Crawler class

package cn.et.spilder;

import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

import org.apache.commons.io.FileUtils;
import org.apache.log4j.Logger;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import org.junit.Test;

import cn.et.index.LuceneUtils;

/**
 * CSDN spider: the HTML structure of the blog has to be analyzed by hand
 * Time: 2017-6-29 10:42:47 AM
 * Author: LM
 * Contact: 973465719@qq.com
 */
public class CsdnSpilder {
    // directory where downloaded files are saved
    public final static String SAVED_DIR = "F:/mycsdn/";
    // root URL of CSDN blogs
    public final static String rootDir = "http://blog.csdn.net/";
    // user name to crawl
    public final static String userName = "liaomin416100569";
    // path separator
    public final static String separatorChar = "/";
    // index directory

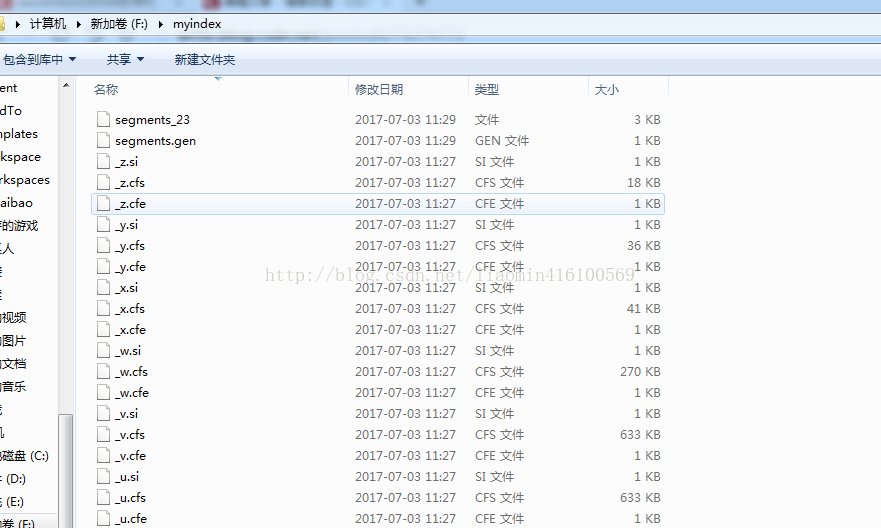

    public final static String indexDir = "F:/myindex/";
    // logger
    public final static Logger logger = Logger.getLogger(CsdnSpilder.class);

    /**
     * Crawler entry point
     * @param args
     * @throws IOException
     */
    public static void main(String[] args) throws IOException {
        logger.debug("Start processing: " + rootDir + userName);
        Document doc = getDoc(rootDir + userName);
        // get all categories
        List<Category> category = getCategory(doc);
        logger.debug("Number of categories: " + category.size());
        for (Category tmCate : category) {
            String curl = tmCate.getUrl();
            logger.debug("Processing category: " + tmCate.getName() + ", path: " + curl + ", articles: " + tmCate.getCount());
            // create the directory
            new File(SAVED_DIR + curl).mkdirs();
            List<Arcticle> arcList = getArcticle(curl);
            logger.debug("Start indexing");
            LuceneUtils.indexs(indexDir, arcList);
            logger.debug("Done");
        }
    }

    /**
     * Fetch the Document, retrying until the request succeeds
     * @param path
     * @return
     */
    public static Document getDoc(String path) {
        while (true) {
            try {
                return Jsoup.connect(path).get();
            } catch (Exception e) {
                logger.error("Failed to fetch " + path + ", retrying", e);
                try {
                    // pause so a failing page is not requested in a tight loop
                    Thread.sleep(1000);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("Interrupted while fetching " + path, ie);
                }
            }
        }
    }

    @Test
    public void testArcticle() throws IOException {
        String curl = "/liaomin416100569/article/category/650802";
        // create the directory
        new File(SAVED_DIR + curl).mkdirs();
        List<Arcticle> arcList = getArcticle(curl);
        LuceneUtils.indexs(indexDir, arcList);
        System.out.println(arcList.size());
    }

    /**
     * Get all categories
     * @param doc
     * @return
     * @throws IOException
     */
    public static List<Category> getCategory(Document doc) throws IOException {
        Elements newsHeadlines = doc.select("#panel_Category a");
        List<Category> cates = new ArrayList<Category>();
        for (int i = 0; i < newsHeadlines.size(); i++) {
            Element el = newsHeadlines.get(i);
            Category c = new Category();
            c.setName(el.text().trim());
            // the <span> right after the link holds the article count, e.g. "(3)"
            c.setCount(el.nextElementSibling().text());
            c.setUrl(el.attr("href"));
            cates.add(c);
        }
        return cates;
    }

    /**
     * Get all articles of a category (not recommended for categories with very many articles)
     * @param typeUrl
     * @return
     * @throws IOException
     */
    public static List<Arcticle> getArcticle(String typeUrl) throws IOException {
        String category = rootDir + typeUrl;
        Document doc = getDoc(category);
        // check whether the article list is paginated
        Elements newsHeadlines = doc.select("#papelist");
        int totalPage = 0;
        List<Arcticle> arcs = new ArrayList<Arcticle>();
        if (newsHeadlines.size() > 0) {
            // paginated: the first child of the pager contains "共N页" ("N pages in total")
            totalPage = Integer.parseInt(newsHeadlines.get(0).child(0).text().split("共")[1].split("页")[0].trim());
            for (int i = 1; i <= totalPage; i++) {
                arcs.addAll(getArcticle(typeUrl, i));
            }
        } else {
            arcs.addAll(getArcticle(typeUrl, 1));
        }
        return arcs;
    }

    /**
     * Get the articles on one page of a category
     * @param typeUrl
     * @param page
     * @return
     * @throws IOException
     */
    public static List<Arcticle> getArcticle(String typeUrl, int page) throws IOException {
        // URL of the current page
        String pageUrl = rootDir + typeUrl + separatorChar + page;
        // directory the page's articles are saved to
        String destPath = SAVED_DIR + typeUrl + separatorChar + page;
        // create it in advance
        new File(destPath).mkdirs();
        // getDoc reconnects automatically if the connection fails
        Document doc = getDoc(pageUrl);
        // title elements of all articles on the page
        Elements docs = doc.select(".link_title");
        List<Arcticle> arcs = new ArrayList<Arcticle>();
        for (int i = 0; i < docs.size(); i++) {
            Element el = docs.get(i);
            Element ael = el.child(0);
            // address of the link on the title
            String url = ael.attr("href");
            String title = ael.text();
            Arcticle a = new Arcticle();
            a.setUrl(url);
            a.setTitle(title);
            // description element
            Element descElement = el.parent().parent().nextElementSibling();
            String description = descElement.text();
            a.setDescription(description);
            // last-updated time element
            Element timeElement = descElement.nextElementSibling();
            String createTime = timeElement.select(".link_postdate").text();
            a.setCreateTime(createTime);
            logger.debug(title + url + description + createTime);
            arcs.add(a);
            // save the article to a file
            String filePath = saveFile(destPath, rootDir + url, a);
            a.setFilePath(filePath);
        }
        return arcs;
    }

    /**
     * Save the HTML content to a file
     * @param destDir target directory
     * @param htmlUrl URL of the HTML page to crawl
     * @param arc article metadata, written next to the HTML as a .ini properties file
     * @return path of the saved HTML file
     * @throws IOException
     */
    public static String saveFile(String destDir, String htmlUrl, Arcticle arc) throws IOException {
        String fileName = htmlUrl.substring(htmlUrl.lastIndexOf("/") + 1);
        String file = destDir + separatorChar + fileName + ".html";
        String iniFile = destDir + separatorChar + fileName + ".ini";
        File hfile = new File(file);
        boolean ifUpdate = true;
        // the file already exists: check whether the article has been updated since
        if (hfile.exists()) {
            Properties p = new Properties();
            try (FileInputStream in = new FileInputStream(iniFile)) {
                p.load(in);
            }
            String createTime = p.getProperty("createTime");
            // the saved time is earlier than the crawled time, so the article was modified and must be saved again
            if (createTime.compareTo(arc.getCreateTime()) < 0) {
                hfile.delete();
                ifUpdate = true;
            } else {
                ifUpdate = false;
            }
        }
        if (ifUpdate) {
            // download the page only when it actually has to be written
            Document doc = getDoc(htmlUrl);
            // write the metadata to the properties file, then the HTML file
            Properties p = new Properties();
            p.setProperty("title", arc.getTitle());
            p.setProperty("url", arc.getUrl());
            p.setProperty("description", arc.getDescription());
            p.setProperty("createTime", arc.getCreateTime());
            try (FileOutputStream out = new FileOutputStream(iniFile)) {
                p.store(out, htmlUrl);
            }
            FileUtils.writeStringToFile(hfile, doc.toString(), "UTF-8");
        }
        return file;
    }
}
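getDoc keeps retrying forever when a page cannot be fetched. A bounded alternative, sketched with only the JDK, retries up to maxAttempts times and doubles the pause between attempts (exponential backoff). Retry.withBackoff is a hypothetical helper, not part of jsoup or the original code.

```java
import java.util.concurrent.Callable;

/** Hypothetical retry helper with exponential backoff. */
final class Retry {
    private Retry() {}

    // Calls task until it succeeds or maxAttempts is reached, sleeping
    // between attempts with a delay that doubles each time.
    static <T> T withBackoff(Callable<T> task, int maxAttempts, long initialDelayMillis)
            throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        long delay = initialDelayMillis;
        for (int attempt = 1; ; attempt++) {
            try {
                return task.call();
            } catch (Exception e) {
                if (attempt >= maxAttempts) {
                    throw e;           // give up and surface the last failure
                }
                Thread.sleep(delay);   // wait before the next attempt
                delay *= 2;
            }
        }
    }
}
```

With Java 8 lambdas, the fetch could be wrapped as Retry.withBackoff(() -> Jsoup.connect(path).get(), 5, 1000). The caller then decides what to do when a page stays unreachable, instead of the crawler hanging on it.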

》Maven dependencies (the IK analyzer stopped being updated in 2012 and only supports Lucene up to 4.7.2, so that version is used)

<dependency>
    <groupId>org.jsoup</groupId>
    <artifactId>jsoup</artifactId>
    <version>1.7.2</version>
</dependency>
<dependency>
    <groupId>org.apache.commons</groupId>
    <artifactId>commons-io</artifactId>
    <version>1.3.2</version>
</dependency>
<dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-log4j12</artifactId>
    <version>1.4.3</version>
</dependency>
<dependency>
    <groupId>com.janeluo</groupId>
    <artifactId>ikanalyzer</artifactId>
    <version>2012_u6</version>
</dependency>
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-highlighter</artifactId>
    <version>4.7.2</version>
</dependency>

》log4j configuration: add log4j.properties under src/main/resources. Maven copies resource files onto the classpath from there, not from src/main/java.

log4j.rootLogger=debug,stdout
log4j.appender.stdout = org.apache.log4j.ConsoleAppender
log4j.appender.stdout.Target = System.out
log4j.appender.stdout.layout = org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern =%d{ABSOLUTE} %5p %c :%L - %m%n

The result is as follows:

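2. Searching the index files

Spring Boot publishes the web application quickly, and AngularJS sends the AJAX request and renders the results with ng-repeat.

》Add the Maven dependencies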

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>cn.et</groupId>
    <artifactId>spilder</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>1.5.4.RELEASE</version>
    </parent>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- JSP support -->
        <dependency>
            <groupId>org.apache.tomcat.embed</groupId>
            <artifactId>tomcat-embed-jasper</artifactId>
        </dependency>
        <dependency>
            <groupId>javax.servlet</groupId>
            <artifactId>javax.servlet-api</artifactId>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>javax.servlet</groupId>
            <artifactId>jstl</artifactId>
        </dependency>
        <dependency>
            <groupId>com.janeluo</groupId>
            <artifactId>ikanalyzer</artifactId>
            <version>2012_u6</version>
        </dependency>
        <dependency>
            <groupId>org.apache.lucene</groupId>
            <artifactId>lucene-highlighter</artifactId>
            <version>4.7.2</version>
        </dependency>
    </dependencies>
</project>

package cn.et;


import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class WebStart {
    public static void main(String[] args) {
        SpringApplication.run(WebStart.class, args);
    }
}

package cn.et.controller;

import java.util.List;

import javax.servlet.http.HttpServletResponse;

import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

import cn.et.index.LuceneUtils;
import cn.et.spilder.Arcticle;

@RestController
public class SearchController {
    // index directory
    public final static String indexDir = "F:/myindex/";

    @RequestMapping("search")
    public List<Arcticle> search(String key, HttpServletResponse rsp) throws Exception {
        rsp.setHeader("Content-Type", "application/json;charset=UTF-8");
        return LuceneUtils.search(indexDir, key);
    }
}

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN">
<html>
<head>
<title>s.html</title>
<meta http-equiv="keywords" content="keyword1,keyword2,keyword3">
<meta http-equiv="description" content="this is my page">
<meta http-equiv="content-type" content="text/html; charset=UTF-8">
<!--<link rel="stylesheet" type="text/css" href="./styles.css">-->
<script type="text/javascript" src="angular.min.js"></script>
</head>
<body>
<div ng-app="searchApp" ng-controller="searchController">
    Search key: <input type="text" name="key" ng-model="key"> <input type="button" value="Search" ng-click="search()">
    <div ng-repeat="x in searchData">
        <a ng-href="{{x.url}}"><font size="5"><span ng-bind-html="x.title | trustHtml"></span><br/></font></a>
        <span ng-bind-html="x.description | trustHtml"></span><hr>
    </div>
</div>
<script type="text/javascript">
var searchApp = angular.module("searchApp", []);
searchApp.controller("searchController", function($scope, $http) {
    $scope.search = function() {
        $http({
            method: "GET",
            url: "search",
            // $http URL-encodes params, so keys containing spaces, '&' or Chinese characters arrive intact
            params: {key: $scope.key}
        }).then(function(res) {
            $scope.searchData = res.data;
        }, function(res) {
            alert("Search failed: " + res.status + "-" + res.statusText);
        });
    };
});
// Angular escapes HTML by default, so this filter marks the highlighted HTML as trusted
searchApp.filter("trustHtml", function($sce) {
    return function(val) {
        return $sce.trustAsHtml(val);
    };
});
</script>
</body>
</html>

Open http://localhost:8080/s.html and search for a keyword. Clicking a title opens the article on the CSDN blog.
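The key typed on the page ends up in the query string of the search request. If it is concatenated as-is, a key containing spaces, '&', '#' or '+' changes the meaning of the request. Percent-encoding it as UTF-8 avoids that, and AngularJS's $http does this for values passed through its params option.

The following Java sketch shows the same encoding with java.net.URLEncoder. SearchUrl.forKey is a hypothetical name, for example for a Java client or test of the search endpoint.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

/** Hypothetical helper that builds the search URL used by the page. */
final class SearchUrl {
    private SearchUrl() {}

    // Builds "search?key=..." with the key form-encoded as UTF-8:
    // spaces become '+', reserved and non-ASCII characters become %XX.
    static String forKey(String key) {
        try {
            return "search?key=" + URLEncoder.encode(key, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }
}
```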

Learning path (about one week)

jsoup: knowing how jQuery selectors work is enough (http://www.runoob.com/jquery/jquery-tutorial.html)

AngularJS (http://www.runoob.com/angularjs/angularjs-tutorial.html)

Spring Boot (http://docs.spring.io/spring-boot/docs/1.5.4.RELEASE/reference/htmlsingle/)

Lucene: examples on the official Lucene site (http://lucene.apache.org/core/6_6_0/index.html)