1.哈夫曼树简述

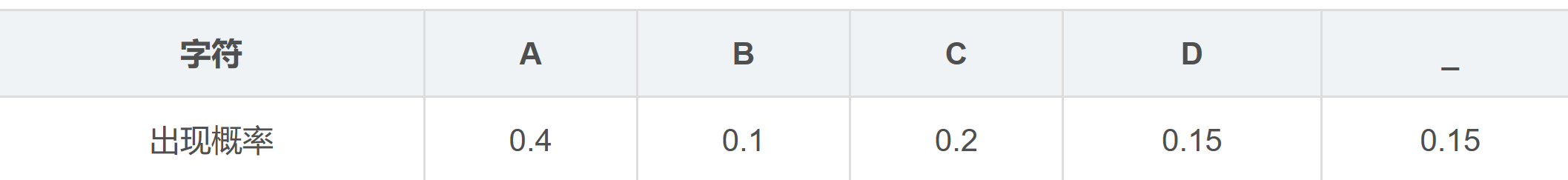

- 给定n个权值作为n个叶子结点,构造一棵二叉树,若带权路径长度达到最小,称这样的二叉树为最优二叉树,也称为哈夫曼树(Huffman Tree)。

- 为什么使用哈夫曼编码压缩文件?

- 一个字符八个bits,若使用编码来表示字母,节省空间,传输速度快。根据字母出现的频率构建哈夫曼树,频数即为权值,频数出现最多的字母更靠近哈夫曼树的根节点,编码长度也更短。

2.构造树的节点

- 先构造一个哈夫曼树的节点类,节点类包含5个元素:指向左节点、右节点的指针、代表的字母、频数和对应编码。

private HaffNode left,right;

private char ch;

private int n;

private String code;

- 复写节点类的compareTo方法,方便排序。

//便于使用列表排序

@Override

public int compareTo(Object o) {

HaffNode a=(HaffNode)o;

if(this.n>a.n)

return 1;

else if(this.n==a.n)

return 0;

else

return -1;

}

3.构造哈夫曼树的类(压缩)

- 构造一个哈夫曼树类,类中有6个元素:包含26个字母和空格的字符数组、读取文件得到的字符数组、保存各个节点权值的整数数组、哈夫曼树的根节点,哈夫曼树的列表形式,以及保存各个节点编码值的字符串数组。

//创立字母表

private static char[] ch={'a','b','c','d','e','f','g','h','i','j','k','l','m','n','o','p','q','r','s','t','u','v','w','x','y','z',' '};

//文件内容的字符数组

private char[] a=new char[100];

//各个字符的频数

private int[] sum=new int[27];

//树的根节点

private HaffNode root;

//保存压缩文件的单个编码

private String[] strings=new String[28];

//哈夫曼树的列表形式

private LinkedList treelist=new LinkedList<HaffNode>();

- 读取未压缩文件

//从文件中读取字节流,并保存为字符数组

public void read(String path,String name) throws IOException {

int i=0;

File f=new File(path,name);

Reader reader=new FileReader(f);

while(reader.ready()){

a[i++]=(char)reader.read();

}

reader.close();

System.out.print("压缩前的文件内容为:");

for(int k=0;k<a.length;k++){

System.out.print(a[k]);

}

System.out.println();

}

- 进行频数统计

//统计各个字母的频次

public void count(){

for(int k=0;k<a.length;k++){

for (int j=0;j<ch.length;j++){

if(a[k]==ch[j])

sum[j]++;

}

}

}

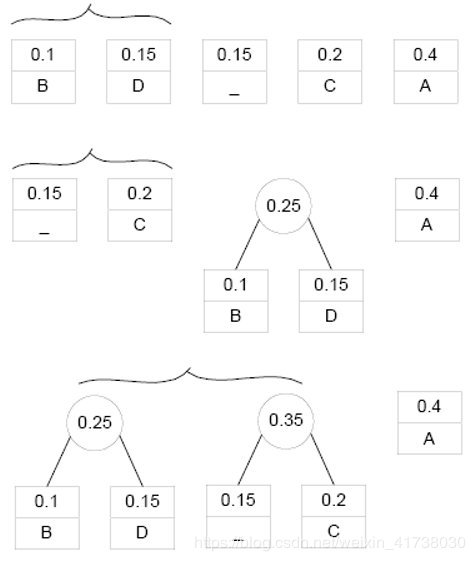

- 构造一棵哈夫曼树,将所有节点都加入列表后先排序一次。当列表长度大于1时,每次选取两个列表中权值最小的节点(也就是列表第1位和第2位的节点),构成一个字符为空的父节点,列表中删除这两个节点,把父节点加入列表后,对列表再次排序,循环到列表中只剩下一个节点时,该节点为根节点,保存。

//构造哈夫曼树,保存树的根节点

public LinkedList createTree() {

count();

//匹配频数和字符构成节点,全部加入树的列表

for (int i = 0; i < 27; i++) {

HaffNode node = new HaffNode();

node.setCh(ch[i]);

node.setN(sum[i]);

treelist.add(i, node);

}

Collections.sort(treelist);

while (treelist.size() > 1) {

//获得两个权值最小的节点

HaffNode first = (HaffNode) treelist.removeFirst();

HaffNode second = (HaffNode) treelist.removeFirst();

//将这两个权值最小的节点构造成父节点

HaffNode parent = new HaffNode();

parent.setN(first.getN() + second.getN());

parent.setLeft(first);

parent.setRight(second);

//把父节点添加进列表,并重新排序

treelist.add(parent);

Collections.sort(treelist);

}

root= (HaffNode) treelist.getFirst();

}

- 根据哈夫曼树获取编码:左子树编码为0,右子树编码为1;默认左右子树中频数较大的放右边。

//根据构建好的哈夫曼树获得各节点编码

public void getCode(HaffNode root,String code){

if(root.getLeft()!=null){

getCode(root.getLeft(),code+"0");

}

if(root.getRight()!=null){

getCode(root.getRight(),code+"1");

}

if(root.getRight()==null&&root.getLeft()==null){

System.out.println(root.getCh()+"的频次是"+root.getN()+",编码是"+code);

root.setCode(code);

return treelist;

}

}

- 根据编码转换文件内容的格式,并将转换后的结果存入文件。存入文件时写入频数表(存入字符+频数,以逗号分割,例如“a2,b3”表示a出现了2次,b出现了3次),文件内容的编码之间以空格分割,便于解压。

//文件压缩:格式转换与文件写入

public void Compress(String path) throws IOException {

String result="";

for(int i=0;i<27;i++){

result+=ch[i]+""+sum[i]+",";

}

String content="";

for(int i=0;i<a.length;i++){

for(int k=0;k<ch.length;k++){

if(a[i]==ch[k])

content+=search(root,ch[k]).getCode()+" ";

}

}

result+=content;

File f=new File(path);

if(!f.exists()){

f.createNewFile();

}

System.out.println("编码结果为:"+content);

Writer writer=new FileWriter(f);

BufferedWriter bufferedWriter=new BufferedWriter(writer);

bufferedWriter.write(result);

bufferedWriter.flush();

bufferedWriter.close();

}

- 写入一个search()方法,根据字符找到节点,从而找到该字符编码

public HaffNode search(HaffNode root,char c) {

if(root.getCh()==c){

return root;

}

if(root.getLeft()!=null||root.getRight()!=null) {

HaffNode a=search(root.getLeft(),c);

HaffNode b=search(root.getRight(),c);

if(a!=null)

return a;

if(b!=null)

return b;

}

return null;

}

4.构造哈夫曼树的类(解压)

- 解压的相关方法可以和加压的相关方法放在同一个哈夫曼树的类里,共用相同的私有变量,互不影响。为了便于区分,这里将两个过程分开来写。

- 获取压缩后的文件:

public void read2(String path,String name) throws IOException {

//读取文件

File file=new File(path,name);

Reader reader=new FileReader(file);

BufferedReader bufferedReader=new BufferedReader((new InputStreamReader(new FileInputStream(file),"GBK")));

String str="";

String temp="";

while((temp=bufferedReader.readLine())!=null){

System.out.println("解压前文件内容:"+temp);

str=temp;

}

//获取每个字符的频数(使用逗号分割),以及文件压缩后的内容

StringTokenizer s =new StringTokenizer(str,",");

int i=0;

while (s.hasMoreTokens()){

strings[i++]=s.nextToken();

}

}

- 根据频数造树:只有在添加节点时和压缩的方法有区别,分解表示频数的字符串,第一位表示字符,第二位表示频数,例如“a2”。

public LinkedList createTree2(){

for(int i=0;i<27;i++){

HaffNode temp=new HaffNode();

temp.setCh(strings[i].charAt(0));

temp.setN(strings[i].charAt(1)-'0');

treelist.add(temp);

}

Collections.sort(treelist);

while (treelist.size() > 1) {

//获得两个权值最小的节点

HaffNode first = (HaffNode) treelist.removeFirst();

HaffNode second = (HaffNode) treelist.removeFirst();

//将这两个权值最小的节点构造成父节点

HaffNode parent = new HaffNode();

parent.setN(first.getN() + second.getN());

parent.setLeft(first);

parent.setRight(second);

//把父节点添加进列表,并重新排序

treelist.add(parent);

Collections.sort(treelist);

}

root= (HaffNode) treelist.getFirst();

return treelist;

}

- 根据哈夫曼树获得编码:直接复用压缩里的getCode()方法。

- 开始解压,同样写了一个search2()方法用来根据编码寻找节点,进而找到对应字符进行格式转换。

//格式转换与文件写入

public void reCompress(String path) throws IOException {

String t=strings[27];

String result="";

StringTokenizer stringTokenizer=new StringTokenizer(t);

while(stringTokenizer.hasMoreTokens()){

String temp=stringTokenizer.nextToken();

result+=search2(root,temp).getCh();

}

System.out.println("解压后的文件内容为:"+result);

File f=new File(path);

Writer writer=new FileWriter(f);

BufferedWriter bufferedWriter=new BufferedWriter(writer);

bufferedWriter.write(result);

bufferedWriter.flush();

bufferedWriter.close();

}

public HaffNode search2(HaffNode root,String code) {

if (root.getCode() == null) {

if (root.getLeft() != null || root.getRight() != null) {

HaffNode a = search2(root.getLeft(), code);

HaffNode b = search2(root.getRight(), code);

if (a != null)

return a;

if (b != null)

return b;

}

return null;

}

else if(root.getCode().equals(code)){

return root;

}

return null;

5.整体工程文件(包括测试类)

- 节点类

public class HaffNode implements Comparable {

private HaffNode left,right;

private char ch;

private int n;

private String code;

public void setLeft(HaffNode left) {

this.left = left;

}

public void setRight(HaffNode right) {

this.right = right;

}

public void setCh(char ch) {

this.ch = ch;

}

public void setN(int sum) {

this.n = sum;

}

public void setCode(String code) {

this.code = code;

}

public HaffNode getLeft() {

return left;

}

public HaffNode getRight() {

return right;

}

public char getCh() {

return ch;

}

public int getN() {

return n;

}

public String getCode() {

return code;

}

//便于使用列表排序

@Override

public int compareTo(Object o) {

HaffNode a=(HaffNode)o;

if(this.n>a.n)

return 1;

else if(this.n==a.n)

return 0;

else

return -1;

}

@Override

public String toString() {

return getCh()+"的频次是"+getN()+",编码为"+getCode();

}

}

- 哈夫曼树类

import java.io.*;

import java.util.*;

public class HaffmanTree {

//创立字母表

private static char[] ch={'a','b','c','d','e','f','g','h','i','j','k','l','m','n','o','p','q','r','s','t','u','v','w','x','y','z',' '};

private char[] a=new char[100];

private int[] sum=new int[27];

private HaffNode root;

//保存压缩文件的单个编码

private String[] strings=new String[28];

//哈夫曼树的列表形式

private LinkedList treelist=new LinkedList<HaffNode>();

//构造哈夫曼树,保存树的根节点

public LinkedList createTree() {

count();

//匹配频数和字符构成节点,全部加入树的列表

for (int i = 0; i < 27; i++) {

HaffNode node = new HaffNode();

node.setCh(ch[i]);

node.setN(sum[i]);

treelist.add(i, node);

}

Collections.sort(treelist);

while (treelist.size() > 1) {

//获得两个权值最小的节点

HaffNode first = (HaffNode) treelist.removeFirst();

HaffNode second = (HaffNode) treelist.removeFirst();

//将这两个权值最小的节点构造成父节点

HaffNode parent = new HaffNode();

parent.setN(first.getN() + second.getN());

parent.setLeft(first);

parent.setRight(second);

//把父节点添加进列表,并重新排序

treelist.add(parent);

Collections.sort(treelist);

}

root= (HaffNode) treelist.getFirst();

return treelist;

}

//根据哈夫曼树获得各节点编码

public void getCode(HaffNode root,String code){

if(root.getLeft()!=null){

getCode(root.getLeft(),code+"0");

}

if(root.getRight()!=null){

getCode(root.getRight(),code+"1");

}

if(root.getRight()==null&&root.getLeft()==null){

System.out.println(root.getCh()+"的频次是"+root.getN()+",编码是"+code);

root.setCode(code);

}

}

public void read(String path,String name) throws IOException {

//从文件中读取字节流

int i=0;

File f=new File(path,name);

Reader reader=new FileReader(f);

while(reader.ready()){

a[i++]=(char)reader.read();

}

reader.close();

System.out.print("压缩前的文件内容为:");

for(int k=0;k<a.length;k++){

System.out.print(a[k]);

}

System.out.println();

}

public void count(){

//统计各个字母的频次

for(int k=0;k<a.length;k++){

for (int j=0;j<ch.length;j++){

if(a[k]==ch[j])

sum[j]++;

}

}

}

//文件压缩

public void Compress(String path) throws IOException {

String result="";

for(int i=0;i<27;i++){

result+=ch[i]+""+sum[i]+",";

}

String content="";

for(int i=0;i<a.length;i++){

for(int k=0;k<ch.length;k++){

if(a[i]==ch[k])

content+=search(root,ch[k]).getCode()+" ";

}

}

result+=content;

File f=new File(path);

if(!f.exists()){

f.createNewFile();

}

System.out.println("编码结果为:"+content);

Writer writer=new FileWriter(f);

BufferedWriter bufferedWriter=new BufferedWriter(writer);

bufferedWriter.write(result);

bufferedWriter.flush();

bufferedWriter.close();

}

public HaffNode search(HaffNode root,char c) {

if(root.getCh()==c){

return root;

}

if(root.getLeft()!=null||root.getRight()!=null) {

HaffNode a=search(root.getLeft(),c);

HaffNode b=search(root.getRight(),c);

if(a!=null)

return a;

if(b!=null)

return b;

}

return null;

}

public HaffNode getRoot() {

return root;

}

public void read2(String path,String name) throws IOException {

//读取文件

File file=new File(path,name);

Reader reader=new FileReader(file);

BufferedReader bufferedReader=new BufferedReader((new InputStreamReader(new FileInputStream(file),"GBK")));

String str="";

String temp="";

while((temp=bufferedReader.readLine())!=null){

System.out.println("解压前文件内容:"+temp);

str=temp;

}

//获取每个字符的频数(使用逗号分割),以及文件压缩后的内容

StringTokenizer s =new StringTokenizer(str,",");

int i=0;

while (s.hasMoreTokens()){

strings[i++]=s.nextToken();

}

}

public LinkedList createTree2(){

for(int i=0;i<27;i++){

HaffNode temp=new HaffNode();

temp.setCh(strings[i].charAt(0));

temp.setN(strings[i].charAt(1)-'0');

treelist.add(temp);

}

Collections.sort(treelist);

while (treelist.size() > 1) {

//获得两个权值最小的节点

HaffNode first = (HaffNode) treelist.removeFirst();

HaffNode second = (HaffNode) treelist.removeFirst();

//将这两个权值最小的节点构造成父节点

HaffNode parent = new HaffNode();

parent.setN(first.getN() + second.getN());

parent.setLeft(first);

parent.setRight(second);

//把父节点添加进列表,并重新排序

treelist.add(parent);

Collections.sort(treelist);

}

root= (HaffNode) treelist.getFirst();

return treelist;

}

public void reCompress(String path) throws IOException {

String t=strings[27];

String result="";

StringTokenizer stringTokenizer=new StringTokenizer(t);

while(stringTokenizer.hasMoreTokens()){

String temp=stringTokenizer.nextToken();

result+=search2(root,temp).getCh();

}

System.out.println("解压后的文件内容为:"+result);

File f=new File(path);

Writer writer=new FileWriter(f);

BufferedWriter bufferedWriter=new BufferedWriter(writer);

bufferedWriter.write(result);

bufferedWriter.flush();

bufferedWriter.close();

}

public HaffNode search2(HaffNode root,String code) {

if (root.getCode() == null) {

if (root.getLeft() != null || root.getRight() != null) {

HaffNode a = search2(root.getLeft(), code);

HaffNode b = search2(root.getRight(), code);

if (a != null)

return a;

if (b != null)

return b;

}

return null;

}

else if(root.getCode().equals(code)){

return root;

}

return null;

}

}

- 测试主函数

import java.io.IOException;

import java.util.LinkedList;

public class HaffmanTest {

public static void main(String[] args) throws IOException {

HaffmanTree h=new HaffmanTree();

h.read("C:\Users\XPS\Desktop","haha.txt");

LinkedList temp=h.createTree();

for(int i=0;i<temp.size();i++){

h.getCode((HaffNode) temp.get(i),"");

}

h.Compress("E:\hehe.txt");

HaffmanTree r=new HaffmanTree();

r.read2("C:\Users\XPS\Desktop","hehe.txt");

LinkedList temp2=r.createTree2();

for(int i=0;i<temp2.size();i++)

r.getCode((HaffNode) temp2.get(i),"");

r.reCompress("C:\Users\XPS\Desktop\haha1.txt");

}

}

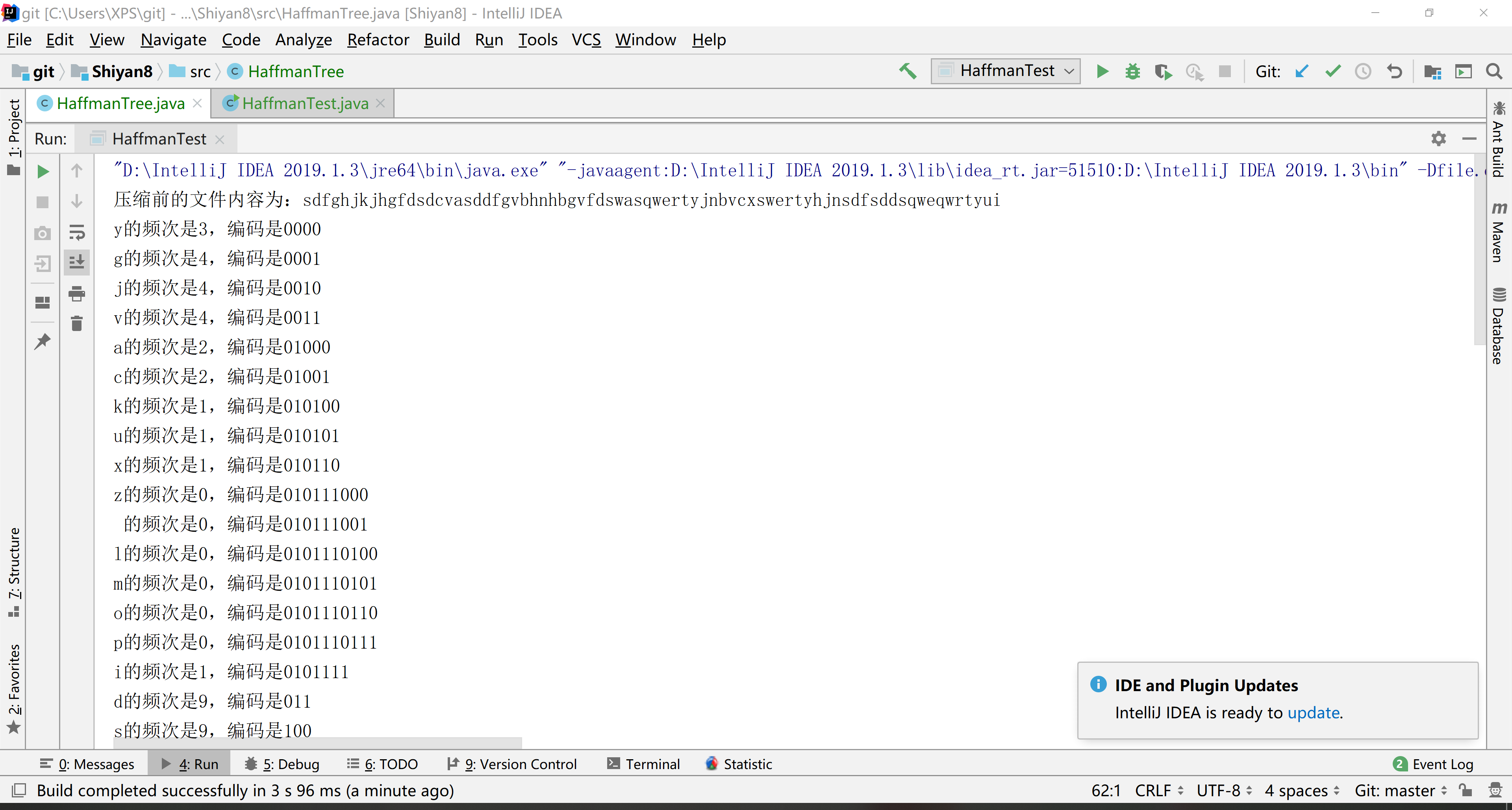

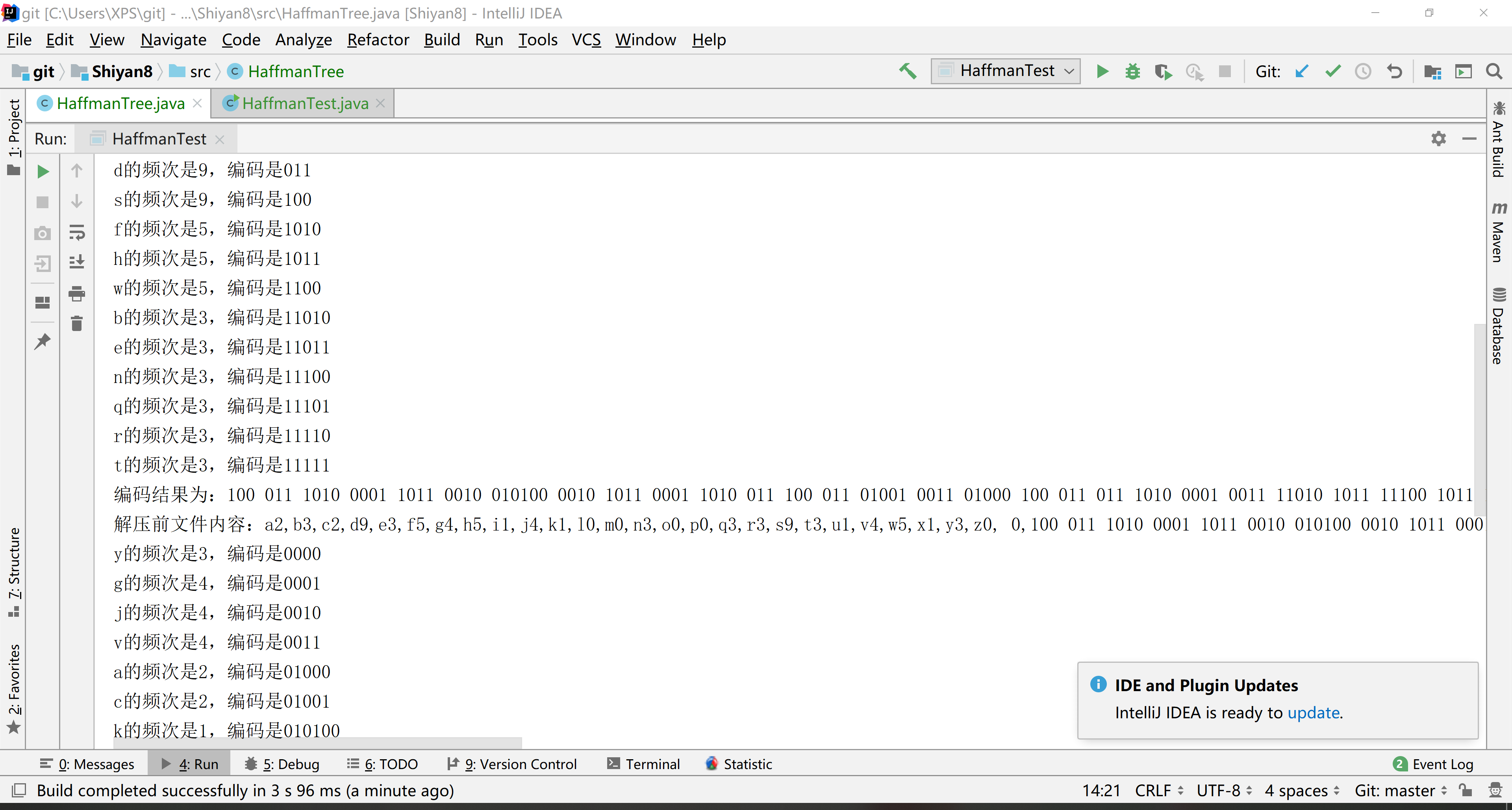

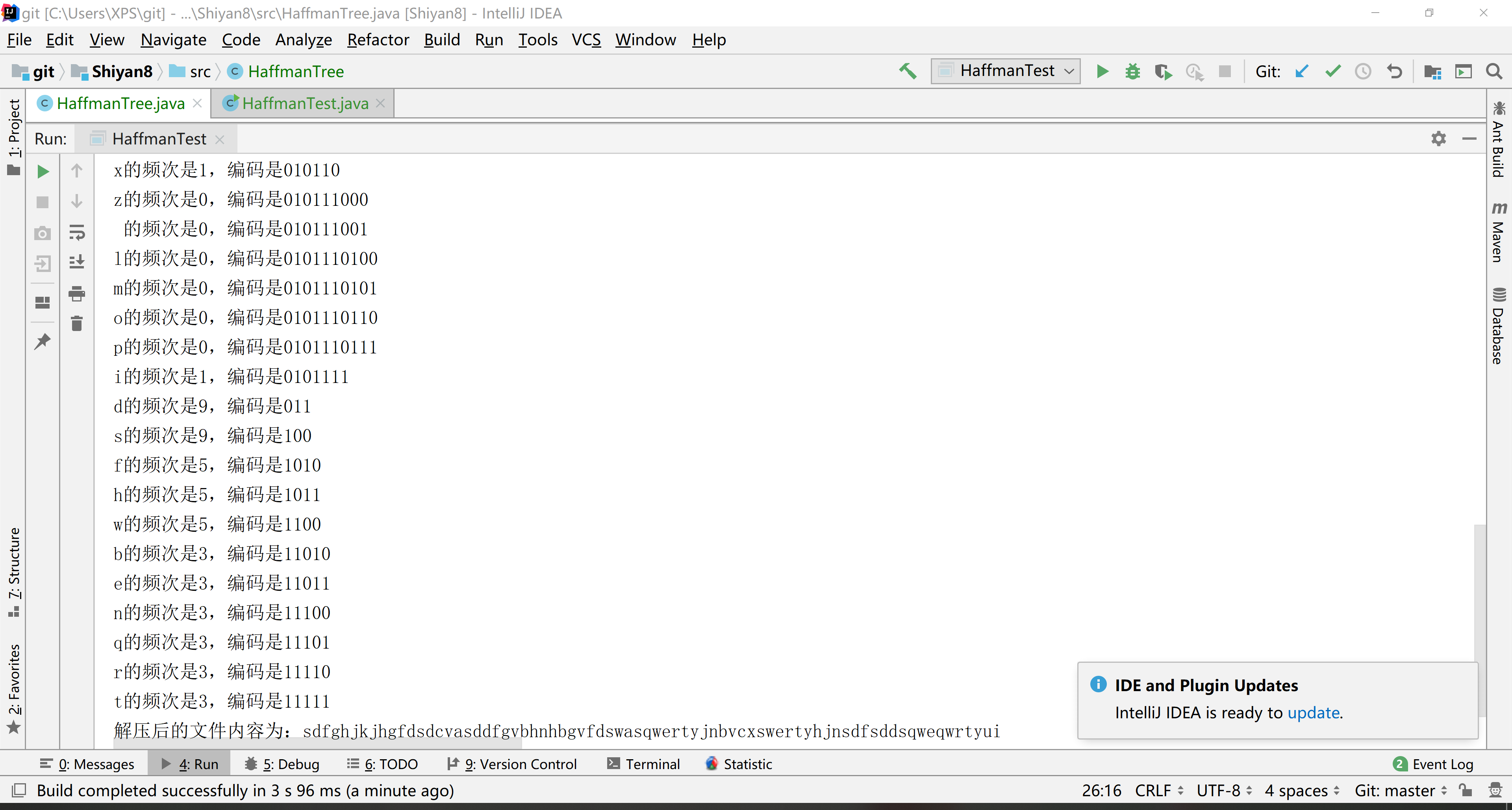

6.结果