STGNNs: SPATIAL-TEMPORAL GRAPH NEURAL NETWORKS

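Graphs in many real-world applications are dynamic in terms of both graph structure and graph inputs. STGNNs play an important role in capturing this dynamicity.

Methods in this category aim to model dynamic node inputs while assuming interdependency between connected nodes. STGNNs capture the spatial and temporal dependencies of a graph simultaneously.

The task of an STGNN can be forecasting future node values or labels, or predicting spatial-temporal graph labels.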

For example, a traffic network consists of speed sensors placed on roads, where edge weights are determined by the distance between pairs of sensors. As the traffic condition of one road may depend on its adjacent roads’ conditions, it is necessary to consider spatial dependence when performing traffic speed forecasting. As a solution, STGNNs capture spatial and temporal dependencies of a graph simultaneously.

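STGNNs follow two directions:

1) RNN-based methods

Most RNN-based approaches capture spatial-temporal dependencies by using graph convolutions to filter the inputs and hidden states passed to a recurrent unit.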

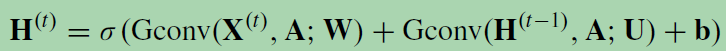

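A simple RNN takes the form:

$$H^{(t)} = \sigma\left(W X^{(t)} + U H^{(t-1)} + b\right)$$

where $X^{(t)} \in \mathbb{R}^{n \times d}$ is the node feature matrix at time step $t$. After inserting graph convolution, it becomes

$$H^{(t)} = \sigma\left(\mathrm{Gconv}(X^{(t)}, A; W) + \mathrm{Gconv}(H^{(t-1)}, A; U) + b\right)$$

where $\mathrm{Gconv}(\cdot)$ is a graph convolutional layer and $A$ is the adjacency matrix.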
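The recurrence with graph convolution can be sketched in NumPy as follows. This is only an illustrative sketch under stated assumptions: `Gconv` is taken to be a one-hop, symmetrically normalized graph convolution, the nonlinearity is `tanh`, and the helper names (`normalize_adjacency`, `gconv`, `graph_rnn_step`), the toy path graph, and the random weights are all hypothetical. GCRN and DCRNN use gated units (LSTM, GRU) and different convolutions.

```python
import numpy as np

def normalize_adjacency(A):
    """Return D^{-1/2} (A + I) D^{-1/2} (self-loops added, symmetric normalization)."""
    A_hat = A + np.eye(A.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(A_hat.sum(axis=1))
    return A_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gconv(X, A_norm, W):
    """Assumed one-hop graph convolution: aggregate neighbors, then apply a linear map."""
    return A_norm @ X @ W

def graph_rnn_step(X_t, H_prev, A_norm, W, U, b):
    """H_t = tanh(Gconv(X_t, A; W) + Gconv(H_prev, A; U) + b)."""
    return np.tanh(gconv(X_t, A_norm, W) + gconv(H_prev, A_norm, U) + b)

rng = np.random.default_rng(0)
n, d, h, T = 4, 3, 5, 6  # nodes, input features, hidden size, time steps

# Toy path graph 0 - 1 - 2 - 3 (illustrative data only).
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
A_norm = normalize_adjacency(A)

W = rng.normal(scale=0.1, size=(d, h))  # input-to-hidden weights
U = rng.normal(scale=0.1, size=(h, h))  # hidden-to-hidden weights
b = np.zeros(h)
X_seq = rng.normal(size=(T, n, d))      # node features for each time step

H = np.zeros((n, h))
for X_t in X_seq:  # the sequential loop is what makes RNN-based methods slow
    H = graph_rnn_step(X_t, H, A_norm, W, U, b)

print(H.shape)  # (4, 5)
```

Both the input and the previous hidden state are filtered through the graph before they are combined, so each node's state depends on its neighbors' inputs and states as well as its own history.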

Related work:

1): Graph convolutional recurrent network (GCRN) combines an LSTM network with ChebNet.

2): Diffusion convolutional RNN (DCRNN) incorporates a proposed diffusion graph convolutional layer into a GRU network. Besides, DCRNN adopts an encoder-decoder framework to predict the future K steps of node values.

3): Structural-RNN proposes a recurrent framework to predict node labels at each time step. It comprises two kinds of RNNs, namely, a node-RNN and an edge-RNN. The temporal information of each node and each edge is passed through a node-RNN and an edge-RNN, respectively. To incorporate the spatial information, a node-RNN takes the outputs of edge-RNNs as inputs. Since using a separate RNN for every node and edge would significantly increase model complexity, it instead splits nodes and edges into semantic groups. Nodes or edges in the same semantic group share the same RNN model, which reduces the computational cost.

RNN-based approaches suffer from time-consuming iterative propagation and gradient explosion/vanishing issues.

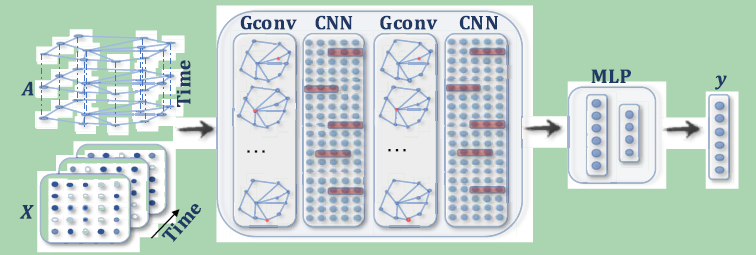

2) CNN-based methods

CNN-based approaches tackle spatial-temporal graphs in a nonrecursive manner with the advantages of parallel computing, stable gradients, and low-memory requirements.

CNN-based approaches interleave 1-D-CNN layers with graph convolutional layers to learn temporal and spatial dependencies, respectively.

Assume that the inputs to an STGNN are a tensor $X \in \mathbb{R}^{T \times n \times d}$. The 1-D-CNN layer slides over X[:, i, :] along the time axis to aggregate temporal information for each node, while the graph convolutional layer operates on X[i, :, :] to aggregate spatial information at each time step.
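The two operations can be sketched on a tensor of shape (T, n, d) as below. This is a minimal sketch, not any specific published architecture: the temporal kernel is shared across nodes and features, the graph convolution is an assumed one-hop row-normalized aggregation, and the names `temporal_conv` and `spatial_conv` and the toy data are hypothetical.

```python
import numpy as np

def temporal_conv(X, kernel):
    """1-D convolution along the time axis, applied to every X[:, i, :].

    X: (T, n, d); kernel: (k,); returns (T - k + 1, n, d) (no padding).
    """
    k = kernel.shape[0]
    T = X.shape[0]
    return np.stack([np.tensordot(kernel, X[t:t + k], axes=(0, 0))
                     for t in range(T - k + 1)])

def spatial_conv(X, A_norm, W):
    """Graph convolution applied to every X[t, :, :] independently.

    X: (T, n, d); A_norm: (n, n); W: (d, h); returns (T, n, h).
    """
    return np.einsum("ij,tjd,dh->tih", A_norm, X, W)

rng = np.random.default_rng(0)
T, n, d, h = 8, 4, 2, 3

# Toy path graph with self-loops, row-normalized (illustrative data only).
A_hat = np.array([[1, 1, 0, 0],
                  [1, 1, 1, 0],
                  [0, 1, 1, 1],
                  [0, 0, 1, 1]], dtype=float)
A_norm = A_hat / A_hat.sum(axis=1, keepdims=True)

X = rng.normal(size=(T, n, d))
kernel = np.array([0.25, 0.5, 0.25])  # temporal filter of width 3
W = rng.normal(size=(d, h))

# One interleaved block: temporal layer, then spatial layer, then ReLU.
out = np.maximum(spatial_conv(temporal_conv(X, kernel), A_norm, W), 0.0)
print(out.shape)  # (6, 4, 3)
```

Neither function carries state from one time step to the next, so all time steps can be processed in parallel, unlike the recurrent formulation.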

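The methods above all use a predefined graph structure. They assume that the predefined graph structure reflects the genuine dependency relationships among nodes. However, with many snapshots of graph data available in a spatial-temporal setting, it is possible to learn latent static graph structures automatically from the data.

Learning latent static spatial dependencies can help researchers discover interpretable and stable correlations among different entities in a network. In some circumstances, however, learning latent dynamic spatial dependencies may further improve model precision.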

GaAN employs attention mechanisms to learn dynamic spatial dependencies through an RNN-based approach. An attention function is used to update the edge weight between two connected nodes given their current node inputs.

ASTGCN further includes a spatial attention function and a temporal attention function to learn latent dynamic spatial dependencies and temporal dependencies through a CNN-based approach.

The common drawback of learning latent spatial dependencies is that it needs to calculate the spatial dependence weight between each pair of nodes, which costs $O(n^2)$.
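To make the quadratic cost concrete, the sketch below computes input-dependent weights for every pair of nodes. It is an illustration only: the scaled dot-product scoring, the function name `attention_adjacency`, and the projection matrices `Wq` and `Wk` are assumptions, not the attention functions actually used by GaAN or ASTGCN.

```python
import numpy as np

def attention_adjacency(X_t, A_mask, Wq, Wk):
    """Input-dependent edge weights at one time step.

    X_t: (n, d) current node inputs.
    A_mask: (n, n), nonzero where a weight may be learned. Every row needs
            at least one nonzero entry (e.g. a self-loop).
    Returns an (n, n) matrix whose rows sum to 1.
    """
    Q = X_t @ Wq
    K = X_t @ Wk
    scores = Q @ K.T / np.sqrt(Q.shape[1])          # n * n pairwise scores
    scores = np.where(A_mask > 0, scores, -np.inf)  # drop disallowed pairs
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
n, d, p = 4, 3, 2
X_t = rng.normal(size=(n, d))
Wq = rng.normal(size=(d, p))
Wk = rng.normal(size=(d, p))

# Path graph with self-loops: weights only between connected nodes.
A = np.array([[1, 1, 0, 0],
              [1, 1, 1, 0],
              [0, 1, 1, 1],
              [0, 0, 1, 1]], dtype=float)
edge_weights = attention_adjacency(X_t, A, Wq, Wk)

# Fully latent case: every pair of nodes is a candidate edge.
latent_weights = attention_adjacency(X_t, np.ones((n, n)), Wq, Wk)

print(edge_weights.shape)  # (4, 4)
```

The score matrix has one entry per ordered pair of nodes, so both time and memory grow as n squared. Because the weights depend on `X_t`, they must be recomputed at every time step in the dynamic case.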

Dynamic Graph Convolutional Networks (2017)

Many different classification tasks need to manage structured data, which are usually modeled as graphs. Moreover, these graphs can be dynamic, meaning that the vertices/edges of each graph may change during time. Our goal is to jointly exploit structured data and temporal information through the use of a neural network model.

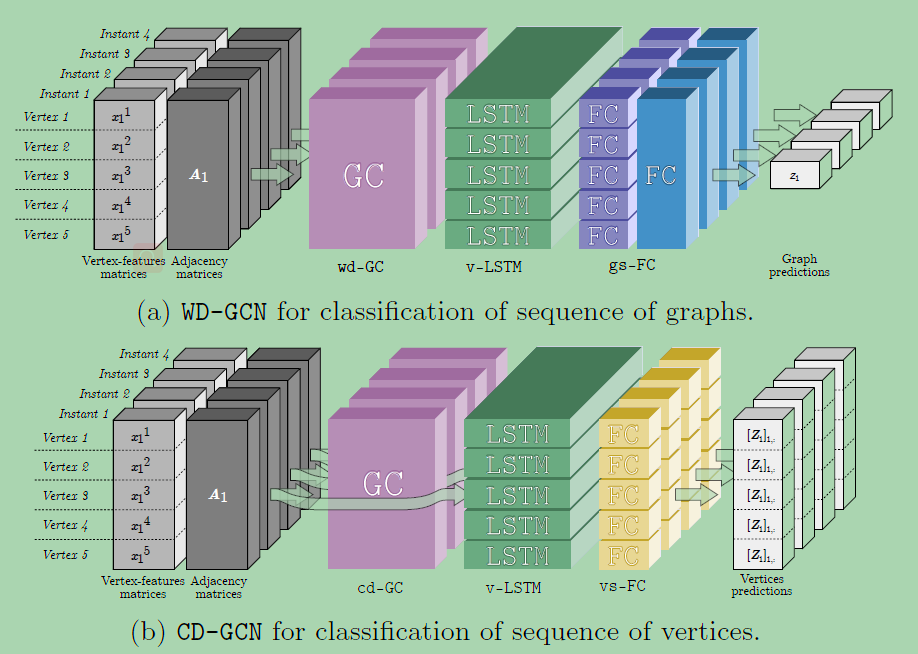

This paper proposes two novel approaches that combine Long Short-Term Memory networks and Graph Convolutional Networks to learn long short-term dependencies together with graph structure.

However, many real-world structured data are dynamic and nodes/edges in the graphs may change during time. In such a dynamic scenario, temporal information can also play an important role.

The new network architectures proposed in this paper operate on ordered sequences of graphs and ordered sequences of vertex features.

A require to pass the same set of measurement data through multiple iterations to estimate a fixed quantum state,