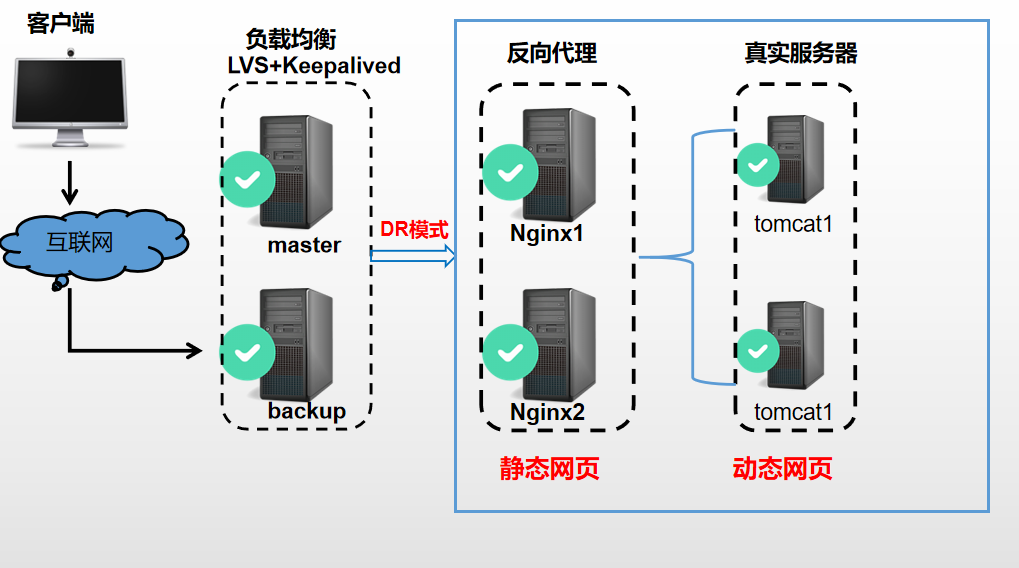

1. Project topology:

2. Project environment

| IP address | Hostname | Installed services |
|---|---|---|
| 192.168.253.40 | keepalived | keepalived, lvs |
| 192.168.253.50 | keepalived-backup | keepalived, lvs |
| 192.168.253.51 | nginx1 | nginx, tomcat |
| 192.168.253.52 | nginx2 | nginx, tomcat |
| 192.168.253.200 | VIP | held by the active keepalived node |

3. Key points of the lab:

1. Overview: in this architecture, keepalived provides hot standby for the LVS director (the load-balancing scheduler).

How it works:

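Both load balancers run keepalived, one as MASTER and the other as BACKUP. Clients send their requests to the master (through the VIP), and the master keeps sending VRRP advertisements to the backup. When the backup stops receiving them, it concludes that the master is down and takes over the VIP. This gives dual-machine hot standby.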
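How quickly the backup takes over depends on the VRRP timers. Assuming keepalived runs VRRP version 2 (RFC 3768, its default), a backup waits Master_Down_Interval = 3 * advert_int + (256 - priority) / 256 seconds without hearing an advertisement before it claims the VIP. The sketch below only evaluates that formula; the function name master_down_interval is made up for illustration, and keepalived's real timing may differ slightly.

```shell
#!/usr/bin/env bash
# Sketch: estimate how long a VRRPv2 backup waits before taking over the VIP.
# Formula from RFC 3768:
#   Skew_Time            = (256 - priority) / 256
#   Master_Down_Interval = 3 * advert_int + Skew_Time
# master_down_interval PRIORITY ADVERT_INT  -> prints seconds with 3 decimals
master_down_interval() {
  local priority=$1 advert_int=$2
  awk -v p="$priority" -v a="$advert_int" \
    'BEGIN { printf "%.3f\n", 3 * a + (256 - p) / 256 }'
}
```

With the backup settings used later (priority 50, advert_int 1), master_down_interval 50 1 prints 3.805, so the backup should take over roughly four seconds after the master goes silent.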

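2. Key point: in a plain LVS setup, rules for the ip_vs kernel module are written with the ipvsadm tool. With keepalived + LVS you do not need ipvsadm to write rules by hand: the virtual_server and real_server sections of the keepalived configuration file replace them, and keepalived programs ip_vs itself.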
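To see what keepalived saves you from, here is a sketch that prints (without running) the ipvsadm commands roughly equivalent to the virtual_server block configured later: a TCP service on the VIP with round-robin scheduling and two real servers in DR ("gatewaying", -g) mode. The function name print_ipvsadm_rules is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print (not run) ipvsadm commands that roughly match this lab's
# keepalived virtual_server block, for comparison with a manual LVS setup.
#   -A -t VIP:PORT -s rr     add a TCP virtual service, round-robin scheduler
#   -a -t VIP:PORT -r RS -g  add a real server in gatewaying (DR) mode
#   -w N                     real-server weight
# print_ipvsadm_rules VIP PORT WEIGHT REAL_SERVER...
print_ipvsadm_rules() {
  local vip=$1 port=$2 weight=$3
  shift 3
  echo "ipvsadm -A -t ${vip}:${port} -s rr"
  local rs
  for rs in "$@"; do
    echo "ipvsadm -a -t ${vip}:${port} -r ${rs}:${port} -g -w ${weight}"
  done
}
```

print_ipvsadm_rules 192.168.253.200 80 1 192.168.253.51 192.168.253.52 prints one -A line and two -a lines. Once keepalived is running, ipvsadm -Ln on the director (the ipvsadm package is then needed only for viewing) shows the table keepalived actually built.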

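3. Real-server health checks: keepalived can check a port on each nginx node (here a TCP_CHECK on port 80). When a node fails the check, keepalived removes it from the LVS pool and runs the script named in the notify_down option.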
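A TCP_CHECK passes when a TCP connection to connect_port opens within connect_timeout; this lab uses connect_timeout 10, nb_get_retry 3 and delay_before_retry 3. Below is a rough bash approximation using the /dev/tcp pseudo-path and coreutils timeout, handy for probing a node by hand. tcp_check is a hypothetical helper that only mimics the behaviour; it is not how keepalived implements its checker.

```shell
#!/usr/bin/env bash
# Hypothetical helper approximating keepalived's TCP_CHECK by hand.
# tcp_check HOST PORT [TIMEOUT] [RETRIES] [DELAY]
# Returns 0 as soon as one connection attempt succeeds, 1 if all attempts fail.
tcp_check() {
  local host=$1 port=$2 timeout_s=${3:-10} retries=${4:-3} delay=${5:-3}
  local attempt
  for ((attempt = 1; attempt <= retries; attempt++)); do
    # Try to open a TCP connection on fd 3 via bash's /dev/tcp pseudo-path,
    # giving up after timeout_s seconds.
    if timeout "$timeout_s" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    # Pause between attempts (like delay_before_retry), but not after the last one.
    if ((attempt < retries)); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example: tcp_check 192.168.253.51 80 10 3 3 && echo up || echo down.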

4. Why DR mode is used for load balancing

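Packet paths in LVS's three forwarding modes:

NAT: client --> LVS director --> real server --> LVS director --> client

TUN: client --> LVS director (IP tunnel) --> real server --> client

DR: client --> LVS director (MAC rewrite) --> real server --> client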

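Summary: in NAT mode both requests and responses pass through the director, so the director becomes the bottleneck, typically once the pool grows to around 20 or more real servers. TUN and DR both send responses straight from the real server to the client. The difference is that DR has no IP encapsulation overhead, but because it works at the data-link layer (it rewrites the destination MAC address), the director and all real servers must be on the same physical network segment.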
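Because DR relies on layer-2 delivery, a quick sanity check is whether the director and the real servers share an IP subnet. A sketch with hypothetical helper names that compares network addresses for a given prefix length; sharing a subnet is necessary but does not prove the hosts sit on one physical segment.

```shell
#!/usr/bin/env bash
# Sketch: check that two IPv4 addresses fall in the same subnet.
# ip_to_int A.B.C.D  -> prints the address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# same_subnet IP1 IP2 PREFIX  -> exit 0 if both share the network address
same_subnet() {
  local ip1 ip2 prefix=$3 mask
  ip1=$(ip_to_int "$1")
  ip2=$(ip_to_int "$2")
  # Netmask with the top PREFIX bits set, truncated to 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip1 & mask) == (ip2 & mask) ))
}
```

same_subnet 192.168.253.40 192.168.253.51 24 succeeds for this lab's addresses.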

4. Installation and configuration

On all hosts: disable the firewall and SELinux, set the hostname, and synchronize the time.

1.

systemctl stop firewalld # stop it now

systemctl disable firewalld # keep it disabled after reboot

2.

[root@keepalived ~]# getenforce # check the SELinux status

[root@keepalived ~]# setenforce 0 # switch to permissive mode until the next reboot

[root@keepalived ~]# getenforce

Permissive

[root@keepalived ~]# vim /etc/selinux/config # disable it permanently

SELINUX=enforcing ==> SELINUX=disabled
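Instead of editing the file in vim, the same change can be made with sed. A sketch; the function name is hypothetical and the path is a parameter so you can try it on a copy first. The new setting only takes effect after a reboot.

```shell
#!/usr/bin/env bash
# Sketch: set SELINUX=disabled non-interactively.
# disable_selinux_config [FILE]   (default: /etc/selinux/config)
disable_selinux_config() {
  local cfg=${1:-/etc/selinux/config}
  # Only the SELINUX= line matches; SELINUXTYPE= is left untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```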

3.

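hostnamectl set-hostname keepalived # set the hostname (use each host's own name from the table)

su -l # open a new login shell so the prompt shows the new name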

4.

yum -y install ntp ntpdate

ntpdate cn.pool.ntp.org # synchronize the time

Write the system time to the hardware clock:

[root@keepalived ~]# hwclock -w

1. keepalived

1.1 Install keepalived

tar -xf keepalived-2.0.19.tar.gz

mkdir -p /data/keepalived # create the installation directory

yum install openssl-devel gcc gcc-c++ make

cd keepalived-2.0.19/

./configure --prefix=/data/keepalived/

make

make install

1.2 Copy the configuration files

cd keepalived-2.0.19/keepalived/etc/

cp -R init /data/keepalived/

cp -R init /data/keepalived/etc/

cp -R init.d/ /data/keepalived/etc/

cp /data/keepalived/etc/init.d/keepalived /etc/init.d/

cp /data/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

mkdir /etc/keepalived

cp /data/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

cp /data/keepalived/sbin/keepalived /usr/sbin/

systemctl start keepalived

1.3 Edit the configuration file

On 192.168.253.40 (master):

[root@keepalived]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id lvs_master # name of this server

}

vrrp_instance VI_1 { # define the VRRP hot-standby instance

state MASTER # this node is the master

interface ens33

virtual_router_id 51 # must be identical on master and backup

priority 150 # priority (higher than the backup's 50)

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.253.200

}

}

virtual_server 192.168.253.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 0

protocol TCP

real_server 192.168.253.51 80 {

weight 1

notify_down /etc/keepalived/check.sh # script run when the TCP check on nginx port 80 fails

TCP_CHECK {

connect_port 80

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.253.52 80 {

weight 1

notify_down /etc/keepalived/check.sh

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}

On 192.168.253.50 (backup):

[root@keepalived-backup ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id lvs_backup

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 50

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.253.200

}

}

virtual_server 192.168.253.200 80 {

delay_loop 6

lb_algo rr

lb_kind DR

nat_mask 255.255.255.0

persistence_timeout 0

protocol TCP

real_server 192.168.253.51 80 {

weight 1

notify_down /etc/keepalived/check.sh

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

real_server 192.168.253.52 80 {

weight 1

notify_down /etc/keepalived/check.sh # script run when the TCP check on nginx port 80 fails

TCP_CHECK {

connect_timeout 10

nb_get_retry 3

delay_before_retry 3

connect_port 80

}

}

}
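Apart from state, priority and router_id, the two files should agree; in particular virtual_router_id must be the same on both nodes. A sketch with hypothetical helpers that extracts and compares it, assuming one vrrp_instance per file as in this lab.

```shell
#!/usr/bin/env bash
# Sketch: confirm master and backup configs use the same virtual_router_id.
# get_vrid FILE  -> prints the first virtual_router_id value found
get_vrid() {
  awk '$1 == "virtual_router_id" { print $2; exit }' "$1"
}

# same_vrid FILE1 FILE2  -> exit 0 if both define the same, non-empty ID
same_vrid() {
  local a b
  a=$(get_vrid "$1")
  b=$(get_vrid "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, copy the backup's file over with scp 192.168.253.50:/etc/keepalived/keepalived.conf /tmp/backup.conf, then run same_vrid /etc/keepalived/keepalived.conf /tmp/backup.conf && echo match.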

Failover test:

[root@keepalived ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

link/ether 00:0c:29:2d:07:5f brd ff:ff:ff:ff:ff:ff

inet 192.168.253.40/24 brd 192.168.253.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.253.200/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe2d:75f/64 scope link

valid_lft forever preferred_lft forever

[root@keepalived ~]# systemctl stop keepalived

After keepalived is stopped, ip a on the master no longer shows 192.168.253.200.

Check on keepalived-backup, which now holds the VIP:

[root@keepalived-backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

link/ether 00:0c:29:45:78:34 brd ff:ff:ff:ff:ff:ff

inet 192.168.253.50/24 brd 192.168.253.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.253.200/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe45:7834/64 scope link

valid_lft forever preferred_lft forever
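Scanning the full ip a output on both nodes gets tedious during repeated failover tests. A sketch (has_vip is a hypothetical helper) that reads ip -4 addr show output on stdin and exits 0 only if the VIP is configured.

```shell
#!/usr/bin/env bash
# Sketch: does this host currently hold the VIP?
# has_vip VIP  (reads `ip -4 addr show` output on stdin)
has_vip() {
  local vip=$1
  # Split "inet ADDR/PREFIX" and compare ADDR exactly with the VIP.
  awk -v vip="$vip" '$1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
                     END { exit !found }'
}
```

Run ip -4 addr show dev ens33 | has_vip 192.168.253.200 && echo "this node holds the VIP" on both directors before and after systemctl stop keepalived. When keepalived is started again on the master, its higher priority (150) should let it take the VIP back.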

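The notify_down script referenced in both configurations (make it executable with chmod +x /etc/keepalived/check.sh):

[root@keepalived ~]# cat /etc/keepalived/check.sh

#!/bin/bash

echo "nginx1 (192.168.253.51) or nginx2 (192.168.253.52) is down on $(date +%F-%T)" >>/root/check_nginx.log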
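The script above only records that one of the two nodes failed. A hypothetical variant that logs which real server went down, assuming the address is passed as an argument, e.g. notify_down "/etc/keepalived/check.sh 192.168.253.51" (check that your keepalived version accepts arguments in notify_down). The LOG_FILE override exists only to make testing easier.

```shell
#!/bin/bash
# Hypothetical variant of /etc/keepalived/check.sh that records WHICH real
# server was marked down. Assumes keepalived passes the address, e.g.
#   notify_down "/etc/keepalived/check.sh 192.168.253.51"
# LOG_FILE may be overridden; the default matches the original script.
LOG_FILE=${LOG_FILE:-/root/check_nginx.log}

log_down() {
  local rs=${1:-unknown}
  # Append (>>) so earlier failures are kept.
  echo "real server ${rs} is down on $(date +%F-%T)" >> "$LOG_FILE"
}

# Expects the real-server address as the first argument.
if [ $# -ge 1 ]; then
  log_down "$1"
fi
```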

2. nginx

2.1 Install nginx

For the nginx installation steps, see: https://www.cnblogs.com/lanist/p/12752504.html

2.2 Bind the VIP on the nginx + tomcat real servers

vim /etc/init.d/realserver

#!/bin/bash

VIP=192.168.253.200

# The functions library used by scripts in /etc/init.d sets umask, PATH and the locale; source it with "."

. /etc/rc.d/init.d/functions

case "$1" in

start)

# Configure the VIP on lo:0 with a /32 netmask and the VIP itself as the broadcast address

/sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 broadcast $VIP

/sbin/route add -host $VIP dev lo:0

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p >/dev/null 2>&1

echo "RealServer Start OK"

;;

stop)

/sbin/ifconfig lo:0 down

/sbin/route del $VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "RealServer Stopped"

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

exit 0
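The four echo lines are what make DR work: every real server owns the VIP on lo:0, but it must not answer ARP requests for it, or client traffic could bypass the director. arp_ignore=1 answers ARP only for addresses configured on the interface the request arrived on, and arp_announce=2 always uses the best local address as the ARP source. A sketch (check_arp_sysctls is hypothetical) to confirm the values after start; the base directory is a parameter so it can be tested against a fake tree.

```shell
#!/usr/bin/env bash
# Sketch: verify the ARP settings written by /etc/init.d/realserver.
# check_arp_sysctls [BASE]  (default BASE: /proc/sys/net/ipv4/conf)
# Prints one line per wrong value; exit status 0 only if all four are correct.
check_arp_sysctls() {
  local base=${1:-/proc/sys/net/ipv4/conf} iface ok=0
  for iface in lo all; do
    # arp_ignore=1: reply only for addresses on the receiving interface.
    if [ "$(cat "$base/$iface/arp_ignore")" != "1" ]; then
      echo "$iface/arp_ignore is not 1"; ok=1
    fi
    # arp_announce=2: always use the best local source address in ARP.
    if [ "$(cat "$base/$iface/arp_announce")" != "2" ]; then
      echo "$iface/arp_announce is not 2"; ok=1
    fi
  done
  return "$ok"
}
```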

[root@nginx2]# chmod +x /etc/init.d/realserver

Enable it at boot:

[root@nginx2]# chkconfig --add realserver

service realserver does not support chkconfig

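chkconfig needs a header in the script, so add these two lines near the top of /etc/init.d/realserver (right after #!/bin/bash):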

# chkconfig: 2345 10 90

# description: realserver ....

In the # chkconfig line, 2345 are the runlevels in which the service is enabled by default, 10 is the start priority and 90 is the stop priority.

[root@nginx1 ~]# chkconfig realserver on # enable the script at boot

[root@nginx1 ~]# systemctl start realserver # start the script

RealServer Start OK # the script started successfully

[root@nginx1 ~]# ifconfig # check that the lo:0 interface was really added

[root@nginx1 test]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 192.168.253.200/32 brd 192.168.253.200 scope global lo:0

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

link/ether 00:0c:29:f5:44:09 brd ff:ff:ff:ff:ff:ff

inet 192.168.253.51/24 brd 192.168.253.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fef5:4409/64 scope link

valid_lft forever preferred_lft forever

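chkconfig:

Description:

Sets the runlevel information for a service. The setting does not start or stop the service immediately.

Common options:

--add: adds the specified service so that chkconfig manages it, and adds the corresponding entries to the system startup files.

--del: removes the specified service from chkconfig management and deletes its entries from the system startup files.

--list [name]: shows whether each service is on or off in every runlevel; if name is given, only that service is shown.

Runlevels:

0: halt

1: single-user mode

2: multi-user command-line mode without networking

3: multi-user command-line mode with networking

4: unused

5: multi-user mode with a graphical interface

6: reboot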

2.3 Configure nginx1 and nginx2

In /data/nginx/conf, create proxy.conf with the following proxy settings:

# proxy.conf

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

client_max_body_size 10m;

client_body_buffer_size 128k;

proxy_connect_timeout 90;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffer_size 4k;

proxy_buffers 4 32k;

proxy_busy_buffers_size 64k;

proxy_temp_file_write_size 64k;

vim /data/nginx/conf/nginx.conf

user root; # user the worker processes run as

worker_processes 1; # number of worker processes, usually equal to the number of CPU cores

# global error log and PID file

error_log /usr/local/nginx/logs/error.log;

error_log /usr/local/nginx/logs/error.log notice;

error_log /usr/local/nginx/logs/error.log info;

pid /usr/local/nginx/logs/nginx.pid;

# event model and connection limit

events {

use epoll; # epoll is an I/O multiplexing method available only on Linux 2.6+ kernels; it greatly improves nginx performance

worker_connections 1024; # maximum number of concurrent connections per worker process

}

# HTTP server: nginx's reverse-proxy features provide the load balancing

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

# request buffers

server_names_hash_bucket_size 128;

client_header_buffer_size 32K;

large_client_header_buffers 4 32k;

# client_max_body_size 8m;

# sendfile makes nginx send files with the sendfile() system call (zero copy). Keep it on for ordinary sites;

# for download servers with heavy disk I/O it can be set to off to balance disk and network I/O and reduce system load.

sendfile on;

tcp_nopush on;

tcp_nodelay on;

# keep-alive connection timeout

#keepalive_timeout 0;

keepalive_timeout 65;

# enable gzip compression to reduce traffic

gzip on;

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_comp_level 2;

gzip_types text/plain application/x-javascript text/css application/xml;

gzip_vary on;

# tomcat pool: all load-balanced backend servers go here

upstream tomcat_pool {

# server <tomcat address>:<port>; the higher the weight, the larger the share of requests

server 192.168.253.51:8080 weight=4 max_fails=2 fail_timeout=30s;

server 192.168.253.52:8080 weight=4 max_fails=2 fail_timeout=30s;

}

server {

listen 80; # listening port

server_name 192.168.253.200; # public name of the site (domain or IP)

# default requests

location / {

index index.jsp index.html index.htm; # default index pages

root /usr/local/tomcat/webapps; # site root, where the web projects live

}

#charset koi8-r;

#access_log logs/host.access.log main;

# all JSP pages are handled by tomcat

location ~ \.(jsp|jspx|do)$

{

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_pass http://tomcat_pool; #转向tomcat处理

}

# static files are served by nginx directly, without going through tomcat

location ~ .*\.(htm|html|gif|jpg|jpeg|png|bmp|swf|ico|rar|zip|txt|flv|mid|doc|ppt|pdf|xls|mp3|wma)$

{

root /usr/local/tomcat/webapps;

expires 30d;

}

location ~ .*\.(js|css)$

{

root /usr/local/tomcat/webapps;

expires 1h;

}

#log_format access '$remote_addr - $remote_user [$time_local] "$request" '$status $body_bytes_sent "$http_referer"' '"$http_user_agent" $http_x_forwarded_for';

#access_log /usr/local/nginx/logs/ubitechtest.log access; # access log path

# redirect server error pages to the static page /50x.html

#

# error page

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

}
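nginx checks regex locations in the order they appear and uses the first one that matches, so an unescaped "." (any character) combined with a trailing "?" can make a location catch every URI. A sketch that mimics the first-match rule with grep -E; POSIX ERE stands in for nginx's PCRE, which behaves the same for these simple patterns, and first_match is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: mimic nginx's "first matching regex location wins" rule.
# first_match URI PATTERN...  -> prints the 1-based index of the first
# pattern that matches URI, or 0 if none does.
first_match() {
  local uri=$1 i=0 p
  shift
  for p in "$@"; do
    i=$((i + 1))
    # grep -E approximates nginx's PCRE matching for simple patterns.
    if printf '%s\n' "$uri" | grep -Eq -- "$p"; then
      echo "$i"
      return
    fi
  done
  echo 0
}
```

For example, first_match /index.html '.(jsp|jspx|dp)?$' prints 1 (the unescaped pattern claims a plain HTML page), while first_match /index.html '\.(jsp|jspx|dp)$' prints 0.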

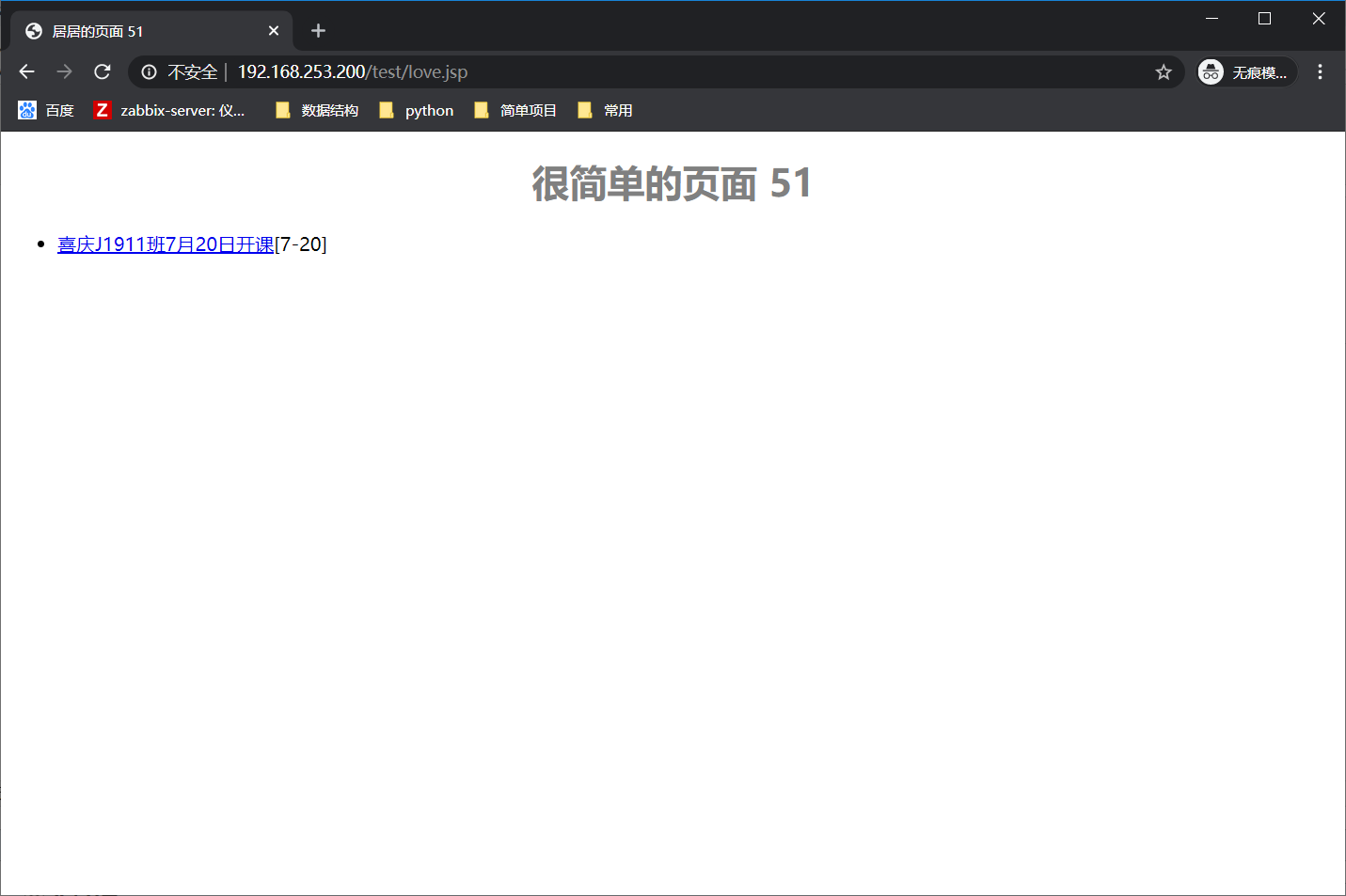

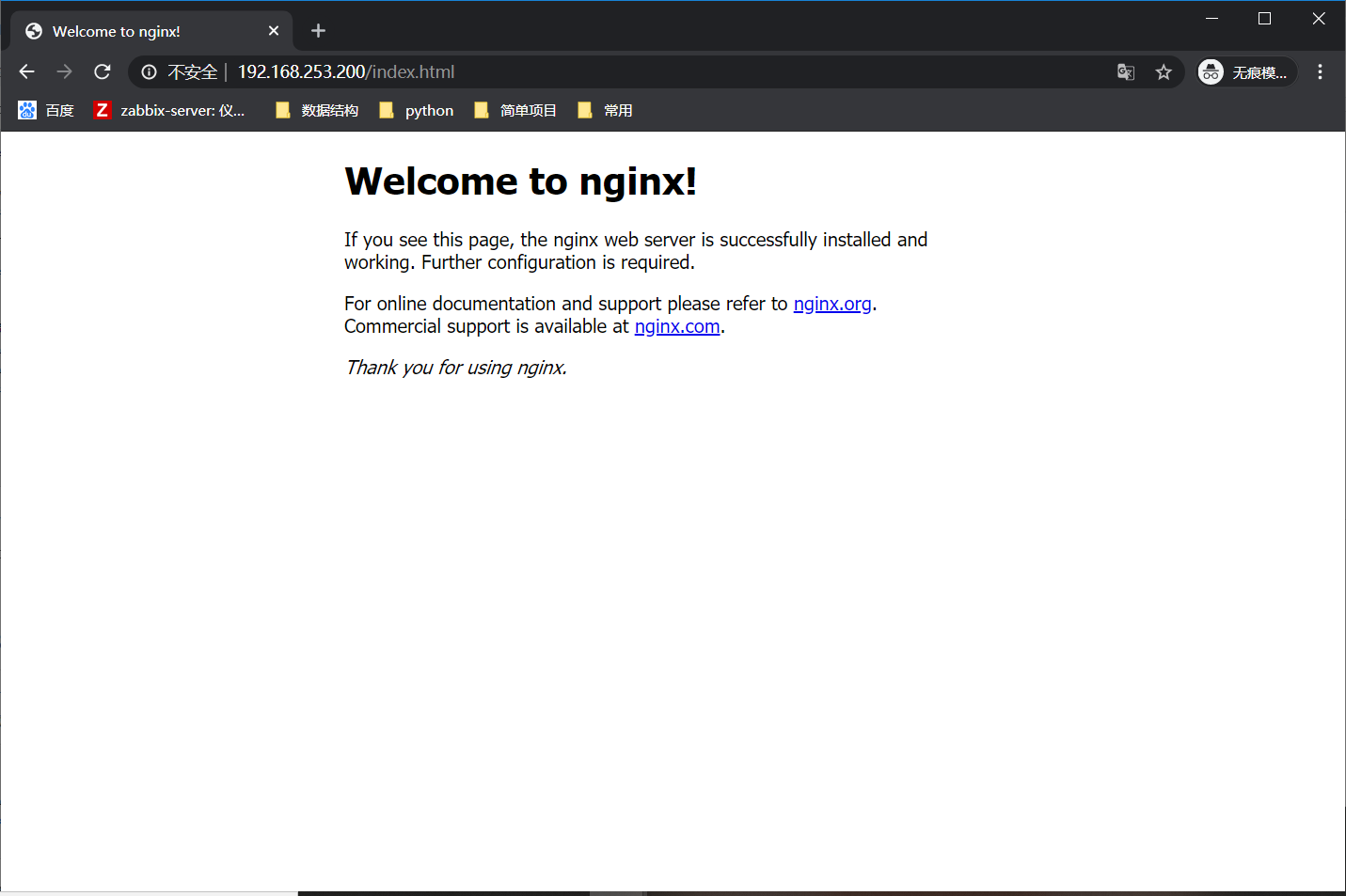

2.4 Test page

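3. tomcat

Installation

tomcat is installed on both nodes with an Ansible playbook (not covered here).

In the webapps directory of tomcat1 and tomcat2, create a test directory and put a JSP file in it: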

[root@nginx1 ~]# cd /usr/local/tomcat/webapps/

[root@nginx1 webapps]# ls

docs examples host-manager manager ROOT

[root@nginx1 webapps]# mkdir test

[root@nginx1 webapps]# cd test

[root@nginx1 test]# vim love.jsp

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">

<title>Juju's page 51</title>

</head>

<body>

<center>

<h1 style="color: gray;">A very simple page 51</h1>

</center>

<div class="bd">

<ul class="infoList">

<li><a href="#">Good news: class J1911 starts on July 20</a><span>[7-20]</span></li>

</ul>

</div>

</body>

</html>
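To watch the load balancing, request the page through the VIP several times and count which tomcat answered. A sketch (tally_backends is hypothetical) that assumes the copy of the page on nginx2 ends its h1 heading with 52 instead of 51; only the nginx1 page is shown above. Run it from a separate client machine: a real server holds the VIP on lo:0 and would answer its own request locally.

```shell
#!/usr/bin/env bash
# Sketch: count which backend served each page, reading HTML on stdin.
# Assumes the <h1> text ends in "51" on nginx1's tomcat and "52" on nginx2's.
tally_backends() {
  grep -o '5[12]</h1>' | sed 's|</h1>||' | sort | uniq -c
}
```

Usage: for i in $(seq 1 6); do curl -s http://192.168.253.200/test/love.jsp; done | tally_backends. With equal weights you should see a roughly even split between 51 and 52.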