This script starts (and stops) Hadoop with a single command on a simple three-machine distributed cluster.

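1: In /usr/local/bin, create the script: touch xhp.sh

2: Make it executable: chmod 777 xhp.sh (755 is already enough for every user to run it; 777 also lets every user modify it)

3: Edit xhp.sh and add the script below.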
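Steps 1 and 2 can be combined into one call. A minimal bash sketch; `create_script` is a hypothetical helper name, and it uses mode 755 instead of 777 on the assumption that only the owner needs to edit the file:

```bash
# Hypothetical helper: create an empty script and make it executable.
# 755 = rwx for the owner, r-x for group and others
# (777 would additionally give write access to everyone).
create_script() {
    local path="$1"
    touch "$path" && chmod 755 "$path"
}
```

On the real target, writing to /usr/local/bin normally needs root, e.g. `sudo sh -c 'touch /usr/local/bin/xhp.sh && chmod 755 /usr/local/bin/xhp.sh'`.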

Abbreviations: ZK: ZooKeeper; ZKFC: ZooKeeper Failover Controller; JN: JournalNode; RM: YARN ResourceManager; DM: YARN NodeManager (the per-node YARN worker daemon).

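A ZKFC must run on the same host as each NameNode, because it monitors that local NameNode's health (heartbeat). DM is best placed on the same hosts as the DataNodes. The number of JN and ZK nodes should be odd (3, 5, 7), since both rely on a majority quorum.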
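Why odd counts: ZooKeeper and the JournalNodes both need a strict majority of their members alive to keep accepting writes. A small bash sketch of the arithmetic (the function name `quorum_info` is illustrative only):

```bash
# Illustrative only: an ensemble of n nodes needs a strict majority
# (n/2 + 1, integer division) to operate, so it survives n - majority failures.
quorum_info() {
    local n="$1"
    local majority=$(( n / 2 + 1 ))
    local tolerated=$(( n - majority ))
    echo "nodes=$n majority=$majority tolerated=$tolerated"
}

for n in 3 4 5; do
    quorum_info "$n"
done
```

This prints `nodes=3 majority=2 tolerated=1`, `nodes=4 majority=3 tolerated=1` and `nodes=5 majority=3 tolerated=2`: a fourth node raises the required majority without raising the number of failures tolerated, which is why 3, 5 and 7 are used.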

| Host | NameNode | DataNode | ZooKeeper | ZKFC | JN | RM | DM |
|---|---|---|---|---|---|---|---|
| hadoop202 | √ | √ | √ | √ | √ | √ | |
| hadoop203 | √ | √ | √ | √ | √ | √ | √ |
| hadoop204 | √ | √ | √ | √ | √ | | |
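The placement table can also be kept as data so that loops read host lists instead of hard-coding `202..204`. A hypothetical sketch: the functions `roles_of` and `hosts_for_role` and the short role names NN and DN (for NameNode and DataNode) are illustrative, not part of the original script:

```bash
# Hypothetical: the placement table above, one line of roles per host.
roles_of() {
    case "$1" in
        hadoop202) echo "NN DN ZK ZKFC JN RM" ;;
        hadoop203) echo "NN DN ZK ZKFC JN RM DM" ;;
        hadoop204) echo "NN DN ZK ZKFC JN" ;;
    esac
}

# Print every host that carries the given role, space-separated.
hosts_for_role() {
    local role="$1" host found=""
    for host in hadoop202 hadoop203 hadoop204; do
        # Pad with spaces so that e.g. "ZK" does not match inside "ZKFC".
        case " $(roles_of "$host") " in
            *" $role "*) found="$found $host" ;;
        esac
    done
    echo "${found# }"
}
```

For example, `for host in $(hosts_for_role ZK); do ssh "$host" ...; done` would replace the numeric loop.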

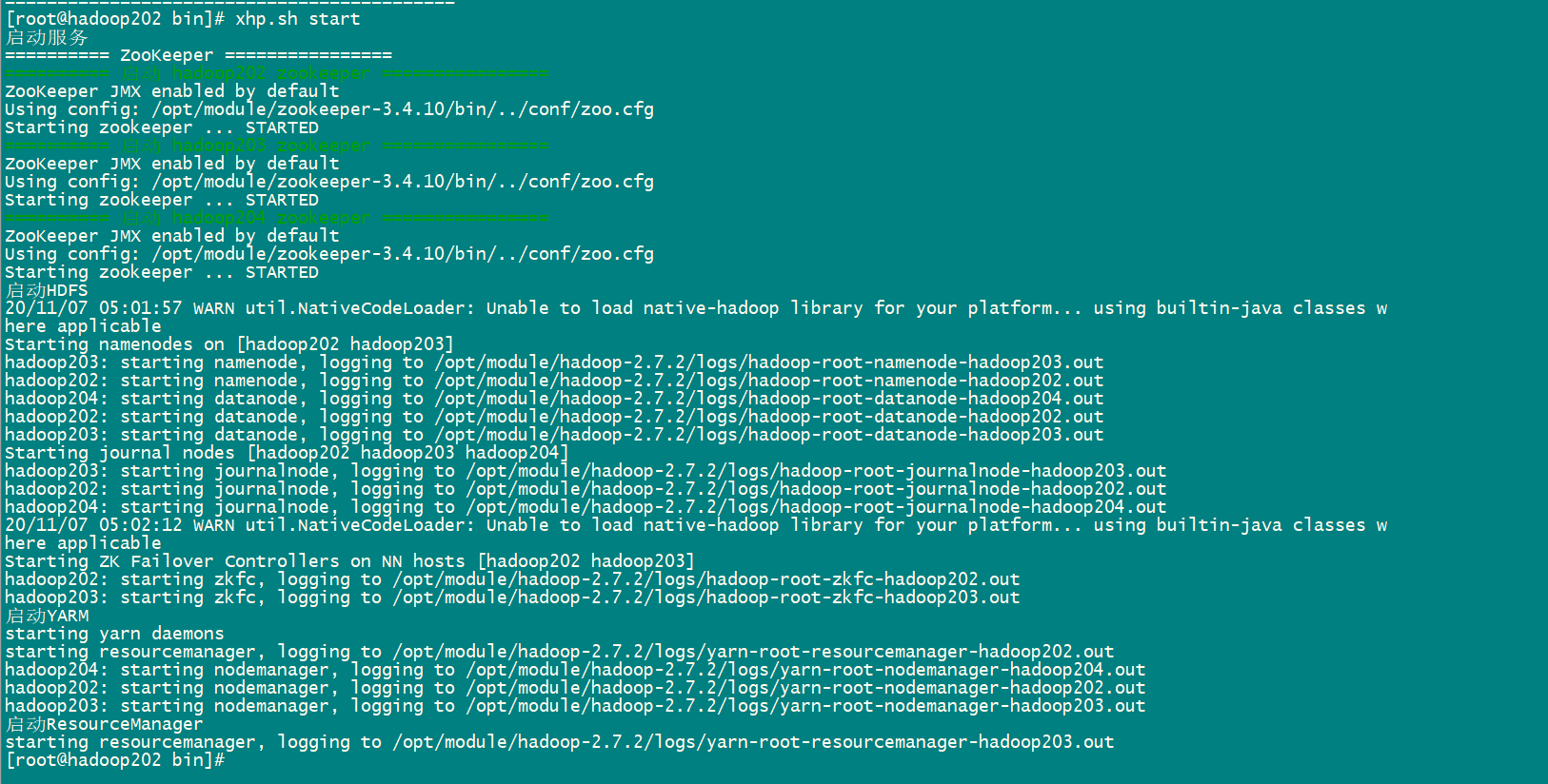

```bash
#!/bin/bash
# Require exactly one argument
if [ $# -ne 1 ]; then
    echo "Invalid argument. Usage: $0 {start|stop}"
    exit 1
fi

# Command given by the user
cmd=$1

# Dispatch the command
function hadoopManager(){
    case $cmd in
    start)
        echo "Starting services"
        remoteExecutionstart
        ;;
    stop)
        echo "Stopping services"
        remoteExecutionstop
        ;;
    *)
        echo "Invalid argument. Usage: $0 {start|stop}"
        exit 1
        ;;
    esac
}

# Start Hadoop
function remoteExecutionstart(){
    # ZooKeeper on every node
    echo "========== ZooKeeper =========="
    for (( i=202 ; i<=204 ; i++ )) ; do
        tput setaf 2    # green header
        echo "========== Starting zookeeper on hadoop${i} =========="
        tput sgr0       # reset terminal colours
        ssh hadoop${i} "source /etc/profile ; /opt/module/zookeeper-3.4.10/bin/zkServer.sh start"
    done

    # JournalNode on every node
    echo "========== JournalNode =========="
    for (( i=202 ; i<=204 ; i++ )) ; do
        tput setaf 2
        echo "========== Starting journalnode on hadoop${i} =========="
        tput sgr0
        ssh hadoop${i} "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/hadoop-daemon.sh start journalnode"
    done

    echo "Starting HDFS"
    ssh hadoop202 "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/start-dfs.sh"
    echo "Starting YARN"
    ssh hadoop202 "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/start-yarn.sh"
    # start-yarn.sh only starts the ResourceManager on the local host (hadoop202),
    # so the second ResourceManager is started explicitly on hadoop203
    echo "Starting ResourceManager"
    ssh hadoop203 "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/yarn-daemon.sh start resourcemanager"
}

# Stop Hadoop (reverse order of start)
function remoteExecutionstop(){
    echo "Stopping ResourceManager"
    ssh hadoop203 "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/yarn-daemon.sh stop resourcemanager"
    echo "Stopping YARN"
    ssh hadoop202 "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/stop-yarn.sh"
    echo "Stopping HDFS"
    ssh hadoop202 "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/stop-dfs.sh"

    # JournalNode
    echo "========== JournalNode =========="
    for (( i=202 ; i<=204 ; i++ )) ; do
        tput setaf 2
        echo "========== Stopping journalnode on hadoop${i} =========="
        tput sgr0
        ssh hadoop${i} "source /etc/profile ; /opt/module/hadoop-2.7.2/sbin/hadoop-daemon.sh stop journalnode"
    done

    # ZooKeeper
    echo "========== ZooKeeper =========="
    for (( i=202 ; i<=204 ; i++ )) ; do
        tput setaf 2
        echo "========== Stopping zookeeper on hadoop${i} =========="
        tput sgr0
        ssh hadoop${i} "source /etc/profile ; /opt/module/zookeeper-3.4.10/bin/zkServer.sh stop"
    done
}

# Entry point
hadoopManager
```
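Every remote step in the script repeats the pattern `ssh <host> "source /etc/profile ; <command>"`. One possible refactor, sketched below, moves that pattern into a helper and adds a preview mode. The helper `run_remote`, the `DRY_RUN` switch and `start_zookeeper` are hypothetical additions, not part of the original xhp.sh:

```bash
# Hypothetical helper (not in the original xhp.sh): run one command on a host
# over ssh after loading /etc/profile, or only print it when DRY_RUN=1.
run_remote() {
    local host="$1"
    shift
    local remote_cmd="source /etc/profile ; $*"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "[dry-run] ssh $host \"$remote_cmd\""
    else
        ssh "$host" "$remote_cmd"
    fi
}

# Example: the ZooKeeper start loop rewritten with the helper
start_zookeeper() {
    local i
    for i in 202 203 204; do
        run_remote "hadoop${i}" /opt/module/zookeeper-3.4.10/bin/zkServer.sh start
    done
}
```

Running `DRY_RUN=1 start_zookeeper` prints the three ssh commands without contacting any host, which is a cheap way to check the ordering before touching the cluster.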

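Note: the JournalNode start/stop loops in the script can be omitted, and no separate ZKFC start/stop step is needed either, because according to the Hadoop HA documentation: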

the start-dfs.sh script will now automatically start a ZKFC daemon on any machine that runs a NameNode. When the ZKFCs start, they will automatically select one of the NameNodes to become active.

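JournalNodes are likewise started automatically by start-dfs.sh (and stop-dfs.sh stops both the JournalNodes and the ZKFCs).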
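Whether start-dfs.sh actually takes over these daemons depends on two configuration keys, which can be read with `hdfs getconf -confKey`. The sketch below paraphrases the Hadoop 2.x start-dfs.sh logic (JournalNodes are started when `dfs.namenode.shared.edits.dir` is a `qjournal://` URI, ZKFCs when `dfs.ha.automatic-failover.enabled` is `true`). The function name `check_auto_start` is hypothetical, and it assumes `hdfs` is on the PATH, e.g. after `source /etc/profile`:

```bash
# Hypothetical check, paraphrasing the conditions used by start-dfs.sh in Hadoop 2.x.
check_auto_start() {
    local edits auto
    edits=$(hdfs getconf -confKey dfs.namenode.shared.edits.dir 2>/dev/null)
    auto=$(hdfs getconf -confKey dfs.ha.automatic-failover.enabled 2>/dev/null)

    # JournalNodes: only for a Quorum Journal Manager shared edits dir
    case "$edits" in
        qjournal://*) echo "journalnode: started by start-dfs.sh" ;;
        *)            echo "journalnode: start manually" ;;
    esac

    # ZKFCs: only when automatic failover is enabled (case-insensitive "true")
    if [ "$(echo "$auto" | tr 'A-Z' 'a-z')" = "true" ]; then
        echo "zkfc: started by start-dfs.sh"
    else
        echo "zkfc: start manually"
    fi
}
```

If both lines report "started by start-dfs.sh", the JournalNode loops in xhp.sh are redundant on that cluster.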

Run the script (xhp.sh start) and check the result:

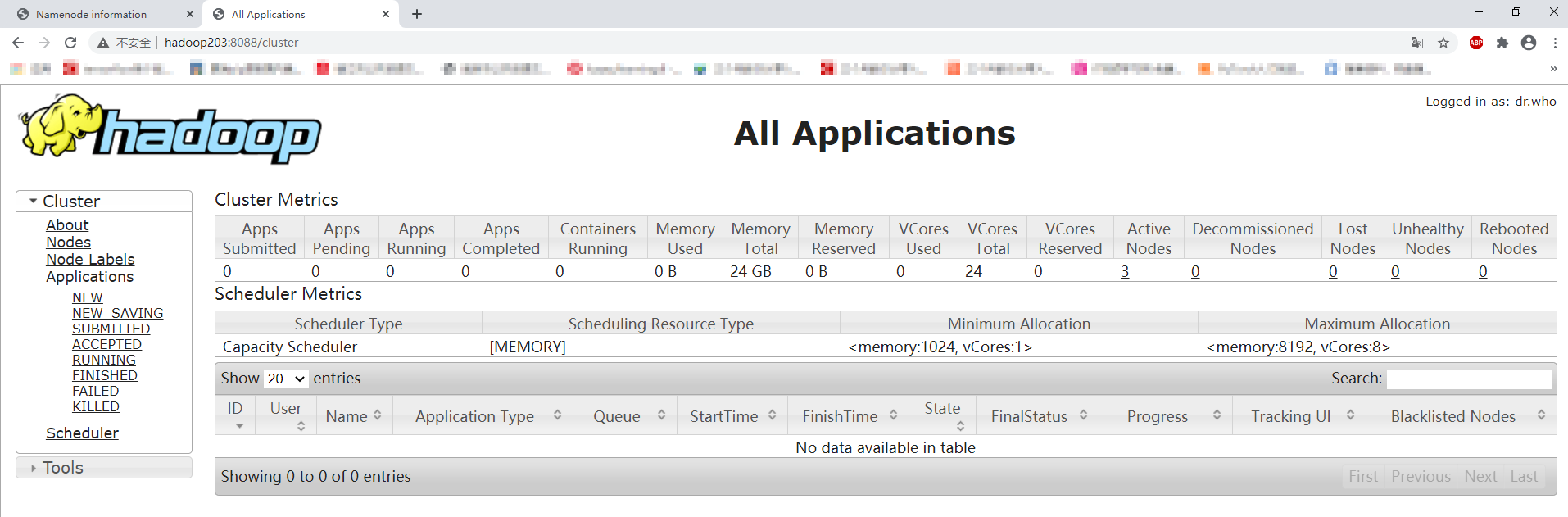

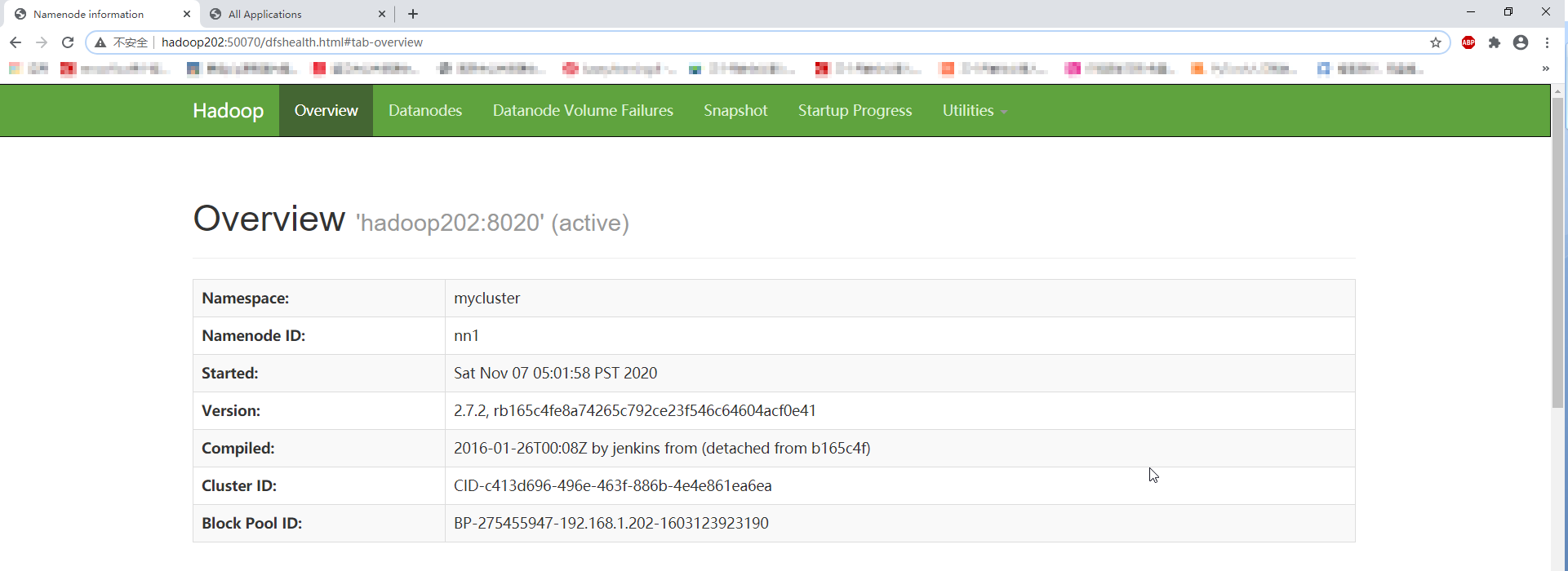
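The same check can be done from the shell by running `jps` on every node. A sketch with a hypothetical `show_daemons` helper; `SSH_CMD` is an assumed override hook (defaulting to `ssh`) so the loop can be tried without a cluster. Compare the output with the placement table: ZooKeeper appears as `QuorumPeerMain` and the ZKFC as `DFSZKFailoverController`, alongside `NameNode`, `DataNode`, `JournalNode`, `ResourceManager` and `NodeManager`.

```bash
# Hypothetical helper: list the Java daemons running on each given host.
# SSH_CMD defaults to ssh; it can point to a stub for local testing.
show_daemons() {
    local host
    for host in "$@"; do
        echo "========== $host =========="
        ${SSH_CMD:-ssh} "$host" "source /etc/profile ; jps"
    done
}
```

Usage: `show_daemons hadoop202 hadoop203 hadoop204`.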

Check the web UIs:

http://hadoop202:50070/ (HDFS NameNode)

http://hadoop203:8088/ (YARN ResourceManager)
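The two UIs can also be probed without a browser. A sketch with a hypothetical `check_ui` helper that prints the HTTP status code curl receives for each URL. A `200` means the UI answered, a `3xx` code means it answered with a redirect, and `000` means curl could not connect within the assumed 5-second timeout:

```bash
# Hypothetical helper: print "<http status> <url>" for each URL.
# -s silences progress, -o /dev/null discards the body,
# -w '%{http_code}' prints only the status code.
check_ui() {
    local url code
    for url in "$@"; do
        code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
        echo "$code $url"
    done
}
```

Usage: `check_ui http://hadoop202:50070/ http://hadoop203:8088/`.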