1. Install MySQL

As the root user, in root's home directory, run the following command to install MySQL:

yum install mysql-server mysql mysql-devel

2. Start MySQL

service mysqld start

3. Set the MySQL root password

mysqladmin -u root password 'root'

4. Enable MySQL to start at boot

chkconfig mysqld on

5. Extract Hive

Use xftp to upload apache-hive-1.2.1-bin.tar.gz to the soft directory, then extract it there.
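The extraction can be done with tar. Below is a minimal sketch, assuming the archive sits in a directory named soft under the current user's home directory (the full path is not given here). `extract_hive` is a hypothetical helper name.

```shell
# Hypothetical helper: extract a Hive tarball into a target directory.
# Usage: extract_hive <archive.tar.gz> <target-dir>
extract_hive() {
  local archive="$1" target="$2"
  if [ ! -f "$archive" ]; then
    echo "archive not found: $archive" >&2
    return 1
  fi
  mkdir -p "$target"
  # -x extract, -z gunzip, -f archive file, -C switch to the target dir first
  tar -xzf "$archive" -C "$target"
}

# Example (paths are assumptions; adjust to your layout):
# extract_hive ~/soft/apache-hive-1.2.1-bin.tar.gz ~/soft
```

After extraction, the conf, lib and bin directories used in the following steps are found under apache-hive-1.2.1-bin.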

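6. Create hive-env.sh from the template file and edit it

cp hive-env.sh.template hive-env.sh (mind the path: the template is in Hive's conf directory)

Edit hive-env.sh, adjusting the paths below to match your own Hadoop and Hive installation directories: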

export HADOOP_HEAPSIZE=1024

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/home/hadoop/hadoop-2.4.1

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/home/hadoop/apache-hive-1.0.0-bin/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

export HIVE_AUX_JARS_PATH=/home/hadoop/apache-hive-1.0.0-bin/lib
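The directories named in hive-env.sh have to exist on the machine, so a quick check can catch a typo or a version mismatch before Hive is started. This is a sketch only. `check_dirs` is a hypothetical helper, and the paths in the usage comment are simply the ones from the settings above.

```shell
# Hypothetical helper: print every argument that is not an existing directory.
# Returns 0 if all directories exist, 1 otherwise.
check_dirs() {
  local missing=0 dir
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "missing directory: $dir"
      missing=1
    fi
  done
  return "$missing"
}

# Example:
# check_dirs /home/hadoop/hadoop-2.4.1 \
#            /home/hadoop/apache-hive-1.0.0-bin/conf \
#            /home/hadoop/apache-hive-1.0.0-bin/lib
```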

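7. Configure hive-site.xml

hive-site.xml does not exist in the conf directory by default, so you need to create it yourself and then paste in the content below, replacing whatever the file contains.

One way to create it is to copy the template: cp hive-default.xml.template hive-site.xml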

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?><!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hive</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.hwi.listen.port</name>

<value>9999</value>

<description>This is the port the Hive Web Interface will listen on</description>

</property>

<property>

<name>datanucleus.autoCreateSchema</name>

<value>true</value>

<description>creates necessary schema on a startup if one doesn't exist. set this to false, after creating it once</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/home/meng/appdata/hivetmp/iotmp</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/home/meng/appdata/hivetmp/iotmp</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/home/meng/appdata/hivetmp/iotmp</value>

<description>Location of Hive run time structured log file</description>

</property>

</configuration>

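Notes on the configuration: in jdbc:mysql://localhost:3306/hive, hive is the name of the database created in MySQL. In /home/meng/appdata/hivetmp/iotmp, meng is specific to the author's virtual machine (given as the VM's host name; under /home this is normally the Linux user name), so replace it with your own.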
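The three local paths configured above (hive.exec.local.scratchdir, hive.downloaded.resources.dir and hive.querylog.location) all point to the same directory. Creating it in advance and confirming that the user who runs Hive can write to it is a harmless precaution. The sketch below assumes nothing beyond that. `prepare_scratch_dir` is a hypothetical helper name.

```shell
# Hypothetical helper: create a local scratch directory (with parents)
# and verify that the current user can write to it.
prepare_scratch_dir() {
  local dir="$1"
  mkdir -p "$dir" || return 1
  if [ ! -w "$dir" ]; then
    echo "not writable: $dir" >&2
    return 1
  fi
}

# Example (path taken from the configuration above; replace meng as needed):
# prepare_scratch_dir /home/meng/appdata/hivetmp/iotmp
```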

8. Copy mysql-connector-java-5.0.8-bin.jar into Hive's lib directory

9. Create the hive database in MySQL to store Hive's metadata.

First log in to MySQL with mysql -uroot -proot, then create the database: create database hive;
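The same step can also be run without an interactive session, which is handy when scripting the setup. A minimal sketch: `create_db_sql` is a hypothetical helper that only prints the statement, and the credentials in the usage comment are the ones set in step 3.

```shell
# Hypothetical helper: print the SQL that creates the metastore database.
# IF NOT EXISTS makes the statement safe to run more than once.
create_db_sql() {
  local db="$1"
  printf 'CREATE DATABASE IF NOT EXISTS `%s`;\n' "$db"
}

# Example: feed the statement to the mysql client on standard input.
# create_db_sql hive | mysql -uroot -proot
```

The database name must match the last path segment of javax.jdo.option.ConnectionURL in hive-site.xml.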

10. Copy jline-2.12.jar into the corresponding Hadoop directory to replace jline-0.9.94.jar; otherwise Hive reports an error at startup

cp hive/lib/jline-2.12.jar hadoop-2.6.0/share/hadoop/yarn/lib/
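Note that cp on its own adds the new jar but leaves the old one in place. A sketch of a fuller replacement follows, assuming the old jar is named jline-0.9.94.jar as stated in this step. `replace_jline` is a hypothetical helper, and it renames the old jar to .bak rather than deleting it.

```shell
# Hypothetical helper: put Hive's jline jar into Hadoop's YARN lib directory
# and move the older jline-0.9.94.jar out of the way if it is present.
replace_jline() {
  local hive_lib="$1" yarn_lib="$2"
  if [ ! -f "$hive_lib/jline-2.12.jar" ]; then
    echo "jline-2.12.jar not found in $hive_lib" >&2
    return 1
  fi
  if [ -f "$yarn_lib/jline-0.9.94.jar" ]; then
    # Keep a backup; the .bak suffix stops it matching *.jar.
    mv "$yarn_lib/jline-0.9.94.jar" "$yarn_lib/jline-0.9.94.jar.bak"
  fi
  cp "$hive_lib/jline-2.12.jar" "$yarn_lib/"
}

# Example (relative paths as in the command above):
# replace_jline hive/lib hadoop-2.6.0/share/hadoop/yarn/lib
```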

11. Start Hive with the hive command

bin/hive

12. Create a table in Hive

create table test_user(id int, name string);

show tables;

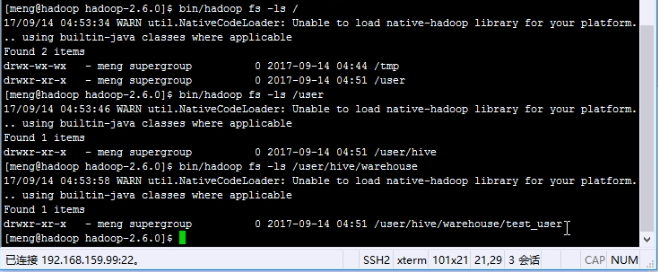

14. From the Hadoop root directory, run bin/hadoop fs -ls /user/hive/warehouse to view the result

15. View the corresponding Hive metadata in MySQL

(1) use hive;

(2) show tables;

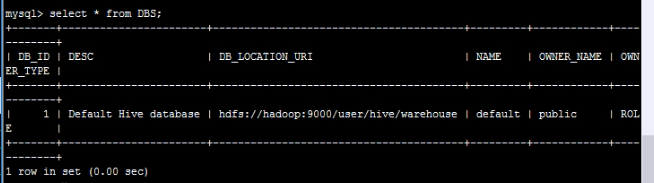

(3) select * from DBS;

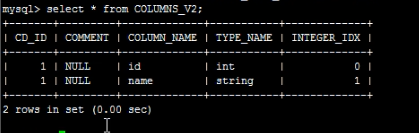

(4) select * from COLUMNS_V2;

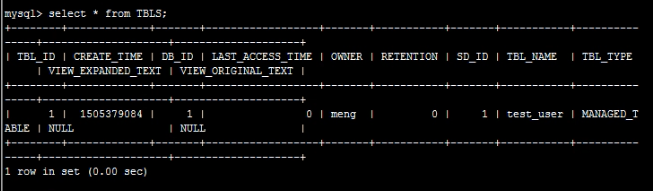

(5) select * from TBLS;
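The three tables queried above can also be joined to show one Hive table together with its database and columns. The sketch below assumes the Hive 1.x metastore layout, in which TBLS links to DBS through DB_ID and to COLUMNS_V2 through the SDS table (SD_ID, then CD_ID). Check the column names with describe in your own metastore before relying on it. `table_columns_sql` is a hypothetical helper that only prints the query.

```shell
# Hypothetical helper: print a query listing the columns of one Hive table.
# The table name is interpolated directly, so pass trusted input only.
table_columns_sql() {
  local table="$1"
  cat <<SQL
SELECT d.NAME AS db_name, t.TBL_NAME, c.COLUMN_NAME, c.TYPE_NAME
FROM TBLS t
JOIN DBS d ON t.DB_ID = d.DB_ID
JOIN SDS s ON t.SD_ID = s.SD_ID
JOIN COLUMNS_V2 c ON s.CD_ID = c.CD_ID
WHERE t.TBL_NAME = '${table}'
ORDER BY c.INTEGER_IDX;
SQL
}

# Example: run the query against the hive database created in step 9.
# table_columns_sql test_user | mysql -uroot -proot hive
```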