1. Installing Hive

- 1.1 Download and extract Hive

wget https://mirrors.aliyun.com/apache/hive/hive-2.3.7/apache-hive-2.3.7-bin.tar.gz

tar -zxvf apache-hive-2.3.7-bin.tar.gz -C /opt/module/

mv /opt/module/apache-hive-2.3.7-bin /opt/module/hive-2.3.7

- 1.2 Add Hive to the environment variables (append the two export lines below to /etc/profile)

vim /etc/profile

export HIVE_HOME=/opt/module/hive-2.3.7

export PATH=$PATH:$HIVE_HOME/bin

- 1.3 Apply the configuration

source /etc/profile
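A quick sanity check after sourcing the profile can catch a mistyped path early. The following is a minimal sketch, not part of the original steps; `check_hive_home` is a hypothetical helper name, and it only tests that the directory contains an executable `bin/hive`.

```shell
# Hypothetical helper: return 0 if the given directory looks like a Hive
# installation (it contains an executable bin/hive), non-zero otherwise.
check_hive_home() {
  dir="$1"
  [ -n "$dir" ] && [ -x "$dir/bin/hive" ]
}

# Usage after `source /etc/profile`:
#   check_hive_home "$HIVE_HOME" && echo "HIVE_HOME looks valid: $HIVE_HOME"
```

If the check fails, confirm that the directory was renamed to /opt/module/hive-2.3.7 in step 1.1 and that the export lines were saved.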

2. Configuring Hive

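- 2.1 Edit the Hive configuration file. By default Hive stores its metadata in an embedded Derby database; here we use MySQL instead. hive-site.xml is large, so it may be easier to download it to a Windows machine, edit it there, and upload it back.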

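First copy the default configuration template, which already contains every Hive setting with its default value.

Change into the conf directory ($HIVE_HOME/conf):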

cp hive-default.xml.template hive-site.xml

Edit hive-site.xml so that the metastore uses MySQL. Find the following properties in hive-site.xml and change them to:

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.130.77:3306/hive?useSSL=false&amp;useUnicode=true&amp;serverTimezone=UTC&amp;characterEncoding=UTF-8&amp;allowPublicKeyRetrieval=true</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

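The driver is the MySQL driver com.mysql.jdbc.Driver, the URL points at the MySQL database named hive (the name is your choice), and the username and password are those of your own MySQL account. With Connector/J 8.x, as used in step 2.2, com.mysql.jdbc.Driver still works but is deprecated in favor of com.mysql.cj.jdbc.Driver.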
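Because hive-site.xml is XML, a literal `&` between JDBC URL parameters has to be written as `&amp;` inside `<value>`, otherwise the file will not parse. As a small sketch, the hypothetical helper `xml_escape` below converts a raw URL into a form that can be pasted into the file; the host and database in the usage comment are placeholders, not values from this setup.

```shell
# Hypothetical helper: escape a string for use as XML element text.
# '&' is replaced first so the '&' produced by '&lt;' / '&gt;' is not escaped twice.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Usage with a placeholder host and database name:
#   xml_escape 'jdbc:mysql://db.example.com:3306/hive?useSSL=false&useUnicode=true'
```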

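Point Hive's working directories at locations of your own choosing: search for every value that begins with ${system and replace it with your own directory. Here I use directories under /opt/module/hive-2.3.7/hive: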

<property>

<name>hive.exec.local.scratchdir</name>

<value>/opt/module/hive-2.3.7/hive</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/module/hive-2.3.7/hive/downloads</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.querylog.location</name>

<value>/opt/module/hive-2.3.7/hive/querylog</value>

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/opt/module/hive-2.3.7/hive/server2_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>
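The search-and-replace step above can be checked from the shell. This is a sketch under assumptions: the two function names are hypothetical, the placeholders are assumed to appear literally as `${system` in the file, and the directory layout mirrors the four properties listed above.

```shell
# Hypothetical helper: count the lines in a config file that still contain
# a ${system...} placeholder. Prints 0 when none remain.
count_system_placeholders() {
  grep -c -F '${system' "$1" || true
}

# Hypothetical helper: create the local directories referenced by the
# scratch, downloads, query log, and operation log properties.
make_hive_local_dirs() {
  base="$1"
  mkdir -p "$base" "$base/downloads" "$base/querylog" "$base/server2_logs"
}

# Usage:
#   count_system_placeholders /opt/module/hive-2.3.7/conf/hive-site.xml
#   make_hive_local_dirs /opt/module/hive-2.3.7/hive
```

A count of 0 suggests that no `${system` values were missed during editing.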

Disable the HiveServer2 permission check (user impersonation) by setting hive.server2.enable.doAs to false:

<property>

<name>hive.server2.enable.doAs</name>

<value>false</value>

<description>

Setting this property to true will have HiveServer2 execute

Hive operations as the user making the calls to it.

</description>

</property>

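- 2.2 Since the metadata now lives in MySQL, the MySQL JDBC driver jar must be placed in Hive's lib directory. Make sure the driver version matches your MySQL version.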

You need to download the JDBC driver (MySQL Connector/J) yourself.

mv mysql-connector-java-8.0.18.jar /opt/module/hive-2.3.7/lib/

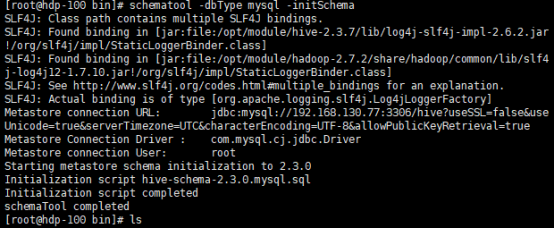

- 2.3 Initialize the Hive metadata (table schema) in MySQL

cd /opt/module/hive-2.3.7/bin

schematool -dbType mysql -initSchema

Output like the following indicates success:

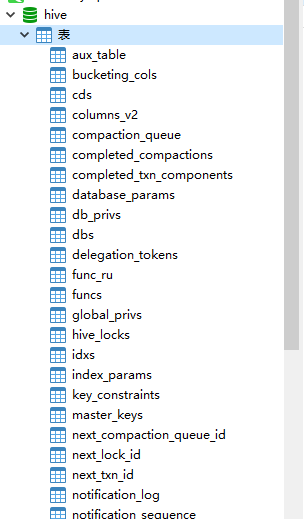

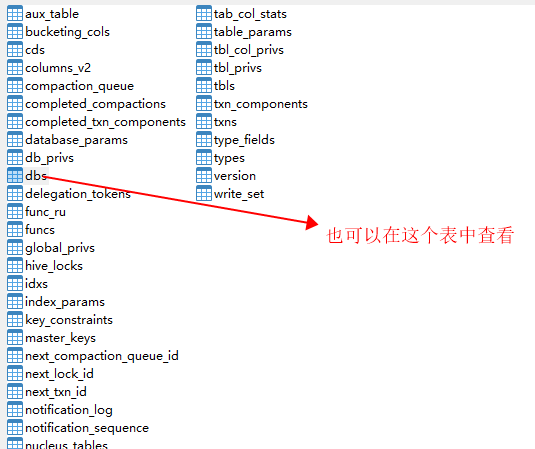

You can also inspect the hive database in MySQL to confirm that all the tables have been created.
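Besides looking at the tables in MySQL, schematool itself can report the schema version it finds in the metastore through its `-info` option. The wrapper below is a minimal sketch; `check_metastore_schema` is a hypothetical name, and it assumes `$HIVE_HOME/bin` is on PATH as set up in step 1.2.

```shell
# Hypothetical helper: print metastore connection and schema version details
# via schematool, or fail with a hint if schematool is not on PATH.
check_metastore_schema() {
  if command -v schematool >/dev/null 2>&1; then
    schematool -dbType mysql -info
  else
    echo "schematool not found on PATH; is \$HIVE_HOME/bin exported?" >&2
    return 1
  fi
}

# Usage:
#   check_metastore_schema
```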

- 2.4 Use Hive (Hadoop must already be running)

cd /opt/module/hive-2.3.7/bin

hive

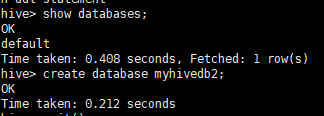

- 2.5 Create a database

create database myhivedb2;

- 2.6 Check whether the corresponding directory was created in HDFS

hdfs dfs -ls -R /user/hive/

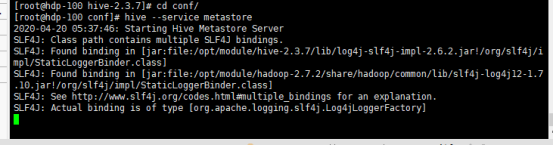

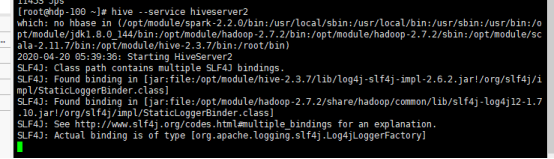

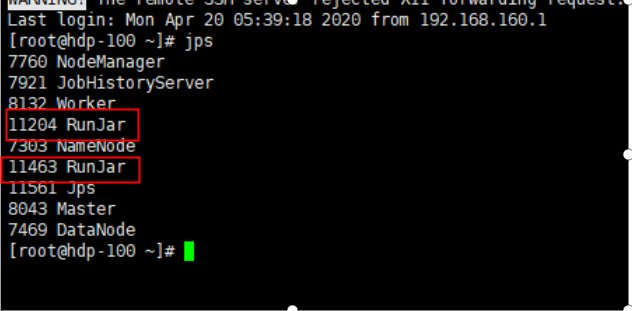
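To know which directory to look for: with the default warehouse location (/user/hive/warehouse), Hive stores a database in a directory named after the lowercased database name plus a `.db` suffix. The hypothetical helper `hive_db_path` below sketches that naming rule; it assumes `hive.metastore.warehouse.dir` was left at its default unless a second argument is given.

```shell
# Hypothetical helper: print the HDFS directory expected for a Hive database.
# $1 = database name, $2 = warehouse dir (defaults to /user/hive/warehouse).
hive_db_path() {
  db=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  printf '%s/%s.db\n' "${2:-/user/hive/warehouse}" "$db"
}

# Usage:
#   hdfs dfs -ls -d "$(hive_db_path myhivedb2)"
```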

- 2.7 Before using Hive, the metastore and HiveServer services need to be started. Start them with the following commands:

hive --service metastore &

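hive --service hiveserver2 &

(On CentOS 6.8-6.9, run hive --service hiveserver instead. Note that the older hiveserver service was removed in Hive 1.0, so this variant only applies to earlier Hive releases.)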
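Services started with a bare `&` stop when the terminal closes and write their logs to the console. A hedged alternative is sketched below: `start_hive_service` and `hs2_jdbc_url` are hypothetical helper names, the log location is an assumption, and the port 10000 is HiveServer2's default (the metastore's default is 9083).

```shell
# Hypothetical helper: start a Hive service detached from the terminal,
# logging to a file, and print the PID of the background process.
# $1 = service name (metastore or hiveserver2), $2 = log dir (default /tmp).
start_hive_service() {
  service="$1"
  log_dir="${2:-/tmp}"
  nohup hive --service "$service" > "$log_dir/hive-$service.log" 2>&1 &
  echo "$!"
}

# Hypothetical helper: build the JDBC URL that beeline uses for HiveServer2.
# $1 = host, $2 = port, $3 = database; defaults are localhost, 10000, default.
hs2_jdbc_url() {
  printf 'jdbc:hive2://%s:%s/%s\n' "${1:-localhost}" "${2:-10000}" "${3:-default}"
}

# Usage:
#   start_hive_service metastore
#   start_hive_service hiveserver2
#   beeline -u "$(hs2_jdbc_url localhost 10000 myhivedb2)"
```

HiveServer2 can take some time to open its port after starting, so a beeline connection attempted immediately may be refused.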