Installing ELK on Ubuntu 18.04

Prerequisites

Elasticsearch and Logstash require a Java 8 runtime, so install JDK 1.8 on the machine first.

My blog has a JDK installation tutorial for CentOS, for reference only: https://www.cnblogs.com/helios-fz/p/12623038.html.

Note: ES does not currently support Java 9.
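To confirm which Java the machine will actually use before continuing, here is a minimal bash sketch. The function names java_major_version and check_java8 are hypothetical helpers, not part of any Elastic tooling; they assume `java -version` prints a quoted version string such as "1.8.0_252" (the 1.x scheme used up to Java 8) or "11.0.7" (the scheme used from Java 9 on).

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check that the `java` on PATH is Java 8.

# Print the major version from a Java version string:
#   "1.8.0_252" -> 8   (legacy 1.x numbering, Java 8 and earlier)
#   "11.0.7"    -> 11  (numbering used from Java 9 onward)
java_major_version() {
  local ver="$1" major
  major="${ver%%.*}"                              # text before the first dot
  if [ "$major" = "1" ]; then
    major="$(printf '%s' "$ver" | cut -d. -f2)"   # legacy: take second component
  fi
  printf '%s\n' "${major%%[!0-9]*}"               # drop suffixes such as "-ea"
}

# Report whether the default java is Java 8; non-zero status otherwise.
check_java8() {
  local ver major
  if ! command -v java >/dev/null 2>&1; then
    echo "java not found on PATH" >&2
    return 1
  fi
  # `java -version` writes e.g.  openjdk version "1.8.0_252"  to stderr.
  ver="$(java -version 2>&1 | awk -F'"' '/version/ { print $2; exit }')"
  major="$(java_major_version "$ver")"
  if [ "$major" = "8" ]; then
    echo "OK: Java $ver"
  else
    echo "found Java $ver, but this guide expects Java 8" >&2
    return 1
  fi
}
```

After sourcing the script, run `check_java8`: it prints an OK line for Java 8 and returns a non-zero status for anything else, including Java 9.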

Installing and configuring Elasticsearch

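Elastic Stack components are not available in Ubuntu's default package repositories. However, they can be installed with APT after you add Elastic's package source list.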

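All Elastic Stack packages are signed with the Elasticsearch signing key to protect your system from package spoofing. Packages that have been authenticated with this key are considered trusted by the package manager.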

First, run the following command to import the Elasticsearch public GPG key into APT:

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Next, add the Elastic source list to the sources.list.d directory, where APT looks for new sources:

echo "deb https://artifacts.elastic.co/packages/6.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-6.x.list
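Because `tee -a` appends on every run, executing the command above twice leaves a duplicate deb line in elastic-6.x.list, and apt update then warns that the target is configured multiple times. A hedged sketch of an idempotent alternative, using a hypothetical helper append_line_once (it writes directly to the file, so run it as root when the target is under /etc):

```bash
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE only if FILE does not already
# contain exactly that line (whole-line, fixed-string match).
append_line_once() {
  local line="$1" file="$2"
  if [ -f "$file" ] && grep -qxF -- "$line" "$file"; then
    return 0                       # already present: nothing to do
  fi
  printf '%s\n' "$line" >> "$file"
}
```

For example, in a root shell: `append_line_once "deb https://artifacts.elastic.co/packages/6.x/apt stable main" /etc/apt/sources.list.d/elastic-6.x.list`. Running it a second time leaves the file unchanged.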

Next, update your package lists so that APT reads the new Elastic source:

sudo apt update

Then install Elasticsearch with the following command:

sudo apt install elasticsearch

Default installation path: /usr/share/elasticsearch

Default configuration path: /etc/elasticsearch

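After installing Elasticsearch, edit the main configuration file elasticsearch.yml under /etc/elasticsearch. Note: Elasticsearch's configuration file uses YAML, which means indentation matters! Make sure you do not add any extra spaces when editing this file.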

A sample configuration with explanatory comments:

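# Cluster name
cluster.name: my-application
# Node name
node.name: node-1
# Make this node eligible to be elected master
node.master: true
# Add a custom attribute to the node
node.attr.rack: r1
# Data directory (the official docs recommend customizing it)
path.data: /var/lib/elasticsearch
# Log directory (the official docs recommend customizing it)
path.logs: /var/log/elasticsearch
# Lock the process memory at startup (defaults to false).
# ES performance degrades badly once the JVM starts swapping. To keep it out of swap, set the minimum and
# maximum heap to the same value (-Xms and -Xmx in /etc/elasticsearch/jvm.options in ES 6.x) and make sure
# the machine has enough memory for ES.
# The ES process must also be allowed to lock memory; on Linux this can be done with ulimit -l unlimited.
bootstrap.memory_lock: false
# Disable the system call filter (seccomp) bootstrap check, needed on systems that do not support it
bootstrap.system_call_filter: false
# Bind this ES instance to a specific IP address
network.host: localhost
# HTTP port for this ES instance (default: 9200)
http.port: 9200
# TCP port used for communication between cluster nodes (default: 9300)
transport.tcp.port: 8081
# Cross-origin (CORS) settings
http.cors.enabled: true
http.cors.allow-origin: "*"
http.cors.allow-credentials: true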

Start Elasticsearch:

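# Change to the binaries directory
cd /usr/share/elasticsearch/bin/
# Start ES in the background as a daemon (run as a non-root user: Elasticsearch refuses to start as root)
./elasticsearch -d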
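`./elasticsearch -d` returns immediately, but the node may need some time before it answers on port 9200. A minimal sketch that waits for the port, using a hypothetical helper wait_for_port; it assumes bash was built with /dev/tcp support (as on Ubuntu) and that the coreutils `timeout` command is available, so neither curl nor nc is needed for the wait itself.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until HOST:PORT accepts TCP connections.
# Returns 0 as soon as a connection succeeds; returns 1 after LIMIT failed
# attempts spaced one second apart (default 60).
wait_for_port() {
  local host="$1" port="$2" limit="${3:-60}" waited=0
  while [ "$waited" -lt "$limit" ]; do
    # Each attempt opens /dev/tcp/HOST/PORT in a child bash, capped at 1 second.
    if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}
```

For example, `wait_for_port localhost 9200 60 && curl -s http://localhost:9200` should print the node's JSON banner, including its version number, once Elasticsearch is ready.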

Elasticsearch is now up and running. Next, install Kibana, the next component of the Elastic Stack.

Q&A

Elasticsearch fails to start with the error: X-Pack is not supported and Machine Learning is not available

Add the following setting to elasticsearch.yml:

xpack.ml.enabled: false

Setting xpack.ml.enabled to false disables X-Pack machine learning.
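If you prefer to script this change instead of editing the file by hand, here is a minimal awk-based sketch. set_yaml_key is a hypothetical helper: it only handles unindented (top-level) keys such as xpack.ml.enabled, replaces an existing `key:` line or appends one, and writes the file back in place so its ownership and permissions are kept. Editing /etc/elasticsearch/elasticsearch.yml requires root, and Elasticsearch must be restarted to pick up the change.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set a top-level YAML key to VALUE in FILE.
# An existing line starting with "KEY:" is replaced; otherwise one is appended.
set_yaml_key() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  awk -v k="$key" -v v="$value" '
    index($0, k ":") == 1 { print k ": " v; found = 1; next }
    { print }
    END { if (!found) print k ": " v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file owner and mode
  rm -f "$tmp"
}
```

For example: `set_yaml_key /etc/elasticsearch/elasticsearch.yml xpack.ml.enabled false`. Commented-out lines such as `#xpack.ml.enabled: true` are left alone, because only lines that start with the key itself are matched.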

Installing and configuring Kibana

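According to the official documentation, Kibana should be installed after Elasticsearch. Installing in this order ensures that the components each product depends on are correctly in place.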

Because the Elastic package source was added in the previous step, you can install the remaining Elastic Stack components with apt:

sudo apt install kibana

Default installation path: /usr/share/kibana

Default configuration path: /etc/kibana

Edit the main configuration file kibana.yml under /etc/kibana:

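# Default port is 5601; change it here if needed
server.port: 5601
# Elasticsearch address and port
elasticsearch.hosts: ["http://localhost:9200"]
# Address Kibana binds to; the Kibana UI is served at this address plus the port
server.host: "localhost"
# Display the Kibana UI in Chinese
i18n.locale: "zh-CN"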

Start Kibana in the background:

# Change to the binaries directory
cd /usr/share/kibana/bin/
# Start Kibana in the background
./kibana &

Q&A

Allowing access to Kibana from other machines

1. Check whether the Kibana port is reachable from outside the machine.

2. Edit the kibana.yml file:

Change server.host: "localhost" to server.host: "<this machine's IP address>", then restart Kibana.
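Step 1 can be checked with `ss -ltn`, which lists listening TCP sockets. The sketch below uses a hypothetical helper, listen_address, and assumes the default `ss -ltn` layout, where the first line is a header and the fourth column is Local Address:Port.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `ss -ltn` output on stdin and print the
# Local Address:Port (column 4) of every socket listening on PORT.
listen_address() {
  local port="$1"
  # Skip the header line; match column 4 whose suffix is exactly ":PORT".
  awk -v p=":$port" 'NR > 1 && substr($4, length($4) - length(p) + 1) == p { print $4 }'
}
```

Run `ss -ltn | listen_address 5601`. If it prints 127.0.0.1:5601, Kibana only accepts local connections and server.host must be changed; after the change and a restart, the machine's IP address should appear instead.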

Installing and configuring Logstash

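Although Beats can send data directly to Elasticsearch, processing the data with Logstash first is recommended. This lets you collect data from different sources, transform it into a common format, and export it to another database.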

Install Logstash with the following command:

sudo apt install logstash

Default installation path: /usr/share/logstash

Default configuration path: /etc/logstash

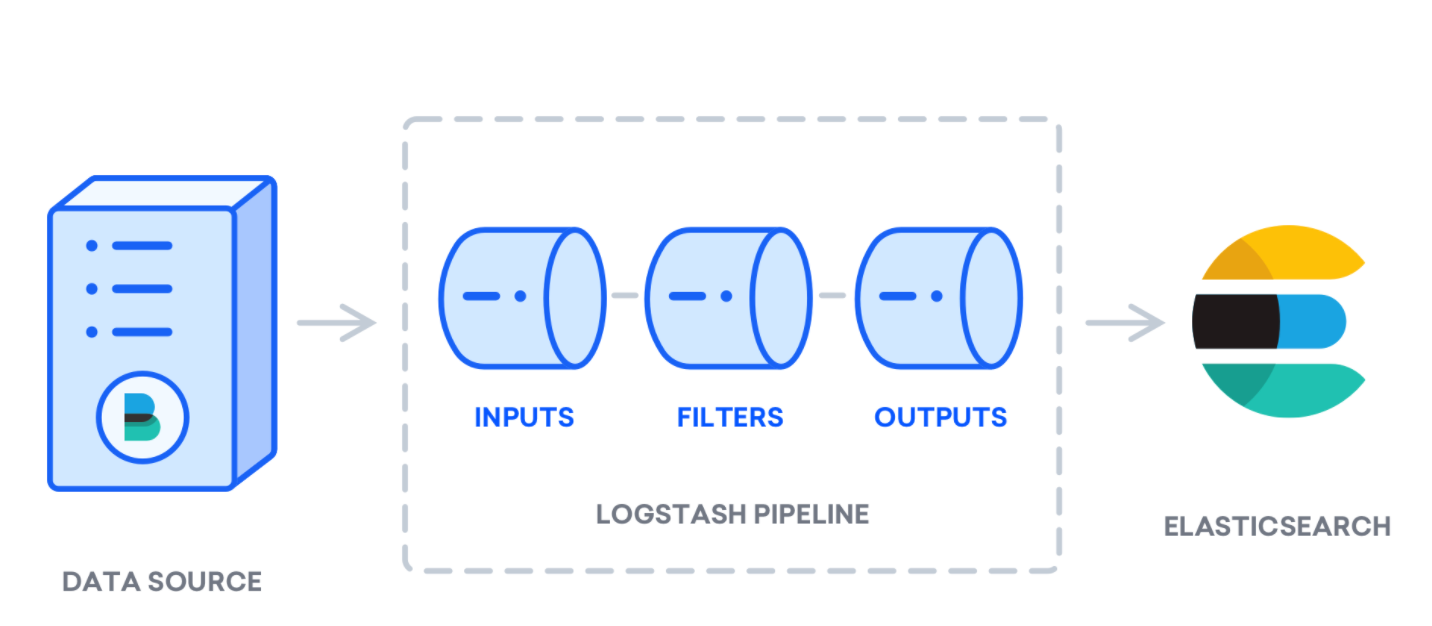

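Logstash can be thought of as a pipeline that receives data at one end, processes it in some way, and sends it to a destination (in this case, Elasticsearch). A Logstash pipeline has two required elements, input and output, and one optional element, filter. Input plugins consume data from a source, filter plugins process the data, and output plugins write the data to a destination.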

Create a configuration file named 02-beats-input.conf, in which you will set up the Filebeat input:

# Enter the directory
cd /etc/logstash/conf.d/
# Create the file
touch 02-beats-input.conf

Insert the following input configuration, which listens for Beats input on TCP port 5044:

input {
  beats {
    port => 5044
  }
}
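Instead of creating the file with touch and pasting the block above in an editor, the same content can be written in one step with a heredoc. A minimal sketch using a hypothetical helper, write_beats_input; it must run as root when the target is /etc/logstash/conf.d.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write 02-beats-input.conf into DIR
# (default /etc/logstash/conf.d), overwriting any existing copy.
write_beats_input() {
  local dir="${1:-/etc/logstash/conf.d}"
  # The quoted 'EOF' stops the shell from expanding anything in the body.
  cat > "$dir/02-beats-input.conf" <<'EOF'
input {
  beats {
    port => 5044
  }
}
EOF
}
```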

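Save and close the file. Next, in the same directory, create a configuration file named 10-syslog-filter.conf, where you will add a filter for system logs, also known as syslogs: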

# Create the file
touch 10-syslog-filter.conf

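Insert the following syslog filter configuration. This example system log configuration is taken from the official Elastic documentation. The filter parses incoming system logs so that they are structured and usable by the predefined Kibana dashboards: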

filter {
  if [fileset][module] == "system" {
    if [fileset][name] == "auth" {
      grok {
        match => { "message" => ["%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: %{DATA:[system][auth][ssh][event]} %{DATA:[system][auth][ssh][method]} for (invalid user )?%{DATA:[system][auth][user]} from %{IPORHOST:[system][auth][ssh][ip]} port %{NUMBER:[system][auth][ssh][port]} ssh2(: %{GREEDYDATA:[system][auth][ssh][signature]})?",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: %{DATA:[system][auth][ssh][event]} user %{DATA:[system][auth][user]} from %{IPORHOST:[system][auth][ssh][ip]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: Did not receive identification string from %{IPORHOST:[system][auth][ssh][dropped_ip]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sudo(?:\[%{POSINT:[system][auth][pid]}\])?: \s*%{DATA:[system][auth][user]} :( %{DATA:[system][auth][sudo][error]} ;)? TTY=%{DATA:[system][auth][sudo][tty]} ; PWD=%{DATA:[system][auth][sudo][pwd]} ; USER=%{DATA:[system][auth][sudo][user]} ; COMMAND=%{GREEDYDATA:[system][auth][sudo][command]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} groupadd(?:\[%{POSINT:[system][auth][pid]}\])?: new group: name=%{DATA:system.auth.groupadd.name}, GID=%{NUMBER:system.auth.groupadd.gid}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} useradd(?:\[%{POSINT:[system][auth][pid]}\])?: new user: name=%{DATA:[system][auth][user][add][name]}, UID=%{NUMBER:[system][auth][user][add][uid]}, GID=%{NUMBER:[system][auth][user][add][gid]}, home=%{DATA:[system][auth][user][add][home]}, shell=%{DATA:[system][auth][user][add][shell]}$",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} %{DATA:[system][auth][program]}(?:\[%{POSINT:[system][auth][pid]}\])?: %{GREEDYMULTILINE:[system][auth][message]}"] }
        pattern_definitions => {
          "GREEDYMULTILINE"=> "(.|\n)*"
        }
        remove_field => "message"
      }
      date {
        match => [ "[system][auth][timestamp]", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
      }
      geoip {
        source => "[system][auth][ssh][ip]"
        target => "[system][auth][ssh][geoip]"
      }
    }
    else if [fileset][name] == "syslog" {
      grok {
        match => { "message" => ["%{SYSLOGTIMESTAMP:[system][syslog][timestamp]} %{SYSLOGHOST:[system][syslog][hostname]} %{DATA:[system][syslog][program]}(?:\[%{POSINT:[system][syslog][pid]}\])?: %{GREEDYMULTILINE:[system][syslog][message]}"] }
        pattern_definitions => { "GREEDYMULTILINE" => "(.|\n)*" }
        remove_field => "message"
      }
      date {
        match => [ "[system][syslog][timestamp]", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
      }
    }
  }
}

Save and close the file when you are done.

Finally, create a configuration file named 30-elasticsearch-output.conf:

# Create the file
touch 30-elasticsearch-output.conf

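Insert the following output configuration. Essentially, this output configures Logstash to store the Beats data in Elasticsearch, which is running at localhost:9200, in an index named after the Beat used. The Beat used here is Filebeat: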

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    manage_template => false
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
  }
}

Save and close the file.
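With this output, the index name is built from the Beat's metadata plus the event date, which is why indices such as filebeat-6.8.10-2020.07.05 appear later in this guide. A small worked example, assuming Logstash renders %{+YYYY.MM.dd} from the event's @timestamp in UTC; index_for_timestamp is a hypothetical helper and relies on GNU date (as shipped with Ubuntu) for `date -d`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compute the index name an event would land in under
# the output above, given the beat name, beat version and event timestamp.
# The date part is taken in UTC, matching how %{+YYYY.MM.dd} is rendered.
index_for_timestamp() {
  local beat="$1" version="$2" ts="$3"
  printf '%s-%s-%s\n' "$beat" "$version" "$(date -u -d "$ts" +%Y.%m.%d)"
}
```

An event logged at 01:00 China Standard Time (+0800) on July 6 is 17:00 UTC on July 5, so `index_for_timestamp filebeat 6.8.10 '2020-07-06 01:00:00 +0800'` prints filebeat-6.8.10-2020.07.05: in UTC+8, events between midnight and 08:00 local time land in the previous day's index.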

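If you want to add filters for other applications that use the Filebeat input, name the files so that they sort between the input and output configurations; here that means a two-digit number between 02 and 30.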
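To see the order in which the files will be read, list them sorted byte-wise. This sketch assumes Logstash loads the path.config glob from /etc/logstash/pipelines.yml (/etc/logstash/conf.d/*.conf in the default package) in sorted order; pipeline_order is a hypothetical helper. It also shows why two digits matter: a file named 5-extra-filter.conf sorts after 30-elasticsearch-output.conf, outside the 02 to 30 range, and its filters would run after those in 10-syslog-filter.conf.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the *.conf file names in DIR
# (default /etc/logstash/conf.d) in byte-wise (LC_ALL=C) sorted order.
pipeline_order() {
  local dir="${1:-/etc/logstash/conf.d}" f
  for f in "$dir"/*.conf; do
    [ -e "$f" ] || continue        # the glob matched nothing
    basename "$f"
  done | LC_ALL=C sort
}
```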

Test the Logstash configuration with the following command:

sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash -t

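If there are no syntax errors, the output will show Configuration OK after a few seconds. If you don't see this, check the output for any errors and update your configuration to correct them.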

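If the configuration test succeeds, start Logstash and enable it so that the configuration changes take effect. The first command runs Logstash in the foreground with the settings in /etc/logstash; the second enables the logstash service to start at boot: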

sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash

sudo systemctl enable logstash

Once Logstash has started, you can check its status at http://127.0.0.1:9600/.

Note: starting it directly with ./logstash & fails with the error Failed to read pipelines yaml file. Location: /usr/share/logstash/config/pipelines.yml, so pass the --path.settings option at startup to point Logstash at its settings directory.

Installing and configuring Filebeat

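The Elastic Stack uses several lightweight data shippers called Beats to collect data from various sources and transport it to Logstash or Elasticsearch. These are the Beats currently available from Elastic: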

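- Filebeat: collects and ships log files.

- Metricbeat: collects metrics from your systems and services.

- Packetbeat: collects and analyzes network data.

- Winlogbeat: collects Windows event logs.

- Auditbeat: collects Linux audit framework data and monitors file integrity.

- Heartbeat: monitors the availability of services with active probing.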

Here we use Filebeat to forward local logs to our Elastic Stack.

Install Filebeat with apt:

sudo apt install filebeat

Default installation path: /usr/share/filebeat

Default configuration path: /etc/filebeat

Next, configure Filebeat to connect to Logstash. Open the Filebeat configuration file, /etc/filebeat/filebeat.yml.

Like Elasticsearch's, Filebeat's configuration file uses YAML, so correct indentation is crucial: be sure to use the same number of spaces shown in these instructions.

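Filebeat supports numerous outputs, but you will usually only send events directly to Elasticsearch or to Logstash for additional processing. Here, Logstash performs the additional processing on the data Filebeat collects. Filebeat does not need to send any data directly to Elasticsearch, so find the output.elasticsearch section of the file and comment out its settings by adding # at the start of each line: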

#output.elasticsearch:
  # Array of hosts to connect to.
  #hosts: ["localhost:9200"]
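Commenting out the section by hand is simplest, but the same edit can be scripted. A minimal awk sketch with a hypothetical helper, comment_out_section; it assumes the section header is an unindented line that matches the given text exactly (no trailing spaces) and that the section ends at the next unindented, uncommented line. It only comments lines out; re-enabling output.logstash is still best done by hand, since blindly removing # would also switch on optional settings.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out the top-level YAML section HEADER
# (e.g. "output.elasticsearch:") and every uncommented line inside it.
comment_out_section() {
  local file="$1" header="$2" tmp
  tmp="$(mktemp)"
  awk -v s="$header" '
    # An unindented, uncommented line starts a new top-level section;
    # we are inside only while that line is the requested header.
    /^[^ \t#]/ { inside = ($0 == s) }
    # Inside the section, put "#" before the first non-blank character
    # of each line that is not already a comment.
    inside && /^[ \t]*[^ \t#]/ { sub(/[^ \t]/, "#&") }
    { print }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, as root: `comment_out_section /etc/filebeat/filebeat.yml "output.elasticsearch:"`.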

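Then configure the output.logstash section: remove the leading # from the output.logstash: and hosts: lines to enable it. The following configuration connects Filebeat to Logstash on port 5044: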

output.logstash:
  # The Logstash hosts
  hosts: ["localhost:5044"]

Save and close the file.

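Filebeat's functionality can be extended with Filebeat modules. Here we use the system module, which collects and parses logs created by the system logging service of common Linux distributions.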

Enable it with the following command:

sudo filebeat modules enable system

View the list of enabled and disabled modules by running:

sudo filebeat modules list

The list looks roughly like this:

Enabled:

system

Disabled:

apache2

auditd

elasticsearch

icinga

iis

kafka

kibana

logstash

mongodb

mysql

nginx

osquery

postgresql

redis

traefik
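When scripting the setup, the Enabled: part of this listing can be parsed to confirm that system is active. A minimal sketch assuming the layout shown above (module names one per line under the Enabled: and Disabled: headers); enabled_modules is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `filebeat modules list` output on stdin and
# print the module names listed under "Enabled:", one per line.
enabled_modules() {
  awk '/^Enabled:/ { on = 1; next } /^Disabled:/ { on = 0 } on && NF { print $1 }'
}
```

For example: `sudo filebeat modules list | enabled_modules | grep -qx system && echo "system module enabled"`.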

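By default, Filebeat uses the default paths for the syslog and authorization logs, so nothing in the configuration needs to change here. The module's parameters can be viewed in the /etc/filebeat/modules.d/system.yml configuration file.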

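Next, load the index template into Elasticsearch. An Elasticsearch index is a collection of documents with similar characteristics. An index is identified by a name, which is used to refer to it when performing various operations within it. The index template is applied automatically whenever a new index is created.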

Load the template with the following command:

sudo filebeat setup --template -E output.logstash.enabled=false -E 'output.elasticsearch.hosts=["localhost:9200"]'

On success, it prints:

Loaded index template

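Filebeat comes packaged with sample Kibana dashboards for visualizing Filebeat data in Kibana. Before you can use the dashboards, you need to create the index pattern and load the dashboards into Kibana.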

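As the dashboards load, Filebeat connects to Elasticsearch to check version information. To load the dashboards while the Logstash output is enabled, disable the Logstash output and enable the Elasticsearch output for this command: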

sudo filebeat setup -e -E output.logstash.enabled=false -E output.elasticsearch.hosts=['localhost:9200'] -E setup.kibana.host=localhost:5601

The output looks roughly like this:

2020-07-08T16:24:52.197+0800 INFO instance/beat.go:611 Home path: [/usr/share/filebeat] Config path: [/etc/filebeat] Data path: [/var/lib/filebeat] Logs path: [/var/log/filebeat]
2020-07-08T16:24:52.197+0800 INFO instance/beat.go:618 Beat UUID: 6dd3cc49-28df-4fbf-99f4-8d78c43fda6c
2020-07-08T16:24:52.197+0800 INFO [beat] instance/beat.go:931 Beat info {"system_info": {"beat": {"path": {"config": "/etc/filebeat", "data": "/var/lib/filebeat", "home": "/usr/share/filebeat", "logs": "/var/log/filebeat"}, "type": "filebeat", "uuid": "6dd3cc49-28df-4fbf-99f4-8d78c43fda6c"}}}
2020-07-08T16:24:52.197+0800 INFO [beat] instance/beat.go:940 Build info {"system_info": {"build": {"commit": "4e10965a54359738b64b9ea4b141831affbb8242", "libbeat": "6.8.10", "time": "2020-05-28T13:47:20.000Z", "version": "6.8.10"}}}
2020-07-08T16:24:52.197+0800 INFO [beat] instance/beat.go:943 Go runtime info {"system_info": {"go": {"os":"linux","arch":"amd64","max_procs":8,"version":"go1.10.8"}}}
2020-07-08T16:24:52.198+0800 INFO [beat] instance/beat.go:947 Host info {"system_info": {"host": {"architecture":"x86_64","boot_time":"2020-07-07T09:20:33+08:00","containerized":false,"name":"fanzhen","ip":["127.0.0.1/8","::1/128","192.168.1.128/24","fe80::4ab2:967c:4254:4ffe/64"],"kernel_version":"5.3.0-28-generic","mac":["60:f2:62:57:89:8d"],"os":{"family":"debian","platform":"ubuntu","name":"Ubuntu","version":"18.04.4 LTS (Bionic Beaver)","major":18,"minor":4,"patch":4,"codename":"bionic"},"timezone":"CST","timezone_offset_sec":28800,"id":"8f613c4d695c4642890b0e636e121222"}}}
2020-07-08T16:24:52.198+0800 INFO [beat] instance/beat.go:976 Process info {"system_info": {"process": {"capabilities": {"inheritable":null,"permitted":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend","audit_read"],"effective":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend","audit_read"],"bounding":["chown","dac_override","dac_read_search","fowner","fsetid","kill","setgid","setuid","setpcap","linux_immutable","net_bind_service","net_broadcast","net_admin","net_raw","ipc_lock","ipc_owner","sys_module","sys_rawio","sys_chroot","sys_ptrace","sys_pacct","sys_admin","sys_boot","sys_nice","sys_resource","sys_time","sys_tty_config","mknod","lease","audit_write","audit_control","setfcap","mac_override","mac_admin","syslog","wake_alarm","block_suspend","audit_read"],"ambient":null}, "cwd": "/root", "exe": "/usr/share/filebeat/bin/filebeat", "name": "filebeat", "pid": 24600, "ppid": 24599, "seccomp": {"mode":"disabled","no_new_privs":false}, "start_time": "2020-07-08T16:24:51.210+0800"}}}
2020-07-08T16:24:52.198+0800 INFO instance/beat.go:280 Setup Beat: filebeat; Version: 6.8.10
2020-07-08T16:24:52.198+0800 INFO elasticsearch/client.go:164 Elasticsearch url: http://localhost:9200
2020-07-08T16:24:52.198+0800 INFO [publisher] pipeline/module.go:110 Beat name: fanzhen
2020-07-08T16:24:52.199+0800 INFO elasticsearch/client.go:164 Elasticsearch url: http://localhost:9200
2020-07-08T16:24:52.200+0800 INFO elasticsearch/client.go:739 Attempting to connect to Elasticsearch version 6.8.10
2020-07-08T16:24:52.269+0800 INFO template/load.go:128 Template already exists and will not be overwritten.
2020-07-08T16:24:52.269+0800 INFO instance/beat.go:889 Template successfully loaded.
Loaded index template
Loading dashboards (Kibana must be running and reachable)
2020-07-08T16:24:52.269+0800 INFO elasticsearch/client.go:164 Elasticsearch url: http://localhost:9200
2020-07-08T16:24:52.270+0800 INFO elasticsearch/client.go:739 Attempting to connect to Elasticsearch version 6.8.10
2020-07-08T16:24:52.333+0800 INFO kibana/client.go:118 Kibana url: http://localhost:5601
2020-07-08T16:24:55.199+0800 INFO add_cloud_metadata/add_cloud_metadata.go:340 add_cloud_metadata: hosting provider type not detected.
2020-07-08T16:25:25.165+0800 INFO instance/beat.go:736 Kibana dashboards successfully loaded.
Loaded dashboards
2020-07-08T16:25:25.166+0800 INFO elasticsearch/client.go:164 Elasticsearch url: http://localhost:9200
2020-07-08T16:25:25.168+0800 INFO elasticsearch/client.go:739 Attempting to connect to Elasticsearch version 6.8.10
2020-07-08T16:25:25.249+0800 INFO kibana/client.go:118 Kibana url: http://localhost:5601
2020-07-08T16:25:25.321+0800 WARN fileset/modules.go:388 X-Pack Machine Learning is not enabled
2020-07-08T16:25:25.384+0800 WARN fileset/modules.go:388 X-Pack Machine Learning is not enabled
Loaded machine learning job configurations

After that, start and enable Filebeat:

sudo systemctl start filebeat
sudo systemctl enable filebeat

If the Elastic Stack is set up correctly, Filebeat will begin shipping the syslog and authorization logs to Logstash, which then loads the data into Elasticsearch.

To verify that Elasticsearch is actually receiving this data, query the Filebeat index with the following command:

curl -XGET 'http://localhost:9200/filebeat-*/_search?pretty'

The output looks roughly like this:

{
  "took" : 1,
  "timed_out" : false,
  "_shards" : {
    "total" : 12,
    "successful" : 12,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : 11408,
    "max_score" : 1.0,
    "hits" : [
      {
        "_index" : "filebeat-6.8.10-2020.07.05",
        "_type" : "doc",
        "_id" : "ZumJLXMBSsiTvi4Uh_J1",
        "_score" : 1.0,
        "_source" : {
          "@timestamp" : "2020-07-05T05:53:50.000Z",
          "system" : {
            "auth" : {
              "program" : "systemd-logind",
              "message" : "New seat seat0.",
              "timestamp" : "Jul 5 13:53:50",
              "pid" : "638",
              "hostname" : "fanzhen"
            }
          },
          "@version" : "1",
          "source" : "/var/log/auth.log",
          "log" : {
            "file" : {
              "path" : "/var/log/auth.log"
            }
          },
          "offset" : 0,
          "event" : {
            "dataset" : "system.auth"
          },
          "prospector" : {
            "type" : "log"
          },
          "host" : {
            "id" : "8f613c4d695c4642890b0e636e121222",
            "name" : "fanzhen",
            "containerized" : false,
            "os" : {
              "name" : "Ubuntu",
              "platform" : "ubuntu",
              "family" : "debian",
              "codename" : "bionic",
              "version" : "18.04.4 LTS (Bionic Beaver)"
            },
            "architecture" : "x86_64"
          },
          "beat" : {
            "name" : "fanzhen",
            "version" : "6.8.10",
            "hostname" : "fanzhen"
          },
          "tags" : [
            "beats_input_codec_plain_applied",
            "_geoip_lookup_failure"
          ],
          "fileset" : {
            "module" : "system",
            "name" : "auth"
          },
          "input" : {
            "type" : "log"
          }
        }
      },
      ...
    ]
  }
}

If the output shows 0 total hits, Elasticsearch is not loading any logs under the index you searched for, and you will need to review your setup for errors.
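For a quick scripted version of this check, Elasticsearch's _count API returns only the number of matching documents. A minimal sketch; extract_count and check_filebeat_docs are hypothetical helpers, the default URL http://localhost:9200 matches this guide's setup, and the grep-based parsing assumes the compact (not pretty-printed) JSON that _count returns without ?pretty.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the "count" field of a compact _count response
# read from stdin, e.g. {"count":11408,"_shards":{...}} -> 11408
extract_count() {
  grep -o '"count":[0-9]*' | head -n 1 | cut -d: -f2
}

# Hypothetical helper: report how many documents the filebeat-* indices hold.
# Returns 1 when the count is 0 and 2 when no usable response arrives.
check_filebeat_docs() {
  local es_url="${1:-http://localhost:9200}" count
  count="$(curl -s "$es_url/filebeat-*/_count" | extract_count)"
  if [ -z "$count" ]; then
    echo "no usable response from $es_url" >&2
    return 2
  fi
  if [ "$count" -eq 0 ]; then
    echo "filebeat-* holds no documents yet; review the setup" >&2
    return 1
  fi
  echo "filebeat-* holds $count documents"
}
```

Running `check_filebeat_docs` after Filebeat has been shipping logs for a while should report a non-zero document count.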