Meta Blogging

How It Started

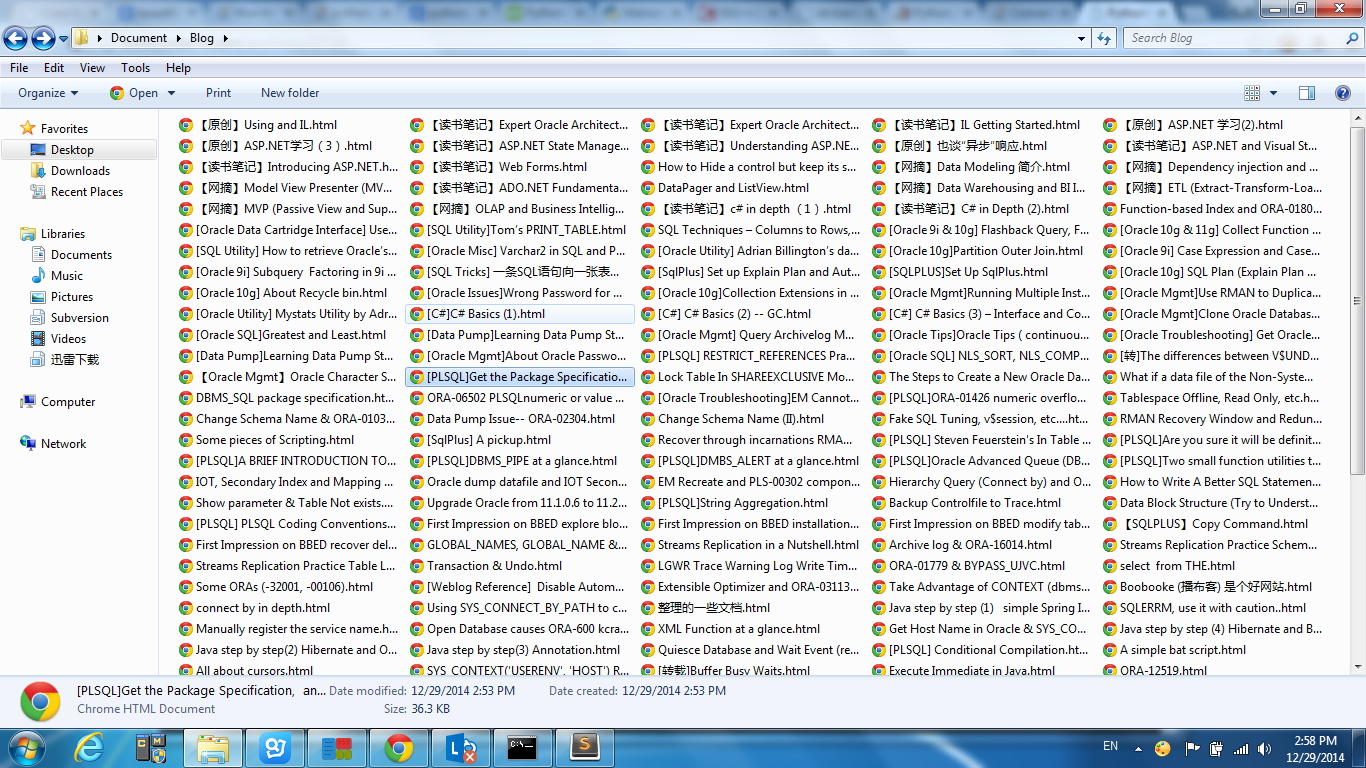

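One day it occurred to me: if cnblogs ever went down, what would happen to all the posts I had written there? Could I download them and keep a local copy? I had just come across a library called requests, so why not give it a try?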

Getting to Work

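With requests and BeautifulSoup, the code is actually quite simple. The only thing to watch out for is that you must not call requests.get too often, or the server stops responding and the requests fail with connection errors. Most websites probably have a similar self-protection mechanism to keep crawlers from overwhelming them.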
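Besides pausing between requests, one way to survive the occasional timeout is to retry a failed request after a growing pause (exponential backoff). The sketch below is not part of the original script; `fetch_with_retry`, `retries`, `base_delay`, and the 5 s / 10 s / 20 s schedule are hypothetical choices. It relies on the fact that requests' exceptions derive from `IOError`, which is `OSError` in Python 3, so catching `OSError` also catches `requests.exceptions.ConnectionError`.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def fetch_with_retry(
    fetch: Callable[[str], T],
    url: str,
    retries: int = 3,
    base_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch(url), retrying with exponential backoff on OSError.

    Hypothetical helper: requests' exceptions (e.g. ConnectionError, Timeout)
    subclass IOError/OSError, so they are retried here as well.
    """
    for attempt in range(retries + 1):
        try:
            return fetch(url)
        except OSError:
            if attempt == retries:
                # Out of attempts: let the caller see the last error.
                raise
            # Wait base_delay, then 2x, 4x, ... before trying again.
            sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")
```

In the download loop this could be used as `fetch_with_retry(requests.get, link).content`, keeping a fixed pause between posts as well. Note that requests does not raise on HTTP error statuses such as 503 unless `raise_for_status()` is called, so those would need a `fetch` that calls it.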

```python
# Python 3 with BeautifulSoup 4: pip install requests beautifulsoup4
import os
import re
import time

import requests
from bs4 import BeautifulSoup

URL = 'http://www.cnblogs.com/fangwenyu/p/'
# Match links under .../fangwenyu/p, or any link containing "archive".
URL_PATTERN = r'http://www\.cnblogs\.com/fangwenyu/p|archive'
pattern = re.compile(URL_PATTERN)

# Save everything next to this script.
DIRECTORY = os.path.dirname(os.path.abspath(__file__))

# Characters that are not allowed in file names on Windows.
ESCAPE_CHARS = '\\/:*?"<>|'
# Mapping a code point to None makes str.translate delete that character.
tbl = {ord(char): None for char in ESCAPE_CHARS}

# Give up on a request after 30 seconds instead of hanging indefinitely.
TIMEOUT = 30

# Get the total number of list pages from the pager.
page_count = 0
resp = requests.get(URL, timeout=TIMEOUT)
if resp.status_code == requests.codes.ok:
    soup = BeautifulSoup(resp.content, 'html.parser')
    pager = soup.find('div', {'class': 'Pager'})
    # Use the largest number in the pager text as the page count
    # (works for 10 or more pages; defaults to 1 if there is no pager).
    numbers = [int(n) for n in re.findall(r'\d+', pager.get_text())] if pager else []
    page_count = max(numbers, default=1)

with open(os.path.join(DIRECTORY, 'blog_archive.txt'), 'w', encoding='utf-8') as blog_archive:
    for page in range(1, page_count + 1):
        resp = requests.get(URL, params={'page': page}, timeout=TIMEOUT)
        # bs4 decodes HTML entities automatically.
        soup = BeautifulSoup(resp.content, 'html.parser')
        # Keep <a> tags that have an id attribute and whose href matches the pattern.
        blog_list = [(a.get_text(), a.get('href'))
                     for a in soup.find_all('a', id=True, href=pattern)]

        for title, link in blog_list:
            norm_title = title.translate(tbl)
            # One index line per post: original title |[file name]| URL
            blog_archive.write('%s |[%s]| %s\n' % (title, norm_title, link))

            # Save the raw page bytes unchanged.
            with open(os.path.join(DIRECTORY, norm_title + '.html'), 'wb') as f:
                f.write(requests.get(link, timeout=TIMEOUT).content)

            # Sleep between requests: accessing cnblogs too frequently makes the
            # server stop responding, with errors like:
            # ...
            # requests.exceptions.ConnectionError: ('Connection aborted.', error(10060, 'A connection attempt failed
            # because the connected party did not properly respond after a period of time, or established connection failed
            # because connected host has failed to respond'))
            time.sleep(5)
```
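The script turns each post title into a file name by deleting the characters Windows forbids with `str.translate`. A slightly stricter standalone version is sketched below for illustration; `safe_filename`, the 150-character cap, and the `"untitled"` fallback are hypothetical choices, not from the original. Besides the nine reserved characters, it also removes ASCII control characters and trailing dots and spaces, which Windows rejects as well.

```python
# Characters Windows forbids in file names: the nine reserved ones
# plus ASCII control characters 0-31.
INVALID_CHARS = '\\/:*?"<>|' + ''.join(chr(i) for i in range(32))
# Mapping each code point to None makes str.translate delete it.
DELETE_TABLE = {ord(c): None for c in INVALID_CHARS}


def safe_filename(title: str, max_len: int = 150) -> str:
    """Turn a post title into a Windows-safe file name (hypothetical helper)."""
    # Delete forbidden characters, then trim surrounding whitespace.
    name = title.translate(DELETE_TABLE).strip()
    # Cap the length, then drop trailing dots/spaces, which Windows also rejects.
    name = name[:max_len].rstrip('. ')
    # Fall back to a placeholder if nothing usable is left.
    return name or 'untitled'


print(safe_filename('What is A/B testing?'))
```

In the loop this would replace `title.translate(tbl)` with `safe_filename(title)`. It does not handle reserved device names such as CON or NUL, or two posts whose titles collapse to the same file name.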