DATABASE ACCESS WITHOUT ADO.NET

Your options for database access without ADO.NET include the following:

• The SqlDataSource control: The SqlDataSource control allows you to define queries declaratively. You can connect the SqlDataSource to rich controls such as the GridView, and give your pages the ability to edit and update data without requiring any ADO.NET code. Best of all, the SqlDataSource uses ADO.NET behind the scenes, and so it supports any database that has a full ADO.NET provider. However, the SqlDataSource is somewhat controversial, because it encourages you to place database logic in the markup portion of your page. Many developers prefer to use the ObjectDataSource instead, which gives similar data binding functionality but relies on a custom database component. When you use the ObjectDataSource, it’s up to you to create the database component and write the back-end ADO.NET code.

• LINQ to SQL: With LINQ to SQL, you define a query using C# code (or the LinqDataSource control) and the appropriate database logic is generated automatically. LINQ to SQL supports updates, generates secure and well-written SQL statements, and provides some customizability. Like the SqlDataSource control, LINQ to SQL doesn’t allow you to execute database commands that don’t map to straightforward queries and updates (such as creating tables). Unlike the SqlDataSource control, LINQ to SQL works only with SQL Server, and it replaces hand-written ADO.NET code with its own object model (although it still relies on ADO.NET connections internally).

• Profiles: The profiles feature allows you to store user-specific blocks of data in a database without writing ADO.NET code. However, the stock profiles feature is significantly limited. For example, it doesn’t allow you to control the structure of the database table where the data is stored. As a result, developers who use the profiles feature often create custom profile providers, which require custom ADO.NET code.

None of these options is a replacement for ADO.NET, because none of them offers the full flexibility, customizability, and performance that hand-written database code offers. However, depending on your needs, it may be worth using one or more of these features simply to get better code-writing productivity.

Overall, most ASP.NET developers will need to write some ADO.NET code, even if it’s only to optimize a performance-sensitive task or to perform a specific operation that wouldn’t otherwise be possible. Also, every professional ASP.NET developer needs to understand how the ADO.NET plumbing works in order to evaluate when it’s required and when another approach is just as effective.

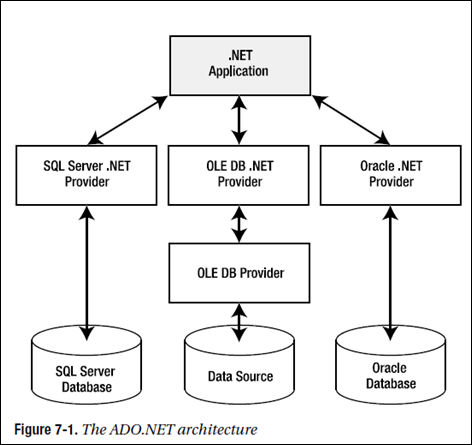

The ADO.NET Architecture

One of the key differences between ADO.NET and some other database technologies is how it deals with the challenge of different data sources. In many previous database technologies, such as classic ADO, programmers use a generic set of objects no matter what the underlying data source is. For example, if you want to retrieve a record from an Oracle database using ADO code, you use the same Connection class you would use to tackle the task with SQL Server. This isn’t the case in ADO.NET, which uses a data provider model.

ADO.NET Data Providers

A data provider is a set of ADO.NET classes that allows you to access a specific database, execute SQL commands, and retrieve data. Essentially, a data provider is a bridge between your application and a data source.

The classes that make up a data provider include the following:

• Connection: You use this object to establish a connection to a data source.

• Command: You use this object to execute SQL commands and stored procedures.

• DataReader: This object provides fast read-only, forward-only access to the data retrieved from a query.

• DataAdapter: This object performs two tasks. First, you can use it to fill a DataSet (a disconnected collection of tables and relationships) with information extracted from a data source. Second, you can use it to apply changes to a data source, according to the modifications you’ve made in a DataSet.

ADO.NET doesn’t include generic data provider objects. Instead, it includes different data providers specifically designed for different types of data sources. Each data provider has a specific implementation of the Connection, Command, DataReader, and DataAdapter classes that’s optimized for a specific RDBMS (relational database management system). For example, if you need to create a connection to a SQL Server database, you’ll use a connection class named SqlConnection.

One of the key underlying ideas of the ADO.NET provider model is that it’s extensible. In other words, developers can create their own providers for proprietary data sources. In fact, numerous proof-of-concept examples are available that show how you can easily create custom ADO.NET providers to wrap nonrelational data stores, such as the file system or a directory service. Some third-party vendors also sell custom providers for .NET.

The .NET Framework is bundled with a small set of four providers:

• SQL Server provider: Provides optimized access to a SQL Server database (version 7.0 or later).

• OLE DB provider: Provides access to any data source that has an OLE DB driver. This includes SQL Server databases prior to version 7.0.

• Oracle provider: Provides optimized access to an Oracle database (version 8i or later).

• ODBC provider: Provides access to any data source that has an ODBC driver.

Standardization in ADO.NET

Even though different .NET data providers use different classes, all providers are standardized in the same way. More specifically, each provider is based on the same set of interfaces and base classes.

ADO.NET also has another layer of standardization: the DataSet. The DataSet is an all-purpose container for data that you’ve retrieved from one or more tables in a data source. The DataSet is completely generic—in other words, custom providers don’t define their own custom versions of the DataSet class. No matter which data provider you use, you can extract your data and place it into a disconnected DataSet in the same way. That makes it easy to separate data retrieval code from data processing code. If you change the underlying database, you will need to change the data retrieval code, but if you use the DataSet and your information has the same structure, you won’t need to modify the way you process that data.

Fundamental ADO.NET Classes

ADO.NET has two types of objects: connection-based and content-based.

(1) Connection-based objects: These are the data provider objects such as Connection, Command, DataReader, and DataAdapter. They allow you to connect to a database, execute SQL statements, move through a read-only result set, and fill a DataSet. The connection-based objects are specific to the type of data source, and are found in a provider-specific namespace (such as System.Data.SqlClient for the SQL Server provider).

(2) Content-based objects: These objects are really just “packages” for data. They include the DataSet, DataColumn, DataRow, DataRelation, and several others. They are completely independent of the type of data source and are found in the System.Data namespace.
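Because the content-based objects have no tie to a provider, you can try them out without a database. The following is a minimal sketch; the Catalog, Categories, and Products names and the sample rows are made up for illustration.

```csharp
using System;
using System.Data;

public static class DataSetSketch
{
    // Builds a two-table DataSet entirely in memory; no data provider is involved.
    public static DataSet BuildCatalog()
    {
        DataSet ds = new DataSet("Catalog");

        DataTable categories = ds.Tables.Add("Categories");
        categories.Columns.Add("CategoryID", typeof(int));
        categories.Columns.Add("CategoryName", typeof(string));

        DataTable products = ds.Tables.Add("Products");
        products.Columns.Add("ProductID", typeof(int));
        products.Columns.Add("CategoryID", typeof(int));
        products.Columns.Add("ProductName", typeof(string));

        // A DataRelation links parent and child rows, much like a foreign key.
        ds.Relations.Add("CategoryProducts",
            categories.Columns["CategoryID"], products.Columns["CategoryID"]);

        categories.Rows.Add(1, "Beverages");
        products.Rows.Add(10, 1, "Chai");
        products.Rows.Add(11, 1, "Chang");
        return ds;
    }

    public static void Main()
    {
        DataSet ds = BuildCatalog();
        foreach (DataRow category in ds.Tables["Categories"].Rows)
        {
            Console.WriteLine(category["CategoryName"]);
            // Navigate from the parent row to its children through the relation.
            foreach (DataRow product in category.GetChildRows("CategoryProducts"))
                Console.WriteLine("  " + product["ProductName"]);
        }
    }
}
```

The same processing code would work unchanged if a DataAdapter from any provider had filled the DataSet instead.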

The Connection Class

Connection Strings

When you create a Connection object, you need to supply a connection string. The connection string is a series of name/value settings separated by semicolons (;). The order of these settings is unimportant, as is the capitalization. Taken together, they specify the basic information needed to create a connection.

The <connectionStrings> section of the web.config file is a handy place to store your connection strings. Here’s an example:

<configuration>
  <connectionStrings>
    <add name="Northwind" connectionString="Data Source=localhost; Initial Catalog=Northwind; Integrated Security=SSPI"/>
  </connectionStrings>
  ...
</configuration>

You can then retrieve your connection string by name from the WebConfigurationManager.ConnectionStrings collection. Assuming you’ve imported the System.Web.Configuration namespace, you can use a code statement like this:

string connectionString =
    WebConfigurationManager.ConnectionStrings["Northwind"].ConnectionString;

Testing a Connection

When opening a connection, you face two possible exceptions. An InvalidOperationException occurs if your connection string is missing required information or the connection is already open. A SqlException occurs for just about any other type of problem, including an error contacting the database server, logging in, or accessing the specified database.

SqlException is a provider-specific class that’s used for the SQL Server provider. Other database providers use different exception classes to serve the same role, such as OracleException, OleDbException, and OdbcException.

Connections are a limited server resource. This means it’s imperative that you open the connection as late as possible and release it as quickly as possible.
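One way to honor the open-late, close-early rule is to wrap the connection in a using block and handle the two exceptions described earlier. This is only a sketch; the ConnectionTest class and TryOpen() method are illustrative names, not part of ADO.NET.

```csharp
using System;
using System.Data.SqlClient;

public static class ConnectionTest
{
    // Returns a short status message instead of letting the exception escape.
    public static string TryOpen(string connectionString)
    {
        try
        {
            // The using block closes the connection even if an exception is thrown.
            using (SqlConnection con = new SqlConnection(connectionString))
            {
                con.Open();
                return "Connected to server version " + con.ServerVersion;
            }
        }
        catch (InvalidOperationException err)
        {
            // For example, the connection string was never set.
            return "Invalid operation: " + err.Message;
        }
        catch (SqlException err)
        {
            // Server unreachable, login failed, database not found, and so on.
            return "Database error: " + err.Message;
        }
    }
}
```

A connection string with an unrecognized keyword raises an ArgumentException from the SqlConnection constructor, which this sketch deliberately leaves unhandled.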

Connection Pooling

Connection pooling is the practice of keeping a permanent set of open database connections to be shared by sessions that use the same data source. This avoids the need to create and destroy connections all the time. Connection pools in ADO.NET are completely transparent to the programmer, and your data access code doesn’t need to be altered. When a client requests a connection by calling Open(), it’s served directly from the available pool, rather than re-created. When a client releases a connection by calling Close() or Dispose(), it’s not discarded but returned to the pool to serve the next request.

ADO.NET does not include a connection pooling mechanism. However, most ADO.NET providers implement some form of connection pooling. The SQL Server and Oracle data providers implement their own efficient connection pooling algorithms. These algorithms are implemented entirely in managed code and, contrary to a popular misconception, do not use COM+ Enterprise Services. For a connection to be reused with SQL Server or Oracle, the connection string must match exactly. If it differs even slightly, a new connection will be created in a new pool.

■Tip

SQL Server and Oracle connection pooling use a full-text match algorithm. That means any minor change in the connection string will thwart connection pooling, even if the change is simply to reverse the order of parameters or add an extra blank space at the end. For this reason, it’s imperative that you don’t hard-code the connection string in different web pages. Instead, you should store the connection string in one place, preferably in the <connectionStrings> section of the web.config file.

With both the SQL Server and Oracle providers, connection pooling is enabled and used automatically. However, you can also use connection string parameters to configure pool size settings.
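As a rough illustration, the pool size parameters can be set through the SqlConnectionStringBuilder class rather than typed by hand. The values 5 and 50 are arbitrary assumptions, and PoolSettings is a hypothetical helper class.

```csharp
using System;
using System.Data.SqlClient;

public static class PoolSettings
{
    // Builds a SQL Server connection string with explicit pool limits.
    public static string Build()
    {
        SqlConnectionStringBuilder builder = new SqlConnectionStringBuilder();
        builder.DataSource = "localhost";
        builder.InitialCatalog = "Northwind";
        builder.IntegratedSecurity = true;
        builder.MinPoolSize = 5;    // connections kept ready in the pool
        builder.MaxPoolSize = 50;   // upper limit; further Open() calls wait for a free one
        return builder.ConnectionString;
    }

    public static void Main()
    {
        Console.WriteLine(Build());
    }
}
```

Because pooling depends on an exact string match, it makes sense to build such a string once and reuse it everywhere.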

The Command and DataReader Classes

Command Basics

Before you can use a command, you need to choose the command type, set the command text, and bind the command to a connection. You can perform this work by setting the corresponding properties (CommandType, CommandText, and Connection), or you can pass the information you need as constructor arguments.
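The two approaches can be sketched side by side as follows. The CommandBasics class and its method names are illustrative only; the query text is just an example.

```csharp
using System.Data;
using System.Data.SqlClient;

public static class CommandBasics
{
    // Approach 1: create an empty command and set its properties one by one.
    public static SqlCommand ViaProperties(SqlConnection con)
    {
        SqlCommand cmd = new SqlCommand();
        cmd.Connection = con;
        cmd.CommandType = CommandType.Text;
        cmd.CommandText = "SELECT * FROM Employees";
        return cmd;
    }

    // Approach 2: pass the command text and connection to the constructor.
    // CommandType is left at its default, CommandType.Text.
    public static SqlCommand ViaConstructor(SqlConnection con)
    {
        return new SqlCommand("SELECT * FROM Employees", con);
    }
}
```

Neither method touches the database; nothing is sent until you call one of the Execute methods on an open connection.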

The DataReader Class

A DataReader allows you to read the data returned by a SELECT command one record at a time, in a forward-only, read-only stream. This is sometimes called a firehose cursor. Using a DataReader is the simplest way to get to your data, but it lacks the sorting and relational abilities of the disconnected DataSet. However, the DataReader provides the quickest possible no-nonsense access to data.

The ExecuteReader() Method and the DataReader

Null Values

Unfortunately, the DataReader isn’t integrated with .NET nullable values. This discrepancy is due to historical reasons. The nullable data types were first introduced in .NET 2.0, at which point the DataReader model was already well established and difficult to change.

Instead, the DataReader returns the constant DBNull.Value when it comes across a null value in the database. Attempting to use this value or cast it to another data type will cause an exception. (Sadly, there’s no way to cast between DBNull.Value and a nullable data type.) As a result, you need to test for DBNull.Value when it might occur, using code like this:

int? numberOfHires;
if (reader["NumberOfHires"] == DBNull.Value)
    numberOfHires = null;
else
    numberOfHires = (int?)reader["NumberOfHires"];
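An alternative to comparing against DBNull.Value is the IsDBNull() method, which every DataReader exposes. The sketch below wraps that check in a hypothetical helper, ReadNullableInt(), and uses an in-memory DataTable reader as a stand-in for a SqlDataReader so that it runs without a database.

```csharp
using System;
using System.Data;

public static class NullSketch
{
    // Relies only on IDataRecord, so it works with any provider's DataReader.
    public static int? ReadNullableInt(IDataRecord record, string column)
    {
        int ordinal = record.GetOrdinal(column);
        return record.IsDBNull(ordinal) ? (int?)null : record.GetInt32(ordinal);
    }

    public static void Main()
    {
        // Stand-in data: one row with a value and one row with a database null.
        DataTable table = new DataTable();
        table.Columns.Add("NumberOfHires", typeof(int));
        table.Rows.Add(3);
        table.Rows.Add(DBNull.Value);

        using (IDataReader reader = table.CreateDataReader())
        {
            while (reader.Read())
            {
                int? hires = ReadNullableInt(reader, "NumberOfHires");
                Console.WriteLine(hires.HasValue ? hires.Value.ToString() : "(null)");
            }
        }
    }
}
```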

CommandBehavior

The ExecuteReader() method has an overloaded version that takes one of the values from the CommandBehavior enumeration as a parameter. One useful value is CommandBehavior.CloseConnection. When you pass this value to the ExecuteReader() method, the DataReader will close the associated connection as soon as you close the DataReader.

Using this technique, you could rewrite the code as follows:

SqlDataReader reader = cmd.ExecuteReader(CommandBehavior.CloseConnection);
// (Build the HTML string here.)
// No need to close the connection. You can simply close the reader.
reader.Close();
HtmlContent.Text = htmlStr.ToString();

This behavior is particularly useful if you retrieve a DataReader in one method and need to pass it to another method to process it. If you use the CommandBehavior.CloseConnection value, the connection will be automatically closed as soon as the second method closes the reader.

Another possible value is CommandBehavior.SingleRow, which can improve the performance of the query execution when you’re retrieving only a single row. For example, if you are retrieving a single record using its unique primary key field (CustomerID, ProductID, and so on), you can use this optimization.

You can also use CommandBehavior.SequentialAccess to read part of a binary field at a time, which reduces the memory overhead for large binary fields.

Processing Multiple Result Sets

The command you execute doesn’t have to return a single result set. Instead, it can execute more than one query and return more than one result set as part of the same command. This is useful if you need to retrieve a large amount of related data, such as a list of products and product categories that, taken together, represent a product catalog.

A command can return more than one result set in two ways:

• If you’re calling a stored procedure, it may use multiple SELECT statements.

• If you’re using a straight text command, you may be able to batch multiple commands by separating commands with a semicolon (;).

Not all providers support this technique, but the SQL Server database provider does.

Here’s an example of a string that defines a batch of three SELECT statements:

string sql = "SELECT TOP 5 * FROM Employees;" +
    "SELECT TOP 5 * FROM Customers; SELECT TOP 5 * FROM Suppliers";

This string contains three queries. Together, they return the first five records from the Employees table, the first five from the Customers table, and the first five from the Suppliers table.

Processing these results is fairly straightforward. Initially, the DataReader will provide access to the results from the Employees table. Once you’ve finished using the Read() method to read all these records, you can call NextResult() to move to the next result set. When there are no more result sets, this method returns false.

You can even cycle through all the available result sets with a while loop, although in this case you must be careful not to call NextResult() until you finish reading the first result set. Here’s an example of this more specialized technique:

// Cycle through the records and all the rowsets,
// and build the HTML string.
StringBuilder htmlStr = new StringBuilder("");
int i = 0;
do
{
    htmlStr.Append("<h2>Rowset: ");
    htmlStr.Append(i.ToString());
    htmlStr.Append("</h2>");
    while (reader.Read())
    {
        htmlStr.Append("<li>");
        // Get all the fields in this row.
        for (int field = 0; field < reader.FieldCount; field++)
        {
            htmlStr.Append(reader.GetName(field));
            htmlStr.Append(": ");
            htmlStr.Append(reader.GetValue(field).ToString());
            // Separate this field from the next one.
            htmlStr.Append("&nbsp;&nbsp;&nbsp;");
        }
        htmlStr.Append("</li>");
    }
    htmlStr.Append("<br/><br />");
    i++;
} while (reader.NextResult());
// Close the DataReader and the Connection.
reader.Close();
con.Close();
// Show the generated HTML code on the page.
HtmlContent.Text = htmlStr.ToString();

The ExecuteScalar() Method

The ExecuteScalar() method returns the value stored in the first field of the first row of a result set generated by the command’s SELECT query. This method is usually used to execute a query that retrieves only a single field, perhaps calculated by a SQL aggregate function such as COUNT() or SUM().
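A minimal sketch of an aggregate query run through ExecuteScalar() might look like this. It assumes a Northwind-style Employees table; the ScalarSketch class and CountEmployees() method are illustrative names.

```csharp
using System.Data.SqlClient;

public static class ScalarSketch
{
    // Returns the number of rows in the Employees table.
    public static int CountEmployees(string connectionString)
    {
        using (SqlConnection con = new SqlConnection(connectionString))
        using (SqlCommand cmd = new SqlCommand("SELECT COUNT(*) FROM Employees", con))
        {
            con.Open();
            // ExecuteScalar() returns object; SQL Server's COUNT(*) arrives as a boxed int.
            return (int)cmd.ExecuteScalar();
        }
    }
}
```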

The ExecuteNonQuery() Method

The ExecuteNonQuery() method executes commands that don’t return a result set, such as INSERT, DELETE, and UPDATE. The ExecuteNonQuery() method returns a single piece of information—the number of affected records (or –1 if your command isn’t an INSERT, DELETE, or UPDATE statement).

SQL Injection Attacks

Using Parameterized Commands

A parameterized command is simply a command that uses placeholders in the SQL text. The placeholders indicate dynamically supplied values, which are then sent through the Parameters collection of the Command object.

For example, take this SQL statement:

SELECT * FROM Customers WHERE CustomerID = 'ALFKI'

It would become something like this:

SELECT * FROM Customers WHERE CustomerID = @CustID

The values for the placeholders are then supplied separately, as parameter objects, and are treated strictly as data rather than as part of the SQL text.

The syntax for parameterized commands differs slightly for different providers. With the SQL Server provider, parameterized commands use named placeholders (with unique names). With the OLE DB provider, each hard-coded value is replaced with a question mark. In either case, you need to supply a Parameter object for each parameter, which you insert into the Command.Parameters collection. With the OLE DB provider, you must make sure you add the parameters in the same order that they appear in the SQL string. This isn’t a requirement with the SQL Server provider, because the parameters are matched to the placeholders based on their names.
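The difference between the two providers can be sketched as follows. The UPDATE statement, the column sizes (taken from the Northwind Customers table), and the ParameterizedCommands class are assumptions chosen for illustration.

```csharp
using System.Data;
using System.Data.OleDb;
using System.Data.SqlClient;

public static class ParameterizedCommands
{
    public static SqlCommand BuildSqlCommand(SqlConnection con, string customerId, string city)
    {
        SqlCommand cmd = new SqlCommand(
            "UPDATE Customers SET City = @City WHERE CustomerID = @CustID", con);
        // Named placeholders: the order of the Add() calls doesn't matter.
        cmd.Parameters.Add("@CustID", SqlDbType.NChar, 5).Value = customerId;
        cmd.Parameters.Add("@City", SqlDbType.NVarChar, 15).Value = city;
        return cmd;
    }

    public static OleDbCommand BuildOleDbCommand(OleDbConnection con, string customerId, string city)
    {
        OleDbCommand cmd = new OleDbCommand(
            "UPDATE Customers SET City = ? WHERE CustomerID = ?", con);
        // Positional placeholders: parameters must be added in the order
        // the question marks appear in the SQL text.
        cmd.Parameters.Add("City", OleDbType.VarWChar, 15).Value = city;
        cmd.Parameters.Add("CustID", OleDbType.WChar, 5).Value = customerId;
        return cmd;
    }
}
```

With an open connection, calling ExecuteNonQuery() on either command returns the number of rows the UPDATE affected.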

Calling Stored Procedures

Parameterized commands are just a short step from commands that call full-fledged stored procedures.

As you probably know, a stored procedure is a batch of one or more SQL statements that are stored in the database. Stored procedures are similar to functions in that they are well-encapsulated blocks of logic that can accept data (through input parameters) and return data (through result sets and output parameters).

Stored procedures have many benefits:

• They are easier to maintain: For example, you can optimize the commands in a stored procedure without recompiling the application that uses it. They also standardize data access logic in one place (the database), making it easier for different applications to reuse that logic in a consistent way. (In object-oriented terms, stored procedures define the interface to your database.)

• They allow you to implement more secure database usage: For example, you can allow the Windows account that runs your ASP.NET code to use certain stored procedures but restrict access to the underlying tables.

• They can improve performance: Because a stored procedure batches together multiple statements, you can get a lot of work done with just one trip to the database server. If your database is on another computer, this reduces the total time to perform a complex task dramatically.

■Note There’s one catch: nullable fields. If you want to pass a null value to a stored procedure, you can’t use a C# null reference, because that indicates an uninitialized reference, which is an error condition. Unfortunately, you can’t use a nullable data type either (such as int?), because the SqlParameter class doesn’t support nullable data types. To indicate null content in a field, you must pass the .NET constant DBNull.Value as a parameter value.
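The following sketch shows one way to set up a stored procedure call and translate a missing value into DBNull.Value. The InsertEmployee procedure and its three parameters are hypothetical; substitute the names your own database defines.

```csharp
using System;
using System.Data;
using System.Data.SqlClient;

public static class StoredProcSketch
{
    // Prepares a call to the hypothetical InsertEmployee stored procedure.
    public static SqlCommand BuildInsert(SqlConnection con, string lastName, int? reportsTo)
    {
        SqlCommand cmd = new SqlCommand("InsertEmployee", con);
        cmd.CommandType = CommandType.StoredProcedure;

        cmd.Parameters.Add("@LastName", SqlDbType.NVarChar, 20).Value = lastName;

        // A nullable int can't be assigned directly; convert "no value" to DBNull.Value.
        cmd.Parameters.Add("@ReportsTo", SqlDbType.Int).Value =
            reportsTo.HasValue ? (object)reportsTo.Value : DBNull.Value;

        // Output parameters are read from the collection after the command executes.
        SqlParameter id = cmd.Parameters.Add("@EmployeeID", SqlDbType.Int);
        id.Direction = ParameterDirection.Output;
        return cmd;
    }
}
```

After calling ExecuteNonQuery() on an open connection, the generated key would be available through cmd.Parameters["@EmployeeID"].Value.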

Transactions

A transaction is a set of operations that must either succeed or fail as a unit. The goal of a transaction is to ensure that data is always in a valid, consistent state.

Transactions are characterized by four properties popularly called ACID properties. ACID is an acronym that represents the following concepts:

• Atomic: All steps in the transaction should succeed or fail together. Unless all the steps from a transaction complete, a transaction is not considered complete.

• Consistent: The transaction takes the underlying database from one stable state to another.

• Isolated: Every transaction is an independent entity. One transaction should not affect any other transaction running at the same time.

• Durable: Changes that occur during the transaction are permanently stored on some media, typically a hard disk, before the transaction is declared successful. Logs are maintained so that the database can be restored to a valid state even if a hardware or network failure occurs.

Transactions and ASP.NET Applications

• Stored procedure transactions: These transactions take place entirely in the database. Stored procedure transactions offer the best performance, because they need only a single round-trip to the database. The drawback is that you also need to write the transaction logic using SQL statements.

• Client-initiated (ADO.NET) transactions: These transactions are controlled programmatically by your ASP.NET web-page code. Under the covers, they use the same commands as a stored procedure transaction, but your code uses some ADO.NET objects that wrap these details. The drawback is that extra round-trips are required to the database to start and commit the transaction.

• COM+ transactions: These transactions are handled by the COM+ runtime, based on declarative attributes you add to your code. COM+ transactions use a two-phase commit protocol and always incur extra overhead. They also require that you create a separate serviced component class. COM+ components are generally a good choice only if your transaction spans multiple transaction-aware resource managers, because COM+ includes built-in support for distributed transactions. For example, a single COM+ transaction can span interactions in a SQL Server database and an Oracle database. COM+ transactions are not covered in this book.
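A client-initiated transaction can be sketched roughly as follows. The Accounts table, its columns, and the TransactionSketch class are hypothetical and serve only to show the commit-or-roll-back pattern.

```csharp
using System;
using System.Data.SqlClient;

public static class TransactionSketch
{
    // Moves 100 units between two rows; both updates succeed or neither does.
    public static void Transfer(string connectionString)
    {
        using (SqlConnection con = new SqlConnection(connectionString))
        {
            con.Open();
            SqlTransaction tran = con.BeginTransaction();
            try
            {
                // Every command must be enlisted in the transaction explicitly.
                SqlCommand debit = new SqlCommand(
                    "UPDATE Accounts SET Balance = Balance - 100 WHERE AccountID = 1", con, tran);
                SqlCommand credit = new SqlCommand(
                    "UPDATE Accounts SET Balance = Balance + 100 WHERE AccountID = 2", con, tran);

                debit.ExecuteNonQuery();
                credit.ExecuteNonQuery();
                tran.Commit();
            }
            catch
            {
                // Undo any partial work, then let the caller see the original error.
                tran.Rollback();
                throw;
            }
        }
    }
}
```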

Isolation Levels

The isolation level determines how sensitive a transaction is to changes made by other in-progress transactions. For example, by default when two transactions are running independently of each other, records inserted by one transaction are not visible to the other transaction until the first transaction is committed.

The concept of isolation levels is closely related to the concept of locks, because by determining the isolation level for a given transaction you determine what types of locks are required.

Shared locks are locks that are placed when a transaction wants to read data from the database. No other transactions can modify the data while shared locks exist on a table, row, or range. However, more than one user can use a shared lock to read the data simultaneously.

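[Shared locks: reads block writes. (Oracle actually has no shared locks, because it uses read consistency, based on undo segments, so that reads don’t block writes and writes don’t block reads.) – by Frank]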

Exclusive locks are the locks that prevent two or more transactions from modifying data simultaneously. An exclusive lock is issued when a transaction needs to update data and no other locks are already held. No other user can read or modify the data while an exclusive lock is in place.

Values of the IsolationLevel Enumeration

(1) ReadUncommitted: No shared locks are placed, and no exclusive locks are honored. This type of isolation level is appropriate when you want to work with all the data matching certain conditions, irrespective of whether it’s committed. Dirty reads are possible, but performance is increased.

(2) ReadCommitted: Shared locks are held while the data is being read by the transaction. This avoids dirty reads, but the data can be changed before a transaction completes. This may result in nonrepeatable reads or phantom rows. This is the default isolation level used by SQL Server.

(3) Snapshot: Stores a copy of the data your transaction accesses. As a result, the transaction won’t see the changes made by other transactions. This approach reduces blocking, because even if other transactions are holding locks on the data, a transaction with snapshot isolation will still be able to read a copy of the data. This option is supported only in SQL Server 2005 and later, and needs to be enabled through a database-level option.

(4) RepeatableRead: In this case, shared locks are placed on all data that is used in a query. This prevents others from modifying the data, and it also prevents nonrepeatable reads. However, phantom rows are possible.

(5) Serializable: A range lock is placed on the data you use, thereby preventing other users from updating or inserting rows that would fall in that range. This is the only isolation level that removes the possibility of phantom rows. However, it has an extremely negative effect on user concurrency and is rarely used in multiple user scenarios.

Savepoints

Savepoints are markers that act like bookmarks. You mark a certain point in the flow of the transaction, and then you can roll back to that point. You set the savepoint using the Transaction.Save() method. Note that the Save() method is available only for the SqlTransaction class, because it’s not part of the standard IDbTransaction interface.
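The sketch below combines an explicit isolation level with a savepoint. It assumes a Northwind-style Products table; the savepoint name and the SavepointSketch class are made up for illustration.

```csharp
using System.Data;
using System.Data.SqlClient;

public static class SavepointSketch
{
    public static void Run(string connectionString)
    {
        using (SqlConnection con = new SqlConnection(connectionString))
        {
            con.Open();
            // Request an isolation level other than the default when starting the transaction.
            SqlTransaction tran = con.BeginTransaction(IsolationLevel.RepeatableRead);
            try
            {
                new SqlCommand(
                    "UPDATE Products SET UnitPrice = UnitPrice * 1.1 WHERE CategoryID = 1",
                    con, tran).ExecuteNonQuery();

                // Bookmark the state reached so far.
                tran.Save("AfterFirstUpdate");

                new SqlCommand(
                    "UPDATE Products SET UnitPrice = UnitPrice * 1.1 WHERE CategoryID = 2",
                    con, tran).ExecuteNonQuery();

                // Undo only the second update; the transaction itself stays open.
                tran.Rollback("AfterFirstUpdate");

                // Commits the first update alone.
                tran.Commit();
            }
            catch
            {
                tran.Rollback();
                throw;
            }
        }
    }
}
```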

Provider-Agnostic Code

Creating the Factory

The basic idea of the factory model is that you use a single factory object to create every other type of provider-specific object you need. You can then interact with these provider-specific objects in a completely generic way, through a set of common base classes.

The factory class is itself provider-specific. For example, the SQL Server provider includes a class named System.Data.SqlClient.SqlClientFactory. The Oracle provider uses System.Data.OracleClient.OracleClientFactory. At first glance, this might seem to stop you from writing provider-agnostic code. However, it turns out that there’s a completely standardized class that’s designed to dynamically find and create the factory you need. This class is System.Data.Common.DbProviderFactories. It provides a static GetFactory() method that returns the factory you need based on the provider name.

For example, here’s the code that uses DbProviderFactories to get the SqlClientFactory:

string factory = "System.Data.SqlClient";
DbProviderFactory provider = DbProviderFactories.GetFactory(factory);

Even though the DbProviderFactories class returns a strongly typed SqlClientFactory object, you shouldn’t treat it as such. Instead, your code should access it as a DbProviderFactory instance. That’s because all factories inherit from DbProviderFactory. If you use only the DbProviderFactory members, you can write code that works with any factory.

The weak point in the code snippet shown previously is that you need to pass a string that identifies the provider to the DbProviderFactories.GetFactory() method. You would typically read this from an application setting in the web.config file. That way, you can write completely database-agnostic code and switch your application over to another provider simply by modifying a single setting.

Creating Objects with the Factory

Once you have a factory, you can create other objects, such as Connection and Command instances, using the DbProviderFactory.CreateXxx() methods. For example, the CreateConnection() method returns the Connection object for your data provider. Once again, you must assume you don’t know what provider you’ll be using, so you can interact with the objects the factory creates only through a standard base class.
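Putting the pieces together, a provider-agnostic helper might look like the following sketch. FactorySketch and BuildCommand() are hypothetical names; only the base classes from System.Data.Common appear in the code, so the same method works for any registered provider.

```csharp
using System;
using System.Data.Common;

public static class FactorySketch
{
    // Creates a connection and a command without naming any provider-specific class.
    public static DbCommand BuildCommand(string providerName, string connectionString, string sql)
    {
        DbProviderFactory factory = DbProviderFactories.GetFactory(providerName);

        DbConnection con = factory.CreateConnection();
        con.ConnectionString = connectionString;

        DbCommand cmd = factory.CreateCommand();
        cmd.Connection = con;
        cmd.CommandText = sql;
        return cmd;
    }

    public static void Main()
    {
        DbCommand cmd = BuildCommand("System.Data.SqlClient",
            "Data Source=localhost; Initial Catalog=Northwind; Integrated Security=SSPI",
            "SELECT * FROM Employees");
        // The concrete type depends entirely on the provider name passed in.
        Console.WriteLine(cmd.GetType().FullName);
    }
}
```

In a real page, the provider name and connection string would typically come from web.config rather than being hard-coded as they are in Main().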