对网上各处寻找reinforcement learning的实践环节感到无力,尤其是对open ai gym的介绍上。cnblogs、github、csdn、zhuanlan.zhihu、公众号、google、youtube等等,都非常的零碎。公开课上主要是理论,对代码实现讲的很少。对于像我这样的新手来说简直两眼一抹黑,无从下手的感觉。

目前我自己在用的学习材料:

1,官方文档

https://gym.openai.com/docs/

2,知乎专栏

https://zhuanlan.zhihu.com/reinforce(等等)

3,github

https://github.com/openai/gym

https://github.com/dennybritz/reinforcement-learning

4,git blog、youtube

莫烦python

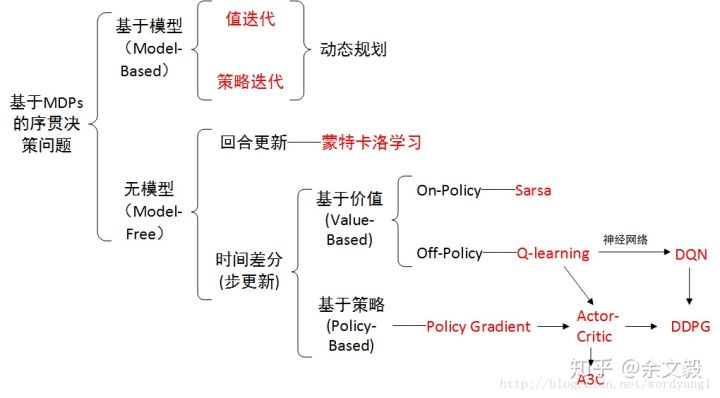

在此处作为一个整理工具,放一些学习材料中的重要知识点和我自己的实现。大致的计划是,先实现一些Silver课程上的基本算法或者gym里的案例控制,后面再学deep learning和DRL,这个顺序。

4/9/2018 实现iterative policy evaluation用于grid world

学习材料:

https://zhuanlan.zhihu.com/p/28084990

我的代码:(我的gridworld问题和专栏里面有点不一样,我这里出口只有右下角一个,并且我用的是table和矩阵运算的形式来实现的)

https://github.com/ysgclight/RL_Homework/blob/master/4x4gridworld.py

4/10/2018 从零开始学习gym的ABC

1,重要知识点:

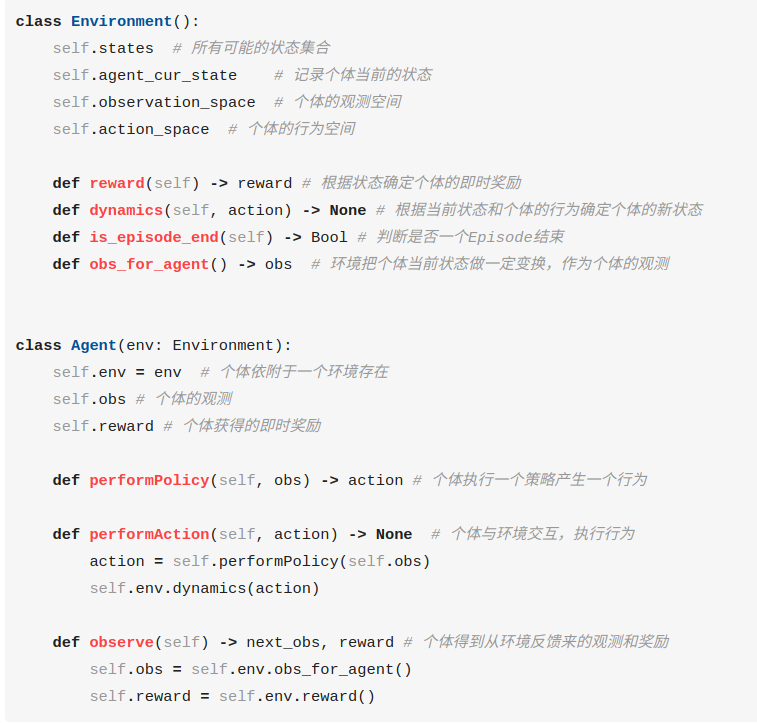

https://zhuanlan.zhihu.com/p/28086233

一个小问题:env和space到底有什么区别啊?

2,

mountain_car.py中对于观测空间和行为空间描述

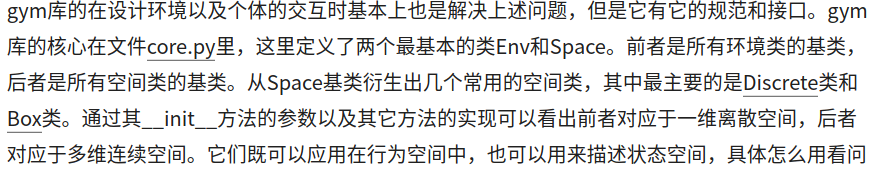

core.py中有一以下重要的class

class Env(object): """The main OpenAI Gym class. It encapsulates an environment with arbitrary behind-the-scenes dynamics. An environment can be partially or fully observed. The main API methods that users of this class need to know are: step reset render close seed And set the following attributes: action_space: The Space object corresponding to valid actions observation_space: The Space object corresponding to valid observations reward_range: A tuple corresponding to the min and max possible rewards Note: a default reward range set to [-inf,+inf] already exists. Set it if you want a narrower range. The methods are accessed publicly as "step", "reset", etc.. The non-underscored versions are wrapper methods to which we may add functionality over time. """

def step(self, action): """Run one timestep of the environment's dynamics. When end of episode is reached, you are responsible for calling `reset()` to reset this environment's state. Accepts an action and returns a tuple (observation, reward, done, info). Args: action (object): an action provided by the environment Returns: observation (object): agent's observation of the current environment reward (float) : amount of reward returned after previous action done (boolean): whether the episode has ended, in which case further step() calls will return undefined results info (dict): contains auxiliary diagnostic information (helpful for debugging, and sometimes learning) """ raise NotImplementedError def reset(self): """Resets the state of the environment and returns an initial observation. Returns: observation (object): the initial observation of the space. """ raise NotImplementedError def render(self, mode='human'): """Renders the environment. The set of supported modes varies per environment. (And some environments do not support rendering at all.) By convention, if mode is: - human: render to the current display or terminal and return nothing. Usually for human consumption. - rgb_array: Return an numpy.ndarray with shape (x, y, 3), representing RGB values for an x-by-y pixel image, suitable for turning into a video. - ansi: Return a string (str) or StringIO.StringIO containing a terminal-style text representation. The text can include newlines and ANSI escape sequences (e.g. for colors). Note: Make sure that your class's metadata 'render.modes' key includes the list of supported modes. It's recommended to call super() in implementations to use the functionality of this method. Args: mode (str): the mode to render with close (bool): close all open renderings Example: class MyEnv(Env): metadata = {'render.modes': ['human', 'rgb_array']} def render(self, mode='human'): if mode == 'rgb_array': return np.array(...) # return RGB frame suitable for video elif mode is 'human': ... # pop up a window and render else: super(MyEnv, self).render(mode=mode) # just raise an exception """ raise NotImplementedError

class Space(object): """Defines the observation and action spaces, so you can write generic code that applies to any Env. For example, you can choose a random action. """

3,gym官方文件

envs定义游戏类型

spaces定义action和observation的抽象数据空间

4,gym envs二次开发

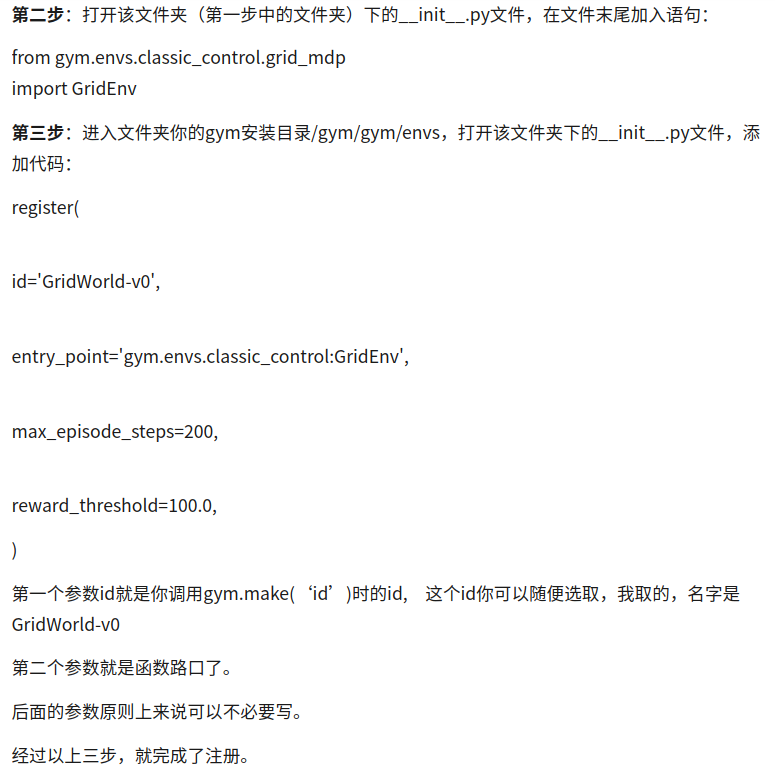

https://zhuanlan.zhihu.com/p/26985029

第零步,把gym下载位置保存到/.bashrs为PYTHONPATH这个环境变量,否则下面的操作会报错

第一步,在gym/gym/envs/classic_control下面写入新的env子类

至少包括以下函数:__init__(self),step,reset,render

这个就是最坑的地方,调试了一晚上都没完全调试出来。

其中一个因素是gym改版了,而这个教程里的代码是旧的,所以运行会报错!

注意,我进行了以下修改:

*添加register的时候,括号的内容加了tab缩进

*GridEnv里面的__init__函数,加一个self.seed(),并且copy了cartpole.py里的def seed函数定义。开头import了seeding进来,参考cartpole那样

*registration.py里面把174-191行的def patch_deprecated_methods(env)屏蔽了

*grid_mdp.py所有的主要函数都去掉了开头的_符号

*terminal退出当前的python命令,再重进,就可以在terminal调用import gym,定义env=新加入的GridEnv-v0,然后reset,render执行,不会报错了,render也生效了。但是quit之后会提示TypeError: 'NoneType' object is not iterable。

*在其他文件下调用执行相同的程序,不显示render效果,只有上面的那个typeerror。

*在vscode里执行官方代码的时候

https://zhuanlan.zhihu.com/p/36506567