master ubuntu 10.10.16.82

worker cloud 10.10.16.47

root@cloud:~# apt-get install -y kubeadm-1.18.1 kubectl-1.18.1
Reading package lists... Done
Building dependency tree
Reading state information... Done
E: Unable to locate package kubeadm-1.18.1
E: Couldn't find any package by glob 'kubeadm-1.18.1'
E: Couldn't find any package by regex 'kubeadm-1.18.1'
E: Unable to locate package kubectl-1.18.1
E: Couldn't find any package by glob 'kubectl-1.18.1'
E: Couldn't find any package by regex 'kubectl-1.18.1'
root@cloud:~#

root@cloud:~# cat /etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.huaweicloud.com/kubernetes/apt/ kubernetes-xenial main
root@cloud:~#


curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

apt-cache madison kubeadm

root@cloud:~# apt-get install -y kubeadm=1.18.1-00 kubectl=1.18.1-00
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following package was automatically installed and is no longer required:
  libusb-0.1-4
Use 'apt autoremove' to remove it.
The following additional packages will be installed:
  conntrack cri-tools kubelet kubernetes-cni
The following NEW packages will be installed:
  conntrack cri-tools kubeadm kubectl kubelet kubernetes-cni
0 upgraded, 6 newly installed, 0 to remove and 259 not upgraded.
Need to get 62.1 MB of archives.
After this operation, 285 MB of additional disk space will be used.
Get:1 https://mirrors.huaweicloud.com/kubernetes/apt kubernetes-xenial/main arm64 cri-tools arm64 1.13.0-01 [7,964 kB]
Get:2 http://us.ports.ubuntu.com/ubuntu-ports bionic/main arm64 conntrack arm64 1:1.4.4+snapshot20161117-6ubuntu2 [27.3 kB]
Get:3 https://mirrors.huaweicloud.com/kubernetes/apt kubernetes-xenial/main arm64 kubernetes-cni arm64 0.8.7-00 [23.1 MB]
Get:4 https://mirrors.huaweicloud.com/kubernetes/apt kubernetes-xenial/main arm64 kubelet arm64 1.21.1-00 [16.3 MB]
Get:5 https://mirrors.huaweicloud.com/kubernetes/apt kubernetes-xenial/main arm64 kubectl arm64 1.18.1-00 [7,623 kB]
Get:6 https://mirrors.huaweicloud.com/kubernetes/apt kubernetes-xenial/main arm64 kubeadm arm64 1.18.1-00 [7,077 kB]
Fetched 62.1 MB in 2s (35.7 MB/s)
Selecting previously unselected package conntrack.
(Reading database ... 128945 files and directories currently installed.)
Preparing to unpack .../0-conntrack_1%3a1.4.4+snapshot20161117-6ubuntu2_arm64.deb ...
Unpacking conntrack (1:1.4.4+snapshot20161117-6ubuntu2) ...
Selecting previously unselected package cri-tools.
Preparing to unpack .../1-cri-tools_1.13.0-01_arm64.deb ...
Unpacking cri-tools (1.13.0-01) ...
Selecting previously unselected package kubernetes-cni.
Preparing to unpack .../2-kubernetes-cni_0.8.7-00_arm64.deb ...
Unpacking kubernetes-cni (0.8.7-00) ...
Selecting previously unselected package kubelet.
Preparing to unpack .../3-kubelet_1.21.1-00_arm64.deb ...
Unpacking kubelet (1.21.1-00) ...
Selecting previously unselected package kubectl.
Preparing to unpack .../4-kubectl_1.18.1-00_arm64.deb ...
Unpacking kubectl (1.18.1-00) ...
Selecting previously unselected package kubeadm.
Preparing to unpack .../5-kubeadm_1.18.1-00_arm64.deb ...
Unpacking kubeadm (1.18.1-00) ...
Setting up conntrack (1:1.4.4+snapshot20161117-6ubuntu2) ...
Setting up kubernetes-cni (0.8.7-00) ...
Setting up cri-tools (1.13.0-01) ...
Setting up kubelet (1.21.1-00) ...
Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /lib/systemd/system/kubelet.service.
Setting up kubectl (1.18.1-00) ...
Processing triggers for man-db (2.8.3-2ubuntu0.1) ...
Setting up kubeadm (1.18.1-00) ...
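The install log above shows kubelet being pulled in as a dependency at 1.21.1-00 while kubeadm and kubectl were pinned to 1.18.1-00, so the node's kubelet ends up newer than the rest of the cluster. A minimal sketch of pinning all three packages to one version follows. The helper name `pinned_args` is made up for illustration, and the script only prints the commands so they can be reviewed before running.

```shell
#!/bin/sh
# pinned_args VERSION PKG... -> prints "PKG=VERSION" for each package,
# space separated. Hypothetical helper, not part of apt.
pinned_args() (
  version="$1"; shift
  out=""
  for pkg in "$@"; do
    out="${out:+$out }${pkg}=${version}"
  done
  printf '%s\n' "$out"
)

# Version string in the form listed by `apt-cache madison kubeadm`.
K8S_APT_VERSION="1.18.1-00"

# Print the commands instead of running them.
echo "apt-get install -y $(pinned_args "$K8S_APT_VERSION" kubelet kubeadm kubectl)"
echo "apt-mark hold kubelet kubeadm kubectl"
```

`apt-mark hold` keeps a later `apt-get upgrade` from moving the packages off the pinned version.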

CentOS

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

[root@bogon ~]# cat /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-aarch64/
#baseurl=http://mirrors.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-aarch64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
[root@bogon ~]#
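The repo path has to match the machine architecture: the heredoc uses `kubernetes-el7-x86_64`, while the file actually in use on this arm64 host points at `kubernetes-el7-aarch64`. A small sketch that derives the path from `uname -m` could look like this. The function name `k8s_repo_baseurl` is hypothetical, and only the two architectures that appear in these notes are handled.

```shell
#!/bin/sh
# k8s_repo_baseurl ARCH -> prints the mirror path for that architecture.
# Hypothetical helper; returns non-zero for architectures not covered here.
k8s_repo_baseurl() {
  case "$1" in
    x86_64|aarch64)
      printf '%s\n' "http://mirrors.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-$1" ;;
    *)
      echo "unsupported architecture: $1" >&2
      return 1 ;;
  esac
}

# Print the baseurl line for the current machine, if it is one of the two.
if url="$(k8s_repo_baseurl "$(uname -m)")"; then
  echo "baseurl=$url"
fi
```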

[root@bogon ~]# yum install -y kubelet-1.18.1-0 kubeadm-1.18.1-0 kubectl-1.18.1-0
Loaded plugins: fastestmirror, langpacks
Repository epel is listed more than once in the configuration
Repository epel-debuginfo is listed more than once in the configuration
Repository epel-source is listed more than once in the configuration
Loading mirror speeds from cached hostfile
 * centos-qemu-ev: mirror.worria.com
 * epel: fedora.ipserverone.com
Resolving Dependencies
--> Running transaction check
---> Package kubeadm.aarch64 0:1.18.1-0 will be installed
--> Processing Dependency: kubernetes-cni >= 0.7.5 for package: kubeadm-1.18.1-0.aarch64
---> Package kubectl.aarch64 0:1.18.1-0 will be installed
---> Package kubelet.aarch64 0:1.18.1-0 will be installed
--> Running transaction check
---> Package kubernetes-cni.aarch64 0:0.8.7-0 will be installed
--> Finished Dependency Resolution
Dependencies Resolved
================================================================================
 Package            Arch        Version      Repository     Size
================================================================================
Installing:
 kubeadm            aarch64     1.18.1-0     kubernetes     7.6 M
 kubectl            aarch64     1.18.1-0     kubernetes     8.3 M
 kubelet            aarch64     1.18.1-0     kubernetes      18 M
Installing for dependencies:
 kubernetes-cni     aarch64     0.8.7-0      kubernetes      32 M
Transaction Summary
================================================================================
Install  3 Packages (+1 Dependent package)

Master node

# View the admin secret
kubectl get secret --all-namespaces | grep admin

root@ubuntu:~# kubeadm token list
root@ubuntu:~# kubectl get secret --all-namespaces | grep admin
root@ubuntu:~#

root@ubuntu:~# kubeadm token create
W0617 19:18:56.243540 29270 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
er88xl.xrb7hmi2auqvutex
root@ubuntu:~# kubectl get secret --all-namespaces | grep admin
root@ubuntu:~# kubeadm token list
TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION   EXTRA GROUPS
er88xl.xrb7hmi2auqvutex   23h   2021-06-18T19:18:56+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
root@ubuntu:~#

root@ubuntu:~# kubectl get secret --all-namespaces | grep admin
root@ubuntu:~# kubeadm token list
TOKEN                     TTL   EXPIRES                     USAGES                   DESCRIPTION   EXTRA GROUPS
er88xl.xrb7hmi2auqvutex   23h   2021-06-18T19:18:56+08:00   authentication,signing   <none>        system:bootstrappers:kubeadm:default-node-token
root@ubuntu:~# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
982403e601ada0d2104685b295cbeb45caa25cb81998680affe5b490e4afa9ef
root@ubuntu:~#
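The token and the CA certificate hash gathered above are the two inputs of `kubeadm join`. A sketch of assembling the command by hand follows, assuming the API server listens on 10.10.16.82:6443 as in the join output in these notes. `ca_cert_hash` and `join_command` are illustrative helper names, and the hash pipeline is the one used above.

```shell
#!/bin/sh
# ca_cert_hash CERT -> SHA-256 of the CA public key (same pipeline as above).
ca_cert_hash() {
  openssl x509 -pubkey -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# join_command ENDPOINT TOKEN HASH -> prints the worker-side join command.
join_command() {
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
    "$1" "$2" "$3"
}

# Only runs on a control-plane node where the CA certificate and kubeadm exist.
CA=/etc/kubernetes/pki/ca.crt
if [ -r "$CA" ] && command -v kubeadm >/dev/null 2>&1; then
  join_command "10.10.16.82:6443" "$(kubeadm token create)" "$(ca_cert_hash "$CA")"
fi
```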

The steps above are tedious; a single command does everything:

kubeadm token create --print-join-command

Second method:

token=$(kubeadm token generate)

kubeadm token create $token --print-join-command --ttl=0

root@cloud:~# kubeadm join 10.10.16.82:6443 --token er88xl.xrb7hmi2auqvutex --discovery-token-ca-cert-hash sha256:982403e601ada0d2104685b295cbeb45caa25cb81998680affe5b490e4afa9ef
W0617 19:21:57.992816 406386 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
[preflight] Running pre-flight checks
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.
Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
root@cloud:~#

root@ubuntu:~# kubectl get nodes
NAME     STATUS     ROLES    AGE    VERSION
cloud    NotReady   <none>   62s    v1.21.1
ubuntu   Ready      master   244d   v1.18.1
root@ubuntu:~#

Update /etc/hosts

root@ubuntu:~# vi /etc/hosts
127.0.0.1 localhost
10.10.16.82 ubuntu
10.10.16.47 cloud
127.0.0.1 ubuntu

root@ubuntu:~# ping cloud
PING cloud (10.10.16.47) 56(84) bytes of data.
64 bytes from cloud (10.10.16.47): icmp_seq=1 ttl=64 time=0.162 ms
64 bytes from cloud (10.10.16.47): icmp_seq=2 ttl=64 time=0.570 ms
^C
--- cloud ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 0.162/0.366/0.570/0.204 ms
root@ubuntu:~#
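Editing the hosts file by hand on every node is easy to get wrong. A minimal sketch of adding the entries idempotently follows. `add_host_entry` is a hypothetical helper, and the demo writes to a scratch file rather than `/etc/hosts`.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME -> appends "IP NAME" unless a line for IP
# already lists NAME. Hypothetical helper for illustration.
add_host_entry() {
  file="$1"; ip="$2"; name="$3"
  # awk exits 0 only when an exact IP/NAME pair is already present.
  if awk -v ip="$ip" -v name="$name" \
       '$1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo on a scratch file; point HOSTS_FILE at /etc/hosts to apply for real.
HOSTS_FILE="$(mktemp)"
add_host_entry "$HOSTS_FILE" 10.10.16.82 ubuntu
add_host_entry "$HOSTS_FILE" 10.10.16.47 cloud
add_host_entry "$HOSTS_FILE" 10.10.16.47 cloud   # second call is a no-op
cat "$HOSTS_FILE"
```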

root@cloud:~# journalctl -f -u kubelet
-- Logs begin at Tue 2020-10-20 19:26:58 CST. --
Jun 17 19:28:21 cloud kubelet[406675]: I0617 19:28:21.732112 406675 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Jun 17 19:28:26 cloud kubelet[406675]: E0617 19:28:26.235678 406675 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"
Jun 17 19:28:26 cloud kubelet[406675]: I0617 19:28:26.732515 406675 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Jun 17 19:28:31 cloud kubelet[406675]: E0617 19:28:31.276277 406675 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"
Jun 17 19:28:31 cloud kubelet[406675]: I0617 19:28:31.733131 406675 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Jun 17 19:28:32 cloud kubelet[406675]: E0617 19:28:32.391790 406675 reflector.go:138] k8s.io/client-go/informers/factory.go:134: Failed to watch *v1.RuntimeClass: failed to list *v1.RuntimeClass: the server could not find the requested resource
Jun 17 19:28:32 cloud kubelet[406675]: E0617 19:28:32.489962 406675 aws_credentials.go:77] while getting AWS credentials NoCredentialProviders: no valid providers in chain. Deprecated.
Jun 17 19:28:32 cloud kubelet[406675]: For verbose messaging see aws.Config.CredentialsChainVerboseErrors
Jun 17 19:28:32 cloud kubelet[406675]: E0617 19:28:32.503570 406675 aws_credentials.go:77] while getting AWS credentials NoCredentialProviders: no valid providers in chain. Deprecated.
Jun 17 19:28:32 cloud kubelet[406675]: For verbose messaging see aws.Config.CredentialsChainVerboseErrors
Jun 17 19:28:36 cloud kubelet[406675]: E0617 19:28:36.314291 406675 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"
Jun 17 19:28:36 cloud kubelet[406675]: I0617 19:28:36.733908 406675 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Jun 17 19:28:41 cloud kubelet[406675]: E0617 19:28:41.351254 406675 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"
Jun 17 19:28:41 cloud kubelet[406675]: I0617 19:28:41.734611 406675 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Jun 17 19:28:46 cloud kubelet[406675]: E0617 19:28:46.384032 406675 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"
Jun 17 19:28:46 cloud kubelet[406675]: I0617 19:28:46.734924 406675 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.577800 406675 remote_runtime.go:116] "RunPodSandbox from runtime service failed" err="rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.2": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)"
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.577901 406675 kuberuntime_sandbox.go:68] "Failed to create sandbox for pod" err="rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.2": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)" pod="kube-system/kube-proxy-nh2cp"
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.577948 406675 kuberuntime_manager.go:790] "CreatePodSandbox for pod failed" err="rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.2": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)" pod="kube-system/kube-proxy-nh2cp"
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.578067 406675 pod_workers.go:190] "Error syncing pod, skipping" err="failed to "CreatePodSandbox" for "kube-proxy-nh2cp_kube-system(20b8a4ec-96e5-419f-8b6e-ff6137017318)" with CreatePodSandboxError: "Failed to create sandbox for pod \"kube-proxy-nh2cp_kube-system(20b8a4ec-96e5-419f-8b6e-ff6137017318)\": rpc error: code = Unknown desc = failed pulling image \"k8s.gcr.io/pause:3.2\": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)"" pod="kube-system/kube-proxy-nh2cp" podUID=20b8a4ec-96e5-419f-8b6e-ff6137017318
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.661054 406675 remote_runtime.go:116] "RunPodSandbox from runtime service failed" err="rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.2": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)"
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.661116 406675 kuberuntime_sandbox.go:68] "Failed to create sandbox for pod" err="rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.2": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)" pod="kube-system/antrea-agent-l5zg5"
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.661154 406675 kuberuntime_manager.go:790] "CreatePodSandbox for pod failed" err="rpc error: code = Unknown desc = failed pulling image "k8s.gcr.io/pause:3.2": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)" pod="kube-system/antrea-agent-l5zg5"
Jun 17 19:28:47 cloud kubelet[406675]: E0617 19:28:47.661248 406675 pod_workers.go:190] "Error syncing pod, skipping" err="failed to "CreatePodSandbox" for "antrea-agent-l5zg5_kube-system(dbbff09a-87af-43e7-8147-04f809d8c2ff)" with CreatePodSandboxError: "Failed to create sandbox for pod \"antrea-agent-l5zg5_kube-system(dbbff09a-87af-43e7-8147-04f809d8c2ff)\": rpc error: code = Unknown desc = failed pulling image \"k8s.gcr.io/pause:3.2\": Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)"" pod="kube-system/antrea-agent-l5zg5" podUID=dbbff09a-87af-43e7-8147-04f809d8c2ff
Jun 17 19:28:51 cloud kubelet[406675]: E0617 19:28:51.422587 406675 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized"
Jun 17 19:28:51 cloud kubelet[406675]: I0617 19:28:51.736003 406675 cni.go:239] "Unable to update cni config" err="no networks found in /etc/cni/net.d"
^C

Missing images

root@cloud:~# docker pull mirrorgcrio/pause-arm64:3.2
3.2: Pulling from mirrorgcrio/pause-arm64
84f9968a3238: Pull complete
Digest: sha256:31d3efd12022ffeffb3146bc10ae8beb890c80ed2f07363515580add7ed47636
Status: Downloaded newer image for mirrorgcrio/pause-arm64:3.2
docker.io/mirrorgcrio/pause-arm64:3.2
root@cloud:~# docker pull coredns/coredns:coredns-arm64
coredns-arm64: Pulling from coredns/coredns
c6568d217a00: Pull complete
f9e3dfb6419e: Pull complete
Digest: sha256:8f50c3cfb9de7707b988d4e00d56fe9aa35ceec5246f5891a9e82b5f5aa26897
Status: Downloaded newer image for coredns/coredns:coredns-arm64
docker.io/coredns/coredns:coredns-arm64
root@cloud:~# docker pull mirrorgcrio/kube-proxy-arm64:v1.18.1
v1.18.1: Pulling from mirrorgcrio/kube-proxy-arm64
ed2e7fd67416: Pull complete
d033d9855b96: Pull complete
7bd91d4a9747: Pull complete
6c3c2821ac4d: Pull complete
b8ac04191d92: Pull complete
355857a7a906: Pull complete
ea9711a0e51a: Pull complete
Digest: sha256:1cd85e909859001b68022f269c6ce223370cdb7889d79debd9cb87626a8280fb
Status: Downloaded newer image for mirrorgcrio/kube-proxy-arm64:v1.18.1
docker.io/mirrorgcrio/kube-proxy-arm64:v1.18.1
root@cloud:~# docker tag coredns/coredns:coredns-arm64 k8s.gcr.io/coredns:1.6.7
root@cloud:~# docker tag mirrorgcrio/pause-arm64:3.2 k8s.gcr.io/pause:3.2
root@cloud:~# docker tag mirrorgcrio/kube-proxy-arm64:v1.18.1 k8s.gcr.io/kube-proxy:v1.18.1
root@cloud:~#

root@cloud:~# docker pull registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm64
v0.12.0-arm64: Pulling from yijindami/flannel
8fa90b21c985: Pull complete
c4b41df13d81: Pull complete
a73758d03943: Pull complete
d09921139b63: Pull complete
17ca61374c07: Pull complete
6da2b4782d50: Pull complete
Digest: sha256:a2f5081b71ee4688d0c7693d7e5f2f95e9eea5ea3b4147a12179f55ede42c185
Status: Downloaded newer image for registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm64
registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm64
root@cloud:~# docker tag registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm64 quay.io/coreos/flannel:v0.12.0-arm64
root@cloud:~#

root@ubuntu:~# kubectl get nodes
NAME     STATUS   ROLES    AGE    VERSION
cloud    Ready    <none>   15m    v1.21.1
ubuntu   Ready    master   244d   v1.18.1
root@ubuntu:~#

Images

docker pull mirrorgcrio/pause-arm64:3.2
docker pull mirrorgcrio/kube-proxy-arm64:v1.18.1
docker pull mirrorgcrio/kube-controller-manager-arm64:v1.18.1
docker pull mirrorgcrio/kube-apiserver-arm64:v1.18.1
docker pull mirrorgcrio/kube-scheduler-arm64:v1.18.1
docker pull mirrorgcrio/etcd-arm64:3.4.3-0
docker pull coredns/coredns:coredns-arm64
docker tag mirrorgcrio/kube-apiserver-arm64:v1.18.1 k8s.gcr.io/kube-apiserver:v1.18.1
docker tag mirrorgcrio/kube-scheduler-arm64:v1.18.1 k8s.gcr.io/kube-scheduler:v1.18.1
docker tag mirrorgcrio/kube-controller-manager-arm64:v1.18.1 k8s.gcr.io/kube-controller-manager:v1.18.1
docker tag mirrorgcrio/kube-proxy-arm64:v1.18.1 k8s.gcr.io/kube-proxy:v1.18.1
docker tag mirrorgcrio/pause-arm64:3.2 k8s.gcr.io/pause:3.2
docker tag mirrorgcrio/etcd-arm64:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0
docker tag coredns/coredns:coredns-arm64 k8s.gcr.io/coredns:1.6.7
apt-get install kubeadm=1.18.1-00 kubectl=1.18.1-00 kubelet=1.18.1-00
kubeadm init --kubernetes-version=v1.18.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=14.14.18.6
------------------------------------------------------------------------
flannel images:
docker pull registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-amd64
docker pull registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm64
docker pull registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm
docker pull registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-ppc64le
docker pull registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-s390x
docker tag registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-amd64 quay.io/coreos/flannel:v0.12.0-amd64
docker tag registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm64 quay.io/coreos/flannel:v0.12.0-arm64
docker tag registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-arm quay.io/coreos/flannel:v0.12.0-arm
docker tag registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-ppc64le quay.io/coreos/flannel:v0.12.0-ppc64le
docker tag registry.cn-shanghai.aliyuncs.com/yijindami/flannel:v0.12.0-s390x quay.io/coreos/flannel:v0.12.0-s390x
kubectl create -f kube-flannel.yml
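Every pull-and-retag pair above follows the same pattern: fetch the image from a reachable mirror, then tag it with the name the cluster expects. A sketch that generates the commands from a mapping table follows. The helper name `retag_commands` is illustrative, the table holds only the worker-node images listed in these notes, and the output is printed for review (pipe it to `sh` to execute).

```shell
#!/bin/sh
# retag_commands: reads "SOURCE TARGET" pairs on stdin and prints the
# docker pull/tag commands for each pair. Blank lines and #-comments are skipped.
retag_commands() {
  while read -r src dst; do
    case "$src" in ''|'#'*) continue ;; esac
    printf 'docker pull %s\n' "$src"
    printf 'docker tag %s %s\n' "$src" "$dst"
  done
}

retag_commands <<'EOF'
# mirror image                          name expected by the cluster
mirrorgcrio/pause-arm64:3.2             k8s.gcr.io/pause:3.2
mirrorgcrio/kube-proxy-arm64:v1.18.1    k8s.gcr.io/kube-proxy:v1.18.1
coredns/coredns:coredns-arm64           k8s.gcr.io/coredns:1.6.7
EOF
```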

root@ubuntu:~# kubectl label node cloud node-role.kubernetes.io/worker=worker
node/cloud labeled
root@ubuntu:~# kubectl get nodes
NAME     STATUS   ROLES    AGE    VERSION
cloud    Ready    worker   19m    v1.21.1
ubuntu   Ready    master   244d   v1.18.1
root@ubuntu:~#

root@ubuntu:~# kubectl get nodes --show-labels
NAME     STATUS   ROLES    AGE    VERSION   LABELS
cloud    Ready    worker   38m    v1.21.1   beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,kubernetes.io/hostname=cloud,kubernetes.io/os=linux,node-role.kubernetes.io/worker=worker
ubuntu   Ready    master   244d   v1.18.1   beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,kubernetes.io/hostname=ubuntu,kubernetes.io/os=linux,node-role.kubernetes.io/master=
root@ubuntu:~#

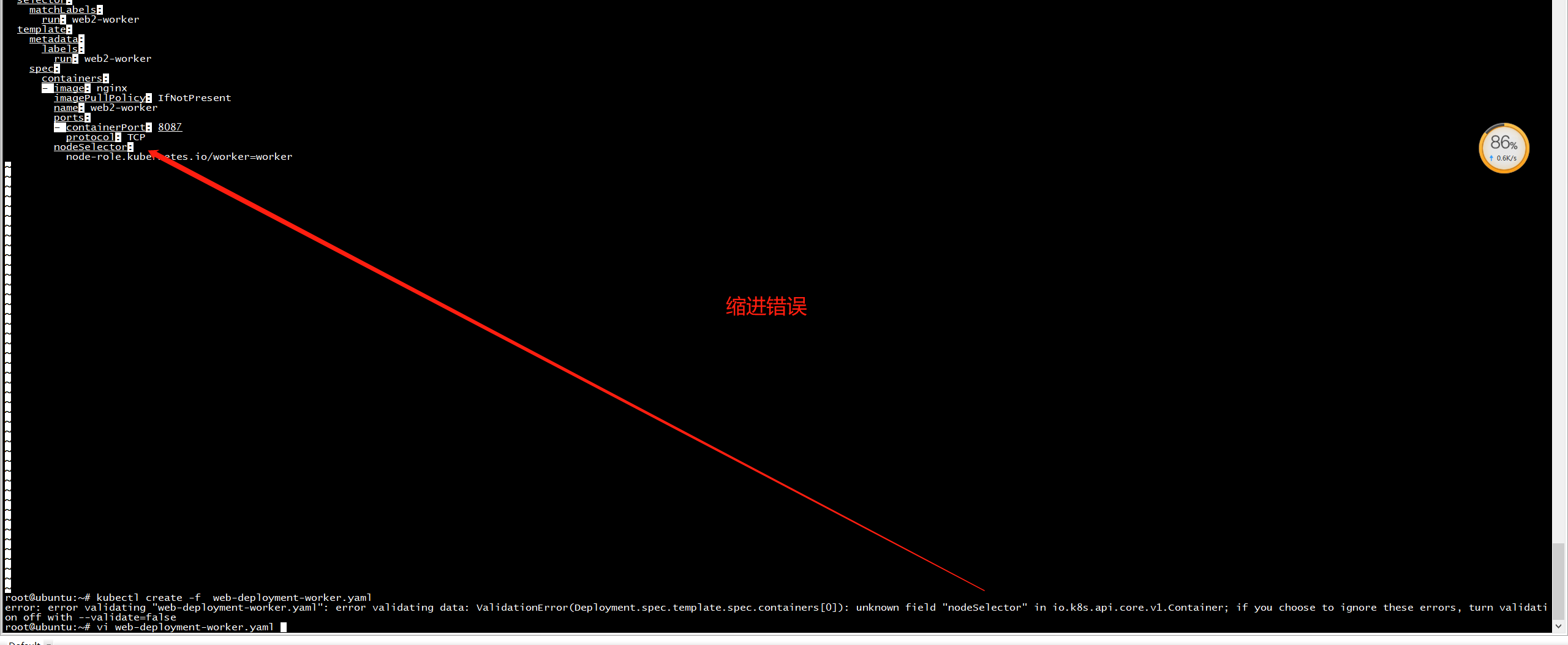

root@ubuntu:~# kubectl create -f web-deployment-worker.yaml
error: error validating "web-deployment-worker.yaml": error validating data: ValidationError(Deployment.spec.template.spec.containers[0]): unknown field "nodeSelector" in io.k8s.api.core.v1.Container; if you choose to ignore these errors, turn validation off with --validate=false

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web2-worker
  namespace: default
spec:
  selector:
    matchLabels:
      run: web2-worker
  template:
    metadata:
      labels:
        run: web2-worker
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: web2-worker
        ports:
        - containerPort: 8087
          protocol: TCP
      nodeSelector: node-role.kubernetes.io/worker=worker

root@ubuntu:~# kubectl create -f web-deployment-worker.yaml
error: error validating "web-deployment-worker.yaml": error validating data: ValidationError(Deployment.spec.template.spec.nodeSelector): invalid type for io.k8s.api.core.v1.PodSpec.nodeSelector: got "string", expected "map"; if you choose to ignore these errors, turn validation off with --validate=false
root@ubuntu:~#

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web2-worker
  namespace: default
spec:
  selector:
    matchLabels:
      run: web2-worker
  template:
    metadata:
      labels:
        run: web2-worker
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: web2-worker
        ports:
        - containerPort: 8087
          protocol: TCP
      nodeSelector:
        node-role.kubernetes.io/worker: worker
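The two validation errors come from mixing up two notations: `kubectl label` takes `key=value`, while `nodeSelector` in a manifest is a YAML map (`key: value`) that belongs to the pod `spec`, not to a container. A small sketch of converting one form to the other follows. `selector_to_yaml` is a made-up helper for illustration.

```shell
#!/bin/sh
# selector_to_yaml KEY=VALUE -> prints a nodeSelector block for a pod spec.
# Hypothetical helper; the value is quoted so an empty value stays a string.
selector_to_yaml() {
  case "$1" in
    *=*) ;;
    *) echo "expected KEY=VALUE, got: $1" >&2; return 1 ;;
  esac
  key="${1%%=*}"     # text before the first '='
  value="${1#*=}"    # text after the first '='
  printf 'nodeSelector:\n  %s: "%s"\n' "$key" "$value"
}

selector_to_yaml "node-role.kubernetes.io/worker=worker"
```

The printed block still has to be indented to the level of `containers:` inside the pod template.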

root@ubuntu:~# kubectl create -f web-deployment-worker.yaml
deployment.apps/web2-worker created
root@ubuntu:~# kubectl get pods
NAME                                READY   STATUS             RESTARTS   AGE
debian-6c44fc6956-ltsrt             0/1     CrashLoopBackOff   4898       17d
mc1                                 2/2     Running            0          17d
my-deployment-68bdbbb5cc-bbszv      0/1     ImagePullBackOff   0          36d
my-deployment-68bdbbb5cc-nrst9      0/1     ImagePullBackOff   0          36d
my-deployment-68bdbbb5cc-rlgzt      0/1     ImagePullBackOff   0          36d
my-nginx-5dc4865748-jqx54           1/1     Running            2          36d
my-nginx-5dc4865748-pcrbg           1/1     Running            2          36d
nginx                               0/1     ImagePullBackOff   0          36d
nginx-deployment-6b474476c4-r6z5b   1/1     Running            0          9d
nginx-deployment-6b474476c4-w6xh9   1/1     Running            0          9d
web2-6d784f67bf-4gqq2               1/1     Running            0          20d
web2-worker-579fdc68dd-d8t6m        1/1     Running            0          34s
root@ubuntu:~#

root@cloud:~# kubectl get pods
error: no configuration has been provided, try setting KUBERNETES_MASTER environment variable
root@cloud:~#

Copy $HOME/.kube/config from the master node to the worker node

root@cloud:~# mkdir -p $HOME/.kube
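`kubectl` on the worker fails because it has no kubeconfig to read. A sketch of the copy step follows, assuming root SSH access from the worker to the master at 10.10.16.82 (an assumption, not shown in these notes). `install_kubeconfig` is an illustrative helper that puts a fetched file in place and restricts its permissions.

```shell
#!/bin/sh
# install_kubeconfig SRC [DEST] -> copies SRC to DEST (default ~/.kube/config)
# and makes it readable by the owner only. Hypothetical helper.
install_kubeconfig() {
  src="$1"
  dest="${2:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$dest")" &&
    cp "$src" "$dest" &&
    chmod 600 "$dest"
}

# On the worker, fetch the file from the master first, for example:
#   scp root@10.10.16.82:.kube/config /tmp/master-kubeconfig
#   install_kubeconfig /tmp/master-kubeconfig
# after which `kubectl get pods` should find its configuration.
```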

Installation issues

[root@bogon ~]# kubeadm join 10.10.16.82:6443 --token er88xl.xrb7hmi2auqvutex --discovery-token-ca-cert-hash sha256:982403e601ada0d2104685b295cbeb45caa25cb81998680affe5b490e4afa9ef
W0618 17:17:51.344638 17154 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
[preflight] Running pre-flight checks
[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.7. Latest validated version: 19.03
[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
error execution phase preflight: [preflight] Some fatal errors occurred:
[ERROR Swap]: running with swap on is not supported. Please disable swap
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
To see the stack trace of this error execute with --v=5 or higher
[root@bogon ~]# swapoff -a
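`swapoff -a` only lasts until the next reboot. A sketch of also commenting out the swap entries in `/etc/fstab`, so that the setting persists, follows. `disable_swap_entries` is a hypothetical helper, and the file path is a parameter so it can be tried on a copy first.

```shell
#!/bin/sh
# disable_swap_entries FILE -> comments out every active line that contains
# a whitespace-delimited "swap" field. Already commented lines are left alone.
disable_swap_entries() {
  tmp="$(mktemp)" || return 1
  status=0
  # Write the edited copy, then overwrite FILE in place (keeps owner and mode).
  sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1" > "$tmp" &&
    cat "$tmp" > "$1" || status=1
  rm -f "$tmp"
  return "$status"
}

# Try it on a copy before touching the real file:
#   cp /etc/fstab /tmp/fstab.test && disable_swap_entries /tmp/fstab.test
#   diff /etc/fstab /tmp/fstab.test
```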

[root@bogon ~]# journalctl -f -u kubelet
-- Logs begin at Fri 2021-02-05 03:12:20 CST. --
Jun 18 17:26:59 bogon kubelet[21116]: I0618 17:26:59.729401 21116 reconciler.go:224] operationExecutor.VerifyControllerAttachedVolume started for volume "kube-proxy" (UniqueName: "kubernetes.io/configmap/8d93ca5c-867b-4675-b8ee-5af4d8586337-kube-proxy") pod "kube-proxy-p4qkx" (UID: "8d93ca5c-867b-4675-b8ee-5af4d8586337")
Jun 18 17:26:59 bogon kubelet[21116]: I0618 17:26:59.729447 21116 reconciler.go:224] operationExecutor.VerifyControllerAttachedVolume started for volume "xtables-lock" (UniqueName: "kubernetes.io/host-path/8d93ca5c-867b-4675-b8ee-5af4d8586337-xtables-lock") pod "kube-proxy-p4qkx" (UID: "8d93ca5c-867b-4675-b8ee-5af4d8586337")
Jun 18 17:26:59 bogon kubelet[21116]: I0618 17:26:59.729485 21116 reconciler.go:224] operationExecutor.VerifyControllerAttachedVolume started for volume "lib-modules" (UniqueName: "kubernetes.io/host-path/8d93ca5c-867b-4675-b8ee-5af4d8586337-lib-modules") pod "kube-proxy-p4qkx" (UID: "8d93ca5c-867b-4675-b8ee-5af4d8586337")
Jun 18 17:26:59 bogon kubelet[21116]: I0618 17:26:59.729532 21116 reconciler.go:224] operationExecutor.VerifyControllerAttachedVolume started for volume "kube-proxy-token-7xhtb" (UniqueName: "kubernetes.io/secret/8d93ca5c-867b-4675-b8ee-5af4d8586337-kube-proxy-token-7xhtb") pod "kube-proxy-p4qkx" (UID: "8d93ca5c-867b-4675-b8ee-5af4d8586337")
Jun 18 17:26:59 bogon kubelet[21116]: I0618 17:26:59.729550 21116 reconciler.go:157] Reconciler: start to sync state
Jun 18 17:27:00 bogon kubelet[21116]: W0618 17:27:00.668979 21116 pod_container_deletor.go:77] Container "1a464dcb3c92f6b53b8f3bdbb0df0038b8bf3a4a7043b10a83135c351b0e5b9a" not found in pod's containers

root@ubuntu:~# kubectl get nodes
NAME     STATUS     ROLES    AGE    VERSION
bogon    NotReady   <none>   5m8s   v1.18.1
cloud    Ready      worker   22h    v1.21.1
ubuntu   NotReady   master   245d   v1.18.1
root@ubuntu:~# kubectl get pod -n kube-system
NAME                             READY   STATUS    RESTARTS   AGE
coredns-66bff467f8-57g2p         1/1     Running   0          29m
coredns-66bff467f8-bjvn7         1/1     Running   0          29m
etcd-ubuntu                      1/1     Running   1          245d
kube-apiserver-ubuntu            1/1     Running   1          245d
kube-controller-manager-ubuntu   1/1     Running   3          245d
kube-proxy-896mz                 1/1     Running   0          245d
kube-proxy-nh2cp                 1/1     Running   0          22h
kube-proxy-p4qkx                 1/1     Running   0          16m
kube-scheduler-ubuntu            1/1     Running   5          245d
root@ubuntu:~#

coredns did not come up because no CNI plugin is installed; deploy calico first
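The kubelet log earlier reports `no networks found in /etc/cni/net.d`, which is the usual symptom of a missing CNI plugin. A quick check on a node can be sketched as follows. `has_cni_config` is an illustrative helper, and the extensions are the ones CNI configuration files normally use.

```shell
#!/bin/sh
# has_cni_config [DIR] -> returns 0 if DIR (default /etc/cni/net.d) holds
# at least one CNI configuration file, 1 otherwise. Hypothetical helper.
has_cni_config() {
  dir="${1:-/etc/cni/net.d}"
  for f in "$dir"/*.conf "$dir"/*.conflist "$dir"/*.json; do
    # An unmatched glob stays literal, so -f filters it out.
    [ -f "$f" ] && return 0
  done
  return 1
}

if has_cni_config /etc/cni/net.d; then
  echo "CNI config present"
else
  echo "no CNI config; deploy a network plugin such as calico first"
fi
```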

root@ubuntu:~# kubectl apply -f calico.yaml
configmap/calico-config configured
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node configured
clusterrolebinding.rbac.authorization.k8s.io/calico-node configured
daemonset.apps/calico-node created
serviceaccount/calico-node unchanged
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
poddisruptionbudget.policy/calico-kube-controllers created
root@ubuntu:~# kubectl get pods -n kube-system -o wide
NAME                                       READY   STATUS            RESTARTS   AGE    IP            NODE     NOMINATED NODE   READINESS GATES
calico-kube-controllers-5978c5f6b5-6kqtf   1/1     Running           0          47s    10.244.2.12   cloud    <none>           <none>
calico-node-db2m9                          0/1     PodInitializing   0          48s    10.10.16.82   ubuntu   <none>           <none>
calico-node-jt86r                          0/1     Init:0/3          0          48s    10.10.16.81   bogon    <none>           <none>
calico-node-tq8c4                          0/1     Init:2/3          0          48s    10.10.16.47   cloud    <none>           <none>
coredns-66bff467f8-57g2p                   1/1     Running           0          54m    10.244.2.4    cloud    <none>           <none>
coredns-66bff467f8-bjvn7                   1/1     Running           0          54m    10.244.2.2    cloud    <none>           <none>
etcd-ubuntu                                1/1     Running           1          245d   10.10.16.82   ubuntu   <none>           <none>
kube-apiserver-ubuntu                      1/1     Running           1          245d   10.10.16.82   ubuntu   <none>           <none>
kube-controller-manager-ubuntu             1/1     Running           3          245d   10.10.16.82   ubuntu   <none>           <none>
kube-proxy-896mz                           1/1     Running           0          245d   10.10.16.82   ubuntu   <none>           <none>
kube-proxy-nh2cp                           1/1     Running           0          22h    10.10.16.47   cloud    <none>           <none>
kube-proxy-p4qkx                           1/1     Running           0          41m    10.10.16.81   bogon    <none>           <none>
kube-scheduler-ubuntu                      1/1     Running           5          245d   10.10.16.82   ubuntu   <none>           <none>
root@ubuntu:~# kubectl get nodes
NAME     STATUS   ROLES    AGE    VERSION
bogon    Ready    <none>   31m    v1.18.1
cloud    Ready    worker   22h    v1.21.1
ubuntu   Ready    master   245d   v1.18.1
root@ubuntu:~#