7. TC routine: htb

qdisc_enqueue_root(sch_generic.h) -> qdisc_enqueue(sch_generic.h) -> htb_enqueue(sch_htb.c) -> htb_classify(sch_htb.c) -> flow_classify(cls_flow.c) -> tcf_exts_exec(pkt_cls.h) -> tcf_action_exec(act_api.c) -> tcf_act_police(act_police.c)

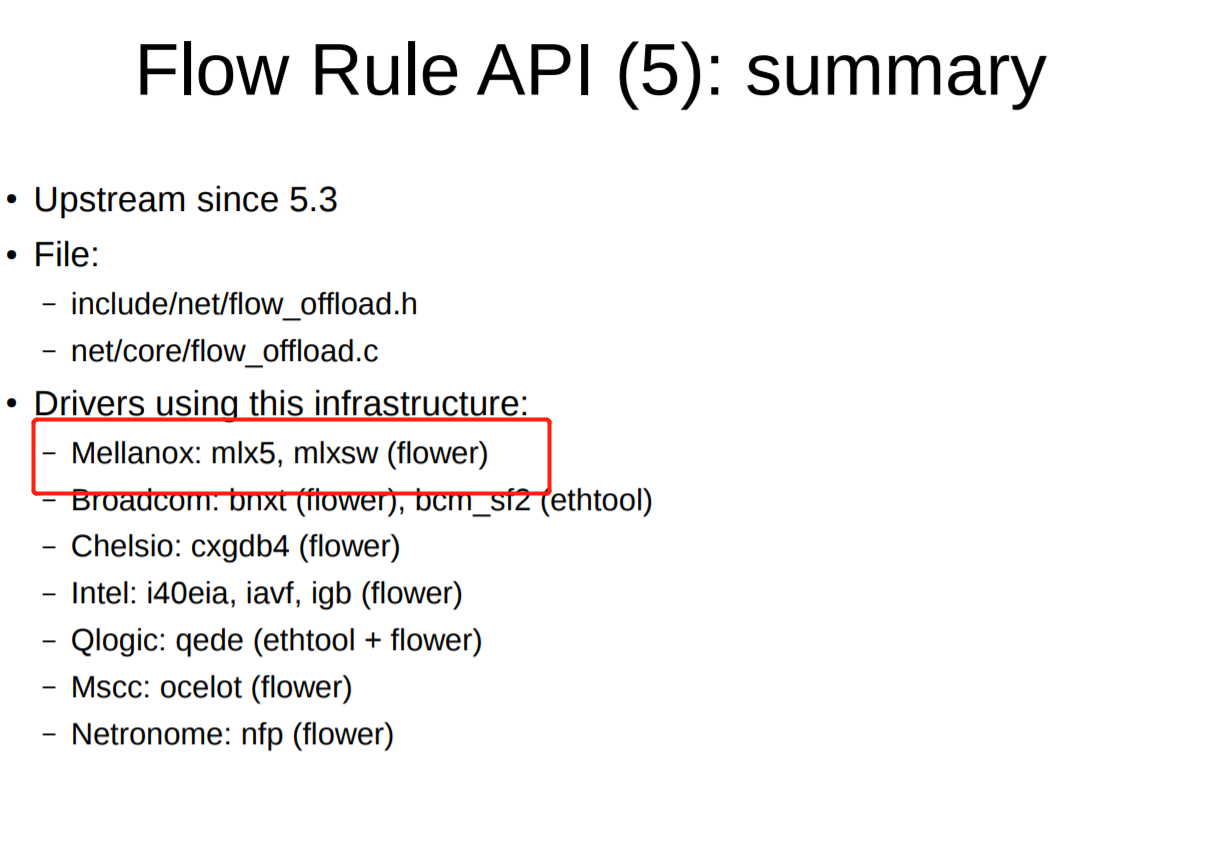

mellanox flow_indr_block_cb_register

drivers/net/ethernet/mellanox/mlx5/core/en_rep.c:837: err = __flow_indr_block_cb_register(netdev, rpriv,

include/net/flow_offload.h:396:int __flow_indr_block_cb_register(struct net_device *dev, void *cb_priv,

include/net/flow_offload.h:404:int flow_indr_block_cb_register(struct net_device *dev, void *cb_priv,

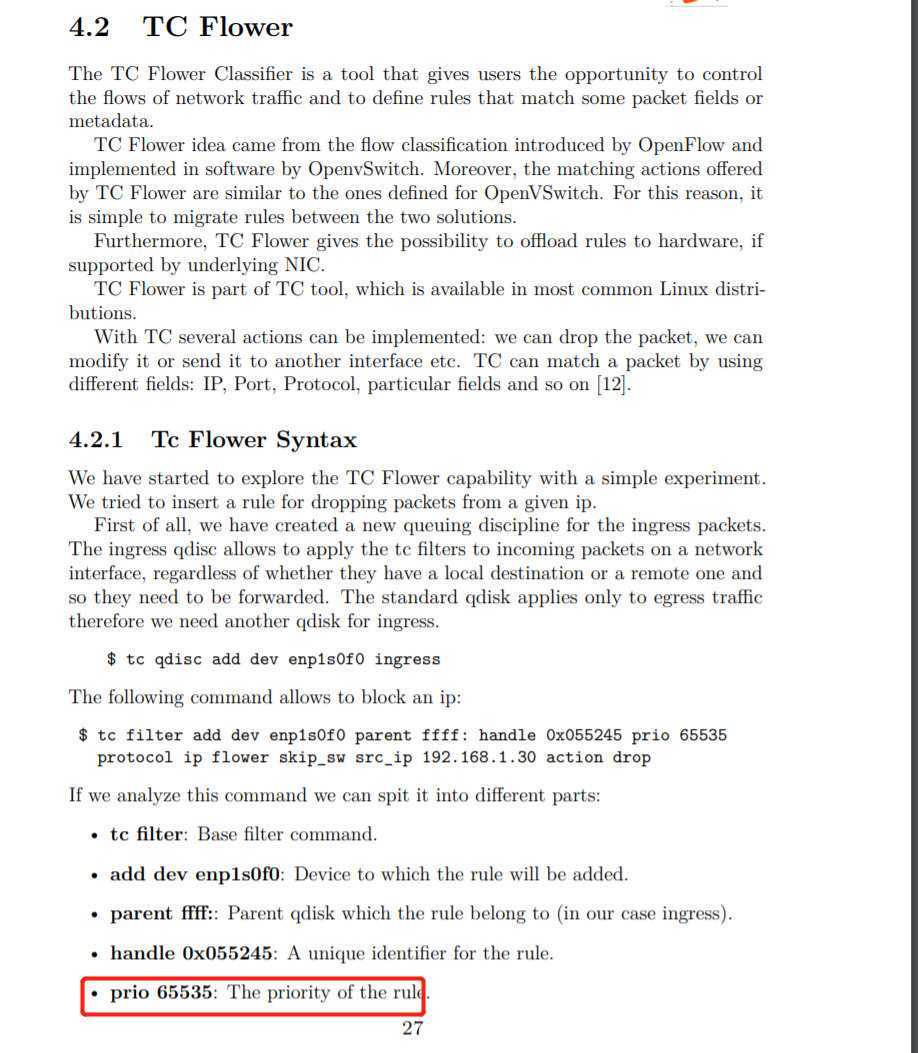

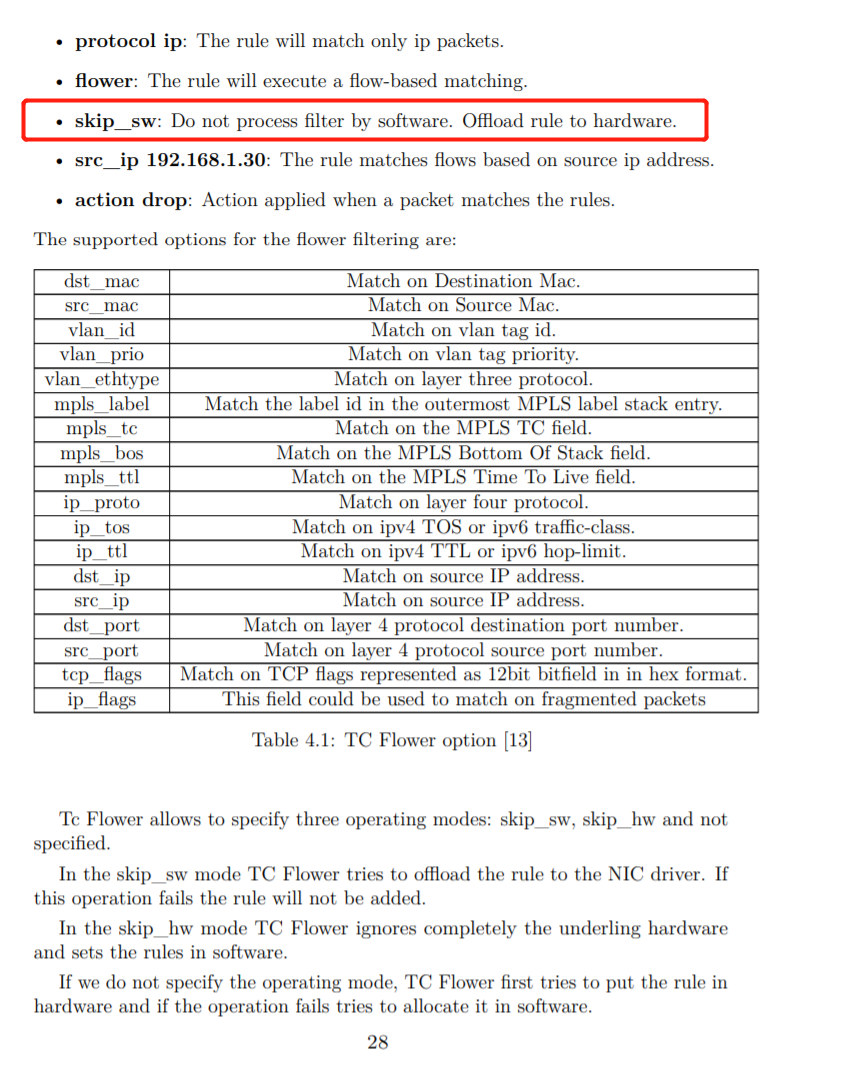

TC Flower supports three operating modes: skip_sw, skip_hw, and the default (neither flag given).

In skip_sw mode, TC Flower tries to offload the rule to the NIC driver. If that fails, the rule is not added.

In skip_hw mode, TC Flower ignores the underlying hardware completely and installs the rule in software only.

If no mode is specified, TC Flower installs the rule in software and also tries to offload it to hardware. A failed hardware offload is not reported as an error in this mode, so the rule then exists in software only.
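The mode selection can be sketched as a small user-space model. This is only an illustration, assuming the behaviour of fl_change and fl_hw_replace_filter in the listing further down: the software insert is skipped for skip_sw, and a hardware error is only propagated for skip_sw. The names `install_rule`, `flags_valid`, `SKIP_HW` and `SKIP_SW` are hypothetical; the two bit values are meant to mirror TCA_CLS_FLAGS_SKIP_HW and TCA_CLS_FLAGS_SKIP_SW.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for TCA_CLS_FLAGS_SKIP_HW / TCA_CLS_FLAGS_SKIP_SW. */
#define SKIP_HW (1u << 0)
#define SKIP_SW (1u << 1)

/* Reject unknown bits and the contradictory "skip both" combination. */
static bool flags_valid(uint32_t flags)
{
    if (flags & ~(SKIP_HW | SKIP_SW))
        return false;
    return (flags & (SKIP_HW | SKIP_SW)) != (SKIP_HW | SKIP_SW);
}

/*
 * Model of adding one rule. hw_ok says whether the driver would accept
 * the offload. Returns false if the rule is rejected; otherwise reports
 * where the rule ends up through in_sw and in_hw.
 */
static bool install_rule(uint32_t flags, bool hw_ok, bool *in_sw, bool *in_hw)
{
    assert(in_sw != NULL && in_hw != NULL);
    *in_sw = false;
    *in_hw = false;

    if (!flags_valid(flags))
        return false;

    /* Hardware is only attempted when skip_hw is not set. */
    if (!(flags & SKIP_HW))
        *in_hw = hw_ok;

    /* skip_sw: the hardware result decides success or failure. */
    if (flags & SKIP_SW)
        return *in_hw;

    /* Default and skip_hw: the software insert is what counts. */
    *in_sw = true;
    return true;
}
```

With this model, `install_rule(0, false, ...)` succeeds with the rule in software only, while `install_rule(SKIP_SW, false, ...)` fails outright.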

if (f && !tc_skip_sw(f->flags)) { /* not taken for hardware-only (skip_sw) rules */
	*res = f->res;
	return tcf_exts_exec(skb, &f->exts, res);
}

static inline bool tc_skip_hw(u32 flags)
{
	return (flags & TCA_CLS_FLAGS_SKIP_HW) ? true : false;
}

static inline bool tc_skip_sw(u32 flags)
{
	return (flags & TCA_CLS_FLAGS_SKIP_SW) ? true : false;
}

net/sched/cls_bpf.c:422: ret = tcf_exts_validate(net, tp, tb, est, &prog->exts, ovr, true,

net/sched/cls_flower.c does not call tcf_exts_validate.

tcf_exts_validate -> tcf_action_init -> tcf_action_init_1

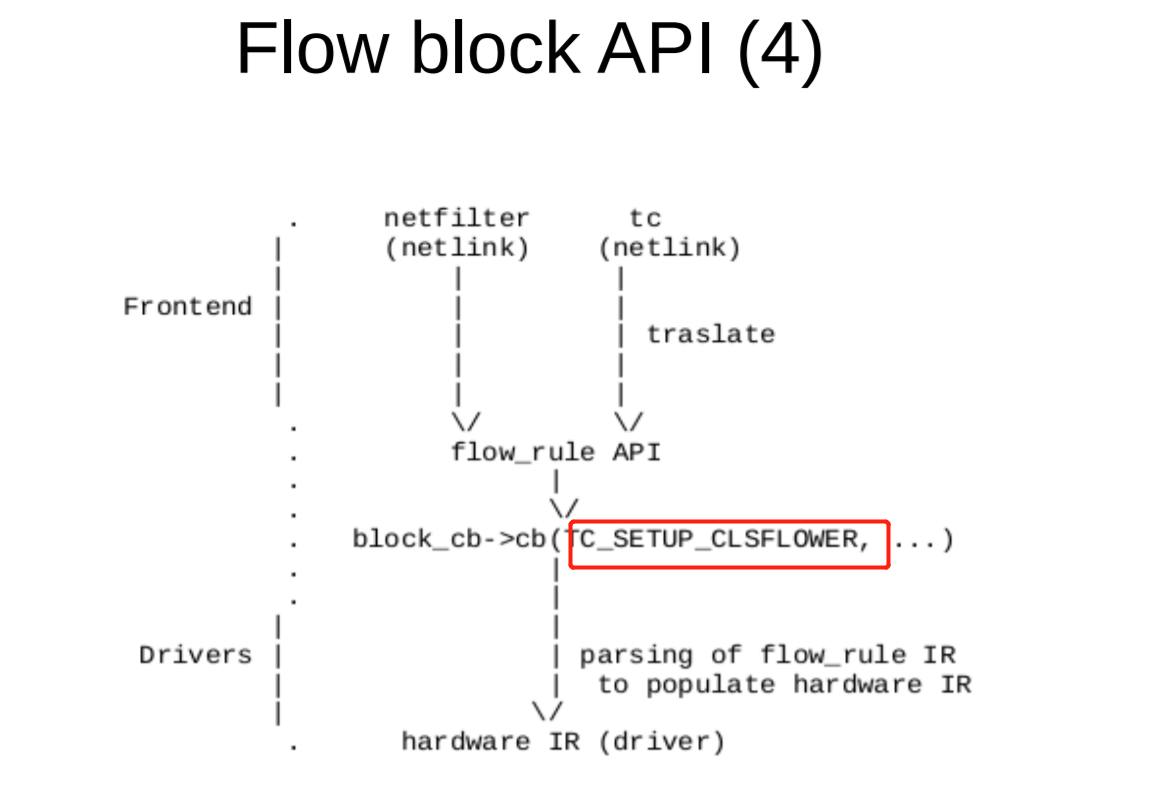

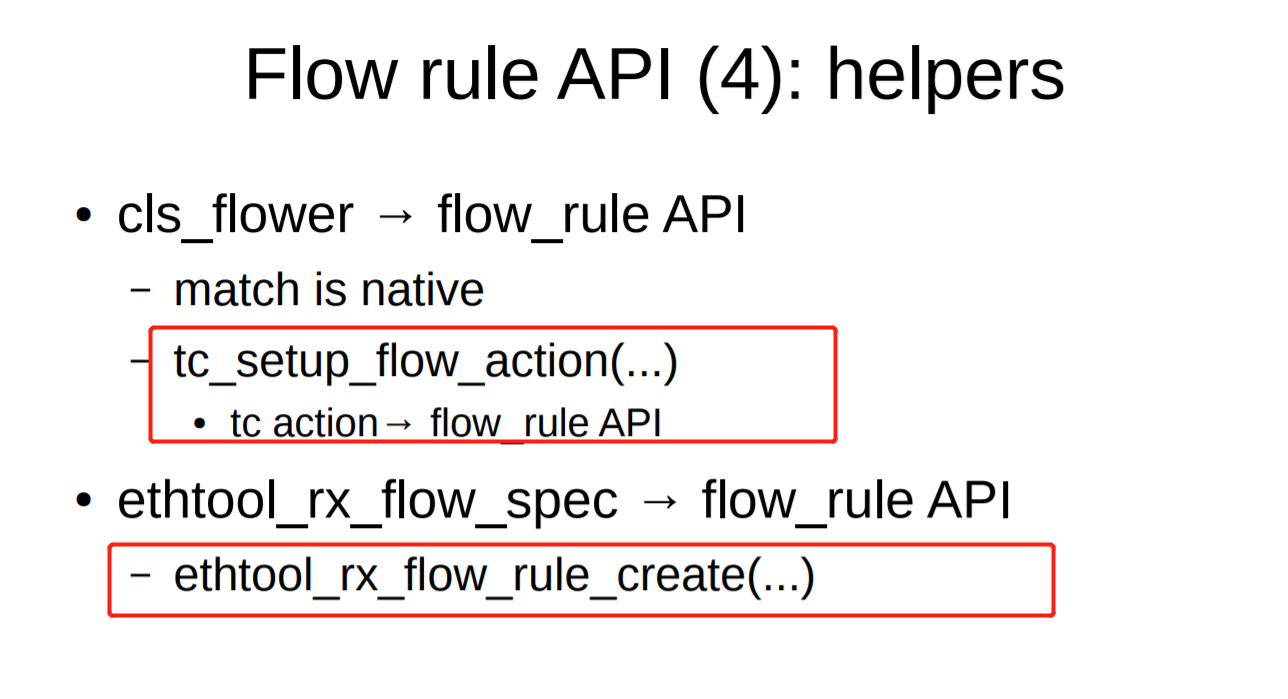

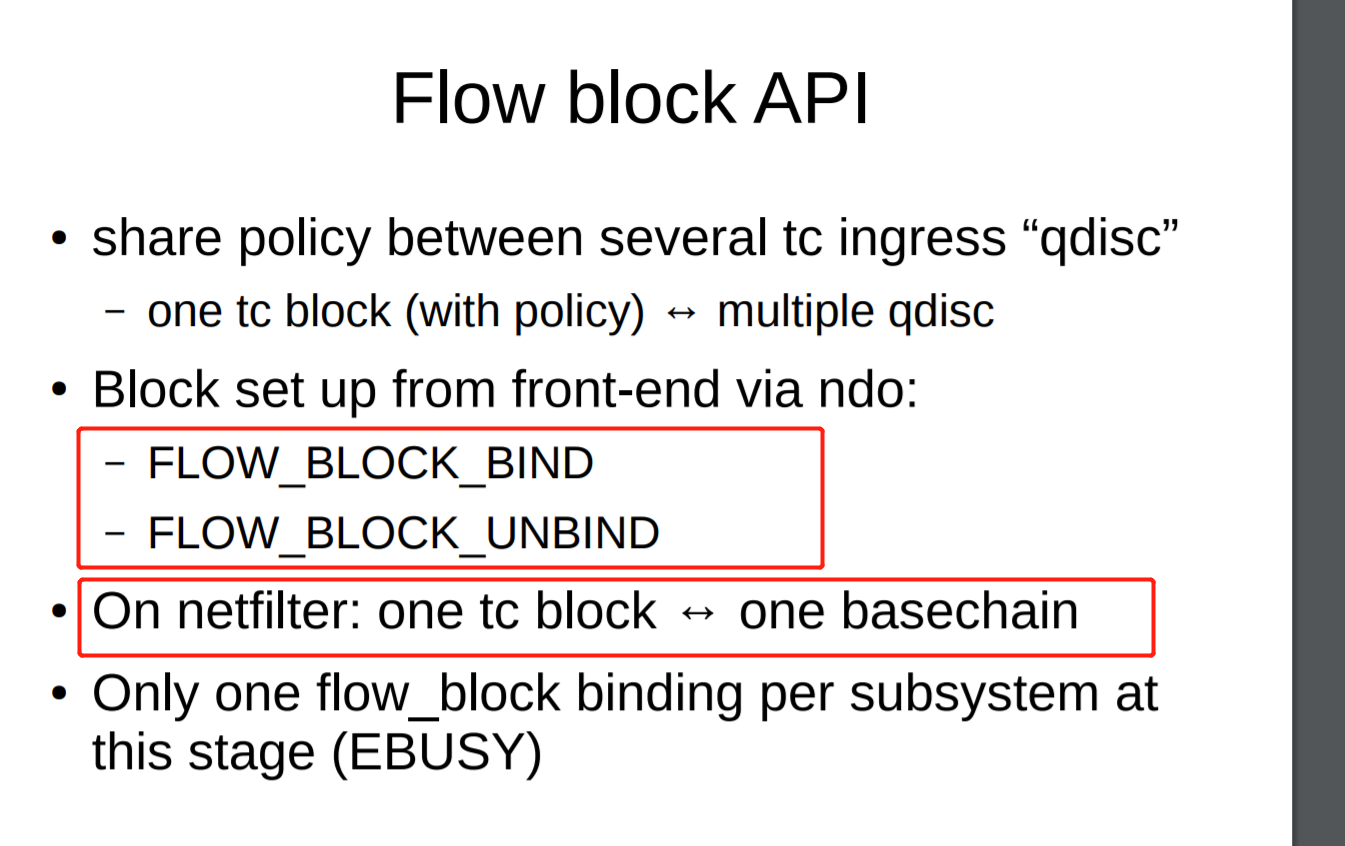

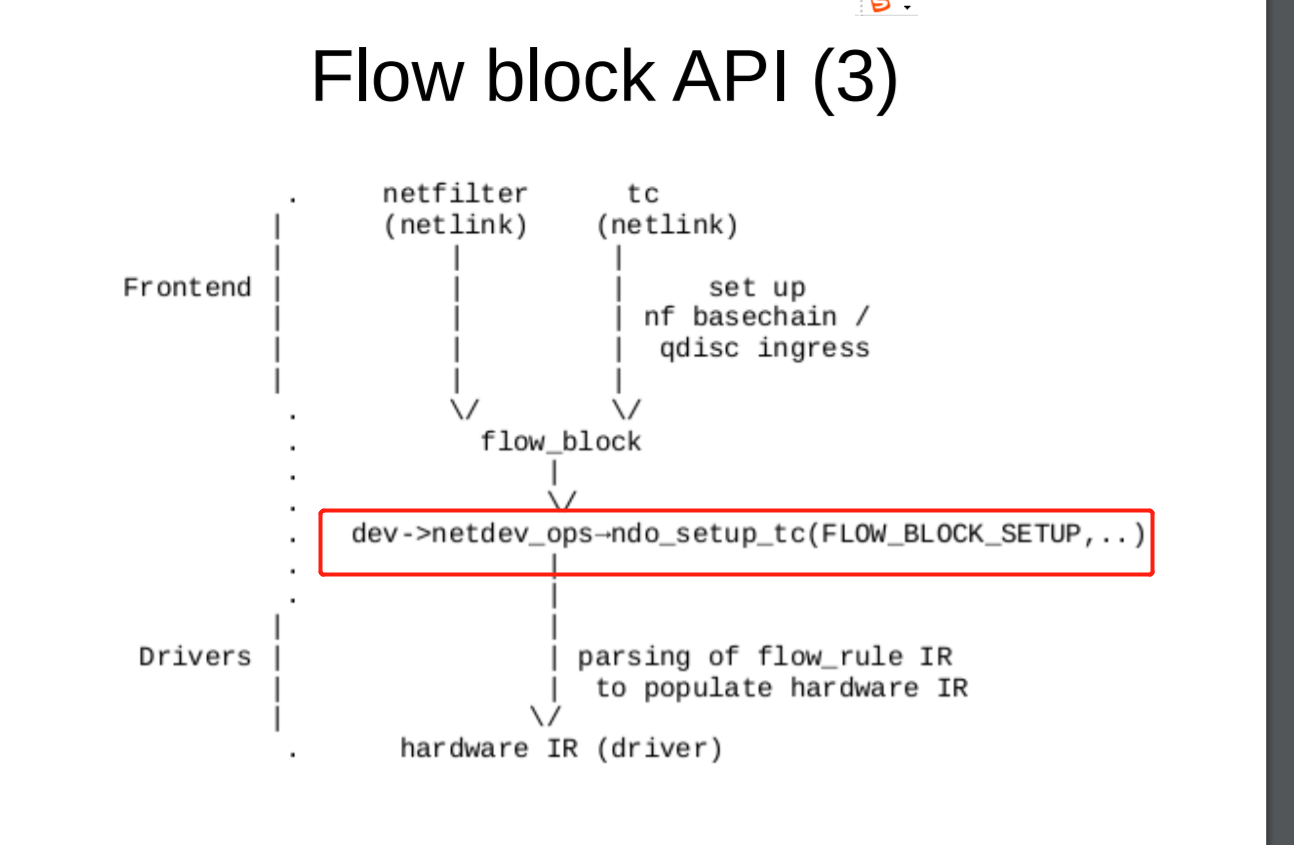

TC_SETUP_BLOCK TC_SETUP_CLSFLOWER

err = dev->netdev_ops->ndo_setup_tc(dev, TC_SETUP_BLOCK, &bo);

tc_setup_cb_add(block, tp, TC_SETUP_CLSFLOWER, &cls_flower, skip_sw, &f->flags, &f->in_hw_count, rtnl_held);

struct tcf_exts

struct tcf_exts {
#ifdef CONFIG_NET_CLS_ACT
	__u32 type; /* for backward compat(TCA_OLD_COMPAT) */
	int nr_actions;
	struct tc_action **actions;
#endif
	/* Map to export classifier specific extension TLV types to the
	 * generic extensions API. Unsupported extensions must be set to 0.
	 */
	int action;
	int police;
};

struct tc_action {
	void *priv;			/* private data */
	struct tc_action_ops *ops;	/* operations structure */
	__u32 type;			/* type; for backward compat(TCA_OLD_COMPAT) */
	__u32 order;			/* order */
	struct tc_action *next;		/* next entry in the action list */
};

#define TCA_CAP_NONE 0

/* Action operations structure. It defines the target operation. Each action
 * is usually described by one static tc_action_ops structure, built as a
 * kernel module and registered on the system-wide list at initialization.
 */
struct tc_action_ops {
	struct tc_action_ops *next;	/* next entry in the list */
	struct tcf_hashinfo *hinfo;
	char kind[IFNAMSIZ];		/* name */
	__u32 type; /* TBD to match kind */
	__u32 capab; /* capabilities includes 4 bit version */
	struct module *owner;
	/* execute the action */
	int (*act)(struct sk_buff *, struct tc_action *, struct tcf_result *);
	/* fetch statistics */
	int (*get_stats)(struct sk_buff *, struct tc_action *);
	/* dump */
	int (*dump)(struct sk_buff *, struct tc_action *, int, int);
	/* cleanup */
	int (*cleanup)(struct tc_action *, int bind);
	/* lookup */
	int (*lookup)(struct tc_action *, u32);
	/* initialization */
	int (*init)(struct rtattr *, struct rtattr *, struct tc_action *, int , int);
	/* walk */
	int (*walk)(struct sk_buff *, struct netlink_callback *, int, struct tc_action *);
};

/*

2 * net/sched/cls_flower.c Flower classifier

3 *

4 * Copyright (c) 2015 Jiri Pirko <jiri@resnulli.us>

5 *

6 * This program is free software; you can redistribute it and/or modify

7 * it under the terms of the GNU General Public License as published by

8 * the Free Software Foundation; either version 2 of the License, or

9 * (at your option) any later version.

10 */

11

12#include <linux/kernel.h>

13#include <linux/init.h>

14#include <linux/module.h>

15#include <linux/rhashtable.h>

16

17#include <linux/if_ether.h>

18#include <linux/in6.h>

19#include <linux/ip.h>

20

21#include <net/sch_generic.h>

22#include <net/pkt_cls.h>

23#include <net/ip.h>

24#include <net/flow_dissector.h>

25

26struct fl_flow_key {

27 int indev_ifindex;

28 struct flow_dissector_key_control control;

29 struct flow_dissector_key_basic basic;

30 struct flow_dissector_key_eth_addrs eth;

31 struct flow_dissector_key_addrs ipaddrs;

32 union {

33 struct flow_dissector_key_ipv4_addrs ipv4;

34 struct flow_dissector_key_ipv6_addrs ipv6;

35 };

36 struct flow_dissector_key_ports tp;

37} __aligned(BITS_PER_LONG / 8); /* Ensure that we can do comparisons as longs. */

38

39struct fl_flow_mask_range {

40 unsigned short int start;

41 unsigned short int end;

42};

43

44struct fl_flow_mask {

45 struct fl_flow_key key;

46 struct fl_flow_mask_range range;

47 struct rcu_head rcu;

48};

49

50struct cls_fl_head {

51 struct rhashtable ht;

52 struct fl_flow_mask mask;

53 struct flow_dissector dissector;

54 u32 hgen;

55 bool mask_assigned;

56 struct list_head filters;

57 struct rhashtable_params ht_params;

58 struct rcu_head rcu;

59};

60

61struct cls_fl_filter {

62 struct rhash_head ht_node;

63 struct fl_flow_key mkey;

64 struct tcf_exts exts;

65 struct tcf_result res;

66 struct fl_flow_key key;

67 struct list_head list;

68 u32 handle;

69 u32 flags;

70 struct rcu_head rcu;

71};

72

73static unsigned short int fl_mask_range(const struct fl_flow_mask *mask)

74{

75 return mask->range.end - mask->range.start;

76}

77

78static void fl_mask_update_range(struct fl_flow_mask *mask)

79{

80 const u8 *bytes = (const u8 *) &mask->key;

81 size_t size = sizeof(mask->key);

82 size_t i, first = 0, last = size - 1;

83

84 for (i = 0; i < sizeof(mask->key); i++) {

85 if (bytes[i]) {

86 if (!first && i)

87 first = i;

88 last = i;

89 }

90 }

91 mask->range.start = rounddown(first, sizeof(long));

92 mask->range.end = roundup(last + 1, sizeof(long));

93}

94

95static void *fl_key_get_start(struct fl_flow_key *key,

96 const struct fl_flow_mask *mask)

97{

98 return (u8 *) key + mask->range.start;

99}

100

101static void fl_set_masked_key(struct fl_flow_key *mkey, struct fl_flow_key *key,

102 struct fl_flow_mask *mask)

103{

104 const long *lkey = fl_key_get_start(key, mask);

105 const long *lmask = fl_key_get_start(&mask->key, mask);

106 long *lmkey = fl_key_get_start(mkey, mask);

107 int i;

108

109 for (i = 0; i < fl_mask_range(mask); i += sizeof(long))

110 *lmkey++ = *lkey++ & *lmask++;

111}

112

113static void fl_clear_masked_range(struct fl_flow_key *key,

114 struct fl_flow_mask *mask)

115{

116 memset(fl_key_get_start(key, mask), 0, fl_mask_range(mask));

117}
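The mask-range helpers above can be tried out in user space. The following is a minimal sketch, assuming a toy key of four machine words rather than the real struct fl_flow_key; `flow_key`, `flow_mask`, `mask_update_range` and `set_masked_key` are hypothetical names. Unlike the kernel listing it tracks the first non-zero byte with a sentinel, which is a simplification of my own.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define KEY_WORDS 4

/* Toy key: four machine words instead of the real fl_flow_key layout. */
struct flow_key { unsigned long w[KEY_WORDS]; };

/* Mask plus the byte range [start, end) that contains all non-zero bytes. */
struct flow_mask {
    struct flow_key key;
    size_t start;
    size_t end;
};

/* Find the first and last non-zero mask byte, rounded out to long words. */
static void mask_update_range(struct flow_mask *m)
{
    const unsigned char *bytes;
    size_t size, first, last = 0, i;

    assert(m != NULL);
    bytes = (const unsigned char *)&m->key;
    size = sizeof(m->key);
    first = size;                       /* sentinel: nothing found yet */

    for (i = 0; i < size; i++) {
        if (bytes[i]) {
            if (first == size)
                first = i;
            last = i;
        }
    }
    if (first == size) {                /* all-zero mask: empty range */
        m->start = m->end = 0;
        return;
    }
    m->start = first / sizeof(long) * sizeof(long);     /* round down */
    m->end = (last / sizeof(long) + 1) * sizeof(long);  /* round up */
}

/* out = in & mask, computed one long at a time over the mask range only. */
static void set_masked_key(struct flow_key *out, const struct flow_key *in,
                           const struct flow_mask *m)
{
    size_t i;

    assert(out != NULL && in != NULL && m != NULL);
    memset(out, 0, sizeof(*out));
    for (i = m->start / sizeof(long); i < m->end / sizeof(long); i++)
        out->w[i] = in->w[i] & m->key.w[i];
}
```

Restricting the loop to the mask range is what lets the hash table key cover only the bytes the filter actually cares about.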

118

tcf_exts_exec

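- TCA_CLS_FLAGS_SKIP_HW: add the rule only in software (the kernel TC module), not in hardware. An error is returned if the rule cannot be added.

- TCA_CLS_FLAGS_SKIP_SW: add the rule only in hardware (the NIC the rule is attached to), not in software. An error is returned if the rule cannot be added.

- Default (no flag): try to install the rule in both hardware and software. An error is returned if the rule cannot be added in software.

When rules are listed with the tc command, a rule that has been offloaded to hardware shows the in_hw flag.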

static int fl_classify(struct sk_buff *skb, const struct tcf_proto *tp,
		       struct tcf_result *res)
{
	struct cls_fl_head *head = rcu_dereference_bh(tp->root);
	struct cls_fl_filter *f;
	struct fl_flow_key skb_key;
	struct fl_flow_key skb_mkey;

	if (!atomic_read(&head->ht.nelems))
		return -1;

	fl_clear_masked_range(&skb_key, &head->mask);
	skb_key.indev_ifindex = skb->skb_iif;
	/* skb_flow_dissect() does not set n_proto in case an unknown protocol,
	 * so do it rather here.
	 */
	skb_key.basic.n_proto = skb->protocol;
	skb_flow_dissect(skb, &head->dissector, &skb_key, 0);

	fl_set_masked_key(&skb_mkey, &skb_key, &head->mask);

	f = rhashtable_lookup_fast(&head->ht,
				   fl_key_get_start(&skb_mkey, &head->mask),
				   head->ht_params);
	if (f && !tc_skip_sw(f->flags)) {
		*res = f->res;
		return tcf_exts_exec(skb, &f->exts, res);
	}
	return -1;
}

static inline int
tcf_exts_exec(struct sk_buff *skb, struct tcf_exts *exts, struct tcf_result *res)
{
	if (exts->nr_actions)
		return tcf_action_exec(skb, exts->actions, exts->nr_actions, res);
	return 0;
}

int tcf_action_exec(struct sk_buff *skb, struct tc_action **actions,
		    int nr_actions, struct tcf_result *res)
{
	int ret = -1, i;

	if (skb->tc_verd & TC_NCLS) {
		skb->tc_verd = CLR_TC_NCLS(skb->tc_verd);
		ret = TC_ACT_OK;
		goto exec_done;
	}
	for (i = 0; i < nr_actions; i++) {
		const struct tc_action *a = actions[i];
repeat:
		ret = a->ops->act(skb, a, res);
		if (ret == TC_ACT_REPEAT)
			goto repeat;	/* we need a ttl - JHS */
		if (ret != TC_ACT_PIPE)
			goto exec_done;
	}
exec_done:
	return ret;
}
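The pipeline rule in tcf_action_exec (keep going while an action returns TC_ACT_PIPE, stop on any other verdict) can be sketched as a stand-alone model. Everything here is hypothetical illustration: `struct packet`, `struct action`, the three sample actions and the `ACT_*` constants, whose values are meant to mirror TC_ACT_OK, TC_ACT_SHOT and TC_ACT_PIPE. The TC_NCLS and TC_ACT_REPEAT handling is left out.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins for TC_ACT_OK, TC_ACT_SHOT and TC_ACT_PIPE. */
enum { ACT_OK = 0, ACT_SHOT = 2, ACT_PIPE = 3 };

struct packet { unsigned int len; unsigned int mark; };

struct action {
    int (*act)(struct packet *p, const struct action *a);
    unsigned int arg;
};

/* Sets the packet mark, then lets the pipeline continue. */
static int act_mark(struct packet *p, const struct action *a)
{
    p->mark = a->arg;
    return ACT_PIPE;
}

/* Very rough police stand-in: drop anything longer than arg bytes. */
static int act_police(struct packet *p, const struct action *a)
{
    return p->len > a->arg ? ACT_SHOT : ACT_PIPE;
}

/* Terminal action: accept the packet. */
static int act_accept(struct packet *p, const struct action *a)
{
    (void)p;
    (void)a;
    return ACT_OK;
}

/* Run actions in order; only ACT_PIPE moves on to the next one. */
static int action_exec(struct packet *p, const struct action *const *actions,
                       int nr_actions)
{
    int ret = -1, i;

    assert(p != NULL);
    for (i = 0; i < nr_actions; i++) {
        ret = actions[i]->act(p, actions[i]);
        if (ret != ACT_PIPE)
            break;
    }
    return ret;
}

/* Like tcf_exts_exec: with no actions attached the result is simply 0. */
static int exts_exec(struct packet *p, const struct action *const *actions,
                     int nr_actions)
{
    if (nr_actions)
        return action_exec(p, actions, nr_actions);
    return 0;
}
```

In this model a chain of police, mark, accept drops an oversized packet at the first step, so the later mark action never touches it.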

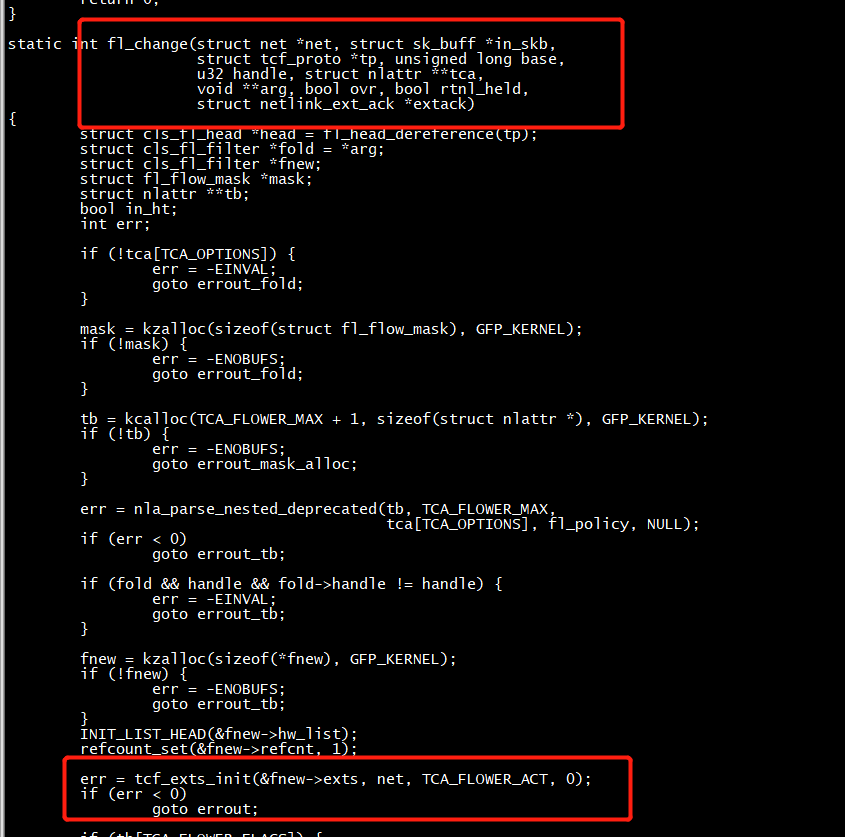

net/sched/cls_flower.c initialization

tcf_exts_init(&fnew->exts, net, TCA_FLOWER_ACT, 0);

General information

The Linux kernel configuration item CONFIG_MLX5_CLS_ACT:

- prompt: MLX5 TC classifier action support

- type: bool

- depends on: CONFIG_MLX5_ESWITCH && CONFIG_NET_CLS_ACT

- defined in drivers/net/ethernet/mellanox/mlx5/core/Kconfig

- found in Linux kernels: 5.8–5.11, 5.11+HEAD

The current action is police, and its callback is tcf_act_police, which is analyzed separately below.


net/sched/cls_flow.c:441: err = tcf_exts_init(&fnew->exts, net, TCA_FLOW_ACT, TCA_FLOW_POLICE);

net/sched/cls_flower.c:1577: err = tcf_exts_init(&fnew->exts, net, TCA_FLOWER_ACT, 0);

static inline int tcf_exts_init(struct tcf_exts *exts, struct net *net,
				int action, int police)
{
#ifdef CONFIG_NET_CLS_ACT
	exts->type = 0;
	exts->nr_actions = 0;	/* no actions yet, so tcf_action_exec is not executed */
	exts->net = net;
	exts->actions = kcalloc(TCA_ACT_MAX_PRIO, sizeof(struct tc_action *),
				GFP_KERNEL);
	if (!exts->actions)
		return -ENOMEM;
#endif
	exts->action = action;
	exts->police = police;
	return 0;
}

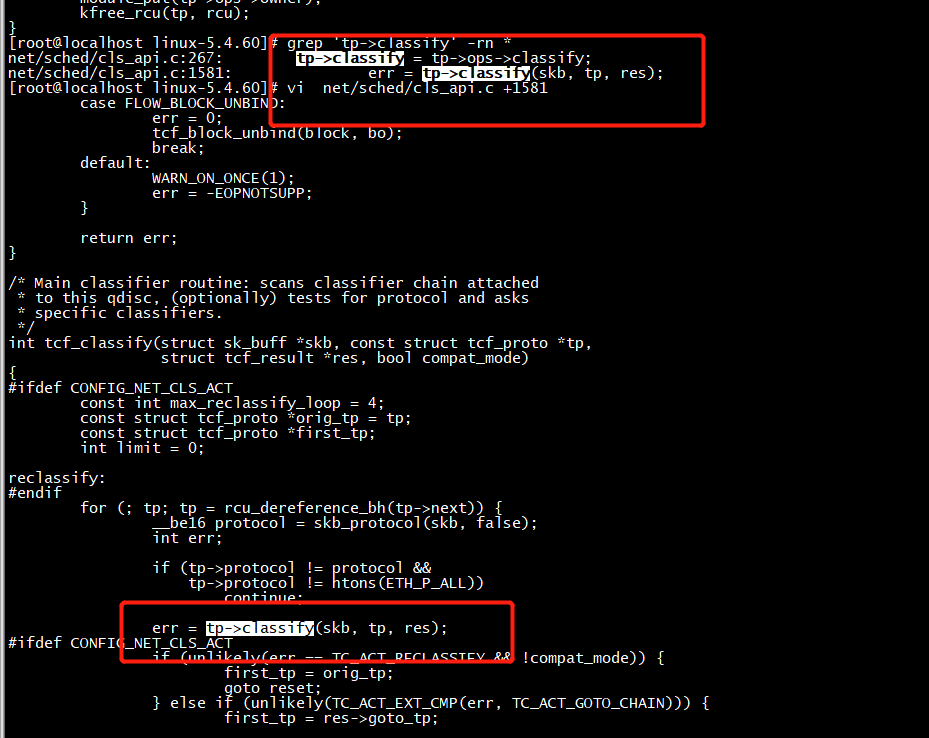

fl_classify

tcf_classify --> fl_classify

static int fl_classify(struct sk_buff *skb, const struct tcf_proto *tp,
		       struct tcf_result *res)
{
	struct cls_fl_head *head = rcu_dereference_bh(tp->root);
	struct fl_flow_key skb_mkey;
	struct fl_flow_key skb_key;
	struct fl_flow_mask *mask;
	struct cls_fl_filter *f;

	list_for_each_entry_rcu(mask, &head->masks, list) {
		flow_dissector_init_keys(&skb_key.control, &skb_key.basic);
		fl_clear_masked_range(&skb_key, mask);

		skb_flow_dissect_meta(skb, &mask->dissector, &skb_key);
		/* skb_flow_dissect() does not set n_proto in case an unknown
		 * protocol, so do it rather here.
		 */
		skb_key.basic.n_proto = skb_protocol(skb, false);
		skb_flow_dissect_tunnel_info(skb, &mask->dissector, &skb_key);
		skb_flow_dissect_ct(skb, &mask->dissector, &skb_key,
				    fl_ct_info_to_flower_map,
				    ARRAY_SIZE(fl_ct_info_to_flower_map));
		skb_flow_dissect(skb, &mask->dissector, &skb_key, 0);

		fl_set_masked_key(&skb_mkey, &skb_key, mask);

		f = fl_lookup(mask, &skb_mkey, &skb_key);
		if (f && !tc_skip_sw(f->flags)) {
			*res = f->res;
			return tcf_exts_exec(skb, &f->exts, res);
		}
	}
	return -1;
}

static struct tcf_proto *tcf_proto_create(const char *kind, u32 protocol,
					  u32 prio, struct tcf_chain *chain,
					  bool rtnl_held,
					  struct netlink_ext_ack *extack)
{
	struct tcf_proto *tp;
	int err;

	tp = kzalloc(sizeof(*tp), GFP_KERNEL);
	if (!tp)
		return ERR_PTR(-ENOBUFS);

	tp->ops = tcf_proto_lookup_ops(kind, rtnl_held, extack);
	if (IS_ERR(tp->ops)) {
		err = PTR_ERR(tp->ops);
		goto errout;
	}
	tp->classify = tp->ops->classify;
	tp->protocol = protocol;
	tp->prio = prio;
	tp->chain = chain;
	spin_lock_init(&tp->lock);
	refcount_set(&tp->refcnt, 1);

	err = tp->ops->init(tp);
	if (err) {
		module_put(tp->ops->owner);
		goto errout;
	}
	return tp;

errout:
	kfree(tp);
	return ERR_PTR(err);
}
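The pattern in tcf_proto_create (look up an ops table by kind, cache its classify pointer on the instance, then call init) can be sketched outside the kernel. This is a toy model under stated assumptions: `struct proto`, `struct proto_ops`, the one-entry `registry` and the demo callbacks are all hypothetical, and the demo classifier's "odd packets match" rule is arbitrary.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

struct proto;

/* Per-classifier operations, selected by name ("kind"). */
struct proto_ops {
    const char *kind;
    int (*classify)(const struct proto *tp, unsigned int pkt);
    int (*init)(struct proto *tp);
};

/* One classifier instance; classify is cached from ops for the fast path. */
struct proto {
    const struct proto_ops *ops;
    int (*classify)(const struct proto *tp, unsigned int pkt);
    unsigned int prio;
    void *root;
};

static int demo_init(struct proto *tp)
{
    tp->root = NULL;
    return 0;
}

/* Arbitrary demo rule: odd packet ids match (0), even ones do not (-1). */
static int demo_classify(const struct proto *tp, unsigned int pkt)
{
    (void)tp;
    return (pkt & 1) ? 0 : -1;
}

/* Stand-in for the list filled by register_tcf_proto_ops(). */
static const struct proto_ops registry[] = {
    { "flower", demo_classify, demo_init },
};

static const struct proto_ops *lookup_ops(const char *kind)
{
    size_t i;

    for (i = 0; i < sizeof(registry) / sizeof(registry[0]); i++)
        if (strcmp(registry[i].kind, kind) == 0)
            return &registry[i];
    return NULL;
}

/* Returns a new instance, or NULL if the kind is unknown or init fails. */
static struct proto *proto_create(const char *kind, unsigned int prio)
{
    struct proto *tp;

    assert(kind != NULL);
    tp = calloc(1, sizeof(*tp));
    if (!tp)
        return NULL;

    tp->ops = lookup_ops(kind);
    if (!tp->ops)
        goto errout;
    tp->classify = tp->ops->classify;
    tp->prio = prio;

    if (tp->ops->init(tp) != 0)
        goto errout;
    return tp;

errout:
    free(tp);
    return NULL;
}
```

Copying classify onto the instance is what lets the caller (tcf_classify in the notes above) reach fl_classify through `tp->classify` without touching the ops table.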

149

150static int fl_init(struct tcf_proto *tp)

151{

152 struct cls_fl_head *head;

153

154 head = kzalloc(sizeof(*head), GFP_KERNEL);

155 if (!head)

156 return -ENOBUFS;

157

158 INIT_LIST_HEAD_RCU(&head->filters);

159 rcu_assign_pointer(tp->root, head);

160

161 return 0;

162}

163

164static void fl_destroy_filter(struct rcu_head *head)

165{

166 struct cls_fl_filter *f = container_of(head, struct cls_fl_filter, rcu);

167

168 tcf_exts_destroy(&f->exts);

169 kfree(f);

170}

171

172static void fl_hw_destroy_filter(struct tcf_proto *tp, unsigned long cookie)

173{

174 struct net_device *dev = tp->q->dev_queue->dev;

175 struct tc_cls_flower_offload offload = {0};

176 struct tc_to_netdev tc;

177

178 if (!tc_should_offload(dev, tp, 0))

179 return;

180

181 offload.command = TC_CLSFLOWER_DESTROY;

182 offload.cookie = cookie;

183

184 tc.type = TC_SETUP_CLSFLOWER;

185 tc.cls_flower = &offload;

186

187 dev->netdev_ops->ndo_setup_tc(dev, tp->q->handle, tp->protocol, &tc);

188}

189

190static int fl_hw_replace_filter(struct tcf_proto *tp,

191 struct flow_dissector *dissector,

192 struct fl_flow_key *mask,

193 struct fl_flow_key *key,

194 struct tcf_exts *actions,

195 unsigned long cookie, u32 flags)

196{

197 struct net_device *dev = tp->q->dev_queue->dev;

198 struct tc_cls_flower_offload offload = {0};

199 struct tc_to_netdev tc;

200 int err;

201

202 if (!tc_should_offload(dev, tp, flags))

203 return tc_skip_sw(flags) ? -EINVAL : 0;

204

205 offload.command = TC_CLSFLOWER_REPLACE;

206 offload.cookie = cookie;

207 offload.dissector = dissector;

208 offload.mask = mask;

209 offload.key = key;

210 offload.exts = actions;

211

212 tc.type = TC_SETUP_CLSFLOWER;

213 tc.cls_flower = &offload;

214

215 err = dev->netdev_ops->ndo_setup_tc(dev, tp->q->handle, tp->protocol, &tc);

216

217 if (tc_skip_sw(flags))

218 return err;

219

220 return 0;

221}

222

223static void fl_hw_update_stats(struct tcf_proto *tp, struct cls_fl_filter *f)

224{

225 struct net_device *dev = tp->q->dev_queue->dev;

226 struct tc_cls_flower_offload offload = {0};

227 struct tc_to_netdev tc;

228

229 if (!tc_should_offload(dev, tp, 0))

230 return;

231

232 offload.command = TC_CLSFLOWER_STATS;

233 offload.cookie = (unsigned long)f;

234 offload.exts = &f->exts;

235

236 tc.type = TC_SETUP_CLSFLOWER;

237 tc.cls_flower = &offload;

238

239 dev->netdev_ops->ndo_setup_tc(dev, tp->q->handle, tp->protocol, &tc);

240}

241

242static bool fl_destroy(struct tcf_proto *tp, bool force)

243{

244 struct cls_fl_head *head = rtnl_dereference(tp->root);

245 struct cls_fl_filter *f, *next;

246

247 if (!force && !list_empty(&head->filters))

248 return false;

249

250 list_for_each_entry_safe(f, next, &head->filters, list) {

251 fl_hw_destroy_filter(tp, (unsigned long)f);

252 list_del_rcu(&f->list);

253 call_rcu(&f->rcu, fl_destroy_filter);

254 }

255 RCU_INIT_POINTER(tp->root, NULL);

256 if (head->mask_assigned)

257 rhashtable_destroy(&head->ht);

258 kfree_rcu(head, rcu);

259 return true;

260}

261

262static unsigned long fl_get(struct tcf_proto *tp, u32 handle)

263{

264 struct cls_fl_head *head = rtnl_dereference(tp->root);

265 struct cls_fl_filter *f;

266

267 list_for_each_entry(f, &head->filters, list)

268 if (f->handle == handle)

269 return (unsigned long) f;

270 return 0;

271}

272

273static const struct nla_policy fl_policy[TCA_FLOWER_MAX + 1] = {

274 [TCA_FLOWER_UNSPEC] = { .type = NLA_UNSPEC },

275 [TCA_FLOWER_CLASSID] = { .type = NLA_U32 },

276 [TCA_FLOWER_INDEV] = { .type = NLA_STRING,

277 .len = IFNAMSIZ },

278 [TCA_FLOWER_KEY_ETH_DST] = { .len = ETH_ALEN },

279 [TCA_FLOWER_KEY_ETH_DST_MASK] = { .len = ETH_ALEN },

280 [TCA_FLOWER_KEY_ETH_SRC] = { .len = ETH_ALEN },

281 [TCA_FLOWER_KEY_ETH_SRC_MASK] = { .len = ETH_ALEN },

282 [TCA_FLOWER_KEY_ETH_TYPE] = { .type = NLA_U16 },

283 [TCA_FLOWER_KEY_IP_PROTO] = { .type = NLA_U8 },

284 [TCA_FLOWER_KEY_IPV4_SRC] = { .type = NLA_U32 },

285 [TCA_FLOWER_KEY_IPV4_SRC_MASK] = { .type = NLA_U32 },

286 [TCA_FLOWER_KEY_IPV4_DST] = { .type = NLA_U32 },

287 [TCA_FLOWER_KEY_IPV4_DST_MASK] = { .type = NLA_U32 },

288 [TCA_FLOWER_KEY_IPV6_SRC] = { .len = sizeof(struct in6_addr) },

289 [TCA_FLOWER_KEY_IPV6_SRC_MASK] = { .len = sizeof(struct in6_addr) },

290 [TCA_FLOWER_KEY_IPV6_DST] = { .len = sizeof(struct in6_addr) },

291 [TCA_FLOWER_KEY_IPV6_DST_MASK] = { .len = sizeof(struct in6_addr) },

292 [TCA_FLOWER_KEY_TCP_SRC] = { .type = NLA_U16 },

293 [TCA_FLOWER_KEY_TCP_DST] = { .type = NLA_U16 },

294 [TCA_FLOWER_KEY_UDP_SRC] = { .type = NLA_U16 },

295 [TCA_FLOWER_KEY_UDP_DST] = { .type = NLA_U16 },

296};

297

298static void fl_set_key_val(struct nlattr **tb,

299 void *val, int val_type,

300 void *mask, int mask_type, int len)

301{

302 if (!tb[val_type])

303 return;

304 memcpy(val, nla_data(tb[val_type]), len);

305 if (mask_type == TCA_FLOWER_UNSPEC || !tb[mask_type])

306 memset(mask, 0xff, len);

307 else

308 memcpy(mask, nla_data(tb[mask_type]), len);

309}
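The defaulting rule in fl_set_key_val can be shown in isolation: if the value attribute is absent nothing changes, and if the mask attribute is absent the mask becomes all ones, meaning an exact match. This is a minimal sketch in which plain pointers replace the netlink attribute table; `set_key_val` and its parameter names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * val_attr / mask_attr model optional attributes: NULL means "not supplied".
 * val and mask are the destination fields inside the key and the mask.
 */
static void set_key_val(const void *val_attr, const void *mask_attr,
                        void *val, void *mask, size_t len)
{
    assert(val != NULL && mask != NULL);

    if (!val_attr)
        return;                     /* no value given: field stays wildcarded */

    memcpy(val, val_attr, len);
    if (!mask_attr)
        memset(mask, 0xff, len);    /* no mask given: match every bit */
    else
        memcpy(mask, mask_attr, len);
}
```

For example, supplying an IPv4 address without a mask yields a /32 match, while supplying 255.255.255.0 as the mask yields a /24 match.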

310

311static int fl_set_key(struct net *net, struct nlattr **tb,

312 struct fl_flow_key *key, struct fl_flow_key *mask)

313{

314#ifdef CONFIG_NET_CLS_IND

315 if (tb[TCA_FLOWER_INDEV]) {

316 int err = tcf_change_indev(net, tb[TCA_FLOWER_INDEV]);

317 if (err < 0)

318 return err;

319 key->indev_ifindex = err;

320 mask->indev_ifindex = 0xffffffff;

321 }

322#endif

323

324 fl_set_key_val(tb, key->eth.dst, TCA_FLOWER_KEY_ETH_DST,

325 mask->eth.dst, TCA_FLOWER_KEY_ETH_DST_MASK,

326 sizeof(key->eth.dst));

327 fl_set_key_val(tb, key->eth.src, TCA_FLOWER_KEY_ETH_SRC,

328 mask->eth.src, TCA_FLOWER_KEY_ETH_SRC_MASK,

329 sizeof(key->eth.src));

330

331 fl_set_key_val(tb, &key->basic.n_proto, TCA_FLOWER_KEY_ETH_TYPE,

332 &mask->basic.n_proto, TCA_FLOWER_UNSPEC,

333 sizeof(key->basic.n_proto));

334

335 if (key->basic.n_proto == htons(ETH_P_IP) ||

336 key->basic.n_proto == htons(ETH_P_IPV6)) {

337 fl_set_key_val(tb, &key->basic.ip_proto, TCA_FLOWER_KEY_IP_PROTO,

338 &mask->basic.ip_proto, TCA_FLOWER_UNSPEC,

339 sizeof(key->basic.ip_proto));

340 }

341

342 if (tb[TCA_FLOWER_KEY_IPV4_SRC] || tb[TCA_FLOWER_KEY_IPV4_DST]) {

343 key->control.addr_type = FLOW_DISSECTOR_KEY_IPV4_ADDRS;

344 fl_set_key_val(tb, &key->ipv4.src, TCA_FLOWER_KEY_IPV4_SRC,

345 &mask->ipv4.src, TCA_FLOWER_KEY_IPV4_SRC_MASK,

346 sizeof(key->ipv4.src));

347 fl_set_key_val(tb, &key->ipv4.dst, TCA_FLOWER_KEY_IPV4_DST,

348 &mask->ipv4.dst, TCA_FLOWER_KEY_IPV4_DST_MASK,

349 sizeof(key->ipv4.dst));

350 } else if (tb[TCA_FLOWER_KEY_IPV6_SRC] || tb[TCA_FLOWER_KEY_IPV6_DST]) {

351 key->control.addr_type = FLOW_DISSECTOR_KEY_IPV6_ADDRS;

352 fl_set_key_val(tb, &key->ipv6.src, TCA_FLOWER_KEY_IPV6_SRC,

353 &mask->ipv6.src, TCA_FLOWER_KEY_IPV6_SRC_MASK,

354 sizeof(key->ipv6.src));

355 fl_set_key_val(tb, &key->ipv6.dst, TCA_FLOWER_KEY_IPV6_DST,

356 &mask->ipv6.dst, TCA_FLOWER_KEY_IPV6_DST_MASK,

357 sizeof(key->ipv6.dst));

358 }

359

360 if (key->basic.ip_proto == IPPROTO_TCP) {

361 fl_set_key_val(tb, &key->tp.src, TCA_FLOWER_KEY_TCP_SRC,

362 &mask->tp.src, TCA_FLOWER_UNSPEC,

363 sizeof(key->tp.src));

364 fl_set_key_val(tb, &key->tp.dst, TCA_FLOWER_KEY_TCP_DST,

365 &mask->tp.dst, TCA_FLOWER_UNSPEC,

366 sizeof(key->tp.dst));

367 } else if (key->basic.ip_proto == IPPROTO_UDP) {

368 fl_set_key_val(tb, &key->tp.src, TCA_FLOWER_KEY_UDP_SRC,

369 &mask->tp.src, TCA_FLOWER_UNSPEC,

370 sizeof(key->tp.src));

371 fl_set_key_val(tb, &key->tp.dst, TCA_FLOWER_KEY_UDP_DST,

372 &mask->tp.dst, TCA_FLOWER_UNSPEC,

373 sizeof(key->tp.dst));

374 }

375

376 return 0;

377}

378

379static bool fl_mask_eq(struct fl_flow_mask *mask1,

380 struct fl_flow_mask *mask2)

381{

382 const long *lmask1 = fl_key_get_start(&mask1->key, mask1);

383 const long *lmask2 = fl_key_get_start(&mask2->key, mask2);

384

385 return !memcmp(&mask1->range, &mask2->range, sizeof(mask1->range)) &&

386 !memcmp(lmask1, lmask2, fl_mask_range(mask1));

387}

388

389static const struct rhashtable_params fl_ht_params = {

390 .key_offset = offsetof(struct cls_fl_filter, mkey), /* base offset */

391 .head_offset = offsetof(struct cls_fl_filter, ht_node),

392 .automatic_shrinking = true,

393};

394

395static int fl_init_hashtable(struct cls_fl_head *head,

396 struct fl_flow_mask *mask)

397{

398 head->ht_params = fl_ht_params;

399 head->ht_params.key_len = fl_mask_range(mask);

400 head->ht_params.key_offset += mask->range.start;

401

402 return rhashtable_init(&head->ht, &head->ht_params);

403}

404

#define FL_KEY_MEMBER_OFFSET(member) offsetof(struct fl_flow_key, member)
#define FL_KEY_MEMBER_SIZE(member) (sizeof(((struct fl_flow_key *) 0)->member))
#define FL_KEY_MEMBER_END_OFFSET(member)					\
	(FL_KEY_MEMBER_OFFSET(member) + FL_KEY_MEMBER_SIZE(member))

#define FL_KEY_IN_RANGE(mask, member)						\
	(FL_KEY_MEMBER_OFFSET(member) <= (mask)->range.end &&			\
	 FL_KEY_MEMBER_END_OFFSET(member) >= (mask)->range.start)

#define FL_KEY_SET(keys, cnt, id, member)					\
	do {									\
		keys[cnt].key_id = id;						\
		keys[cnt].offset = FL_KEY_MEMBER_OFFSET(member);		\
		cnt++;								\
	} while(0);

#define FL_KEY_SET_IF_IN_RANGE(mask, keys, cnt, id, member)			\
	do {									\
		if (FL_KEY_IN_RANGE(mask, member))				\
			FL_KEY_SET(keys, cnt, id, member);			\
	} while(0);

426

427static void fl_init_dissector(struct cls_fl_head *head,

428 struct fl_flow_mask *mask)

429{

430 struct flow_dissector_key keys[FLOW_DISSECTOR_KEY_MAX];

431 size_t cnt = 0;

432

433 FL_KEY_SET(keys, cnt, FLOW_DISSECTOR_KEY_CONTROL, control);

434 FL_KEY_SET(keys, cnt, FLOW_DISSECTOR_KEY_BASIC, basic);

435 FL_KEY_SET_IF_IN_RANGE(mask, keys, cnt,

436 FLOW_DISSECTOR_KEY_ETH_ADDRS, eth);

437 FL_KEY_SET_IF_IN_RANGE(mask, keys, cnt,

438 FLOW_DISSECTOR_KEY_IPV4_ADDRS, ipv4);

439 FL_KEY_SET_IF_IN_RANGE(mask, keys, cnt,

440 FLOW_DISSECTOR_KEY_IPV6_ADDRS, ipv6);

441 FL_KEY_SET_IF_IN_RANGE(mask, keys, cnt,

442 FLOW_DISSECTOR_KEY_PORTS, tp);

443

444 skb_flow_dissector_init(&head->dissector, keys, cnt);

445}

446

447static int fl_check_assign_mask(struct cls_fl_head *head,

448 struct fl_flow_mask *mask)

449{

450 int err;

451

452 if (head->mask_assigned) {

453 if (!fl_mask_eq(&head->mask, mask))

454 return -EINVAL;

455 else

456 return 0;

457 }

458

459 /* Mask is not assigned yet. So assign it and init hashtable

460 * according to that.

461 */

462 err = fl_init_hashtable(head, mask);

463 if (err)

464 return err;

465 memcpy(&head->mask, mask, sizeof(head->mask));

466 head->mask_assigned = true;

467

468 fl_init_dissector(head, mask);

469

470 return 0;

471}

472

473static int fl_set_parms(struct net *net, struct tcf_proto *tp,

474 struct cls_fl_filter *f, struct fl_flow_mask *mask,

475 unsigned long base, struct nlattr **tb,

476 struct nlattr *est, bool ovr)

477{

478 struct tcf_exts e;

479 int err;

480

481 tcf_exts_init(&e, TCA_FLOWER_ACT, 0);

482 err = tcf_exts_validate(net, tp, tb, est, &e, ovr);

483 if (err < 0)

484 return err;

485

486 if (tb[TCA_FLOWER_CLASSID]) {

487 f->res.classid = nla_get_u32(tb[TCA_FLOWER_CLASSID]);

488 tcf_bind_filter(tp, &f->res, base);

489 }

490

491 err = fl_set_key(net, tb, &f->key, &mask->key);

492 if (err)

493 goto errout;

494

495 fl_mask_update_range(mask);

496 fl_set_masked_key(&f->mkey, &f->key, mask);

497

498 tcf_exts_change(tp, &f->exts, &e);

499

500 return 0;

501errout:

502 tcf_exts_destroy(&e);

503 return err;

504}

505

static u32 fl_grab_new_handle(struct tcf_proto *tp,
			      struct cls_fl_head *head)
{
	unsigned int i = 0x80000000;
	u32 handle;

	do {
		if (++head->hgen == 0x7FFFFFFF)
			head->hgen = 1;
	} while (--i > 0 && fl_get(tp, head->hgen));

	if (unlikely(i == 0)) {
		pr_err("Insufficient number of handles\n");
		handle = 0;
	} else {
		handle = head->hgen;
	}

	return handle;
}
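The handle generator above is a wrapping counter that skips values already in use. A minimal sketch follows, assuming a flat array of used handles instead of the filter list; `struct handle_table`, `handle_in_use`, `grab_new_handle` and the `max_tries` budget are hypothetical, the budget standing in for the 0x80000000 iteration limit.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define HGEN_WRAP 0x7FFFFFFFu   /* the counter wraps back to 1 at this value */

struct handle_table {
    uint32_t hgen;              /* last handle handed out */
    const uint32_t *used;       /* handles currently taken */
    size_t n_used;
};

static bool handle_in_use(const struct handle_table *t, uint32_t handle)
{
    size_t i;

    for (i = 0; i < t->n_used; i++)
        if (t->used[i] == handle)
            return true;
    return false;
}

/* Returns a free handle, or 0 if none was found within max_tries steps. */
static uint32_t grab_new_handle(struct handle_table *t, uint32_t max_tries)
{
    assert(t != NULL);

    while (max_tries-- > 0) {
        if (++t->hgen == HGEN_WRAP)
            t->hgen = 1;        /* 0 is reserved to mean "no handle" */
        if (!handle_in_use(t, t->hgen))
            return t->hgen;
    }
    return 0;
}
```

Returning 0 on exhaustion matches how fl_change treats a zero handle as an error.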

526

527static int fl_change(struct net *net, struct sk_buff *in_skb,

528 struct tcf_proto *tp, unsigned long base,

529 u32 handle, struct nlattr **tca,

530 unsigned long *arg, bool ovr)

531{

532 struct cls_fl_head *head = rtnl_dereference(tp->root);

533 struct cls_fl_filter *fold = (struct cls_fl_filter *) *arg;

534 struct cls_fl_filter *fnew;

535 struct nlattr *tb[TCA_FLOWER_MAX + 1];

536 struct fl_flow_mask mask = {};

537 int err;

538

539 if (!tca[TCA_OPTIONS])

540 return -EINVAL;

541

542 err = nla_parse_nested(tb, TCA_FLOWER_MAX, tca[TCA_OPTIONS], fl_policy);

543 if (err < 0)

544 return err;

545

546 if (fold && handle && fold->handle != handle)

547 return -EINVAL;

548

549 fnew = kzalloc(sizeof(*fnew), GFP_KERNEL);

550 if (!fnew)

551 return -ENOBUFS;

552

553 tcf_exts_init(&fnew->exts, TCA_FLOWER_ACT, 0);

554

555 if (!handle) {

556 handle = fl_grab_new_handle(tp, head);

557 if (!handle) {

558 err = -EINVAL;

559 goto errout;

560 }

561 }

562 fnew->handle = handle;

563

564 if (tb[TCA_FLOWER_FLAGS]) {

565 fnew->flags = nla_get_u32(tb[TCA_FLOWER_FLAGS]);

566

567 if (!tc_flags_valid(fnew->flags)) {

568 err = -EINVAL;

569 goto errout;

570 }

571 }

572

573 err = fl_set_parms(net, tp, fnew, &mask, base, tb, tca[TCA_RATE], ovr);

574 if (err)

575 goto errout;

576

577 err = fl_check_assign_mask(head, &mask);

578 if (err)

579 goto errout;

580

581 if (!tc_skip_sw(fnew->flags)) {

582 err = rhashtable_insert_fast(&head->ht, &fnew->ht_node,

583 head->ht_params);

584 if (err)

585 goto errout;

586 }

587 /* call into the hardware offload path */

588 err = fl_hw_replace_filter(tp,

589 &head->dissector,

590 &mask.key,

591 &fnew->key,

592 &fnew->exts,

593 (unsigned long)fnew,

594 fnew->flags);

595 if (err)

596 goto errout;

597

598 if (fold) {

599 rhashtable_remove_fast(&head->ht, &fold->ht_node,

600 head->ht_params);

601 fl_hw_destroy_filter(tp, (unsigned long)fold);

602 }

603

604 *arg = (unsigned long) fnew;

605

606 if (fold) {

607 list_replace_rcu(&fold->list, &fnew->list);

608 tcf_unbind_filter(tp, &fold->res);

609 call_rcu(&fold->rcu, fl_destroy_filter);

610 } else {

611 list_add_tail_rcu(&fnew->list, &head->filters);

612 }

613

614 return 0;

615

616errout:

617 kfree(fnew);

618 return err;

619}

620

621static int fl_delete(struct tcf_proto *tp, unsigned long arg)

622{

623 struct cls_fl_head *head = rtnl_dereference(tp->root);

624 struct cls_fl_filter *f = (struct cls_fl_filter *) arg;

625

626 rhashtable_remove_fast(&head->ht, &f->ht_node,

627 head->ht_params);

628 list_del_rcu(&f->list);

629 fl_hw_destroy_filter(tp, (unsigned long)f);

630 tcf_unbind_filter(tp, &f->res);

631 call_rcu(&f->rcu, fl_destroy_filter);

632 return 0;

633}

634

635static void fl_walk(struct tcf_proto *tp, struct tcf_walker *arg)

636{

637 struct cls_fl_head *head = rtnl_dereference(tp->root);

638 struct cls_fl_filter *f;

639

640 list_for_each_entry_rcu(f, &head->filters, list) {

641 if (arg->count < arg->skip)

642 goto skip;

643 if (arg->fn(tp, (unsigned long) f, arg) < 0) {

644 arg->stop = 1;

645 break;

646 }

647skip:

648 arg->count++;

649 }

650}

651

652static int fl_dump_key_val(struct sk_buff *skb,

653 void *val, int val_type,

654 void *mask, int mask_type, int len)

655{

656 int err;

657

658 if (!memchr_inv(mask, 0, len))

659 return 0;

660 err = nla_put(skb, val_type, len, val);

661 if (err)

662 return err;

663 if (mask_type != TCA_FLOWER_UNSPEC) {

664 err = nla_put(skb, mask_type, len, mask);

665 if (err)

666 return err;

667 }

668 return 0;

669}

670

671static int fl_dump(struct net *net, struct tcf_proto *tp, unsigned long fh,

672 struct sk_buff *skb, struct tcmsg *t)

673{

674 struct cls_fl_head *head = rtnl_dereference(tp->root);

675 struct cls_fl_filter *f = (struct cls_fl_filter *) fh;

676 struct nlattr *nest;

677 struct fl_flow_key *key, *mask;

678

679 if (!f)

680 return skb->len;

681

682 t->tcm_handle = f->handle;

683

684 nest = nla_nest_start(skb, TCA_OPTIONS);

685 if (!nest)

686 goto nla_put_failure;

687

688 if (f->res.classid &&

689 nla_put_u32(skb, TCA_FLOWER_CLASSID, f->res.classid))

690 goto nla_put_failure;

691

692 key = &f->key;

693 mask = &head->mask.key;

694

695 if (mask->indev_ifindex) {

696 struct net_device *dev;

697

698 dev = __dev_get_by_index(net, key->indev_ifindex);

699 if (dev && nla_put_string(skb, TCA_FLOWER_INDEV, dev->name))

700 goto nla_put_failure;

701 }

702

703 fl_hw_update_stats(tp, f);

704

705 if (fl_dump_key_val(skb, key->eth.dst, TCA_FLOWER_KEY_ETH_DST,

706 mask->eth.dst, TCA_FLOWER_KEY_ETH_DST_MASK,

707 sizeof(key->eth.dst)) ||

708 fl_dump_key_val(skb, key->eth.src, TCA_FLOWER_KEY_ETH_SRC,

709 mask->eth.src, TCA_FLOWER_KEY_ETH_SRC_MASK,

710 sizeof(key->eth.src)) ||

711 fl_dump_key_val(skb, &key->basic.n_proto, TCA_FLOWER_KEY_ETH_TYPE,

712 &mask->basic.n_proto, TCA_FLOWER_UNSPEC,

713 sizeof(key->basic.n_proto)))

714 goto nla_put_failure;

715 if ((key->basic.n_proto == htons(ETH_P_IP) ||

716 key->basic.n_proto == htons(ETH_P_IPV6)) &&

717 fl_dump_key_val(skb, &key->basic.ip_proto, TCA_FLOWER_KEY_IP_PROTO,

718 &mask->basic.ip_proto, TCA_FLOWER_UNSPEC,

719 sizeof(key->basic.ip_proto)))

720 goto nla_put_failure;

721

722 if (key->control.addr_type == FLOW_DISSECTOR_KEY_IPV4_ADDRS &&

723 (fl_dump_key_val(skb, &key->ipv4.src, TCA_FLOWER_KEY_IPV4_SRC,

724 &mask->ipv4.src, TCA_FLOWER_KEY_IPV4_SRC_MASK,

725 sizeof(key->ipv4.src)) ||

726 fl_dump_key_val(skb, &key->ipv4.dst, TCA_FLOWER_KEY_IPV4_DST,

727 &mask->ipv4.dst, TCA_FLOWER_KEY_IPV4_DST_MASK,

728 sizeof(key->ipv4.dst))))

729 goto nla_put_failure;

730 else if (key->control.addr_type == FLOW_DISSECTOR_KEY_IPV6_ADDRS &&

731 (fl_dump_key_val(skb, &key->ipv6.src, TCA_FLOWER_KEY_IPV6_SRC,

732 &mask->ipv6.src, TCA_FLOWER_KEY_IPV6_SRC_MASK,

733 sizeof(key->ipv6.src)) ||

734 fl_dump_key_val(skb, &key->ipv6.dst, TCA_FLOWER_KEY_IPV6_DST,

735 &mask->ipv6.dst, TCA_FLOWER_KEY_IPV6_DST_MASK,

736 sizeof(key->ipv6.dst))))

737 goto nla_put_failure;

738

739 if (key->basic.ip_proto == IPPROTO_TCP &&

740 (fl_dump_key_val(skb, &key->tp.src, TCA_FLOWER_KEY_TCP_SRC,

741 &mask->tp.src, TCA_FLOWER_UNSPEC,

742 sizeof(key->tp.src)) ||

743 fl_dump_key_val(skb, &key->tp.dst, TCA_FLOWER_KEY_TCP_DST,

744 &mask->tp.dst, TCA_FLOWER_UNSPEC,

745 sizeof(key->tp.dst))))

746 goto nla_put_failure;

747 else if (key->basic.ip_proto == IPPROTO_UDP &&

748 (fl_dump_key_val(skb, &key->tp.src, TCA_FLOWER_KEY_UDP_SRC,

749 &mask->tp.src, TCA_FLOWER_UNSPEC,

750 sizeof(key->tp.src)) ||

751 fl_dump_key_val(skb, &key->tp.dst, TCA_FLOWER_KEY_UDP_DST,

752 &mask->tp.dst, TCA_FLOWER_UNSPEC,

753 sizeof(key->tp.dst))))

754 goto nla_put_failure;

755

756 nla_put_u32(skb, TCA_FLOWER_FLAGS, f->flags);

757

758 if (tcf_exts_dump(skb, &f->exts))

759 goto nla_put_failure;

760

761 nla_nest_end(skb, nest);

762

763 if (tcf_exts_dump_stats(skb, &f->exts) < 0)

764 goto nla_put_failure;

765

766 return skb->len;

767

768nla_put_failure:

769 nla_nest_cancel(skb, nest);

770 return -1;

771}

772

tcf_proto_ops cls_fl_ops

773static struct tcf_proto_ops cls_fl_ops __read_mostly = {

774 .kind = "flower",

775 .classify = fl_classify,

776 .init = fl_init,

777 .destroy = fl_destroy,

778 .get = fl_get,

779 .change = fl_change,

780 .delete = fl_delete,

781 .walk = fl_walk,

782 .dump = fl_dump,

783 .owner = THIS_MODULE,

784};

785

786static int __init cls_fl_init(void)

787{

788 return register_tcf_proto_ops(&cls_fl_ops);

789}

790

791static void __exit cls_fl_exit(void)

792{

793 unregister_tcf_proto_ops(&cls_fl_ops);

794}

795

796module_init(cls_fl_init);

797module_exit(cls_fl_exit);

798

799MODULE_AUTHOR("Jiri Pirko <jiri@resnulli.us>");

800MODULE_DESCRIPTION("Flower classifier");

801MODULE_LICENSE("GPL v2");

802

cls_bpf

static struct tcf_proto_ops cls_bpf_ops __read_mostly = {
	.kind		= "bpf",
	.owner		= THIS_MODULE,
	.classify	= cls_bpf_classify,
	.init		= cls_bpf_init,
	.destroy	= cls_bpf_destroy,
	.get		= cls_bpf_get,
	.change		= cls_bpf_change,
	.delete		= cls_bpf_delete,
	.walk		= cls_bpf_walk,
	.dump		= cls_bpf_dump,
	.bind_class	= cls_bpf_bind_class,
};

static int cls_bpf_change(struct net *net, struct sk_buff *in_skb,
			  struct tcf_proto *tp, unsigned long base,
			  u32 handle, struct nlattr **tca,
			  void **arg, bool ovr)
{
	struct cls_bpf_head *head = rtnl_dereference(tp->root);
	struct cls_bpf_prog *oldprog = *arg;
	struct nlattr *tb[TCA_BPF_MAX + 1];
	struct cls_bpf_prog *prog;
	int ret;

	if (tca[TCA_OPTIONS] == NULL)
		return -EINVAL;

	ret = nla_parse_nested(tb, TCA_BPF_MAX, tca[TCA_OPTIONS], bpf_policy,
			       NULL);
	if (ret < 0)
		return ret;

	prog = kzalloc(sizeof(*prog), GFP_KERNEL);
	if (!prog)
		return -ENOBUFS;

	ret = tcf_exts_init(&prog->exts, TCA_BPF_ACT, TCA_BPF_POLICE);
	if (ret < 0)
		goto errout;

	if (oldprog) {
		if (handle && oldprog->handle != handle) {
			ret = -EINVAL;
			goto errout;
		}
	}

	if (handle == 0)
		prog->handle = cls_bpf_grab_new_handle(tp, head);
	else
		prog->handle = handle;
	if (prog->handle == 0) {
		ret = -EINVAL;
		goto errout;
	}

	ret = cls_bpf_set_parms(net, tp, prog, base, tb, tca[TCA_RATE], ovr);
	if (ret < 0)
		goto errout;

	ret = cls_bpf_offload(tp, prog, oldprog);
	if (ret) {
		__cls_bpf_delete_prog(prog);
		return ret;
	}

	if (!tc_in_hw(prog->gen_flags))
		prog->gen_flags |= TCA_CLS_FLAGS_NOT_IN_HW;

	if (oldprog) {
		list_replace_rcu(&oldprog->link, &prog->link);
		tcf_unbind_filter(tp, &oldprog->res);
		call_rcu(&oldprog->rcu, cls_bpf_delete_prog_rcu);
	} else {
		list_add_rcu(&prog->link, &head->plist);
	}

	*arg = prog;
	return 0;

errout:
	tcf_exts_destroy(&prog->exts);
	kfree(prog);
	return ret;
}

bpf action

static struct tc_action_ops act_bpf_ops __read_mostly = {
	.kind		= "bpf",
	.id		= TCA_ID_BPF,
	.owner		= THIS_MODULE,
	.act		= tcf_bpf_act,
	.dump		= tcf_bpf_dump,
	.cleanup	= tcf_bpf_cleanup,
	.init		= tcf_bpf_init,
	.walk		= tcf_bpf_walker,
	.lookup		= tcf_bpf_search,
	.size		= sizeof(struct tcf_bpf),
};

static __net_init int bpf_init_net(struct net *net)
{
	struct tc_action_net *tn = net_generic(net, bpf_net_id);

	return tc_action_net_init(net, tn, &act_bpf_ops);
}

static int __init bpf_init_module(void)
{
	return tcf_register_action(&act_bpf_ops, &bpf_net_ops);
}

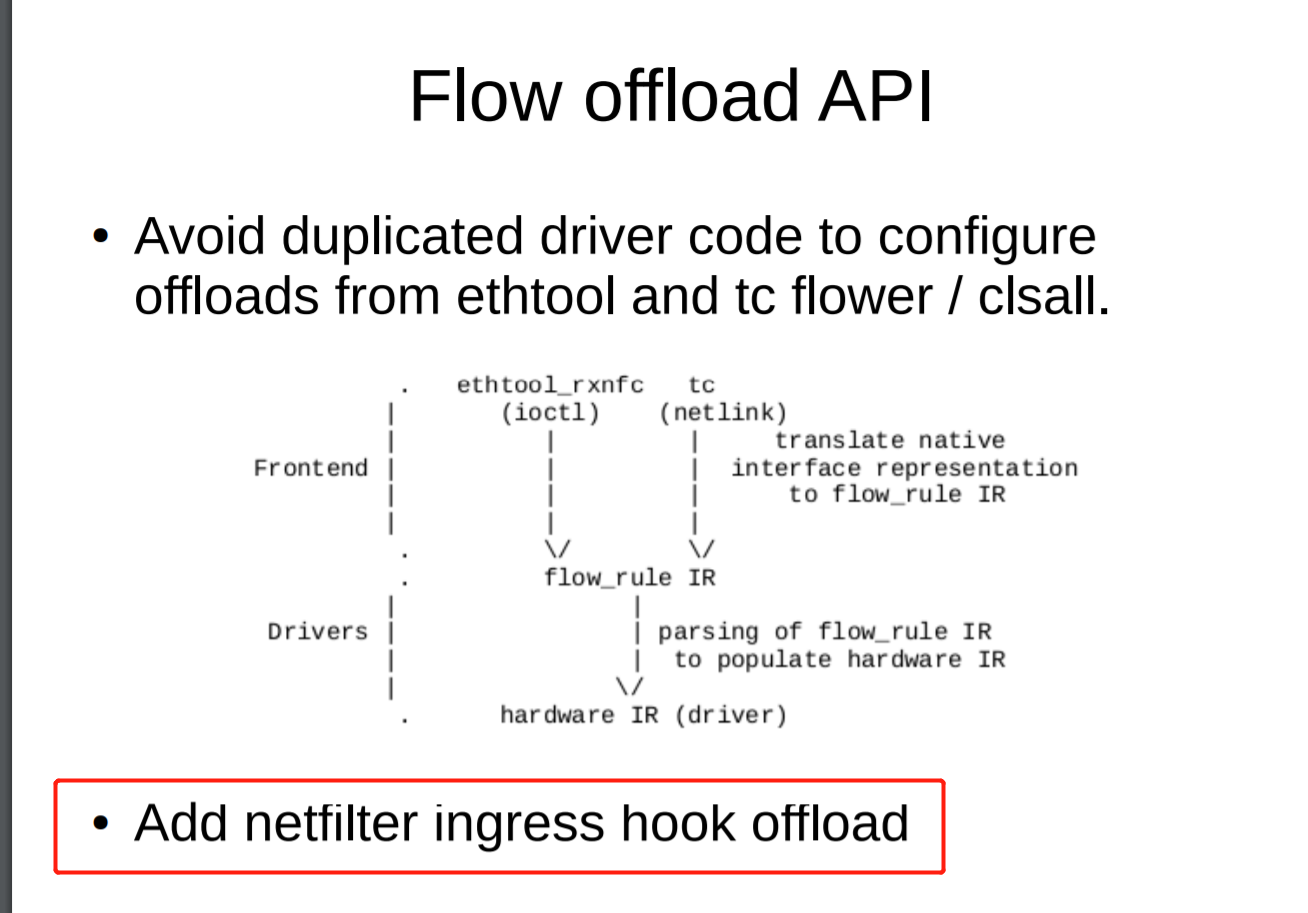

Linux flow offload: improving routing and forwarding efficiency

https://blog.csdn.net/dog250/article/details/103422860