The paper uses an RNN to perform text classification.

Paper link: 88888888

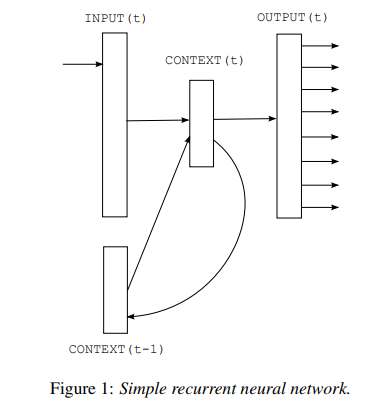

Model architecture:

For the theory, please refer to the paper. Code and comments (from https://github.com/graykode/nlp-tutorial):

```python
# -*- coding: utf-8 -*-
# @time : 2019/11/9 15:12

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim

sentences = ["i like dog", "i love coffee", "i hate milk"]

word_list = " ".join(sentences).split()
word_list = list(set(word_list))
word_dict = {w: i for i, w in enumerate(word_list)}
number_dict = {i: w for i, w in enumerate(word_list)}
n_class = len(word_dict)

# TextRNN parameters
batch_size = len(sentences)
n_step = 2    # number of cells (= number of time steps)
n_hidden = 5  # number of hidden units in one cell


def make_batch(sentences):
    input_batch = []
    target_batch = []

    for sen in sentences:
        word = sen.split()
        input = [word_dict[n] for n in word[:-1]]  # every word except the last
        target = word_dict[word[-1]]               # the last word is the label

        input_batch.append(np.eye(n_class)[input])  # one-hot encode the inputs
        target_batch.append(target)

    return input_batch, target_batch


# Convert to torch tensors
input_batch, target_batch = make_batch(sentences)
input_batch = torch.tensor(np.array(input_batch), dtype=torch.float32)
target_batch = torch.tensor(target_batch, dtype=torch.long)


class TextRNN(nn.Module):
    def __init__(self):
        super(TextRNN, self).__init__()

        self.rnn = nn.RNN(input_size=n_class, hidden_size=n_hidden, batch_first=True)
        self.W = nn.Parameter(torch.randn(n_hidden, n_class))
        self.b = nn.Parameter(torch.randn(n_class))

    def forward(self, hidden, X):
        if self.rnn.batch_first:
            # X : [batch_size, n_step, n_class]
            outputs, hidden = self.rnn(X, hidden)

            # outputs : [batch_size, n_step, num_directions(=1) * n_hidden]
            output = outputs[:, -1, :]  # [batch_size, num_directions(=1) * n_hidden]
            model = torch.mm(output, self.W) + self.b  # model : [batch_size, n_class]
            return model
        else:
            X = X.transpose(0, 1)  # X : [n_step, batch_size, n_class]
            outputs, hidden = self.rnn(X, hidden)
            # outputs : [n_step, batch_size, num_directions(=1) * n_hidden]
            # hidden : [num_layers(=1) * num_directions(=1), batch_size, n_hidden]

            output = outputs[-1, :, :]  # [batch_size, num_directions(=1) * n_hidden]
            model = torch.mm(output, self.W) + self.b  # model : [batch_size, n_class]
            return model


model = TextRNN()

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Training
for epoch in range(5000):
    optimizer.zero_grad()

    # hidden : [num_layers * num_directions, batch_size, n_hidden]
    hidden = torch.zeros(1, batch_size, n_hidden)
    # input_batch : [batch_size, n_step, n_class]
    output = model(hidden, input_batch)

    # output : [batch_size, n_class], target_batch : [batch_size] (LongTensor, not one-hot)
    loss = criterion(output, target_batch)
    if (epoch + 1) % 1000 == 0:
        print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss.item()))

    loss.backward()
    optimizer.step()

# Predict
hidden_initial = torch.zeros(1, batch_size, n_hidden)
predict = model(hidden_initial, input_batch).data.max(1, keepdim=True)[1]
print([sen.split()[:2] for sen in sentences], '->', [number_dict[n.item()] for n in predict.squeeze()])
```
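The batching step in the listing above relies on indexing an identity matrix to build one-hot vectors. The following is a minimal NumPy-only sketch of that idea. The names `vocab`, `word_to_idx`, and `encode` are hypothetical, and sorting the vocabulary is an assumption added here so that the word-to-index mapping is the same on every run (a plain `set` has no guaranteed order across runs).

```python
import numpy as np

sentences = ["i like dog", "i love coffee", "i hate milk"]

# Assumption of this sketch: sort the vocabulary so indices are reproducible.
vocab = sorted(set(" ".join(sentences).split()))
word_to_idx = {w: i for i, w in enumerate(vocab)}
n_class = len(vocab)


def encode(sentence):
    """Return (one-hot inputs for all but the last word, index of the last word)."""
    words = sentence.split()
    ids = [word_to_idx[w] for w in words[:-1]]
    # Indexing the identity matrix with a list of ids picks one row per id,
    # and row i of the identity matrix is the one-hot vector for class i.
    return np.eye(n_class)[ids], word_to_idx[words[-1]]


inputs, targets = zip(*(encode(s) for s in sentences))
input_batch = np.stack(inputs)     # shape: [batch_size, n_step, n_class]
target_batch = np.array(targets)   # shape: [batch_size]

print(vocab)
print(input_batch.shape, target_batch.shape)
```

Each input row contains exactly one `1`, and the targets stay as integer class indices because `nn.CrossEntropyLoss` expects indices rather than one-hot vectors.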

RNN model with LSTM units:

```python
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim

char_arr = [c for c in 'abcdefghijklmnopqrstuvwxyz']
word_dict = {n: i for i, n in enumerate(char_arr)}
number_dict = {i: w for i, w in enumerate(char_arr)}
n_class = len(word_dict)  # number of classes (= vocabulary size)

seq_data = ['make', 'need', 'coal', 'word', 'love', 'hate', 'live', 'home', 'hash', 'star']

# TextLSTM parameters
n_step = 3
n_hidden = 128


def make_batch(seq_data):
    input_batch, target_batch = [], []

    for seq in seq_data:
        input = [word_dict[n] for n in seq[:-1]]  # 'm', 'a', 'k' is the input
        target = word_dict[seq[-1]]               # 'e' is the target
        input_batch.append(np.eye(n_class)[input])
        target_batch.append(target)

    return (torch.tensor(np.array(input_batch), dtype=torch.float32),
            torch.tensor(target_batch, dtype=torch.long))


class TextLSTM(nn.Module):
    def __init__(self):
        super(TextLSTM, self).__init__()

        self.lstm = nn.LSTM(input_size=n_class, hidden_size=n_hidden)
        self.W = nn.Parameter(torch.randn(n_hidden, n_class))
        self.b = nn.Parameter(torch.randn(n_class))

    def forward(self, X):
        input = X.transpose(0, 1)  # input : [n_step, batch_size, n_class]

        # both states : [num_layers(=1) * num_directions(=1), batch_size, n_hidden]
        hidden_state = torch.zeros(1, len(X), n_hidden)
        cell_state = torch.zeros(1, len(X), n_hidden)

        outputs, (_, _) = self.lstm(input, (hidden_state, cell_state))
        outputs = outputs[-1]  # [batch_size, n_hidden]
        model = torch.mm(outputs, self.W) + self.b  # model : [batch_size, n_class]
        return model


input_batch, target_batch = make_batch(seq_data)

model = TextLSTM()

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Training
for epoch in range(1000):
    output = model(input_batch)
    loss = criterion(output, target_batch)
    if (epoch + 1) % 100 == 0:
        print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss.item()))

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

inputs = [sen[:3] for sen in seq_data]

predict = model(input_batch).data.max(1, keepdim=True)[1]
print(inputs, '->', [number_dict[n.item()] for n in predict.squeeze()])
```
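To make the tensor shapes in the LSTM listing easier to follow, here is a small shape check. It is only a sketch: it feeds random data instead of the one-hot character batch, and the sizes are copied from the listing as assumptions. It also shows that, for a single unidirectional layer, the last time step of `outputs` is the same tensor as the final hidden state `h_n[0]`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Sizes assumed from the listing: 3 input characters, 10 words, 26 letters.
n_step, batch_size, n_class, n_hidden = 3, 10, 26, 128
lstm = nn.LSTM(input_size=n_class, hidden_size=n_hidden)  # batch_first=False by default

x = torch.randn(batch_size, n_step, n_class)  # batch-major, like make_batch returns
x_time_major = x.transpose(0, 1)              # [n_step, batch_size, n_class]

# When the initial states are omitted, nn.LSTM starts from zeros.
outputs, (h_n, c_n) = lstm(x_time_major)

print(outputs.shape)  # [n_step, batch_size, n_hidden]
print(h_n.shape)      # [num_layers * num_directions, batch_size, n_hidden]

# For one unidirectional layer, the last output step equals the final hidden state.
same = torch.allclose(outputs[-1], h_n[0])
print(same)
```

Because the default initial states are zeros, passing explicit zero tensors as in the listing and omitting them should behave the same way.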

BiLSTM RNN model:

```python
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim

sentence = (
    'Lorem ipsum dolor sit amet consectetur adipisicing elit '
    'sed do eiusmod tempor incididunt ut labore et dolore magna '
    'aliqua Ut enim ad minim veniam quis nostrud exercitation'
)

# Build the vocabulary once so both dictionaries share the same ordering
word_list = list(set(sentence.split()))
word_dict = {w: i for i, w in enumerate(word_list)}
number_dict = {i: w for i, w in enumerate(word_list)}
n_class = len(word_dict)
max_len = len(sentence.split())
n_hidden = 5


def make_batch(sentence):
    input_batch = []
    target_batch = []

    words = sentence.split()
    for i, word in enumerate(words[:-1]):
        input = [word_dict[n] for n in words[:(i + 1)]]  # prefix of length i + 1
        input = input + [0] * (max_len - len(input))     # pad to max_len with index 0
        target = word_dict[words[i + 1]]                 # the next word is the target
        input_batch.append(np.eye(n_class)[input])
        target_batch.append(target)

    return (torch.tensor(np.array(input_batch), dtype=torch.float32),
            torch.tensor(target_batch, dtype=torch.long))


class BiLSTM(nn.Module):
    def __init__(self):
        super(BiLSTM, self).__init__()

        self.lstm = nn.LSTM(input_size=n_class, hidden_size=n_hidden, bidirectional=True)
        self.W = nn.Parameter(torch.randn(n_hidden * 2, n_class))
        self.b = nn.Parameter(torch.randn(n_class))

    def forward(self, X):
        input = X.transpose(0, 1)  # input : [n_step, batch_size, n_class]

        # both states : [num_layers(=1) * num_directions(=2), batch_size, n_hidden]
        hidden_state = torch.zeros(1 * 2, len(X), n_hidden)
        cell_state = torch.zeros(1 * 2, len(X), n_hidden)

        outputs, (_, _) = self.lstm(input, (hidden_state, cell_state))
        outputs = outputs[-1]  # [batch_size, n_hidden * 2]
        model = torch.mm(outputs, self.W) + self.b  # model : [batch_size, n_class]
        return model


input_batch, target_batch = make_batch(sentence)

model = BiLSTM()

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Training
for epoch in range(10000):
    output = model(input_batch)
    loss = criterion(output, target_batch)
    if (epoch + 1) % 1000 == 0:
        print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss.item()))

    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

predict = model(input_batch).data.max(1, keepdim=True)[1]
print(sentence)
print([number_dict[n.item()] for n in predict.squeeze()])
```
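With `bidirectional=True`, the last dimension of `outputs` holds the forward and backward hidden states side by side, so it is worth checking what `outputs[-1]` actually contains. The sketch below uses random input and small sizes chosen only for illustration (assumptions, not the values from the listing). The names `fwd_last`, `bwd_last`, and `bwd_first` are hypothetical.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Illustrative sizes, assumed for this sketch only.
n_step, batch_size, n_class, n_hidden = 6, 4, 8, 5
lstm = nn.LSTM(input_size=n_class, hidden_size=n_hidden, bidirectional=True)

x = torch.randn(n_step, batch_size, n_class)  # already time-major
outputs, (h_n, c_n) = lstm(x)

# outputs : [n_step, batch_size, 2 * n_hidden]
# h_n     : [num_layers(=1) * num_directions(=2), batch_size, n_hidden]
fwd_last = outputs[-1, :, :n_hidden]   # forward state after reading every step
bwd_last = outputs[-1, :, n_hidden:]   # backward state after reading only the last step
bwd_first = outputs[0, :, n_hidden:]   # backward state after reading every step

fwd_matches = torch.allclose(fwd_last, h_n[0])
bwd_matches = torch.allclose(bwd_first, h_n[1])
print(outputs.shape, h_n.shape)
print(fwd_matches, bwd_matches)
```

In other words, the backward half of `outputs[-1]` has seen only the final time step. If a summary of the whole sequence in both directions is wanted, one possible alternative is `torch.cat([h_n[0], h_n[1]], dim=1)`, which has the same `[batch_size, 2 * n_hidden]` shape.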