Installing Kubernetes (K8s)

Installing K8s with kubeadm:

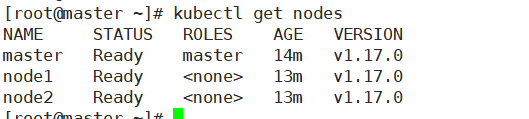

Environment: three hosts, one master and two nodes.

Hardware requirements for each of the three hosts:

· At least 2 GB of RAM

· At least 2 CPUs

Edit the hosts file:

[root@master ~]# cat /etc/hosts
192.168.172.134 master
192.168.172.135 node1
192.168.172.136 node2
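You may prefer not to edit /etc/hosts by hand on every host. In that case, a minimal sketch like the one below appends each name/IP pair only when it is missing, so it is safe to re-run. The function name `add_host_entry` and its optional third argument (the file to edit, defaulting to /etc/hosts) are hypothetical. They exist so the logic can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Sketch: idempotently add a "IP hostname" line to a hosts file.
# add_host_entry is a hypothetical helper; the file defaults to /etc/hosts.

add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Exit 0 if some uncommented line has the IP as its first field and the
    # hostname among the following fields (stopping at a trailing comment).
    if awk -v ip="$ip" -v name="$name" '
        $1 == ip {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break
                if ($i == name) found = 1
            }
        }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    # Not present yet: append it.
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On each host you would then run, for example, `add_host_entry 192.168.172.134 master` once for each of the three entries.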

Disable swap, SELinux, and the firewall (acceptable in a lab environment), and set the swap kernel parameter:

To disable swap permanently, just comment out the swap line in /etc/fstab:

cat /etc/fstab
#/dev/mapper/cl-swap swap swap defaults 0 0
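The same edit can be scripted instead of done in an editor. This is a minimal sketch that assumes GNU sed (as on CentOS). It prefixes `#` to every uncommented fstab line whose third field (the filesystem type) is `swap`. The function name `disable_swap_in_fstab` and the `.bak` backup are illustrative additions, not part of the original steps.

```shell
#!/usr/bin/env bash
# Sketch: comment out every active swap entry in an fstab-style file.
# disable_swap_in_fstab is a hypothetical helper; GNU sed (-i, -E) is assumed.

disable_swap_in_fstab() {
    local fstab="${1:-/etc/fstab}"
    # Keep a copy of the file before editing it in place.
    cp -p "$fstab" "${fstab}.bak"
    # Match uncommented lines whose third whitespace-separated field is "swap"
    # and prefix them with "#". Lines already starting with "#" do not match,
    # so running this twice is harmless.
    sed -i -E '/^[[:space:]]*[^#[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]]/ s/^/#/' "$fstab"
}
```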

Disable SELinux:

vim /etc/selinux/config

SELINUX=disabled ## change this to disabled
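The change in /etc/selinux/config only takes effect after a reboot. `setenforce 0` switches the running system to permissive mode in the meantime. The sketch below does both. It assumes GNU sed, and `disable_selinux` is a hypothetical name. `setenforce` is only called when the real config path is being edited.

```shell
#!/usr/bin/env bash
# Sketch: set SELINUX=disabled without opening vim.
# disable_selinux is a hypothetical helper; GNU sed is assumed.

disable_selinux() {
    local conf="${1:-/etc/selinux/config}"
    # Rewrite the SELINUX= line; SELINUXTYPE= does not match "^SELINUX=".
    sed -i -E 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
    # The file is read at boot; on the real system also go permissive now.
    if [ "$conf" = /etc/selinux/config ] && command -v setenforce >/dev/null 2>&1; then
        setenforce 0 || true   # fails harmlessly if SELinux is already disabled
    fi
}
```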

Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

Set the swap kernel parameter:

vim /etc/sysctl.conf

vm.swappiness = 0 ## add this line

Turn off swap on the running system (the fstab change only takes effect at the next boot):

swapoff -a
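By default, kubeadm's preflight checks and the kubelet refuse to run while swap is active. It is therefore worth confirming that `swapoff -a` worked. /proc/swaps contains a header line plus one line per active swap area. The hypothetical helper below succeeds only when nothing follows the header.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if no swap area is active.
# swap_is_off is a hypothetical helper; it reads /proc/swaps by default.

swap_is_off() {
    local swaps="${1:-/proc/swaps}"
    # Count non-empty lines after the header; zero means swap is off.
    [ "$(tail -n +2 "$swaps" | grep -c .)" -eq 0 ]
}
```

Usage: `swap_is_off && echo "swap is off"`.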

Configure bridged networking:

First load the module: modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

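Reload and check (plain sysctl -p reads only /etc/sysctl.conf and would skip /etc/sysctl.d/k8s.conf; sysctl --system loads both and prints every value it applies):

sysctl --system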
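`modprobe br_netfilter` only loads the module until the next reboot. On a systemd host such as CentOS 7, listing the module in /etc/modules-load.d/ makes it load at boot. The sketch below writes that file and checks that both bridge sysctls read `1`. The helper names are hypothetical. So is the optional root-directory argument, which defaults to the real `/` and lets the functions be exercised against a scratch directory.

```shell
#!/usr/bin/env bash
# Sketch: persist br_netfilter across reboots and verify the bridge sysctls.
# persist_br_netfilter and bridge_sysctls_ok are hypothetical helpers.

persist_br_netfilter() {
    local root="${1:-}"
    mkdir -p "$root/etc/modules-load.d"
    # systemd-modules-load reads one module name per line from this directory.
    echo br_netfilter > "$root/etc/modules-load.d/k8s.conf"
}

bridge_sysctls_ok() {
    local root="${1:-}" key val
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        # These /proc files only exist while br_netfilter is loaded.
        val="$(cat "$root/proc/sys/net/bridge/$key" 2>/dev/null)" || return 1
        [ "$val" = "1" ] || return 1
    done
}
```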

Install Docker (on all three hosts):

yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum makecache fast
yum -y install docker-ce-18.06.3.ce-3.el7

Start the service and enable it at boot:

systemctl start docker

systemctl enable docker

On all three hosts, add the Kubernetes yum repository:

[root@master ~]# cat /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
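The listing above shows the repo file's contents but not how it was created. A here-document writes the same seven lines in one step. This is a sketch: `write_k8s_repo` and its path argument are hypothetical, and the default path is the one shown above.

```shell
#!/usr/bin/env bash
# Sketch: write the Kubernetes yum repo file shown above.
# write_k8s_repo is a hypothetical helper.

write_k8s_repo() {
    local repo="${1:-/etc/yum.repos.d/kubernetes.repo}"
    # Quoting 'EOF' stops the shell from expanding anything in the body.
    cat > "$repo" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```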

Install Kubernetes (kubelet, kubeadm, and kubectl, all pinned to 1.17.0):

yum -y install kubelet-1.17.0 kubeadm-1.17.0 kubectl-1.17.0

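Start kubelet and enable it at boot (kubelet keeps restarting until kubeadm init or kubeadm join gives it a configuration; this is expected):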

systemctl start kubelet.service && systemctl enable kubelet

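Pull the images from the Aliyun mirror, re-tag them with the k8s.gcr.io names that kubeadm expects, then delete the mirror tags: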

[root@master ~]# vim caoyi.sh

caoyi=registry.aliyuncs.com/google_containers

source=k8s.gcr.io

docker pull $caoyi/kube-apiserver:v1.17.0

docker pull $caoyi/kube-controller-manager:v1.17.0

docker pull $caoyi/kube-scheduler:v1.17.0

docker pull $caoyi/kube-proxy:v1.17.0

docker pull $caoyi/pause:3.1

docker pull $caoyi/etcd:3.4.3-0

docker pull $caoyi/coredns:1.6.5

docker tag $caoyi/kube-apiserver:v1.17.0 $source/kube-apiserver:v1.17.0

docker tag $caoyi/kube-controller-manager:v1.17.0 $source/kube-controller-manager:v1.17.0

docker tag $caoyi/kube-scheduler:v1.17.0 $source/kube-scheduler:v1.17.0

docker tag $caoyi/kube-proxy:v1.17.0 $source/kube-proxy:v1.17.0

docker tag $caoyi/pause:3.1 $source/pause:3.1

docker tag $caoyi/etcd:3.4.3-0 $source/etcd:3.4.3-0

docker tag $caoyi/coredns:1.6.5 $source/coredns:1.6.5

docker rmi $caoyi/kube-apiserver:v1.17.0

docker rmi $caoyi/kube-controller-manager:v1.17.0

docker rmi $caoyi/kube-scheduler:v1.17.0

docker rmi $caoyi/kube-proxy:v1.17.0

docker rmi $caoyi/pause:3.1

docker rmi $caoyi/etcd:3.4.3-0

docker rmi $caoyi/coredns:1.6.5
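The 21 commands in caoyi.sh follow one pattern per image: pull, tag, rmi. They can therefore be written as a loop over a single image list. This version handles one image at a time rather than pulling all, then tagging all, then removing all, which ends in the same state. The `DRY_RUN` switch and the `run`/`pull_and_retag` helpers are hypothetical additions that let you print the commands before executing them. `kubeadm config images list --kubernetes-version v1.17.0` prints the image list kubeadm itself expects, which is useful for cross-checking the array.

```shell
#!/usr/bin/env bash
# Sketch: caoyi.sh as a loop. With DRY_RUN=1 the docker commands are printed
# instead of executed (DRY_RUN, run and pull_and_retag are hypothetical).

caoyi=registry.aliyuncs.com/google_containers
source=k8s.gcr.io
images=(
    kube-apiserver:v1.17.0
    kube-controller-manager:v1.17.0
    kube-scheduler:v1.17.0
    kube-proxy:v1.17.0
    pause:3.1
    etcd:3.4.3-0
    coredns:1.6.5
)

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

pull_and_retag() {
    local img
    for img in "${images[@]}"; do
        run docker pull "$caoyi/$img" || return 1
        run docker tag "$caoyi/$img" "$source/$img" || return 1
        run docker rmi "$caoyi/$img" || return 1
    done
}
```

Usage: `DRY_RUN=1 pull_and_retag` to review the commands, then `pull_and_retag` to run them.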

Pull the flannel image:

docker pull quay.io/coreos/flannel:v0.12.0-amd64

All three hosts need to do this.

Configure the network:

[root@master ~]# mkdir -p /etc/cni/net.d/
[root@master ~]# cat /etc/cni/net.d/10-flannel.conf
{"name":"cbr0","type":"flannel","delegate":{"isDefaultGateway":true}}
[root@master ~]# mkdir /usr/share/oci-umount/oci-umount.d -p
[root@master ~]# mkdir /run/flannel/
[root@master ~]# cat /run/flannel/subnet.env
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
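The listing shows the two flannel files' contents but not how they were written. Here is a sketch that creates the directories and both files in one go. `write_flannel_files` and its optional root-directory argument (default: the real `/`) are hypothetical.

- FLANNEL_NETWORK has to match the `--pod-network-cidr 10.244.0.0/16` passed to kubeadm init below.
- /run is a tmpfs, so subnet.env is lost on reboot. The flannel pod normally rewrites it once it is running.

```shell
#!/usr/bin/env bash
# Sketch: create the CNI config and flannel subnet file shown above.
# write_flannel_files is a hypothetical helper.

write_flannel_files() {
    local root="${1:-}"
    mkdir -p "$root/etc/cni/net.d" "$root/run/flannel" "$root/usr/share/oci-umount/oci-umount.d"
    cat > "$root/etc/cni/net.d/10-flannel.conf" <<'EOF'
{"name":"cbr0","type":"flannel","delegate":{"isDefaultGateway":true}}
EOF
    # Must agree with --pod-network-cidr given to kubeadm init.
    cat > "$root/run/flannel/subnet.env" <<'EOF'
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF
}
```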

Initialize the cluster on the master host:

kubeadm init --kubernetes-version=v1.17.0 --apiserver-advertise-address 192.168.172.134 --pod-network-cidr 10.244.0.0/16

After initialization finishes, follow the printed instructions and create the kubeconfig file on the master:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

As prompted, deploy the pod network to the cluster:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

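Because this URL is hosted overseas and may be unreachable, I uploaded the downloaded yml file to GitHub for easier access:

git clone https://github.com/mrlxxx/kube-flannel.yml.git
kubectl apply -f kube-flannel.yml

Then join the other two nodes to the cluster by running the join command printed by kubeadm init on node1 and node2: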

kubeadm join 192.168.172.134:6443 --token nwnany.7rvdbwfm4wjtxa62 --discovery-token-ca-cert-hash sha256:c44ea7dcf98f5611a34d239dd2fc3420ac4e2993b7aa874d70c01f90f1f8fe60
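The token and hash above belong to the author's cluster; use the values printed by your own kubeadm init.

- If the token has expired (bootstrap tokens last 24 hours by default), `kubeadm token create --print-join-command` on the master prints a fresh join command.
- If only the `--discovery-token-ca-cert-hash` value is lost, it can be recomputed from the cluster CA certificate with the openssl pipeline from the kubeadm documentation.

Below, that pipeline is wrapped in a hypothetical `ca_cert_hash` function. It assumes an RSA CA key, which is kubeadm's default.

```shell
#!/usr/bin/env bash
# Sketch: recompute the value for --discovery-token-ca-cert-hash.
# ca_cert_hash is a hypothetical wrapper around the openssl pipeline;
# it assumes the CA key is RSA.

ca_cert_hash() {
    local crt="${1:-/etc/kubernetes/pki/ca.crt}"
    # SHA-256 of the DER-encoded public key (SubjectPublicKeyInfo) of the CA;
    # the final sed keeps only the hex digest from openssl's output line.
    openssl x509 -pubkey -noout -in "$crt" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

Usage on the master: `echo "sha256:$(ca_cert_hash)"`.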

Note: if a node shows NotReady, apply the following configuration (on all three hosts):

# mkdir -p /etc/cni/net.d/
# cat <<EOF > /etc/cni/net.d/10-flannel.conf
{
  "name": "cbr0",
  "type": "flannel",
  "delegate": {
    "isDefaultGateway": true
  }
}
EOF
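To see which nodes need that fix, you can filter the output of `kubectl get nodes`. This is a minimal sketch. It assumes the default table layout, with NAME in column 1 and STATUS in column 2, and `not_ready_nodes` is a hypothetical name. A status such as `Ready,SchedulingDisabled` is still treated as Ready.

```shell
#!/usr/bin/env bash
# Sketch: print the names of nodes whose STATUS is not Ready.
# Reads `kubectl get nodes` output on stdin; not_ready_nodes is hypothetical.

not_ready_nodes() {
    # Skip the header row; "Ready" and "Ready,<something>" count as Ready.
    awk 'NR > 1 && $2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`.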

Finally, check the control-plane component status:

[root@master ~]# kubectl get cs
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}