1、ResNet

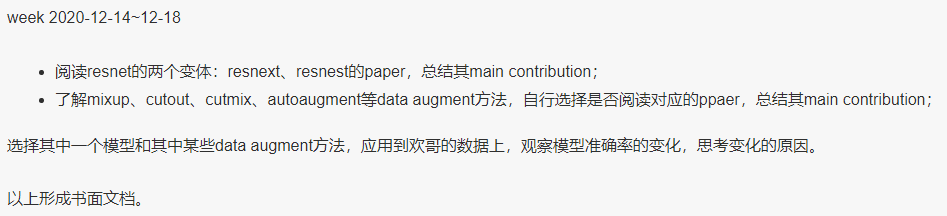

2、ResNext

2020-12-18

2020-12-18

3、ResNest

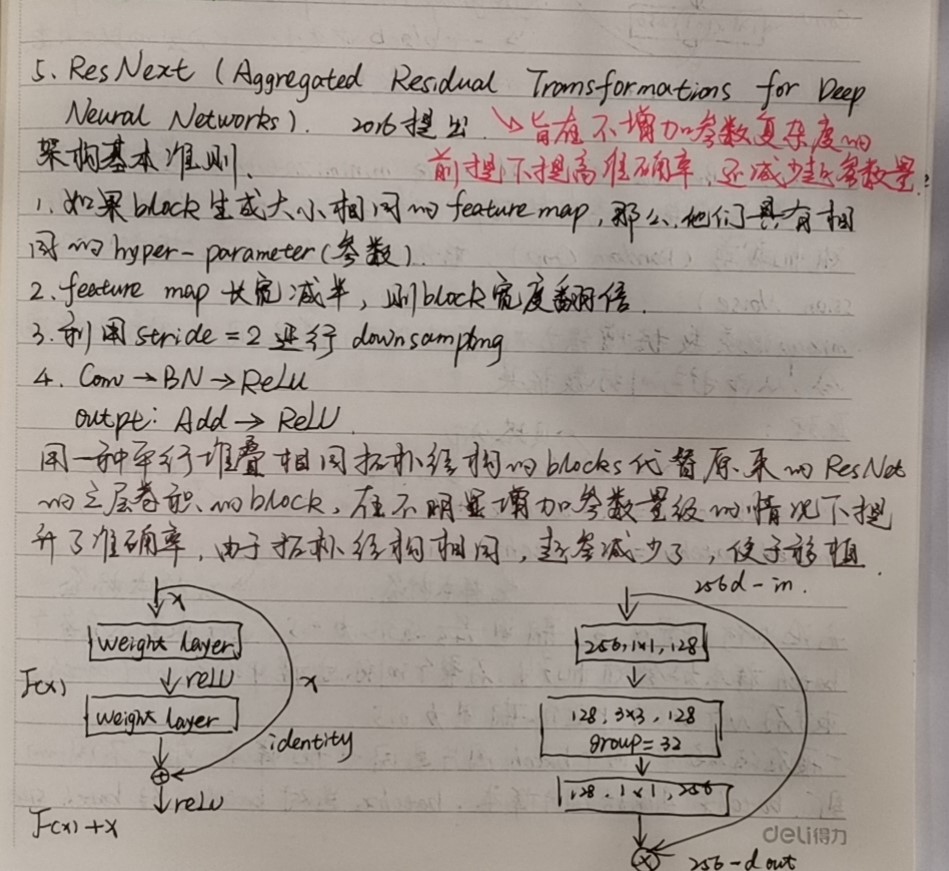

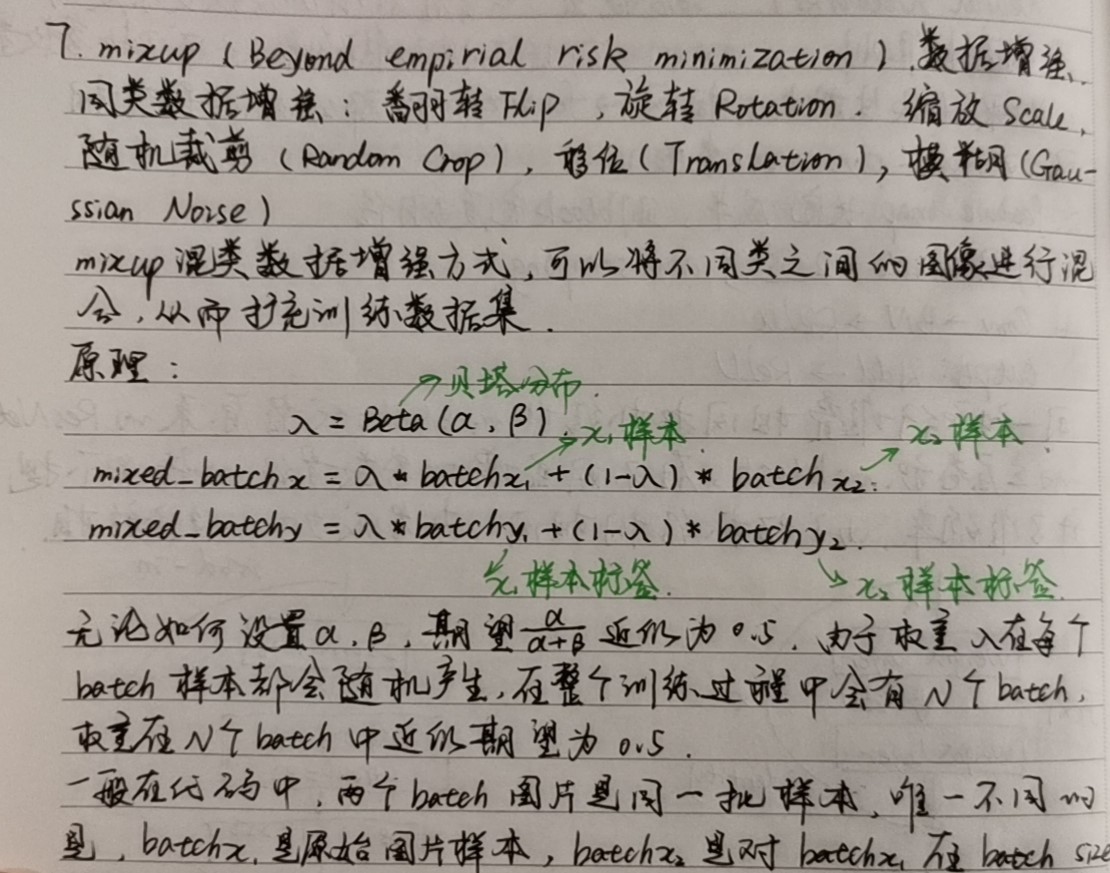

4、数据增强

其他的一些具体方法:

数据中心化

数据标准化

缩放

裁剪

旋转

翻转

填充

噪声添加

灰度变换

线性变换

仿射变换

亮度、饱和度及对比度变换

1. 裁剪

- 中心裁剪:transforms.CenterCrop(512); # 一个参数,宽高相等

- 随机裁剪:transforms.RandomCrop;

- 随机大小、长宽比裁剪:transforms.RandomResizedCrop;

- 上下左右中心裁剪:transforms.FiveCrop;

- 上下左右中心裁剪后翻转: transforms.TenCrop。

2. 翻转和旋转

- 依概率p水平翻转:transforms.RandomHorizontalFlip(p=0.5);

- 依概率p垂直翻转:transforms.RandomVerticalFlip(p=0.5);

- 随机旋转:transforms.RandomRotation(degrees, resample=False, expand=False, center=None)。

- degrees:旋转角度,当为一个数a时,在(-a,a)之间随机旋转

- resample:重采样方法

- expand:旋转时是否保持图片完整,只针对中心旋转

- center:设置旋转中心点

3. 随机遮挡

- 对图像进行随机遮挡: transforms.RandomErasing。

4. 图像变换

- 尺寸变换:transforms.Resize;

- 标准化:transforms.Normalize;

- 填充:transforms.Pad;

- 修改亮度、对比度和饱和度:transforms.ColorJitter;

- 转灰度图:transforms.Grayscale;

- 依概率p转为灰度图:transforms.RandomGrayscale;

- 线性变换:transforms.LinearTransformation();

- 仿射变换:transforms.RandomAffine;

- 将数据转换为PILImage:transforms.ToPILImage;

- 转为tensor,并归一化至[0-1]:transforms.ToTensor;

- 用户自定义方法:transforms.Lambda。

5. 对transforms的选择操作,使数据增强更灵活

- transforms.RandomChoice(transforms列表):从给定的一系列transforms中选一个进行操作;

- transforms.RandomApply(transforms列表, p=0.5):给一个transform加上概率,依概率进行选择操作;

- transforms.RandomOrder(transforms列表):将transforms中的操作随机打乱。

还有几个自定义的数据增强:

import numpy as np

from PIL import ImageDraw, Image

import matplotlib.pyplot as plt

def randomErasing(img, p=0.5, sl=0.02, sh=0.4, r1=0.3, r2=3):

"""

随机擦除

"""

if np.random.rand() > p:

return img

img = np.array(img)

while True:

img_h, img_w, img_c = img.shape

img_area = img_h * img_w

mask_area = np.random.uniform(sl, sh) * img_area

mask_aspect_ratio = np.random.uniform(r1, r2)

mask_w = int(np.sqrt(mask_area / mask_aspect_ratio))

mask_h = int(np.sqrt(mask_area * mask_aspect_ratio))

# 产生的mask区域

mask = np.random.rand(mask_h, mask_w, img_c) * 255

left = np.random.randint(0, img_w)

top = np.random.randint(0, img_h)

right = left + mask_w

bottom = top + mask_h

if right <= img_w and bottom <= img_h:

break

img[top:bottom, left:right, :] = mask

return Image.fromarray(img)

def randomOcclusion(img, p=0.5, sl=0.02, sh=0.4, r1=0.3, r2=3):

"""

随机遮挡

"""

if np.random.rand() > p:

return img

img = np.array(img)

while True:

h, w = img.shape[:2]

image_area = h * w

mask_area = np.random.uniform(sl, sh) * image_area

aspect_ratio = np.random.uniform(r1, r2)

mask_h = int(np.sqrt(mask_area * aspect_ratio))

mask_w = int(np.sqrt(mask_area / aspect_ratio))

x0 = np.random.randint(0, w - mask_w)

y0 = np.random.randint(0, h - mask_h)

x1 = x0 + mask_w

y1 = y0 + mask_h

img[y0:y1, x0:x1, :] = (0,244,255)

# http://www.yuangongju.com/color

if mask_w < w and mask_h < h:

break

return Image.fromarray(img)

def randomMirror(img, boxes, p=0.5):

"""

水平翻转

"""

if np.random.rand() > p:

return img

img = np.array(img)

height, width, _ = img.shape

# 图像水平翻转

img = img[:, ::-1, :]

labels = [0 for _ in range(8)]

# 改变标注框

labels[0] = width-1 - boxes[0]

labels[1] = boxes[1]

labels[2] = width-1 - boxes[2]

labels[3] = boxes[3]

labels[4] = width-1 - boxes[4]

labels[5] = boxes[5]

labels[6] = width-1 - boxes[6]

labels[7] = boxes[7]

print(labels)

img = Image.fromarray(img)

return img, labels

img = Image.open(r'E:wangpengcodemodelspytorch est1.jpg')

list = [300,100,100,100,100,50,300,50]

# Img = randomErasing(img, p=0.5, sl=0.02, sh=0.4, r1=0.3, r2=3)

# Img = randomOcclusion(img, p=0.8, sl=0.02, sh=0.4, r1=0.3, r2=3)

Img, labels = randomMirror(img, boxes=list, p=0.8)

draw = ImageDraw.Draw(Img)

draw.polygon(labels, outline = 'gold')

Img.show()

做的一个小任务,对6类场景进行分类:

对比结果如下:

对比了只用resnet50,只用resnext50_32x2d,resnext50_32x2d加数据增强,这是一个比赛:https://www.kaggle.com/puneet6060/intel-image-classification。用resnet50第8个epoch训练精度差不多稳定下来,其余两种精度也差不多类似;test的时候三种略微存在差异,resnet50稳定在92.5%左右,其余两种93.7%左右,主要是基于resnext的提升,数据增强在这里表现并不明显,可能是因为数据有点简单,单纯网络就已经能够达到最佳。比赛结果中比较乐观的表现也差不多93%左右。

我用的cutout,里面还有mixup,代码如下:

1 from __future__ import print_function, division 2 3 import torch 4 import torch.nn as nn 5 import torch.optim as optim 6 from torch.optim import lr_scheduler 7 from torch.autograd import Variable 8 from torchvision import models, transforms 9 import numpy as np 10 import time 11 import os 12 from torch.utils.data import Dataset 13 14 from PIL import Image 15 16 from models import resnext 17 18 # use PIL Image to read image 19 def default_loader(path): 20 try: 21 img = Image.open(path) 22 return img.convert('RGB') 23 except: 24 print("Cannot read image: {}".format(path)) 25 26 # define your Dataset. Assume each line in your .txt file is [name/tab/label], for example:0001.jpg 1 27 class Data(Dataset): 28 def __init__(self, img_path, txt_path, dataset = '', data_transforms=None, loader = default_loader): 29 with open(txt_path) as input_file: 30 lines = input_file.readlines() 31 self.img_name =A= [os.path.join(img_path, line.strip().split(' ')[0]) for line in lines] 32 self.img_label =B= [int(line.strip(' ').split(' ')[-1]) for line in lines] 33 34 35 self.data_transforms = data_transforms 36 self.dataset = dataset 37 self.loader = loader 38 39 def __len__(self): 40 return len(self.img_name) 41 42 def __getitem__(self, item): 43 img_name = self.img_name[item] 44 label = self.img_label[item] 45 img = self.loader(img_name) 46 47 if self.data_transforms is not None: 48 try: 49 img = self.data_transforms[self.dataset](img) 50 except: 51 print("Cannot transform image: {}".format(img_name)) 52 return img, label 53 54 def train_model(model, criterion, optimizer, scheduler, num_epochs, use_gpu): 55 since = time.time() 56 57 best_model_wts = model.state_dict() 58 best_acc = 0.0 59 count_batch = 0 60 61 for epoch in range(num_epochs): 62 begin_time = time.time() 63 print('Epoch {}/{}'.format(epoch, num_epochs - 1)) 64 print('-' * 10) 65 66 # Each epoch has a training and validation phase 67 for phase in ['train', 'test']: 68 69 if phase == 'train': 70 scheduler.step() 71 model.train(True) # Set model to training mode 72 else: 73 model.train(False) # Set model to evaluate mode 74 75 running_loss = 0.0 76 running_corrects = 0.0 77 78 # Iterate over data. 79 for data in dataloders[phase]: 80 count_batch += 1 81 # get the inputs 82 inputs, labels = data 83 84 # wrap them in Variable 85 if use_gpu: 86 inputs = Variable(inputs.cuda()) 87 labels = Variable(labels.cuda()) 88 else: 89 inputs, labels = Variable(inputs), Variable(labels) 90 91 # zero the parameter gradients 92 optimizer.zero_grad() 93 94 # forward 95 outputs = model(inputs) 96 _, preds = torch.max(outputs.data, 1) 97 loss = criterion(outputs, labels) 98 99 # backward + optimize only if in training phase 100 if phase == 'train': 101 loss.backward() 102 optimizer.step() 103 # statistics 104 # running_loss += loss.data[0] 105 running_loss += loss.item() 106 running_corrects += torch.sum(preds == labels.data).to(torch.float32) 107 108 # print result every 10 batch 109 if count_batch%10 == 0: 110 batch_loss = running_loss / (batch_size*count_batch) 111 batch_acc = running_corrects / (batch_size*count_batch) 112 print('{} Epoch [{}] Batch [{}] Loss: {:.4f} Acc: {:.4f} Time: {:.4f}s'. 113 format(phase, epoch, count_batch, batch_loss, batch_acc, time.time()-begin_time)) 114 begin_time = time.time() 115 116 epoch_loss = running_loss / dataset_sizes[phase] 117 epoch_acc = running_corrects / dataset_sizes[phase] 118 print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc)) 119 120 # save model 121 if phase == 'train': 122 if not os.path.exists('output'): 123 os.makedirs('output') 124 torch.save(model, 'output/resnet_epoch{}.pkl'.format(epoch)) 125 126 # deep copy the model 127 if phase == 'test' and epoch_acc > best_acc: 128 best_acc = epoch_acc 129 best_model_wts = model.state_dict() 130 131 time_elapsed = time.time() - since 132 print('Training complete in {:.0f}m {:.0f}s'.format( 133 time_elapsed // 60, time_elapsed % 60)) 134 print('Best test Acc: {:4f}'.format(best_acc)) 135 136 # load best model weights 137 model.load_state_dict(best_model_wts) 138 return model 139 140 141 ########################################################################################## 142 ## Cutout means 143 class Cutout(object): 144 """Randomly mask out one or more patches from an image. 145 Args: 146 n_holes (int): Number of patches to cut out of each image. 147 length (int): The length (in pixels) of each square patch. 148 """ 149 def __init__(self, n_holes, length): 150 self.n_holes = n_holes 151 self.length = length 152 153 def __call__(self, img): 154 """ 155 Args: 156 img (Tensor): Tensor image of size (C, H, W). 157 Returns: 158 Tensor: Image with n_holes of dimension length x length cut out of it. 159 """ 160 h = img.size(1) 161 w = img.size(2) 162 163 mask = np.ones((h, w), np.float32) 164 165 for n in range(self.n_holes): 166 # (x,y)is the sit of centor 167 y = np.random.randint(h) 168 x = np.random.randint(w) 169 170 y1 = np.clip(y - self.length // 2, 0, h) 171 y2 = np.clip(y + self.length // 2, 0, h) 172 x1 = np.clip(x - self.length // 2, 0, w) 173 x2 = np.clip(x + self.length // 2, 0, w) 174 175 mask[y1: y2, x1: x2] = 0. 176 177 mask = torch.from_numpy(mask) 178 mask = mask.expand_as(img) 179 img = img * mask 180 181 return img 182 ####################################################################################### 183 def mixup_data(x, y, alpha=1.0, use_cuda=True): 184 '''Returns mixed inputs, pairs of targets, and lambda''' 185 if alpha > 0: 186 lam = np.random.beta(alpha, alpha) 187 else: 188 lam = 1 189 190 batch_size = x.size()[0] 191 if use_cuda: 192 index = torch.randperm(batch_size).cuda() 193 else: 194 index = torch.randperm(batch_size) 195 196 mixed_x = lam * x + (1 - lam) * x[index, :] 197 y_a, y_b = y, y[index] 198 199 return mixed_x, y_a, y_b, lam 200 def mixup_criterion(criterion, pred, y_a, y_b, lam): 201 return lam * criterion(pred, y_a) + (1 - lam) * criterion(pred, y_b) 202 ########################################################################################## 203 204 if __name__ == '__main__': 205 206 mean = [0.4309895, 0.4576381, 0.4534026] 207 std = [0.2699252, 0.26827288, 0.29846913] 208 209 data_transforms = { 210 'train': transforms.Compose([ 211 transforms.Resize((150, 150)), 212 transforms.RandomHorizontalFlip(), 213 transforms.ToTensor(), 214 transforms.Normalize(mean, std), 215 Cutout(n_holes=1, length=16) 216 ]), 217 'test': transforms.Compose([ 218 transforms.Resize((150, 150)), 219 transforms.ToTensor(), 220 transforms.Normalize(mean, std), 221 ]), 222 } 223 224 use_gpu = torch.cuda.is_available() 225 226 batch_size = 16 227 num_class = 6 228 229 image_datasets = {x: Data(img_path='SceneClassification', 230 txt_path=('SceneClassification/' + x + '.txt'), 231 data_transforms=data_transforms, 232 dataset=x) for x in ['train', 'test']} 233 234 # wrap your data and label into Tensor 235 dataloders = {x: torch.utils.data.DataLoader(image_datasets[x], 236 batch_size=batch_size, 237 shuffle=True) for x in ['train', 'test']} 238 239 dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'test']} 240 241 # get model and replace the original fc layer with your fc layer 242 243 ### resnet50 resnext50_32x4d 244 245 model_ft = models.resnext50_32x4d(pretrained=True) 246 247 num_ftrs = model_ft.fc.in_features 248 model_ft.fc = nn.Linear(num_ftrs, num_class) 249 250 # if use gpu 251 if use_gpu: 252 model_ft = model_ft.cuda() 253 254 # define cost function 255 criterion = nn.CrossEntropyLoss() 256 257 # Observe that all parameters are being optimized 258 optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.005, momentum=0.9) 259 260 # Decay LR by a factor of 0.2 every 5 epochs 261 exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=5, gamma=0.2) 262 263 # multi-GPU 264 model_ft = torch.nn.DataParallel(model_ft, device_ids=[0]) 265 266 # train model 267 model_ft = train_model(model=model_ft, 268 criterion=criterion, 269 optimizer=optimizer_ft, 270 scheduler=exp_lr_scheduler, 271 num_epochs=25, 272 use_gpu=use_gpu) 273 274 # save best model 275 torch.save(model_ft, "output/best_resnet.pkl")

其中还写了一个计算自己数据集的men,std的代码一并放出:

1 import numpy as np 2 import cv2 3 import os 4 5 6 img_h, img_w = 150, 150 7 means, stdevs = [], [] 8 img_list = [] 9 10 imgs_path = 'SceneClassification/train/' 11 imgs_path_list = os.listdir(imgs_path) 12 13 len_ = len(imgs_path_list) 14 i = 0 15 for item in imgs_path_list: 16 img = cv2.imread(os.path.join(imgs_path, item)) 17 img = cv2.resize(img, (img_w, img_h)) 18 img = img[:, :, :, np.newaxis] 19 img_list.append(img) 20 i += 1 21 print(i, '/', len_) 22 23 imgs = np.concatenate(img_list, axis=3) 24 imgs = imgs.astype(np.float32) / 255. 25 26 for i in range(3): 27 pixels = imgs[:, :, i, :].ravel() # 拉成一行 28 means.append(np.mean(pixels)) 29 stdevs.append(np.std(pixels)) 30 31 # BGR --> RGB , CV读取的需要转换,PIL读取的不用转换 32 means.reverse() 33 stdevs.reverse() 34 35 print("normMean = {}".format(means)) 36 print("normStd = {}".format(stdevs))

学习阶段,有任何问题、错误,欢迎指出,有大佬能有更好的方法,欢迎提出。

数据增强运用:

1 #!/usr/bin/env python3 2 # -*- coding: utf-8 -*- 3 """ 4 5 Project Name: ComputerVision 6 7 File Name: cifar_util.py 8 9 """ 10 11 __author__ = 'Welkin' 12 __date__ = '2019/7/13 16:19' 13 14 import numpy as np 15 16 import torch 17 import torchvision 18 19 from torch.utils.data import DataLoader 20 from torchvision import transforms 21 22 __all__ = ['Cutout', 'data_augmentation', 'get_data_loader', 23 'mixup_data', 'mixup_criterion', 'calculate_acc'] 24 25 26 class Cutout(object): 27 def __init__(self, n_holes, length): 28 self.n_holes = n_holes 29 self.length = length 30 31 def __call__(self, img): 32 h = img.size(1) 33 w = img.size(2) 34 35 mask = np.ones((h, w), np.float32) 36 37 for n in range(self.n_holes): 38 y = np.random.randint(h) 39 x = np.random.randint(w) 40 41 y1 = np.clip(y - self.length // 2, 0, h) 42 y2 = np.clip(y + self.length // 2, 0, h) 43 x1 = np.clip(x - self.length // 2, 0, w) 44 x2 = np.clip(x + self.length // 2, 0, w) 45 46 mask[y1: y2, x1: x2] = 0. 47 48 mask = torch.from_numpy(mask) 49 mask = mask.expand_as(img) 50 img = img * mask 51 52 return img 53 54 55 def data_augmentation(config, train_mode = True): 56 aug = [] 57 if train_mode: 58 # random crop 59 if config.augmentation.random_crop: 60 aug.append(transforms.RandomCrop(config.input_size, padding = 4)) 61 # horizontal filp 62 if config.augmentation.random_horizontal_filp: 63 aug.append(transforms.RandomHorizontalFlip()) 64 65 aug.append(transforms.ToTensor()) 66 # normalize [- mean / std] 67 if config.augmentation.normalize: 68 if config.dataset == 'cifar10': 69 aug.append(transforms.Normalize( 70 (0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))) 71 elif config.dataset == 'cifar100': 72 aug.append(transforms.Normalize( 73 (0.5071, 0.4867, 0.4408), (0.2675, 0.2565, 0.2761))) 74 75 if train_mode and config.augmentation.cutout: 76 # cutout 77 aug.append(Cutout(n_holes = config.augmentation.holes, 78 length = config.augmentation.length)) 79 return aug 80 81 82 def get_data_loader(transform, config, train_mode): 83 assert config.dataset in ['cifar10', 'cifar100'] 84 if config.dataset == "cifar10": 85 dataset = torchvision.datasets.CIFAR10(root = config.data_path, train = train_mode, download = True, 86 transform = transform) 87 else: 88 dataset = torchvision.datasets.CIFAR100( 89 root = config.data_path, train = train_mode, download = True, transform = transform) 90 91 data_loader = DataLoader(dataset, batch_size = config.batch_size, 92 shuffle = train_mode, num_workers = config.workers) 93 94 return data_loader 95 96 97 def mixup_data(x, y, alpha, device): 98 '''Returns mixed inputs, pairs of targets, and lambda''' 99 if alpha > 0: 100 lam = np.random.beta(alpha, alpha) 101 else: 102 lam = 1 103 104 batch_size = x.size()[0] 105 index = torch.randperm(batch_size).to(device) 106 107 mixed_x = lam * x + (1 - lam) * x[index, :] 108 y_a, y_b = y, y[index] 109 return mixed_x, y_a, y_b, lam 110 111 112 def mixup_criterion(criterion, pred, y_a, y_b, lam): 113 return lam * criterion(pred, y_a) + (1 - lam) * criterion(pred, y_b) 114 115 116 def calculate_acc(outputs, targets, config, correct = 0, total = 0, train_mode = True, **kwargs): 117 if not config.dataset in ['cifar10', 'cifar100']: 118 raise ValueError("`dataset` in `config` should be 'cifar10' or 'cifar100'") 119 _, predicted = outputs.max(1) 120 total += targets.size(0) 121 if train_mode and config.mixup: 122 lam, targets_a, targets_b = kwargs['lam'], kwargs['targets_a'], kwargs['targets_b'] 123 correct += (lam * predicted.eq(targets_a).sum().item() 124 + (1 - lam) * predicted.eq(targets_b).sum().item()) 125 else: 126 correct += predicted.eq(targets).sum().item() 127 acc = correct / total 128 return acc, correct, total