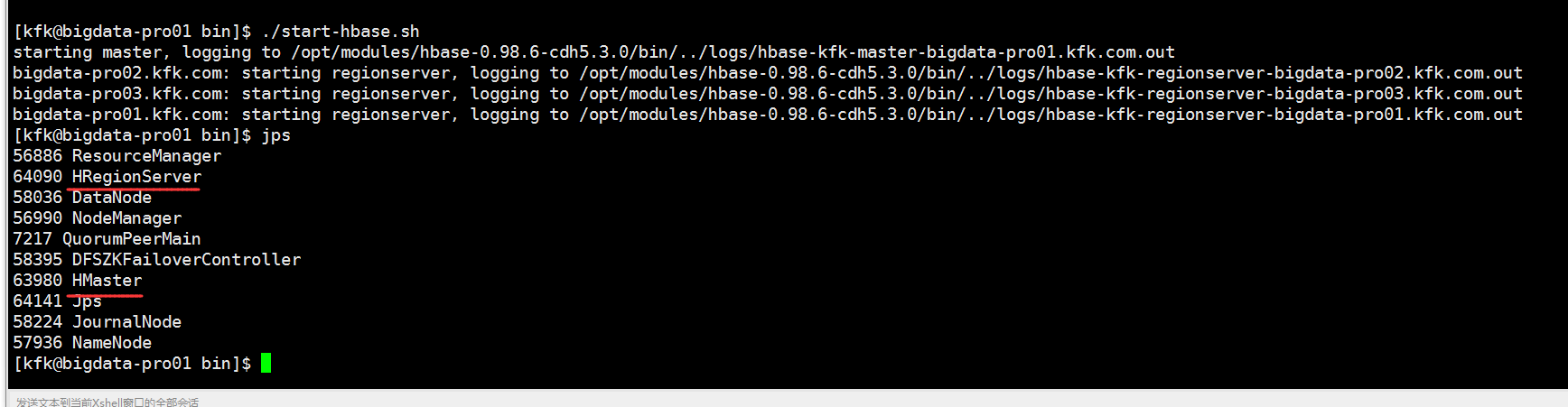

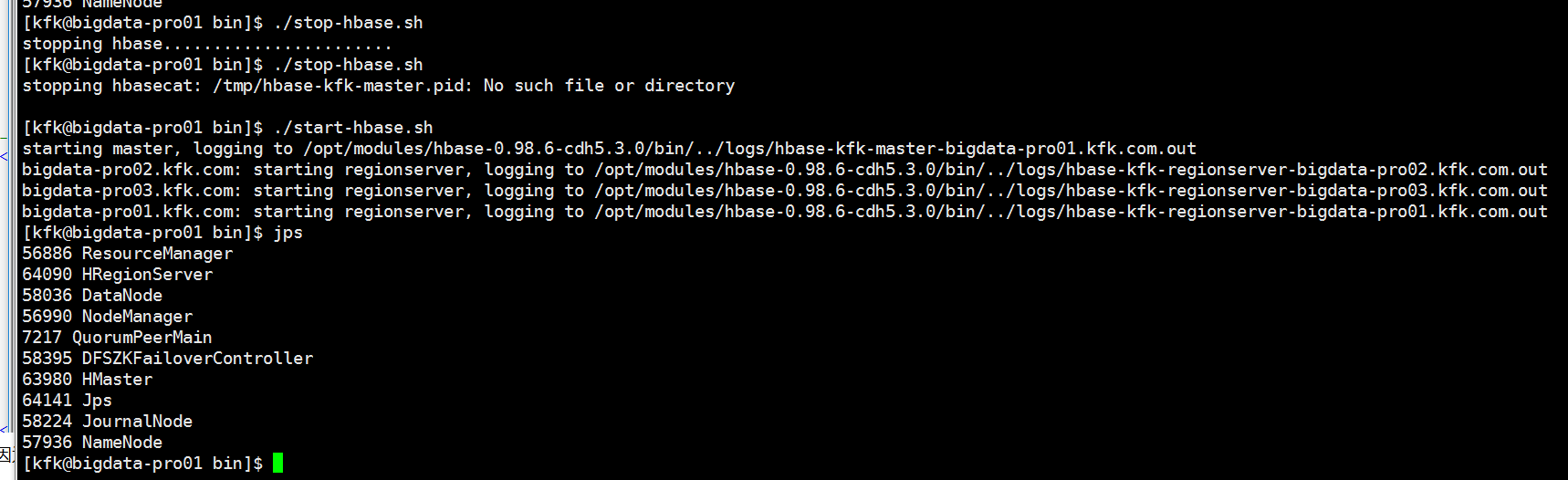

After starting HBase, the processes on the master node run normally, but the RegionServer process on the worker nodes dies on its own.

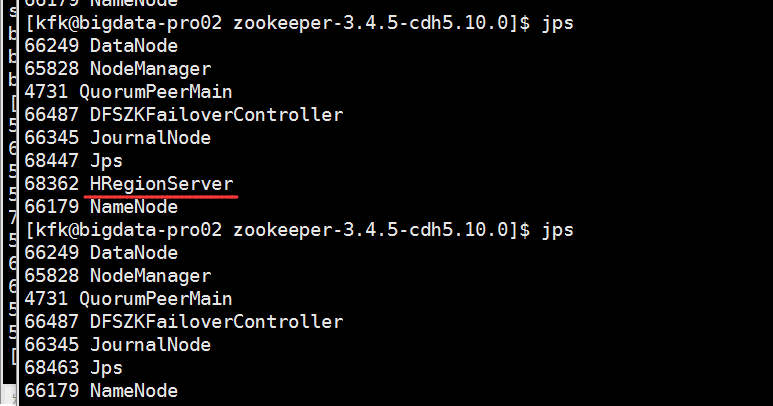

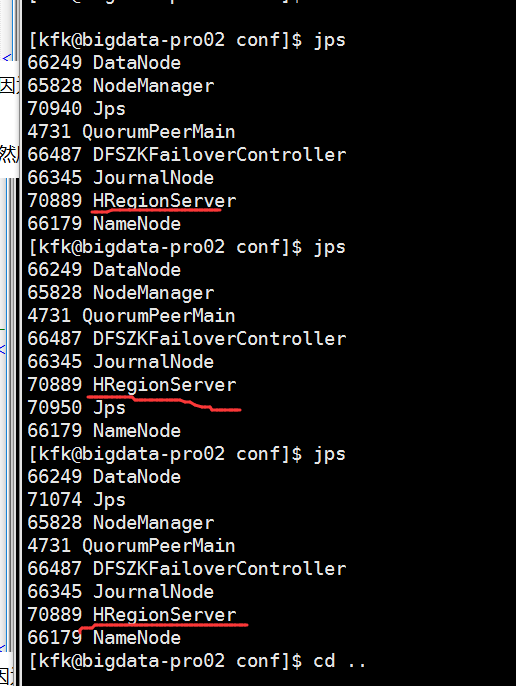

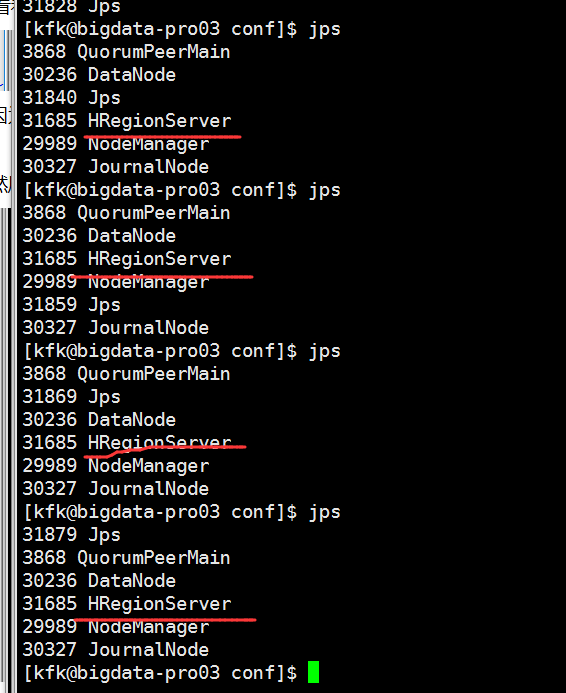

Now let's look at the worker nodes.

You can see that the RegionServer process has died.

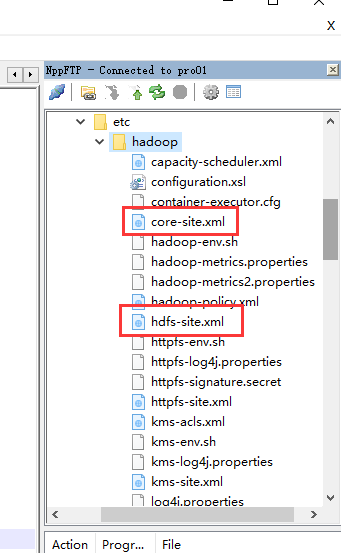

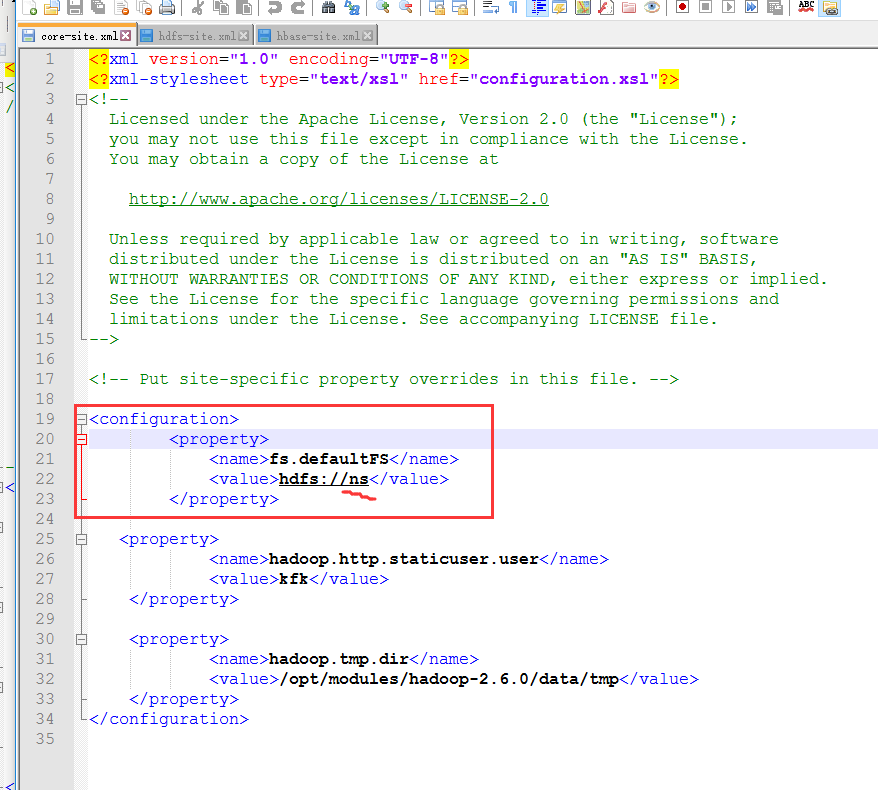

Here is how we fix it. First, copy the following two files from the Hadoop directory into HBase's conf directory:

core-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://ns</value>
      </property>
      <property>
        <name>hadoop.http.staticuser.user</name>
        <value>kfk</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/modules/hadoop-2.6.0/data/tmp</value>
      </property>
    </configuration>

hdfs-site.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
    <!--
      Licensed under the Apache License, Version 2.0 (the "License");
      you may not use this file except in compliance with the License.
      You may obtain a copy of the License at

        http://www.apache.org/licenses/LICENSE-2.0

      Unless required by applicable law or agreed to in writing, software
      distributed under the License is distributed on an "AS IS" BASIS,
      WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
      See the License for the specific language governing permissions and
      limitations under the License. See accompanying LICENSE file.
    -->

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>dfs.nameservices</name>
        <value>ns</value>
      </property>
      <property>
        <name>dfs.ha.namenodes.ns</name>
        <value>nn1,nn2</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.ns.nn1</name>
        <value>bigdata-pro01.kfk.com:9000</value>
      </property>
      <property>
        <name>dfs.namenode.rpc-address.ns.nn2</name>
        <value>bigdata-pro02.kfk.com:9000</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.ns.nn1</name>
        <value>bigdata-pro01.kfk.com:50070</value>
      </property>
      <property>
        <name>dfs.namenode.http-address.ns.nn2</name>
        <value>bigdata-pro02.kfk.com:50070</value>
      </property>
      <property>
        <name>dfs.namenode.shared.edits.dir</name>
        <value>qjournal://bigdata-pro01.kfk.com:8485;bigdata-pro02.kfk.com:8485;bigdata-pro03.kfk.com:8485/ns</value>
      </property>
      <property>
        <name>dfs.journalnode.edits.dir</name>
        <value>/opt/modules/hadoop-2.6.0/data/jn</value>
      </property>
      <property>
        <name>dfs.client.failover.proxy.provider.ns</name>
        <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
      </property>
      <property>
        <name>dfs.ha.fencing.methods</name>
        <value>sshfence</value>
      </property>
      <property>
        <name>dfs.ha.fencing.ssh.private-key-files</name>
        <value>/home/kfk/.ssh/id_rsa</value>
      </property>
      <property>
        <name>dfs.ha.automatic-failover.enabled.ns</name>
        <value>true</value>
      </property>
      <property>
        <name>ha.zookeeper.quorum</name>
        <value>bigdata-pro01.kfk.com:2181,bigdata-pro02.kfk.com:2181,bigdata-pro03.kfk.com:2181</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
      </property>
    </configuration>

Note that this has to be done on every node, not just the master!

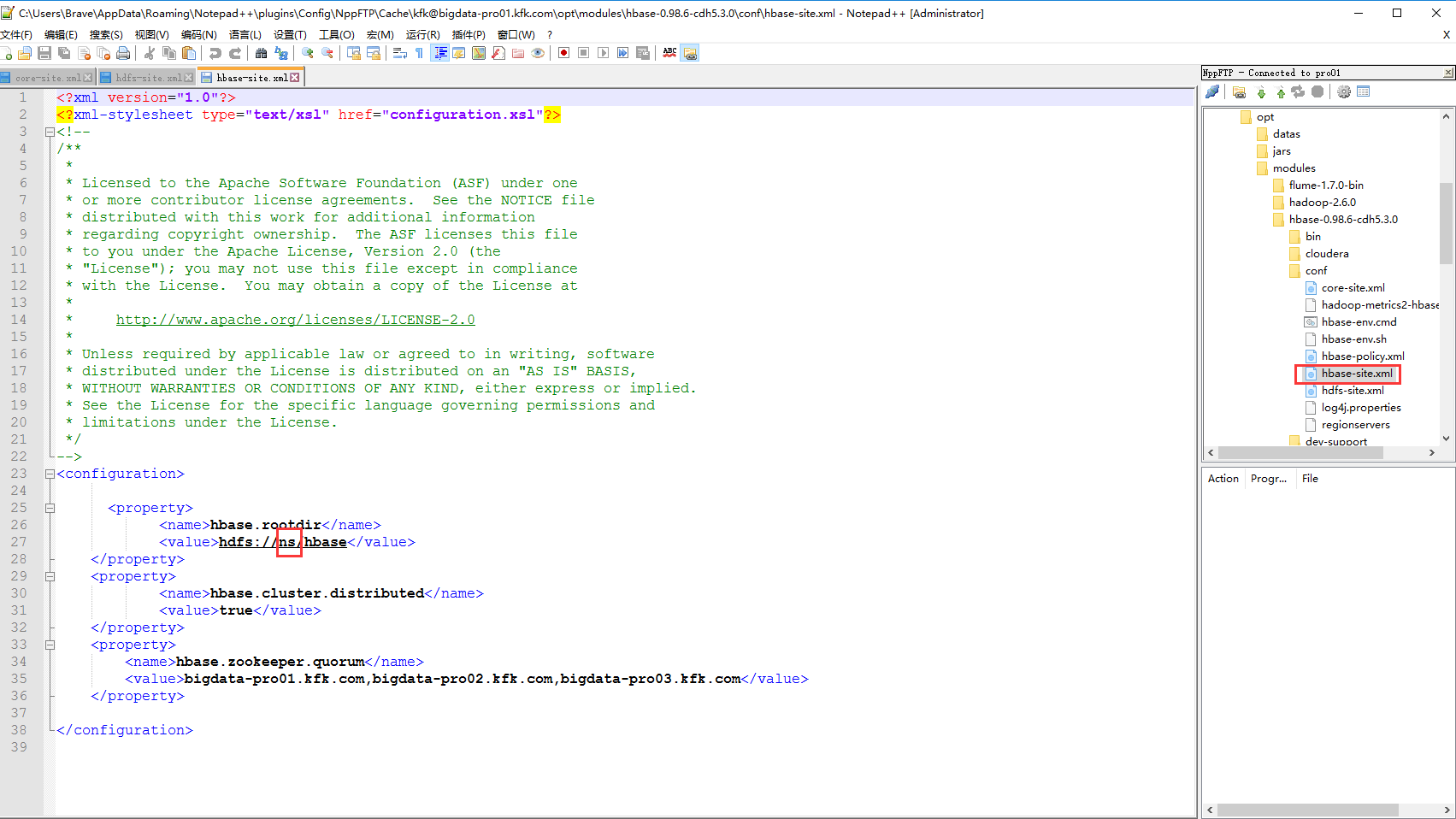

Next, check hbase-site.xml in HBase's conf directory: the HDFS address configured there must match the `fs.defaultFS` value in core-site.xml.

I had previously put the master node's hostname in hbase-site.xml, and I have now changed it. This change also has to be made on every node.
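As a rough sketch of what the corrected entry might look like: this assumes the setting in question is `hbase.rootdir` and that HBase stores its data under `/hbase`. Both are assumptions, since the original only shows the value in a screenshot. The point is that the value uses the nameservice `ns` from `fs.defaultFS` instead of a single NameNode hostname.

```xml
<!-- hbase-site.xml fragment (illustrative sketch, not the author's exact file) -->
<property>
  <!-- Assumed property name; the original post does not name it in the text -->
  <name>hbase.rootdir</name>
  <!-- "hdfs://ns" matches fs.defaultFS in core-site.xml; "/hbase" is a hypothetical path -->
  <value>hdfs://ns/hbase</value>
</property>
```

Because `ns` is a logical HA nameservice rather than a real hostname, HBase can only resolve it when the HDFS client settings from hdfs-site.xml are available to it, which is why the two Hadoop files are copied into HBase's conf directory.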

Then restart HBase.

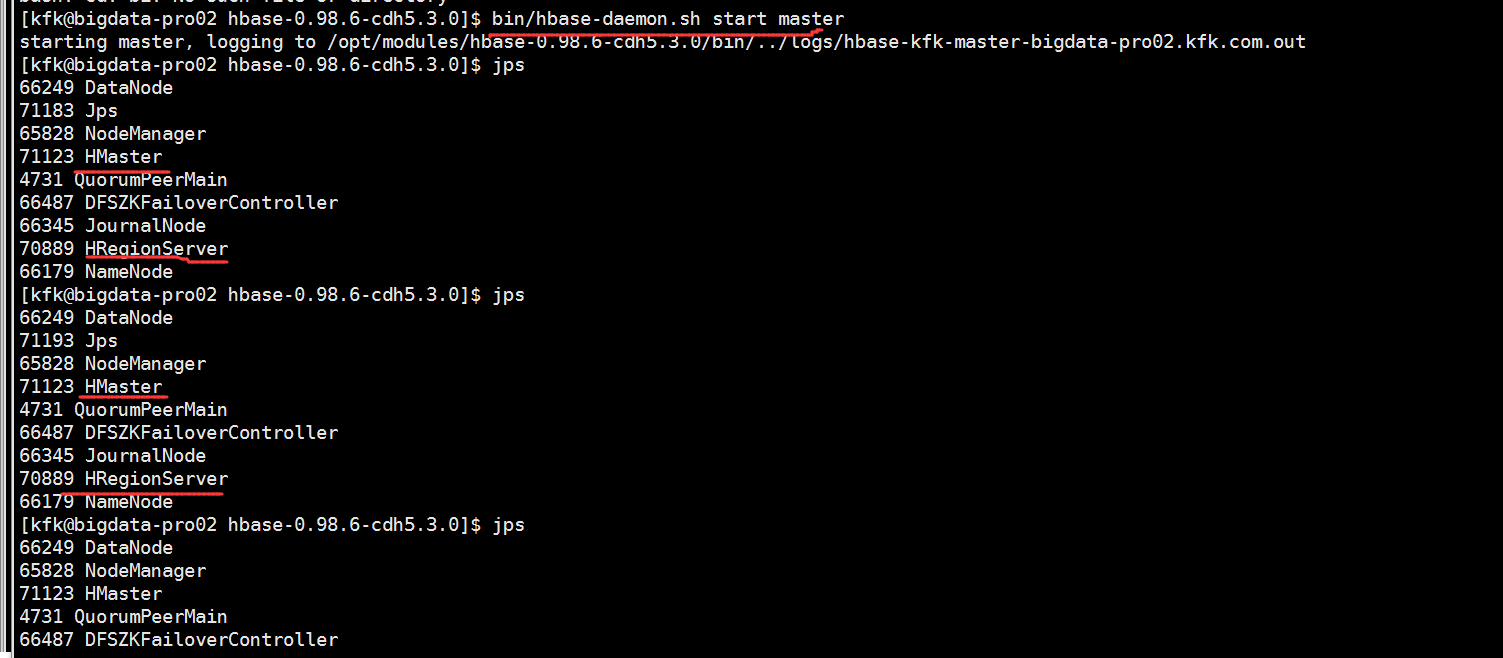

Check the processes on the worker nodes.

OK, no problems. Now start the HMaster process on node 2 as well.

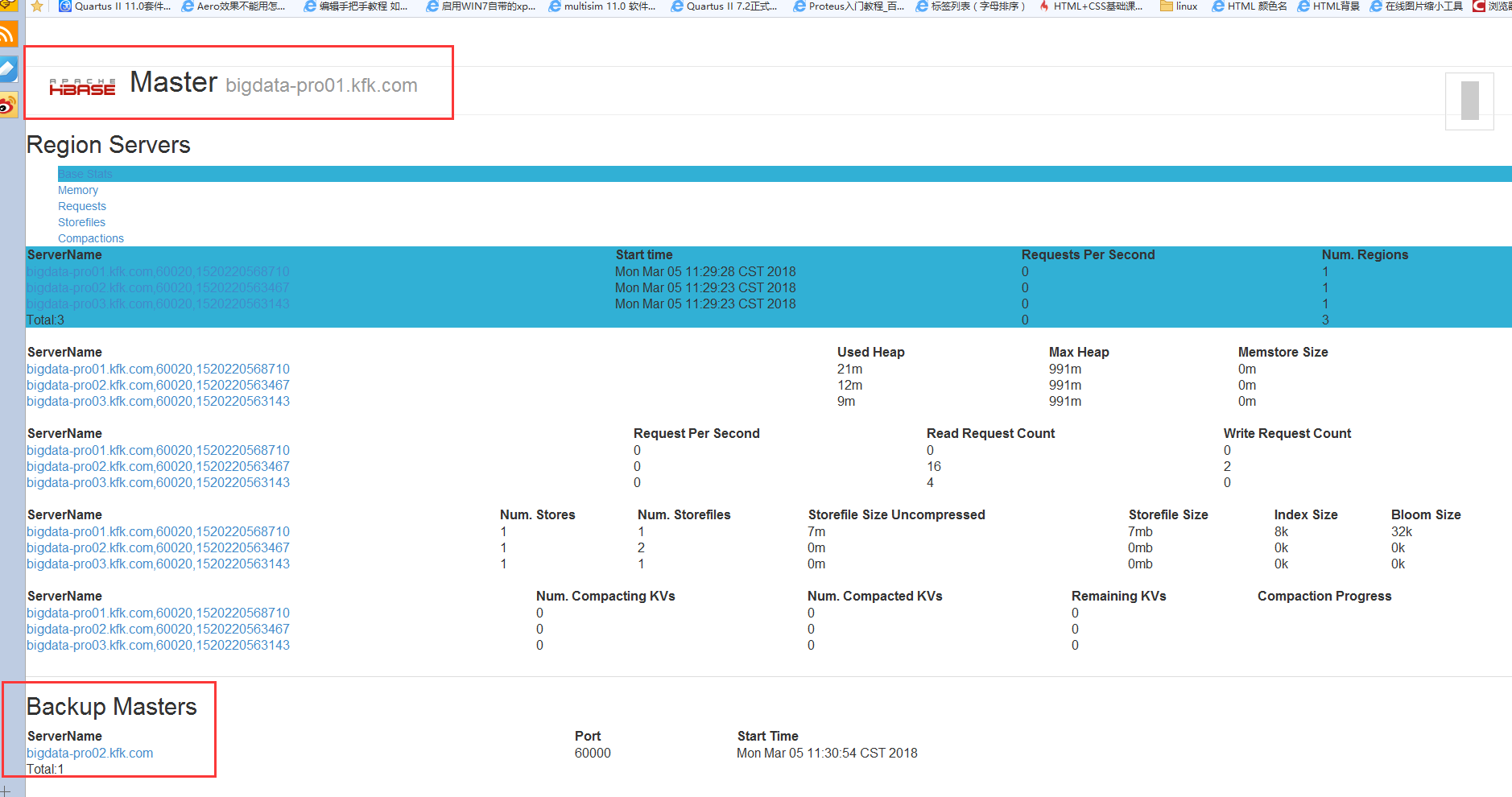

Open the test page in a browser.

Problem solved!