export JAVA_HOME=/home/hadoop/app/jdk1.8.0_144

export HADOOP_HOME=/home/hadoop/app/hadoop-2.4.1

export HIVE_HOME=/home/hadoop/app/apache-hive-0.14.0-bin

export ZK_HOME=/home/hadoop/app/zookeeper-3.4.8

export SCALA_HOME=/home/hadoop/app/scala-2.10.4

export SPARK_HOME=/home/hadoop/app/spark

export HBASE_HOME=/home/hadoop/hbase-0.96.2

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZK_HOME/bin:$SCALA_HOME/bin:$SPARK_HOME/bin:$HBASE_HOME/bin

------------------------------hbase-env.sh------------------------------------------

/home/hadoop/hbase-0.96.2/conf/hbase-env.sh

# The java implementation to use. Java 1.6 required.

export JAVA_HOME=/home/hadoop/app/jdk1.8.0_144

# Tell HBase whether it should manage its own instance of ZooKeeper or not.

export HBASE_MANAGES_ZK=true


---------------------------hbase-site.xml-----------------------------------------------------

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://alamps:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>alamps</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
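To double-check which name/value pairs a file like the one above defines, a small JDK-only reader can help. The following is a minimal sketch and not part of HBase: `SiteConfigReader` and `parse` are hypothetical names, and it assumes every `<property>` element contains exactly one `<name>` and one `<value>`.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper (not an HBase class): reads property name/value pairs. */
final class SiteConfigReader {

    /** Parses Hadoop-style configuration XML into an insertion-ordered map. */
    static Map<String, String> parse(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Map<String, String> props = new LinkedHashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element property = (Element) nodes.item(i);
            // Assumption: each property has one <name> and one <value> child.
            String name = property.getElementsByTagName("name").item(0).getTextContent().trim();
            String value = property.getElementsByTagName("value").item(0).getTextContent().trim();
            props.put(name, value);
        }
        return props;
    }

    public static void main(String[] args) throws Exception {
        String xml = "<configuration>"
                + "<property><name>hbase.rootdir</name><value>hdfs://alamps:9000/hbase</value></property>"
                + "<property><name>hbase.zookeeper.quorum</name><value>alamps</value></property>"
                + "</configuration>";
        // Print each setting as "name = value".
        parse(xml).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

The HBase client itself reads `hbase-site.xml` from the classpath through `HBaseConfiguration.create()`; this reader is only a way to inspect the file independently.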

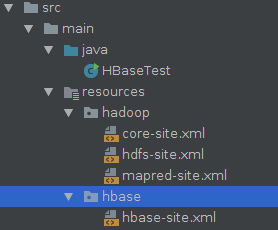

package hbaseApi;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.KeyValue;
import org.apache.hadoop.hbase.MasterNotRunningException;
import org.apache.hadoop.hbase.ZooKeeperConnectionException;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {

    private static final String TABLE_NAME = "demo_table";

    public static Configuration conf = null;
    public HTable table = null;
    public HBaseAdmin admin = null;

    static {
        // Loads hbase-default.xml and hbase-site.xml from the classpath.
        conf = HBaseConfiguration.create();
        // Backslashes must be escaped inside a Java string literal.
        System.setProperty("hadoop.home.dir", "D:\\alamps\\hadoop-2.4.1");
        System.out.println(conf.get("hbase.zookeeper.quorum"));
    }

    /**
     * Create a table with the given column families.
     */
    public static void creatTable(String tableName, String[] familys)
            throws Exception {
        HBaseAdmin admin = new HBaseAdmin(conf);
        if (admin.tableExists(tableName)) {
            System.out.println("table already exists!");
        } else {
            HTableDescriptor tableDesc = new HTableDescriptor(tableName);
            for (int i = 0; i < familys.length; i++) {
                tableDesc.addFamily(new HColumnDescriptor(familys[i]));
            }
            admin.createTable(tableDesc);
            System.out.println("create table " + tableName + " ok.");
        }
    }

    /**
     * Delete a table (it must be disabled first).
     */
    public static void deleteTable(String tableName) throws Exception {
        try {
            HBaseAdmin admin = new HBaseAdmin(conf);
            admin.disableTable(tableName);
            admin.deleteTable(tableName);
            System.out.println("delete table " + tableName + " ok.");
        } catch (MasterNotRunningException e) {
            e.printStackTrace();
        } catch (ZooKeeperConnectionException e) {
            e.printStackTrace();
        }
    }

    /**
     * Insert one cell (row, family:qualifier, value).
     */
    public static void addRecord(String tableName, String rowKey,
            String family, String qualifier, String value) throws Exception {
        try {
            HTable table = new HTable(conf, tableName);
            Put put = new Put(Bytes.toBytes(rowKey));
            put.add(Bytes.toBytes(family), Bytes.toBytes(qualifier),
                    Bytes.toBytes(value));
            table.put(put);
            System.out.println("insert recored " + rowKey + " to table "
                    + tableName + " ok.");
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Delete one row.
     */
    public static void delRecord(String tableName, String rowKey)
            throws IOException {
        HTable table = new HTable(conf, tableName);
        List<Delete> list = new ArrayList<Delete>();
        Delete del = new Delete(rowKey.getBytes());
        list.add(del);
        table.delete(list);
        System.out.println("del recored " + rowKey + " ok.");
    }

    /**
     * Look up one row and print all of its cells.
     */
    public static void getOneRecord(String tableName, String rowKey)
            throws IOException {
        HTable table = new HTable(conf, tableName);
        Get get = new Get(rowKey.getBytes());
        Result rs = table.get(get);
        for (KeyValue kv : rs.raw()) {
            System.out.print(new String(kv.getRow()) + " ");
            System.out.print(new String(kv.getFamily()) + ":");
            System.out.print(new String(kv.getQualifier()) + " ");
            System.out.print(kv.getTimestamp() + " ");
            System.out.println(new String(kv.getValue()));
        }
    }

    /**
     * Scan the whole table and print every cell.
     */
    public static void getAllRecord(String tableName) {
        try {
            HTable table = new HTable(conf, tableName);
            Scan s = new Scan();
            ResultScanner ss = table.getScanner(s);
            for (Result r : ss) {
                for (KeyValue kv : r.raw()) {
                    System.out.print(new String(kv.getRow()) + " ");
                    System.out.print(new String(kv.getFamily()) + ":");
                    System.out.print(new String(kv.getQualifier()) + " ");
                    System.out.print(kv.getTimestamp() + " ");
                    System.out.println(new String(kv.getValue()));
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public static void main(String[] args) {
        try {
            String tablename = "scores";
            String[] familys = { "grade", "course" };
            HBaseTest.creatTable(tablename, familys);

            // add record zkb
            HBaseTest.addRecord(tablename, "zkb", "grade", "", "5");
            HBaseTest.addRecord(tablename, "zkb", "course", "", "90");
            HBaseTest.addRecord(tablename, "zkb", "course", "math", "97");
            HBaseTest.addRecord(tablename, "zkb", "course", "art", "87");
            // add record baoniu
            HBaseTest.addRecord(tablename, "baoniu", "grade", "", "4");
            HBaseTest.addRecord(tablename, "baoniu", "course", "math", "89");

            System.out.println("===========get one record========");
            HBaseTest.getOneRecord(tablename, "zkb");

            System.out.println("===========show all record========");
            HBaseTest.getAllRecord(tablename);

            System.out.println("===========del one record========");
            HBaseTest.delRecord(tablename, "baoniu");
            HBaseTest.getAllRecord(tablename);

            System.out.println("===========show all record========");
            HBaseTest.getAllRecord(tablename);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

// Run command and console output:
//
///home/hadoop/app/jdk1.8.0_144/bin/java -javaagent:/home/hadoop/app/idea-IC-172.4343.14/lib/idea_rt.jar=32921:/home/hadoop/app/idea-IC-172.4343.14/bin -Dfile.encoding=UTF-8 -classpath 
/home/hadoop/app/jdk1.8.0_144/jre/lib/charsets.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/deploy.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/cldrdata.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/dnsns.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/jaccess.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/jfxrt.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/localedata.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/nashorn.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/sunec.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/sunjce_provider.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/sunpkcs11.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/zipfs.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/javaws.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jce.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jfr.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jfxswt.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jsse.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/management-agent.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/plugin.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/resources.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/rt.jar:/home/hadoop/IdeaProjects/HbaseAPI/target/classes:/home/hadoop/hbase-0.96.2/lib/xz-1.0.jar:/home/hadoop/hbase-0.96.2/lib/asm-3.1.jar:/home/hadoop/hbase-0.96.2/lib/guice-3.0.jar:/home/hadoop/hbase-0.96.2/lib/avro-1.7.4.jar:/home/hadoop/hbase-0.96.2/lib/junit-4.11.jar:/home/hadoop/hbase-0.96.2/lib/jsch-0.1.42.jar:/home/hadoop/hbase-0.96.2/lib/xmlenc-0.52.jar:/home/hadoop/hbase-0.96.2/lib/guava-12.0.1.jar:/home/hadoop/hbase-0.96.2/lib/jets3t-0.6.1.jar:/home/hadoop/hbase-0.96.2/lib/jetty-6.1.26.jar:/home/hadoop/hbase-0.96.2/lib/jsr305-1.3.9.jar:/home/hadoop/hbase-0.96.2/lib/log4j-1.2.17.jar:/home/hadoop/hbase-0.96.2/lib/paranamer-2.3.jar:/home/hadoop/hbase-0.96.2/lib/activation-1.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-el-1.0.jar:/home/hadoop/hbase-0.96.2/lib/commons-io-2.4.jar:/home/hadoop/hbase-0.96.2/lib/httpcore-4.1.3.jar:/home/hadoop/hbase-0.96.2/lib/javax.inject-1.jar:/home/hadoop/hbase-0.96.2
/lib/jaxb-api-2.2.2.jar:/home/hadoop/hbase-0.96.2/lib/jettison-1.3.1.jar:/home/hadoop/hbase-0.96.2/lib/jsp-2.1-6.1.14.jar:/home/hadoop/hbase-0.96.2/lib/aopalliance-1.0.jar:/home/hadoop/hbase-0.96.2/lib/commons-cli-1.2.jar:/home/hadoop/hbase-0.96.2/lib/commons-net-3.1.jar:/home/hadoop/hbase-0.96.2/lib/jersey-core-1.8.jar:/home/hadoop/hbase-0.96.2/lib/jersey-json-1.8.jar:/home/hadoop/hbase-0.96.2/lib/libthrift-0.9.0.jar:/home/hadoop/hbase-0.96.2/lib/slf4j-api-1.6.4.jar:/home/hadoop/hbase-0.96.2/lib/zookeeper-3.4.5.jar:/home/hadoop/hbase-0.96.2/lib/commons-lang-2.6.jar:/home/hadoop/hbase-0.96.2/lib/commons-math-2.1.jar:/home/hadoop/hbase-0.96.2/lib/htrace-core-2.04.jar:/home/hadoop/hbase-0.96.2/lib/httpclient-4.1.3.jar:/home/hadoop/hbase-0.96.2/lib/jackson-xc-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/jersey-guice-1.9.jar:/home/hadoop/hbase-0.96.2/lib/commons-codec-1.7.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-rcm-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/guice-servlet-3.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-auth-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-hdfs-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hamcrest-core-1.3.jar:/home/hadoop/hbase-0.96.2/lib/javax.servlet-3.1.jar:/home/hadoop/hbase-0.96.2/lib/jaxb-impl-2.2.3-1.jar:/home/hadoop/hbase-0.96.2/lib/jersey-client-1.9.jar:/home/hadoop/hbase-0.96.2/lib/jersey-server-1.8.jar:/home/hadoop/hbase-0.96.2/lib/jetty-util-6.1.26.jar:/home/hadoop/hbase-0.96.2/lib/netty-3.6.6.Final.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-http-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/jsp-api-2.1-6.1.14.jar:/home/hadoop/hbase-0.96.2/lib/metrics-core-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-client-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/jackson-jaxrs-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/jamon-runtime-2.3.1.jar:/home/hadoop/hbase-0.96.2/lib/jersey-grizzly2-1.9.jar:/home/hadoop/hbase-0.96.2/lib/protobuf-java-2.5.0.jar:/home/hadoop/hbase-0.96.2/lib/slf4j-log4j12-1.6.4.jar:/home/hadoo
p/hbase-0.96.2/lib/snappy-java-1.0.4.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-digester-1.8.jar:/home/hadoop/hbase-0.96.2/lib/jruby-complete-1.6.8.jar:/home/hadoop/hbase-0.96.2/lib/commons-daemon-1.0.13.jar:/home/hadoop/hbase-0.96.2/lib/commons-logging-1.1.1.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-api-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/jasper-runtime-5.5.23.jar:/home/hadoop/hbase-0.96.2/lib/commons-compress-1.4.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-httpclient-3.1.jar:/home/hadoop/hbase-0.96.2/lib/jackson-core-asl-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/jasper-compiler-5.5.23.jar:/home/hadoop/hbase-0.96.2/lib/jetty-sslengine-6.1.26.jar:/home/hadoop/hbase-0.96.2/lib/servlet-api-2.5-6.1.14.jar:/home/hadoop/hbase-0.96.2/lib/commons-beanutils-1.7.0.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-framework-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-hdfs-2.2.0-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-it-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/javax.servlet-api-3.0.1.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-annotations-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-client-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/jackson-mapper-asl-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/commons-collections-3.2.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-configuration-1.6.jar:/home/hadoop/hbase-0.96.2/lib/gmbal-api-only-3.0.0-b023.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-http-server-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/management-api-3.0.0-b012.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-http-servlet-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-shell-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-client-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-common-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-server-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-thrift-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/commons-beanutils-core-1.8.0.jar:/home/hadoop/hbase-0.9
6.2/lib/findbugs-annotations-1.3.9-1.jar:/home/hadoop/hbase-0.96.2/lib/hbase-examples-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-it-0.96.2-hadoop2-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-protocol-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/jersey-test-framework-core-1.9.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-server-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hbase-prefix-tree-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-app-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hbase-common-0.96.2-hadoop2-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-server-0.96.2-hadoop2-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-testing-util-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-core-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hbase-hadoop-compat-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/jersey-test-framework-grizzly2-1.9.jar:/home/hadoop/hbase-0.96.2/lib/hbase-hadoop2-compat-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-server-nodemanager-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-shuffle-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-jobclient-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar HBaseTest
//localhost
//2017-11-24 21:57:11,425 WARN [main] util.NativeCodeLoader (NativeCodeLoader.java:<clinit>(62)) - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
//2017-11-24 21:57:12,561 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT
//2017-11-24 21:57:12,561 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:host.name=alamps
//2017-11-24 21:57:12,561 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.version=1.8.0_144
//2017-11-24 21:57:12,561 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.vendor=Oracle Corporation
//2017-11-24 21:57:12,562 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.home=/home/hadoop/app/jdk1.8.0_144/jre
//2017-11-24 21:57:12,562 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.class.path=/home/hadoop/app/jdk1.8.0_144/jre/lib/charsets.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/deploy.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/cldrdata.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/dnsns.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/jaccess.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/jfxrt.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/localedata.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/nashorn.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/sunec.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/sunjce_provider.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/sunpkcs11.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/ext/zipfs.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/javaws.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jce.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jfr.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jfxswt.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/jsse.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/management-agent.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/plugin.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/resources.jar:/home/hadoop/app/jdk1.8.0_144/jre/lib/rt.jar:/home/hadoop/I
deaProjects/HbaseAPI/target/classes:/home/hadoop/hbase-0.96.2/lib/xz-1.0.jar:/home/hadoop/hbase-0.96.2/lib/asm-3.1.jar:/home/hadoop/hbase-0.96.2/lib/guice-3.0.jar:/home/hadoop/hbase-0.96.2/lib/avro-1.7.4.jar:/home/hadoop/hbase-0.96.2/lib/junit-4.11.jar:/home/hadoop/hbase-0.96.2/lib/jsch-0.1.42.jar:/home/hadoop/hbase-0.96.2/lib/xmlenc-0.52.jar:/home/hadoop/hbase-0.96.2/lib/guava-12.0.1.jar:/home/hadoop/hbase-0.96.2/lib/jets3t-0.6.1.jar:/home/hadoop/hbase-0.96.2/lib/jetty-6.1.26.jar:/home/hadoop/hbase-0.96.2/lib/jsr305-1.3.9.jar:/home/hadoop/hbase-0.96.2/lib/log4j-1.2.17.jar:/home/hadoop/hbase-0.96.2/lib/paranamer-2.3.jar:/home/hadoop/hbase-0.96.2/lib/activation-1.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-el-1.0.jar:/home/hadoop/hbase-0.96.2/lib/commons-io-2.4.jar:/home/hadoop/hbase-0.96.2/lib/httpcore-4.1.3.jar:/home/hadoop/hbase-0.96.2/lib/javax.inject-1.jar:/home/hadoop/hbase-0.96.2/lib/jaxb-api-2.2.2.jar:/home/hadoop/hbase-0.96.2/lib/jettison-1.3.1.jar:/home/hadoop/hbase-0.96.2/lib/jsp-2.1-6.1.14.jar:/home/hadoop/hbase-0.96.2/lib/aopalliance-1.0.jar:/home/hadoop/hbase-0.96.2/lib/commons-cli-1.2.jar:/home/hadoop/hbase-0.96.2/lib/commons-net-3.1.jar:/home/hadoop/hbase-0.96.2/lib/jersey-core-1.8.jar:/home/hadoop/hbase-0.96.2/lib/jersey-json-1.8.jar:/home/hadoop/hbase-0.96.2/lib/libthrift-0.9.0.jar:/home/hadoop/hbase-0.96.2/lib/slf4j-api-1.6.4.jar:/home/hadoop/hbase-0.96.2/lib/zookeeper-3.4.5.jar:/home/hadoop/hbase-0.96.2/lib/commons-lang-2.6.jar:/home/hadoop/hbase-0.96.2/lib/commons-math-2.1.jar:/home/hadoop/hbase-0.96.2/lib/htrace-core-2.04.jar:/home/hadoop/hbase-0.96.2/lib/httpclient-4.1.3.jar:/home/hadoop/hbase-0.96.2/lib/jackson-xc-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/jersey-guice-1.9.jar:/home/hadoop/hbase-0.96.2/lib/commons-codec-1.7.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-rcm-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/guice-servlet-3.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-auth-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-hdfs-2.2.0.jar:/home/hadoop/h
base-0.96.2/lib/hamcrest-core-1.3.jar:/home/hadoop/hbase-0.96.2/lib/javax.servlet-3.1.jar:/home/hadoop/hbase-0.96.2/lib/jaxb-impl-2.2.3-1.jar:/home/hadoop/hbase-0.96.2/lib/jersey-client-1.9.jar:/home/hadoop/hbase-0.96.2/lib/jersey-server-1.8.jar:/home/hadoop/hbase-0.96.2/lib/jetty-util-6.1.26.jar:/home/hadoop/hbase-0.96.2/lib/netty-3.6.6.Final.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-http-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/jsp-api-2.1-6.1.14.jar:/home/hadoop/hbase-0.96.2/lib/metrics-core-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-client-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/jackson-jaxrs-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/jamon-runtime-2.3.1.jar:/home/hadoop/hbase-0.96.2/lib/jersey-grizzly2-1.9.jar:/home/hadoop/hbase-0.96.2/lib/protobuf-java-2.5.0.jar:/home/hadoop/hbase-0.96.2/lib/slf4j-log4j12-1.6.4.jar:/home/hadoop/hbase-0.96.2/lib/snappy-java-1.0.4.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-digester-1.8.jar:/home/hadoop/hbase-0.96.2/lib/jruby-complete-1.6.8.jar:/home/hadoop/hbase-0.96.2/lib/commons-daemon-1.0.13.jar:/home/hadoop/hbase-0.96.2/lib/commons-logging-1.1.1.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-api-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/jasper-runtime-5.5.23.jar:/home/hadoop/hbase-0.96.2/lib/commons-compress-1.4.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-httpclient-3.1.jar:/home/hadoop/hbase-0.96.2/lib/jackson-core-asl-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/jasper-compiler-5.5.23.jar:/home/hadoop/hbase-0.96.2/lib/jetty-sslengine-6.1.26.jar:/home/hadoop/hbase-0.96.2/lib/servlet-api-2.5-6.1.14.jar:/home/hadoop/hbase-0.96.2/lib/commons-beanutils-1.7.0.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-framework-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-hdfs-2.2.0-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-it-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/javax.servlet-api-3.0.1.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-annotations-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hado
op-yarn-client-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/jackson-mapper-asl-1.8.8.jar:/home/hadoop/hbase-0.96.2/lib/commons-collections-3.2.1.jar:/home/hadoop/hbase-0.96.2/lib/commons-configuration-1.6.jar:/home/hadoop/hbase-0.96.2/lib/gmbal-api-only-3.0.0-b023.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-http-server-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/management-api-3.0.0-b012.jar:/home/hadoop/hbase-0.96.2/lib/grizzly-http-servlet-2.1.2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-shell-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-client-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-common-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-server-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-thrift-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/commons-beanutils-core-1.8.0.jar:/home/hadoop/hbase-0.96.2/lib/findbugs-annotations-1.3.9-1.jar:/home/hadoop/hbase-0.96.2/lib/hbase-examples-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hbase-it-0.96.2-hadoop2-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-protocol-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/jersey-test-framework-core-1.9.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-server-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hbase-prefix-tree-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-app-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hbase-common-0.96.2-hadoop2-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-server-0.96.2-hadoop2-tests.jar:/home/hadoop/hbase-0.96.2/lib/hbase-testing-util-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-core-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hbase-hadoop-compat-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/jersey-test-framework-grizzly2-1.9.jar:/home/hadoop/hbase-0.96.2/lib/hbase-hadoop2-compat-0.96.2-hadoop2.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-common-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-yarn-server-no
demanager-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-shuffle-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-jobclient-2.2.0.jar:/home/hadoop/hbase-0.96.2/lib/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/home/hadoop/app/idea-IC-172.4343.14/lib/idea_rt.jar
//2017-11-24 21:57:12,562 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.library.path=/home/hadoop/app/idea-IC-172.4343.14/bin::/usr/java/packages/lib/i386:/lib:/usr/lib
//2017-11-24 21:57:12,569 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.io.tmpdir=/tmp
//2017-11-24 21:57:12,569 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:java.compiler=<NA>
//2017-11-24 21:57:12,569 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.name=Linux
//2017-11-24 21:57:12,570 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.arch=i386
//2017-11-24 21:57:12,570 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:os.version=2.6.32-358.el6.i686
//2017-11-24 21:57:12,570 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.name=hadoop
//2017-11-24 21:57:12,570 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.home=/home/hadoop
//2017-11-24 21:57:12,570 INFO [main] zookeeper.ZooKeeper (Environment.java:logEnv(100)) - Client environment:user.dir=/home/hadoop/IdeaProjects/HbaseAPI
//2017-11-24 21:57:12,578 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=hconnection-0x8931cf, quorum=localhost:2181, baseZNode=/hbase
//2017-11-24 21:57:12,682 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(966)) - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)
//2017-11-24 21:57:12,691 INFO [main] zookeeper.RecoverableZooKeeper (RecoverableZooKeeper.java:<init>(120)) - Process identifier=hconnection-0x8931cf connecting to ZooKeeper ensemble=localhost:2181
//2017-11-24 21:57:12,728 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(849)) - Socket connection established to localhost/127.0.0.1:2181, initiating session
//2017-11-24 21:57:13,817 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1207)) - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x15ff0f658660008, negotiated timeout = 90000
//2017-11-24 21:57:15,423 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x8931cf, quorum=localhost:2181, baseZNode=/hbase
//2017-11-24 21:57:15,424 INFO [main] zookeeper.RecoverableZooKeeper (RecoverableZooKeeper.java:<init>(120)) - Process identifier=catalogtracker-on-hconnection-0x8931cf connecting to ZooKeeper ensemble=localhost:2181
//2017-11-24 21:57:15,429 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(966)) - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)
//2017-11-24 21:57:15,429 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(849)) - Socket connection established to localhost/127.0.0.1:2181, initiating session
//2017-11-24 21:57:15,433 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1207)) - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x15ff0f658660009, negotiated timeout = 90000
//2017-11-24 21:57:15,492 INFO [main] Configuration.deprecation (Configuration.java:warnOnceIfDeprecated(840)) - hadoop.native.lib is deprecated. Instead, use io.native.lib.available
//2017-11-24 21:57:16,450 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x15ff0f658660009 closed
//2017-11-24 21:57:16,451 INFO [main-EventThread] zookeeper.ClientCnxn (ClientCnxn.java:run(509)) - EventThread shut down
//2017-11-24 21:57:27,630 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x8931cf, quorum=localhost:2181, baseZNode=/hbase
//2017-11-24 21:57:27,803 INFO [main] zookeeper.RecoverableZooKeeper (RecoverableZooKeeper.java:<init>(120)) - Process identifier=catalogtracker-on-hconnection-0x8931cf connecting to ZooKeeper ensemble=localhost:2181
//2017-11-24 21:57:27,807 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(966)) - Opening socket connection to server localhost/0:0:0:0:0:0:0:1:2181. Will not attempt to authenticate using SASL (unknown error)
//2017-11-24 21:57:28,396 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(849)) - Socket connection established to localhost/0:0:0:0:0:0:0:1:2181, initiating session
//2017-11-24 21:57:28,553 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1207)) - Session establishment complete on server localhost/0:0:0:0:0:0:0:1:2181, sessionid = 0x15ff0f65866000a, negotiated timeout = 90000
//2017-11-24 21:57:31,999 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x15ff0f65866000a closed
//2017-11-24 21:57:32,001 INFO [main-EventThread] zookeeper.ClientCnxn (ClientCnxn.java:run(509)) - EventThread shut down
//create table Student ok.
//2017-11-24 21:57:32,246 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x8931cf, quorum=localhost:2181, baseZNode=/hbase
//2017-11-24 21:57:32,255 INFO [main] zookeeper.RecoverableZooKeeper (RecoverableZooKeeper.java:<init>(120)) - Process identifier=catalogtracker-on-hconnection-0x8931cf connecting to ZooKeeper ensemble=localhost:2181
//2017-11-24 21:57:32,255 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(966)) - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)
//2017-11-24 21:57:32,256 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(849)) - Socket connection established to localhost/127.0.0.1:2181, initiating session
//2017-11-24 21:57:32,265 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1207)) - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x15ff0f65866000b, negotiated timeout = 90000
//2017-11-24 21:57:32,320 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x15ff0f65866000b closed
//2017-11-24 21:57:32,321 INFO [main-EventThread] zookeeper.ClientCnxn (ClientCnxn.java:run(509)) - EventThread shut down
//2017-11-24 21:57:44,917 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:<init>(438)) - Initiating client connection, connectString=localhost:2181 sessionTimeout=90000 watcher=catalogtracker-on-hconnection-0x8931cf, quorum=localhost:2181, baseZNode=/hbase
//2017-11-24 21:57:44,918 INFO [main] zookeeper.RecoverableZooKeeper (RecoverableZooKeeper.java:<init>(120)) - Process identifier=catalogtracker-on-hconnection-0x8931cf connecting to ZooKeeper ensemble=localhost:2181
//2017-11-24 21:57:44,921 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:logStartConnect(966)) - Opening socket connection to server localhost/0:0:0:0:0:0:0:1:2181. Will not attempt to authenticate using SASL (unknown error)
//2017-11-24 21:57:44,923 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:primeConnection(849)) - Socket connection established to localhost/0:0:0:0:0:0:0:1:2181, initiating session
//2017-11-24 21:57:44,969 INFO [main-SendThread(localhost:2181)] zookeeper.ClientCnxn (ClientCnxn.java:onConnected(1207)) - Session establishment complete on server localhost/0:0:0:0:0:0:0:1:2181, sessionid = 0x15ff0f65866000c, negotiated timeout = 90000
//2017-11-24 21:57:44,981 INFO [main] zookeeper.ZooKeeper (ZooKeeper.java:close(684)) - Session: 0x15ff0f65866000c closed
//create table scores ok.
//2017-11-24 21:57:44,985 INFO [main-EventThread] zookeeper.ClientCnxn (ClientCnxn.java:run(509)) - EventThread shut down
//insert recored zkb to table scores ok.
//insert recored zkb to table scores ok.
//insert recored zkb to table scores ok.
//insert recored zkb to table scores ok.
//insert recored baoniu to table scores ok.
//insert recored baoniu to table scores ok.
//===========get one record========
//zkb course: 1511589465200 90
//zkb course:art 1511589465347 87
//zkb course:math 1511589465340 97
//zkb grade: 1511589465136 5
//===========show all record========
//baoniu course:math 1511589465359 89
//baoniu grade: 1511589465352 4
//zkb course: 1511589465200 90
//zkb course:art 1511589465347 87
//zkb course:math 1511589465340 97
//zkb grade: 1511589465136 5
//===========del one record========
//del recored baoniu ok.
//zkb course: 1511589465200 90
//zkb course:art 1511589465347 87
//zkb course:math 1511589465340 97
//zkb grade: 1511589465136 5
//===========show all record========
//zkb course: 1511589465200 90
//zkb course:art 1511589465347 87
//zkb course:math 1511589465340 97
//zkb grade: 1511589465136 5
//
//Process finished with exit code 0
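Each result line printed by getOneRecord and getAllRecord has the shape `row family:qualifier timestamp value`, where the timestamp is the cell version in milliseconds since the Unix epoch. The following is a minimal JDK-only sketch for reading such a line back; `OutputLine` is a hypothetical helper, not an HBase class, and it assumes the row key and column name contain no whitespace.

```java
import java.time.Instant;

/** Hypothetical parser for one "row family:qualifier timestamp value" line. */
final class OutputLine {
    final String row;
    final String family;
    final String qualifier;
    final long timestampMillis;
    final String value;

    private OutputLine(String row, String family, String qualifier,
                       long timestampMillis, String value) {
        this.row = row;
        this.family = family;
        this.qualifier = qualifier;
        this.timestampMillis = timestampMillis;
        this.value = value;
    }

    static OutputLine parse(String line) {
        // Limit 4 keeps any spaces inside the value in the last field.
        String[] parts = line.trim().split("\\s+", 4);
        if (parts.length != 4) {
            throw new IllegalArgumentException("unexpected line: " + line);
        }
        // Limit 2 keeps an empty qualifier, as in "course:".
        String[] column = parts[1].split(":", 2);
        String qualifier = column.length > 1 ? column[1] : "";
        return new OutputLine(parts[0], column[0], qualifier,
                Long.parseLong(parts[2]), parts[3]);
    }

    /** Interprets the cell timestamp as epoch milliseconds (UTC). */
    Instant writtenAt() {
        return Instant.ofEpochMilli(timestampMillis);
    }

    public static void main(String[] args) {
        OutputLine cell = parse("zkb course:math 1511589465340 97");
        System.out.println(cell.row + " / " + cell.family + ":" + cell.qualifier
                + " written at " + cell.writtenAt() + " with value " + cell.value);
    }
}
```

`Instant` reports UTC, so the converted time will differ from the local wall-clock time in the log lines by the machine's time zone offset.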