Original work; please credit the source when reposting. "Linux Kernel Analysis" MOOC course: http://mooc.study.163.com/course/USTC-1000029000

If anything here is poorly written or wrong, please leave a comment.

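- Choose your own title; the content should center on how the system call system_call is handled.
- The blog post must carefully analyze how the assembly code behind system_call works, paying particular attention to the process-scheduling opportunity just before the system call returns via iret.
- The summary should explain your own understanding of the "system call handling process" and then generalize it to interrupt handling in general.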

Lab report:

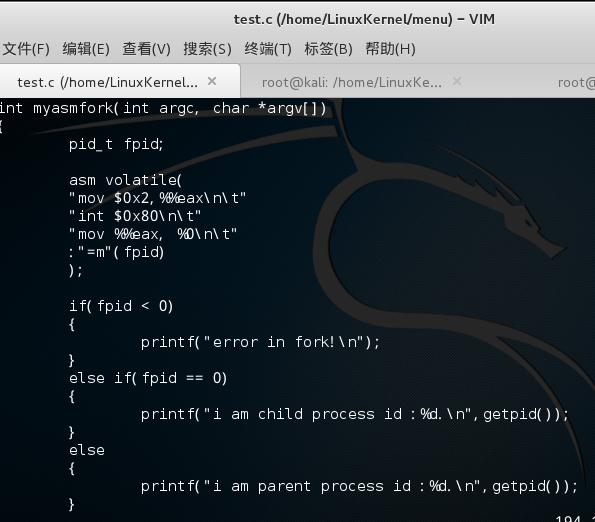

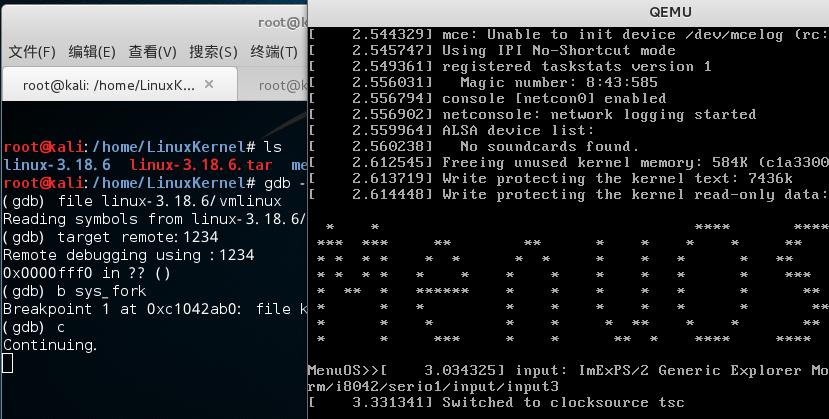

1. Add the myfork() and myasmfork() functions to menu/test.c
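The lab's actual versions of these two functions appear only in the screenshots. What follows is a hypothetical sketch of what they might look like. It assumes myfork() goes through glibc's fork() wrapper, while myasmfork() enters the kernel directly. When built for i386, it does this with int $0x80 and __NR_fork = 2, so the trap lands in system_call. On other hosts it falls back to syscall(SYS_fork). The helper reap_child is an illustrative name, not taken from the lab.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical shared tail: the child exits at once, the parent reaps it.
 * Returns 0 if the child ran and exited cleanly, -1 otherwise. */
static int reap_child(long pid)
{
    int status;

    if (pid < 0)
        return -1;                 /* the fork itself failed */
    if (pid == 0)
        _exit(0);                  /* child: fork returned 0 */
    if (waitpid((pid_t)pid, &status, 0) < 0)
        return -1;
    return (WIFEXITED(status) && WEXITSTATUS(status) == 0) ? 0 : -1;
}

/* C version: let glibc's fork() wrapper issue the system call. */
int myfork(void)
{
    return reap_child(fork());
}

/* "Assembly" version: enter the kernel without the libc wrapper. */
int myasmfork(void)
{
    long pid;
#if defined(__i386__)
    /* i386: __NR_fork is 2; int $0x80 lands in system_call and the
     * result (child pid, 0, or -errno) comes back in %eax. */
    __asm__ volatile ("int $0x80" : "=a"(pid) : "a"(2L) : "memory");
#elif defined(SYS_fork)
    pid = syscall(SYS_fork);       /* e.g. x86-64: raw fork syscall */
#else
    pid = fork();                  /* no fork syscall (e.g. arm64) */
#endif
    return reap_child(pid);
}
```

In the i386 path, %eax carries the system call number in and the return value out. This is the same register system_call saves as orig_eax and later overwrites through PT_EAX.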

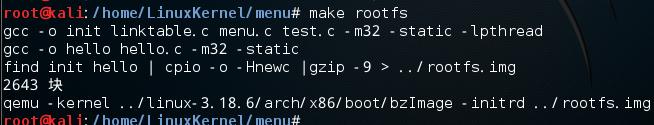

2. Rebuild with make rootfs

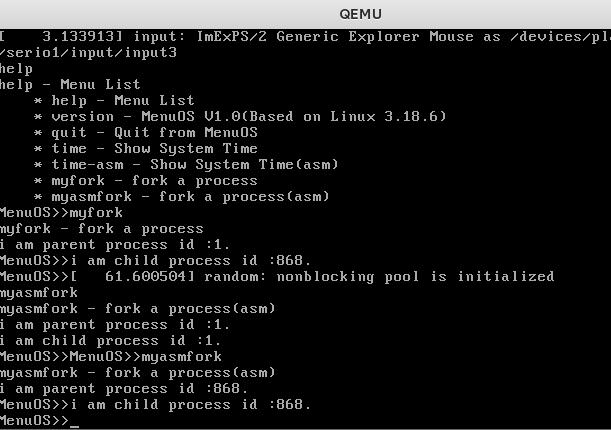

3. Run myfork and myasmfork

4. Set a breakpoint at sys_fork

5. Inspect the context

6. Single-step through the code

Set a breakpoint at sys_fork:

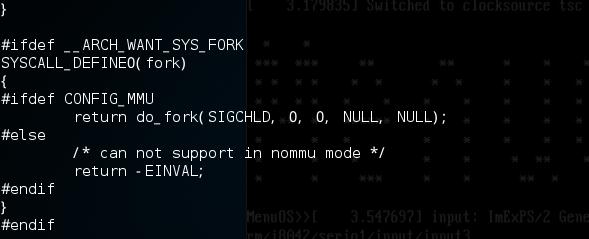

Execution stops at kernel/fork.c, line 1704.

n (next): this shows the execution flow of sys_fork; all it does is call do_fork.
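This observation can be cross-checked from user space. In 3.x kernels, sys_fork boils down to do_fork(SIGCHLD, 0, 0, NULL, NULL): a clone with no resource-sharing flags and SIGCHLD as the exit signal. The sketch below is an illustration, not the lab's code. It issues the raw clone system call with only SIGCHLD in its flags and checks that the call behaves like fork(). The function name clone_as_fork is hypothetical.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <signal.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: fork() expressed as clone(SIGCHLD), mirroring
 * sys_fork -> do_fork(SIGCHLD, 0, 0, NULL, NULL).
 * Returns the child's exit code as seen by the parent, or -1 on error. */
int clone_as_fork(int child_exit_code)
{
    /* flags = SIGCHLD only: nothing is shared, so the child gets copies
     * of everything; newsp = 0 keeps the (copy-on-write) current stack.
     * All pointer arguments are 0, so their per-arch order is irrelevant. */
    long pid = syscall(SYS_clone, (long)SIGCHLD, 0L, 0L, 0L, 0L);
    if (pid < 0)
        return -1;
    if (pid == 0)
        _exit(child_exit_code);            /* child side */

    int status;
    if (waitpid((pid_t)pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);            /* parent side */
}
```

The parent being able to reap the child with waitpid() is exactly the effect of passing SIGCHLD as the exit signal.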

Next, we step into do_fork.

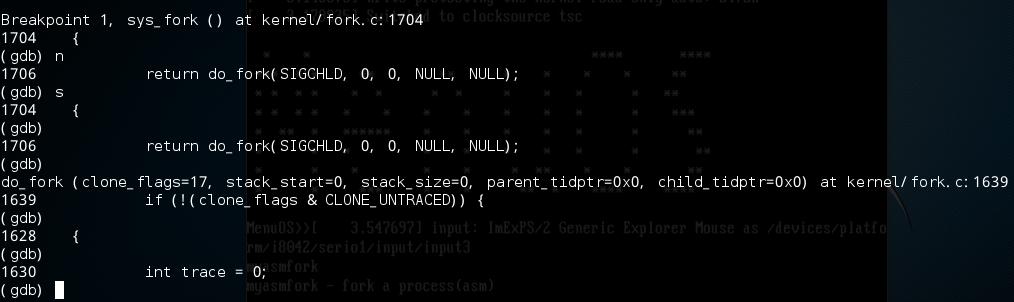

s (step): step into do_fork.

Single-stepping shows that execution mainly passes through the following functions:

- dup_task_struct
- alloc_task_struct_node
- kmem_cache_alloc_node
- alloc_kmem_pages_node
- alloc_thread_info_node
- clear_tsk_need_resched
- clear_tsk_thread_flag
- set_task_stack_end_magic
- rt_mutex_init_task
- copy_process
- rt_mutex_init_task
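Most helpers in this list are pieces of dup_task_struct(), which copy_process() uses to duplicate the parent's task_struct and kernel stack. The sketch below is a drastically simplified user-space model, not kernel code. struct task, struct thread_info, dup_task and free_task are hypothetical stand-ins. The constants follow the kernel: STACK_END_MAGIC (0x57AC6E9D), the x86 bit number of TIF_NEED_RESCHED (3), and the 8 KB i386 kernel stack. The model shows four effects seen in the trace:

- a new task plus stack is allocated;
- the parent's state is copied;
- the child's need-resched flag is cleared;
- a canary is written at the end of the new stack.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical, drastically reduced stand-ins for task_struct/thread_info. */
#define STACK_END_MAGIC  0x57AC6E9DUL  /* canary value the kernel uses */
#define TIF_NEED_RESCHED 3             /* bit number on x86 */
#define THREAD_SIZE      8192          /* kernel stack size on i386 */

struct task;

struct thread_info {                   /* sits at the bottom of the stack */
    struct task *task;
    unsigned long flags;
};

struct task {
    char comm[16];                     /* copied verbatim from the parent */
    void *stack;                       /* THREAD_SIZE bytes, thread_info first */
};

/* Model of dup_task_struct(); comments name the kernel helper each step
 * corresponds to. */
struct task *dup_task(const struct task *orig)
{
    struct task *tsk = malloc(sizeof *tsk);     /* alloc_task_struct_node */
    void *stack = malloc(THREAD_SIZE);          /* alloc_thread_info_node */
    if (!tsk || !stack) {
        free(tsk);
        free(stack);
        return NULL;
    }

    *tsk = *orig;                               /* arch_dup_task_struct */
    tsk->stack = stack;

    /* setup_thread_stack: copy the parent's thread_info, then point the
     * copy back at the new task. */
    struct thread_info *ti = stack;
    *ti = *(const struct thread_info *)orig->stack;
    ti->task = tsk;

    ti->flags &= ~(1UL << TIF_NEED_RESCHED);    /* clear_tsk_need_resched */

    /* set_task_stack_end_magic: canary just above thread_info, the lowest
     * word the downward-growing stack may reach. */
    *(unsigned long *)(ti + 1) = STACK_END_MAGIC;
    return tsk;
}

void free_task(struct task *tsk)
{
    free(tsk->stack);
    free(tsk);
}
```

If a runaway kernel stack overwrites the canary, the kernel can detect the corruption by checking that word.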

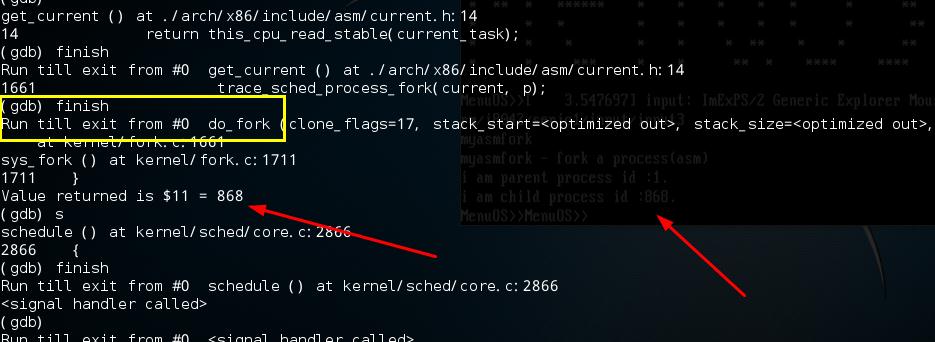

Finally, a new process is created, with pid = 868.
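The parent gets 868 back while the new process gets 0. Both values live in the saved register frame described in the "Stack layout" comment at the top of entry_32.S, which spans 0(%esp) = %ebx through 40(%esp) = %oldss. The C model below reproduces that layout with 32-bit fields and checks the offsets. fork_return_values is a hypothetical helper that mimics two real steps:

- copy_thread copies the parent's pt_regs into the child and sets the child's ax to 0;
- the parent's return value is written into its own frame by movl %eax,PT_EAX(%esp) at syscall_after_call.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Model of the i386 frame built by the CPU plus SAVE_ALL. uint32_t fields
 * keep the 32-bit offsets even when compiled on a 64-bit host. */
struct pt_regs32 {
    uint32_t ebx, ecx, edx, esi, edi, ebp, eax;   /* SAVE_ALL */
    uint32_t ds, es, fs, gs;                      /* SAVE_ALL */
    uint32_t orig_eax;        /* syscall number pushed by system_call */
    uint32_t eip, cs, eflags; /* pushed by the CPU on int $0x80 */
    uint32_t oldesp, oldss;   /* pushed only on a user->kernel switch */
};

/* Offsets taken from the comment at the top of entry_32.S. */
_Static_assert(offsetof(struct pt_regs32, eax) == 0x18, "PT_EAX");
_Static_assert(offsetof(struct pt_regs32, orig_eax) == 0x2C, "PT_ORIG_EAX");
_Static_assert(offsetof(struct pt_regs32, oldss) == 0x40, "PT_OLDSS");

/* Hypothetical helper: how fork produces two different return values. */
void fork_return_values(struct pt_regs32 *parent, struct pt_regs32 *child,
                        uint32_t child_pid)
{
    memcpy(child, parent, sizeof *child);  /* *childregs = *current_pt_regs() */
    child->eax = 0;                        /* childregs->ax = 0 */
    parent->eax = child_pid;               /* movl %eax,PT_EAX(%esp) */
}
```

Both frames are later popped by RESTORE_REGS before iret, so each process resumes at the same eip with a different %eax.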

7. Analyze entry_32.S
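The part of entry_32.S this assignment focuses on is the path system_call → sys_call_table → syscall_exit → work_pending → restore_all → iret. As a reading aid, here is a hypothetical user-space C model of that control flow; it is not kernel code. It keeps only two work flags, TIF_NEED_RESCHED and TIF_SIGPENDING, with their x86 bit numbers; the real _TIF_WORK_MASK covers more. It replaces schedule() and do_notify_resume() with counters.

The model shows the scheduling opportunity before iret. After the handler returns, TI_flags is sampled again, and while need_resched is set the code calls schedule() and loops. Control returns to user mode only once no work is left.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical model: the bit numbers match x86's TIF_* values, but all
 * names, types and the state struct are illustrative. */
#define TIF_SIGPENDING    (1u << 2)
#define TIF_NEED_RESCHED  (1u << 3)
#define WORK_MASK         (TIF_SIGPENDING | TIF_NEED_RESCHED)

typedef long (*syscall_fn)(void);

struct task_state {
    unsigned flags;        /* stands in for TI_flags(%ebp) */
    long saved_eax;        /* stands in for PT_EAX(%esp) */
    int schedule_calls;    /* times the model "called schedule()" */
    int signals_handled;   /* times it "called do_notify_resume()" */
};

long system_call_model(struct task_state *st, const syscall_fn *table,
                       size_t nr_syscalls, size_t nr)
{
    /* cmpl $(NR_syscalls),%eax / jae syscall_badsys is an unsigned
     * compare, so a "negative" number is rejected as well. */
    long ret = (nr >= nr_syscalls) ? -ENOSYS
                                   : table[nr]();  /* call *sys_call_table(,%eax,4) */
    st->saved_eax = ret;                           /* movl %eax,PT_EAX(%esp) */

    /* syscall_exit -> work_pending: re-check the flags after each piece
     * of work (with interrupts off in the real code); only when none is
     * left does control reach restore_all and iret. */
    while (st->flags & WORK_MASK) {
        if (st->flags & TIF_NEED_RESCHED) {        /* work_resched */
            st->flags &= ~TIF_NEED_RESCHED;        /* schedule() clears it */
            st->schedule_calls++;
        } else {                                   /* work_notifysig */
            st->flags &= ~TIF_SIGPENDING;          /* do_notify_resume() */
            st->signals_handled++;
        }
    }
    return st->saved_eax;                          /* RESTORE_REGS; iret */
}

/* A two-entry stand-in for sys_call_table. */
static long demo_sys_zero(void) { return 0; }
static long demo_sys_pid(void)  { return 868; }
static const syscall_fn demo_table[] = { demo_sys_zero, demo_sys_pid };
#define DEMO_NR_SYSCALLS (sizeof demo_table / sizeof demo_table[0])
```

In the real code, the signal branch (work_notifysig) jumps back to resume_userspace, which reads the flags again. The loop shape therefore holds for both kinds of work.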

The contents of entry_32.S are as follows:

```asm
/*
 *
 *  Copyright (C) 1991, 1992  Linus Torvalds
 */

/*
 * entry.S contains the system-call and fault low-level handling routines.
 * This also contains the timer-interrupt handler, as well as all interrupts
 * and faults that can result in a task-switch.
 *
 * NOTE: This code handles signal-recognition, which happens every time
 * after a timer-interrupt and after each system call.
 *
 * I changed all the .align's to 4 (16 byte alignment), as that's faster
 * on a 486.
 *
 * Stack layout in 'syscall_exit':
 *	ptrace needs to have all regs on the stack.
 *	if the order here is changed, it needs to be
 *	updated in fork.c:copy_process, signal.c:do_signal,
 *	ptrace.c and ptrace.h
 *
 *	 0(%esp) - %ebx
 *	 4(%esp) - %ecx
 *	 8(%esp) - %edx
 *	 C(%esp) - %esi
 *	10(%esp) - %edi
 *	14(%esp) - %ebp
 *	18(%esp) - %eax
 *	1C(%esp) - %ds
 *	20(%esp) - %es
 *	24(%esp) - %fs
 *	28(%esp) - %gs		saved iff !CONFIG_X86_32_LAZY_GS
 *	2C(%esp) - orig_eax
 *	30(%esp) - %eip
 *	34(%esp) - %cs
 *	38(%esp) - %eflags
 *	3C(%esp) - %oldesp
 *	40(%esp) - %oldss
 *
 * "current" is in register %ebx during any slow entries.
 */

#include <linux/linkage.h>
#include <linux/err.h>
#include <asm/thread_info.h>
#include <asm/irqflags.h>
#include <asm/errno.h>
#include <asm/segment.h>
#include <asm/smp.h>
#include <asm/page_types.h>
#include <asm/percpu.h>
#include <asm/dwarf2.h>
#include <asm/processor-flags.h>
#include <asm/ftrace.h>
#include <asm/irq_vectors.h>
#include <asm/cpufeature.h>
#include <asm/alternative-asm.h>
#include <asm/asm.h>
#include <asm/smap.h>

/* Avoid __ASSEMBLER__'ifying <linux/audit.h> just for this.  */
#include <linux/elf-em.h>
#define AUDIT_ARCH_I386		(EM_386|__AUDIT_ARCH_LE)
#define __AUDIT_ARCH_LE		0x40000000

#ifndef CONFIG_AUDITSYSCALL
#define sysenter_audit	syscall_trace_entry
#define sysexit_audit	syscall_exit_work
#endif

	.section .entry.text, "ax"

/*
 * We use macros for low-level operations which need to be overridden
 * for paravirtualization.  The following will never clobber any registers:
 *   INTERRUPT_RETURN (aka. "iret")
 *   GET_CR0_INTO_EAX (aka. "movl %cr0, %eax")
 *   ENABLE_INTERRUPTS_SYSEXIT (aka "sti; sysexit").
 *
 * For DISABLE_INTERRUPTS/ENABLE_INTERRUPTS (aka "cli"/"sti"), you must
 * specify what registers can be overwritten (CLBR_NONE, CLBR_EAX/EDX/ECX/ANY).
 * Allowing a register to be clobbered can shrink the paravirt replacement
 * enough to patch inline, increasing performance.
 */

#ifdef CONFIG_PREEMPT
#define preempt_stop(clobbers)	DISABLE_INTERRUPTS(clobbers); TRACE_IRQS_OFF
#else
#define preempt_stop(clobbers)
#define resume_kernel		restore_all
#endif

.macro TRACE_IRQS_IRET
#ifdef CONFIG_TRACE_IRQFLAGS
	testl $X86_EFLAGS_IF,PT_EFLAGS(%esp)     # interrupts off?
	jz 1f
	TRACE_IRQS_ON
1:
#endif
.endm

/*
 * User gs save/restore
 *
 * %gs is used for userland TLS and kernel only uses it for stack
 * canary which is required to be at %gs:20 by gcc.  Read the comment
 * at the top of stackprotector.h for more info.
 *
 * Local labels 98 and 99 are used.
 */
#ifdef CONFIG_X86_32_LAZY_GS

 /* unfortunately push/pop can't be no-op */
.macro PUSH_GS
	pushl_cfi $0
.endm
.macro POP_GS pop=0
	addl $(4 + \pop), %esp
	CFI_ADJUST_CFA_OFFSET -(4 + \pop)
.endm
.macro POP_GS_EX
.endm

 /* all the rest are no-op */
.macro PTGS_TO_GS
.endm
.macro PTGS_TO_GS_EX
.endm
.macro GS_TO_REG reg
.endm
.macro REG_TO_PTGS reg
.endm
.macro SET_KERNEL_GS reg
.endm

#else	/* CONFIG_X86_32_LAZY_GS */

.macro PUSH_GS
	pushl_cfi %gs
	/*CFI_REL_OFFSET gs, 0*/
.endm

.macro POP_GS pop=0
98:	popl_cfi %gs
	/*CFI_RESTORE gs*/
  .if \pop <> 0
	add $\pop, %esp
	CFI_ADJUST_CFA_OFFSET -\pop
  .endif
.endm
.macro POP_GS_EX
.pushsection .fixup, "ax"
99:	movl $0, (%esp)
	jmp 98b
.popsection
	_ASM_EXTABLE(98b,99b)
.endm

.macro PTGS_TO_GS
98:	mov PT_GS(%esp), %gs
.endm
.macro PTGS_TO_GS_EX
.pushsection .fixup, "ax"
99:	movl $0, PT_GS(%esp)
	jmp 98b
.popsection
	_ASM_EXTABLE(98b,99b)
.endm

.macro GS_TO_REG reg
	movl %gs, \reg
	/*CFI_REGISTER gs, \reg*/
.endm
.macro REG_TO_PTGS reg
	movl \reg, PT_GS(%esp)
	/*CFI_REL_OFFSET gs, PT_GS*/
.endm
.macro SET_KERNEL_GS reg
	movl $(__KERNEL_STACK_CANARY), \reg
	movl \reg, %gs
.endm

#endif	/* CONFIG_X86_32_LAZY_GS */

.macro SAVE_ALL
	cld
	PUSH_GS
	pushl_cfi %fs
	/*CFI_REL_OFFSET fs, 0;*/
	pushl_cfi %es
	/*CFI_REL_OFFSET es, 0;*/
	pushl_cfi %ds
	/*CFI_REL_OFFSET ds, 0;*/
	pushl_cfi %eax
	CFI_REL_OFFSET eax, 0
	pushl_cfi %ebp
	CFI_REL_OFFSET ebp, 0
	pushl_cfi %edi
	CFI_REL_OFFSET edi, 0
	pushl_cfi %esi
	CFI_REL_OFFSET esi, 0
	pushl_cfi %edx
	CFI_REL_OFFSET edx, 0
	pushl_cfi %ecx
	CFI_REL_OFFSET ecx, 0
	pushl_cfi %ebx
	CFI_REL_OFFSET ebx, 0
	movl $(__USER_DS), %edx
	movl %edx, %ds
	movl %edx, %es
	movl $(__KERNEL_PERCPU), %edx
	movl %edx, %fs
	SET_KERNEL_GS %edx
.endm

.macro RESTORE_INT_REGS
	popl_cfi %ebx
	CFI_RESTORE ebx
	popl_cfi %ecx
	CFI_RESTORE ecx
	popl_cfi %edx
	CFI_RESTORE edx
	popl_cfi %esi
	CFI_RESTORE esi
	popl_cfi %edi
	CFI_RESTORE edi
	popl_cfi %ebp
	CFI_RESTORE ebp
	popl_cfi %eax
	CFI_RESTORE eax
.endm

.macro RESTORE_REGS pop=0
	RESTORE_INT_REGS
1:	popl_cfi %ds
	/*CFI_RESTORE ds;*/
2:	popl_cfi %es
	/*CFI_RESTORE es;*/
3:	popl_cfi %fs
	/*CFI_RESTORE fs;*/
	POP_GS \pop
.pushsection .fixup, "ax"
4:	movl $0, (%esp)
	jmp 1b
5:	movl $0, (%esp)
	jmp 2b
6:	movl $0, (%esp)
	jmp 3b
.popsection
	_ASM_EXTABLE(1b,4b)
	_ASM_EXTABLE(2b,5b)
	_ASM_EXTABLE(3b,6b)
	POP_GS_EX
.endm

.macro RING0_INT_FRAME
	CFI_STARTPROC simple
	CFI_SIGNAL_FRAME
	CFI_DEF_CFA esp, 3*4
	/*CFI_OFFSET cs, -2*4;*/
	CFI_OFFSET eip, -3*4
.endm

.macro RING0_EC_FRAME
	CFI_STARTPROC simple
	CFI_SIGNAL_FRAME
	CFI_DEF_CFA esp, 4*4
	/*CFI_OFFSET cs, -2*4;*/
	CFI_OFFSET eip, -3*4
.endm

.macro RING0_PTREGS_FRAME
	CFI_STARTPROC simple
	CFI_SIGNAL_FRAME
	CFI_DEF_CFA esp, PT_OLDESP-PT_EBX
	/*CFI_OFFSET cs, PT_CS-PT_OLDESP;*/
	CFI_OFFSET eip, PT_EIP-PT_OLDESP
	/*CFI_OFFSET es, PT_ES-PT_OLDESP;*/
	/*CFI_OFFSET ds, PT_DS-PT_OLDESP;*/
	CFI_OFFSET eax, PT_EAX-PT_OLDESP
	CFI_OFFSET ebp, PT_EBP-PT_OLDESP
	CFI_OFFSET edi, PT_EDI-PT_OLDESP
	CFI_OFFSET esi, PT_ESI-PT_OLDESP
	CFI_OFFSET edx, PT_EDX-PT_OLDESP
	CFI_OFFSET ecx, PT_ECX-PT_OLDESP
	CFI_OFFSET ebx, PT_EBX-PT_OLDESP
.endm

ENTRY(ret_from_fork)
	CFI_STARTPROC
	pushl_cfi %eax
	call schedule_tail
	GET_THREAD_INFO(%ebp)
	popl_cfi %eax
	pushl_cfi $0x0202		# Reset kernel eflags
	popfl_cfi
	jmp syscall_exit
	CFI_ENDPROC
END(ret_from_fork)

ENTRY(ret_from_kernel_thread)
	CFI_STARTPROC
	pushl_cfi %eax
	call schedule_tail
	GET_THREAD_INFO(%ebp)
	popl_cfi %eax
	pushl_cfi $0x0202		# Reset kernel eflags
	popfl_cfi
	movl PT_EBP(%esp),%eax
	call *PT_EBX(%esp)
	movl $0,PT_EAX(%esp)
	jmp syscall_exit
	CFI_ENDPROC
ENDPROC(ret_from_kernel_thread)

/*
 * Return to user mode is not as complex as all this looks,
 * but we want the default path for a system call return to
 * go as quickly as possible which is why some of this is
 * less clear than it otherwise should be.
 */

	# userspace resumption stub bypassing syscall exit tracing
	ALIGN
	RING0_PTREGS_FRAME
ret_from_exception:
	preempt_stop(CLBR_ANY)
ret_from_intr:
	GET_THREAD_INFO(%ebp)
#ifdef CONFIG_VM86
	movl PT_EFLAGS(%esp), %eax	# mix EFLAGS and CS
	movb PT_CS(%esp), %al
	andl $(X86_EFLAGS_VM | SEGMENT_RPL_MASK), %eax
#else
	/*
	 * We can be coming here from child spawned by kernel_thread().
	 */
	movl PT_CS(%esp), %eax
	andl $SEGMENT_RPL_MASK, %eax
#endif
	cmpl $USER_RPL, %eax
	jb resume_kernel		# not returning to v8086 or userspace

ENTRY(resume_userspace)
	LOCKDEP_SYS_EXIT
	DISABLE_INTERRUPTS(CLBR_ANY)	# make sure we don't miss an interrupt
					# setting need_resched or sigpending
					# between sampling and the iret
	TRACE_IRQS_OFF
	movl TI_flags(%ebp), %ecx
	andl $_TIF_WORK_MASK, %ecx	# is there any work to be done on
					# int/exception return?
	jne work_pending
	jmp restore_all
END(ret_from_exception)

#ifdef CONFIG_PREEMPT
ENTRY(resume_kernel)
	DISABLE_INTERRUPTS(CLBR_ANY)
need_resched:
	cmpl $0,PER_CPU_VAR(__preempt_count)
	jnz restore_all
	testl $X86_EFLAGS_IF,PT_EFLAGS(%esp)	# interrupts off (exception path) ?
	jz restore_all
	call preempt_schedule_irq
	jmp need_resched
END(resume_kernel)
#endif
	CFI_ENDPROC

/* SYSENTER_RETURN points to after the "sysenter" instruction in
   the vsyscall page.  See vsyscall-sysentry.S, which defines the symbol.  */

	# sysenter call handler stub
ENTRY(ia32_sysenter_target)
	CFI_STARTPROC simple
	CFI_SIGNAL_FRAME
	CFI_DEF_CFA esp, 0
	CFI_REGISTER esp, ebp
	movl TSS_sysenter_sp0(%esp),%esp
sysenter_past_esp:
	/*
	 * Interrupts are disabled here, but we can't trace it until
	 * enough kernel state to call TRACE_IRQS_OFF can be called - but
	 * we immediately enable interrupts at that point anyway.
	 */
	pushl_cfi $__USER_DS
	/*CFI_REL_OFFSET ss, 0*/
	pushl_cfi %ebp
	CFI_REL_OFFSET esp, 0
	pushfl_cfi
	orl $X86_EFLAGS_IF, (%esp)
	pushl_cfi $__USER_CS
	/*CFI_REL_OFFSET cs, 0*/
	/*
	 * Push current_thread_info()->sysenter_return to the stack.
	 * A tiny bit of offset fixup is necessary - 4*4 means the 4 words
	 * pushed above; +8 corresponds to copy_thread's esp0 setting.
	 */
	pushl_cfi ((TI_sysenter_return)-THREAD_SIZE+8+4*4)(%esp)
	CFI_REL_OFFSET eip, 0

	pushl_cfi %eax
	SAVE_ALL
	ENABLE_INTERRUPTS(CLBR_NONE)

/*
 * Load the potential sixth argument from user stack.
 * Careful about security.
 */
	cmpl $__PAGE_OFFSET-3,%ebp
	jae syscall_fault
	ASM_STAC
1:	movl (%ebp),%ebp
	ASM_CLAC
	movl %ebp,PT_EBP(%esp)
	_ASM_EXTABLE(1b,syscall_fault)

	GET_THREAD_INFO(%ebp)

	testl $_TIF_WORK_SYSCALL_ENTRY,TI_flags(%ebp)
	jnz sysenter_audit
sysenter_do_call:
	cmpl $(NR_syscalls), %eax
	jae sysenter_badsys
	call *sys_call_table(,%eax,4)
sysenter_after_call:
	movl %eax,PT_EAX(%esp)
	LOCKDEP_SYS_EXIT
	DISABLE_INTERRUPTS(CLBR_ANY)
	TRACE_IRQS_OFF
	movl TI_flags(%ebp), %ecx
	testl $_TIF_ALLWORK_MASK, %ecx
	jne sysexit_audit
sysenter_exit:
/* if something modifies registers it must also disable sysexit */
	movl PT_EIP(%esp), %edx
	movl PT_OLDESP(%esp), %ecx
	xorl %ebp,%ebp
	TRACE_IRQS_ON
1:	mov  PT_FS(%esp), %fs
	PTGS_TO_GS
	ENABLE_INTERRUPTS_SYSEXIT

#ifdef CONFIG_AUDITSYSCALL
sysenter_audit:
	testl $(_TIF_WORK_SYSCALL_ENTRY & ~_TIF_SYSCALL_AUDIT),TI_flags(%ebp)
	jnz syscall_trace_entry
	/* movl PT_EAX(%esp), %eax	already set, syscall number: 1st arg to audit */
	movl PT_EBX(%esp), %edx		/* ebx/a0: 2nd arg to audit */
	/* movl PT_ECX(%esp), %ecx	already set, a1: 3nd arg to audit */
	pushl_cfi PT_ESI(%esp)		/* a3: 5th arg */
	pushl_cfi PT_EDX+4(%esp)	/* a2: 4th arg */
	call __audit_syscall_entry
	popl_cfi %ecx /* get that remapped edx off the stack */
	popl_cfi %ecx /* get that remapped esi off the stack */
	movl PT_EAX(%esp),%eax		/* reload syscall number */
	jmp sysenter_do_call

sysexit_audit:
	testl $(_TIF_ALLWORK_MASK & ~_TIF_SYSCALL_AUDIT), %ecx
	jne syscall_exit_work
	TRACE_IRQS_ON
	ENABLE_INTERRUPTS(CLBR_ANY)
	movl %eax,%edx		/* second arg, syscall return value */
	cmpl $-MAX_ERRNO,%eax	/* is it an error ? */
	setbe %al		/* 1 if so, 0 if not */
	movzbl %al,%eax		/* zero-extend that */
	call __audit_syscall_exit
	DISABLE_INTERRUPTS(CLBR_ANY)
	TRACE_IRQS_OFF
	movl TI_flags(%ebp), %ecx
	testl $(_TIF_ALLWORK_MASK & ~_TIF_SYSCALL_AUDIT), %ecx
	jne syscall_exit_work
	movl PT_EAX(%esp),%eax	/* reload syscall return value */
	jmp sysenter_exit
#endif

	CFI_ENDPROC
.pushsection .fixup,"ax"
2:	movl $0,PT_FS(%esp)
	jmp 1b
.popsection
	_ASM_EXTABLE(1b,2b)
	PTGS_TO_GS_EX
ENDPROC(ia32_sysenter_target)

	# system call handler stub
ENTRY(system_call)
	RING0_INT_FRAME			# can't unwind into user space anyway
	ASM_CLAC
	pushl_cfi %eax			# save orig_eax
	SAVE_ALL
	GET_THREAD_INFO(%ebp)
					# system call tracing in operation / emulation
	testl $_TIF_WORK_SYSCALL_ENTRY,TI_flags(%ebp)
	jnz syscall_trace_entry
	cmpl $(NR_syscalls), %eax
	jae syscall_badsys
syscall_call:
	call *sys_call_table(,%eax,4)
syscall_after_call:
	movl %eax,PT_EAX(%esp)		# store the return value
syscall_exit:
	LOCKDEP_SYS_EXIT
	DISABLE_INTERRUPTS(CLBR_ANY)	# make sure we don't miss an interrupt
					# setting need_resched or sigpending
					# between sampling and the iret
	TRACE_IRQS_OFF
	movl TI_flags(%ebp), %ecx
	testl $_TIF_ALLWORK_MASK, %ecx	# current->work
	jne syscall_exit_work

restore_all:
	TRACE_IRQS_IRET
restore_all_notrace:
#ifdef CONFIG_X86_ESPFIX32
	movl PT_EFLAGS(%esp), %eax	# mix EFLAGS, SS and CS
	# Warning: PT_OLDSS(%esp) contains the wrong/random values if we
	# are returning to the kernel.
	# See comments in process.c:copy_thread() for details.
	movb PT_OLDSS(%esp), %ah
	movb PT_CS(%esp), %al
	andl $(X86_EFLAGS_VM | (SEGMENT_TI_MASK << 8) | SEGMENT_RPL_MASK), %eax
	cmpl $((SEGMENT_LDT << 8) | USER_RPL), %eax
	CFI_REMEMBER_STATE
	je ldt_ss			# returning to user-space with LDT SS
#endif
restore_nocheck:
	RESTORE_REGS 4			# skip orig_eax/error_code
irq_return:
	INTERRUPT_RETURN
.section .fixup,"ax"
ENTRY(iret_exc)
	pushl $0			# no error code
	pushl $do_iret_error
	jmp error_code
.previous
	_ASM_EXTABLE(irq_return,iret_exc)

#ifdef CONFIG_X86_ESPFIX32
	CFI_RESTORE_STATE
ldt_ss:
#ifdef CONFIG_PARAVIRT
	/*
	 * The kernel can't run on a non-flat stack if paravirt mode
	 * is active.  Rather than try to fixup the high bits of
	 * ESP, bypass this code entirely.  This may break DOSemu
	 * and/or Wine support in a paravirt VM, although the option
	 * is still available to implement the setting of the high
	 * 16-bits in the INTERRUPT_RETURN paravirt-op.
	 */
	cmpl $0, pv_info+PARAVIRT_enabled
	jne restore_nocheck
#endif

/*
 * Setup and switch to ESPFIX stack
 *
 * We're returning to userspace with a 16 bit stack. The CPU will not
 * restore the high word of ESP for us on executing iret... This is an
 * "official" bug of all the x86-compatible CPUs, which we can work
 * around to make dosemu and wine happy. We do this by preloading the
 * high word of ESP with the high word of the userspace ESP while
 * compensating for the offset by changing to the ESPFIX segment with
 * a base address that matches for the difference.
 */
#define GDT_ESPFIX_SS PER_CPU_VAR(gdt_page) + (GDT_ENTRY_ESPFIX_SS * 8)
	mov %esp, %edx			/* load kernel esp */
	mov PT_OLDESP(%esp), %eax	/* load userspace esp */
	mov %dx, %ax			/* eax: new kernel esp */
	sub %eax, %edx			/* offset (low word is 0) */
	shr $16, %edx
	mov %dl, GDT_ESPFIX_SS + 4 /* bits 16..23 */
	mov %dh, GDT_ESPFIX_SS + 7 /* bits 24..31 */
	pushl_cfi $__ESPFIX_SS
	pushl_cfi %eax			/* new kernel esp */
	/* Disable interrupts, but do not irqtrace this section: we
	 * will soon execute iret and the tracer was already set to
	 * the irqstate after the iret */
	DISABLE_INTERRUPTS(CLBR_EAX)
	lss (%esp), %esp		/* switch to espfix segment */
	CFI_ADJUST_CFA_OFFSET -8
	jmp restore_nocheck
#endif
	CFI_ENDPROC
ENDPROC(system_call)

	# perform work that needs to be done immediately before resumption
	ALIGN
	RING0_PTREGS_FRAME		# can't unwind into user space anyway
work_pending:
	testb $_TIF_NEED_RESCHED, %cl
	jz work_notifysig
work_resched:
	call schedule
	LOCKDEP_SYS_EXIT
	DISABLE_INTERRUPTS(CLBR_ANY)	# make sure we don't miss an interrupt
					# setting need_resched or sigpending
					# between sampling and the iret
	TRACE_IRQS_OFF
	movl TI_flags(%ebp), %ecx
	andl $_TIF_WORK_MASK, %ecx	# is there any work to be done other
					# than syscall tracing?
	jz restore_all
	testb $_TIF_NEED_RESCHED, %cl
	jnz work_resched

work_notifysig:				# deal with pending signals and
					# notify-resume requests
#ifdef CONFIG_VM86
	testl $X86_EFLAGS_VM, PT_EFLAGS(%esp)
	movl %esp, %eax
	jne work_notifysig_v86		# returning to kernel-space or
					# vm86-space
1:
#else
	movl %esp, %eax
#endif
	TRACE_IRQS_ON
	ENABLE_INTERRUPTS(CLBR_NONE)
	movb PT_CS(%esp), %bl
	andb $SEGMENT_RPL_MASK, %bl
	cmpb $USER_RPL, %bl
	jb resume_kernel
	xorl %edx, %edx
	call do_notify_resume
	jmp resume_userspace

#ifdef CONFIG_VM86
	ALIGN
work_notifysig_v86:
	pushl_cfi %ecx			# save ti_flags for do_notify_resume
	call save_v86_state		# %eax contains pt_regs pointer
	popl_cfi %ecx
	movl %eax, %esp
	jmp 1b
#endif
END(work_pending)

	# perform syscall exit tracing
	ALIGN
syscall_trace_entry:
	movl $-ENOSYS,PT_EAX(%esp)
	movl %esp, %eax
	call syscall_trace_enter
	/* What it returned is what we'll actually use.  */
	cmpl $(NR_syscalls), %eax
	jnae syscall_call
	jmp syscall_exit
END(syscall_trace_entry)

	# perform syscall exit tracing
	ALIGN
syscall_exit_work:
	testl $_TIF_WORK_SYSCALL_EXIT, %ecx
	jz work_pending
	TRACE_IRQS_ON
	ENABLE_INTERRUPTS(CLBR_ANY)	# could let syscall_trace_leave() call
					# schedule() instead
	movl %esp, %eax
	call syscall_trace_leave
	jmp resume_userspace
END(syscall_exit_work)
	CFI_ENDPROC

	RING0_INT_FRAME			# can't unwind into user space anyway
syscall_fault:
	ASM_CLAC
	GET_THREAD_INFO(%ebp)
	movl $-EFAULT,PT_EAX(%esp)
	jmp resume_userspace
END(syscall_fault)

syscall_badsys:
	movl $-ENOSYS,%eax
	jmp syscall_after_call
END(syscall_badsys)

sysenter_badsys:
	movl $-ENOSYS,%eax
	jmp sysenter_after_call
END(sysenter_badsys)
	CFI_ENDPROC

.macro FIXUP_ESPFIX_STACK
/*
 * Switch back for ESPFIX stack to the normal zerobased stack
 *
 * We can't call C functions using the ESPFIX stack. This code reads
 * the high word of the segment base from the GDT and swiches to the
 * normal stack and adjusts ESP with the matching offset.
 */
#ifdef CONFIG_X86_ESPFIX32
	/* fixup the stack */
	mov GDT_ESPFIX_SS + 4, %al /* bits 16..23 */
	mov GDT_ESPFIX_SS + 7, %ah /* bits 24..31 */
	shl $16, %eax
	addl %esp, %eax			/* the adjusted stack pointer */
	pushl_cfi $__KERNEL_DS
	pushl_cfi %eax
	lss (%esp), %esp		/* switch to the normal stack segment */
	CFI_ADJUST_CFA_OFFSET -8
#endif
.endm
.macro UNWIND_ESPFIX_STACK
#ifdef CONFIG_X86_ESPFIX32
	movl %ss, %eax
	/* see if on espfix stack */
	cmpw $__ESPFIX_SS, %ax
	jne 27f
	movl $__KERNEL_DS, %eax
	movl %eax, %ds
	movl %eax, %es
	/* switch to normal stack */
	FIXUP_ESPFIX_STACK
27:
#endif
.endm

/*
 * Build the entry stubs and pointer table with some assembler magic.
 * We pack 7 stubs into a single 32-byte chunk, which will fit in a
 * single cache line on all modern x86 implementations.
 */
.section .init.rodata,"a"
ENTRY(interrupt)
.section .entry.text, "ax"
	.p2align 5
	.p2align CONFIG_X86_L1_CACHE_SHIFT
ENTRY(irq_entries_start)
	RING0_INT_FRAME
vector=FIRST_EXTERNAL_VECTOR
.rept (NR_VECTORS-FIRST_EXTERNAL_VECTOR+6)/7
	.balign 32
  .rept	7
    .if vector < NR_VECTORS
      .if vector <> FIRST_EXTERNAL_VECTOR
	CFI_ADJUST_CFA_OFFSET -4
      .endif
1:	pushl_cfi $(~vector+0x80)	/* Note: always in signed byte range */
      .if ((vector-FIRST_EXTERNAL_VECTOR)%7) <> 6
	jmp 2f
      .endif
      .previous
	.long 1b
      .section .entry.text, "ax"
vector=vector+1
    .endif
  .endr
2:	jmp common_interrupt
.endr
END(irq_entries_start)

.previous
END(interrupt)
.previous

/*
 * the CPU automatically disables interrupts when executing an IRQ vector,
 * so IRQ-flags tracing has to follow that:
 */
	.p2align CONFIG_X86_L1_CACHE_SHIFT
common_interrupt:
	ASM_CLAC
	addl $-0x80,(%esp)	/* Adjust vector into the [-256,-1] range */
	SAVE_ALL
	TRACE_IRQS_OFF
	movl %esp,%eax
	call do_IRQ
	jmp ret_from_intr
ENDPROC(common_interrupt)
	CFI_ENDPROC

#define BUILD_INTERRUPT3(name, nr, fn)	\
ENTRY(name)				\
	RING0_INT_FRAME;		\
	ASM_CLAC;			\
	pushl_cfi $~(nr);		\
	SAVE_ALL;			\
	TRACE_IRQS_OFF			\
	movl %esp,%eax;			\
	call fn;			\
	jmp ret_from_intr;		\
	CFI_ENDPROC;			\
ENDPROC(name)


#ifdef CONFIG_TRACING
#define TRACE_BUILD_INTERRUPT(name, nr)		\
	BUILD_INTERRUPT3(trace_##name, nr, smp_trace_##name)
#else
#define TRACE_BUILD_INTERRUPT(name, nr)
#endif

#define BUILD_INTERRUPT(name, nr)		\
	BUILD_INTERRUPT3(name, nr, smp_##name);	\
	TRACE_BUILD_INTERRUPT(name, nr)

/* The include is where all of the SMP etc. interrupts come from */
#include <asm/entry_arch.h>

ENTRY(coprocessor_error)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
	pushl_cfi $do_coprocessor_error
	jmp error_code
	CFI_ENDPROC
END(coprocessor_error)

ENTRY(simd_coprocessor_error)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
#ifdef CONFIG_X86_INVD_BUG
	/* AMD 486 bug: invd from userspace calls exception 19 instead of #GP */
661:	pushl_cfi $do_general_protection
662:
.section .altinstructions,"a"
	altinstruction_entry 661b, 663f, X86_FEATURE_XMM, 662b-661b, 664f-663f
.previous
.section .altinstr_replacement,"ax"
663:	pushl $do_simd_coprocessor_error
664:
.previous
#else
	pushl_cfi $do_simd_coprocessor_error
#endif
	jmp error_code
	CFI_ENDPROC
END(simd_coprocessor_error)

ENTRY(device_not_available)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $-1			# mark this as an int
	pushl_cfi $do_device_not_available
	jmp error_code
	CFI_ENDPROC
END(device_not_available)

#ifdef CONFIG_PARAVIRT
ENTRY(native_iret)
	iret
	_ASM_EXTABLE(native_iret, iret_exc)
END(native_iret)

ENTRY(native_irq_enable_sysexit)
	sti
	sysexit
END(native_irq_enable_sysexit)
#endif

ENTRY(overflow)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
	pushl_cfi $do_overflow
	jmp error_code
	CFI_ENDPROC
END(overflow)

ENTRY(bounds)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
	pushl_cfi $do_bounds
	jmp error_code
	CFI_ENDPROC
END(bounds)

ENTRY(invalid_op)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
	pushl_cfi $do_invalid_op
	jmp error_code
	CFI_ENDPROC
END(invalid_op)

ENTRY(coprocessor_segment_overrun)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
	pushl_cfi $do_coprocessor_segment_overrun
	jmp error_code
	CFI_ENDPROC
END(coprocessor_segment_overrun)

ENTRY(invalid_TSS)
	RING0_EC_FRAME
	ASM_CLAC
	pushl_cfi $do_invalid_TSS
	jmp error_code
	CFI_ENDPROC
END(invalid_TSS)

ENTRY(segment_not_present)
	RING0_EC_FRAME
	ASM_CLAC
	pushl_cfi $do_segment_not_present
	jmp error_code
	CFI_ENDPROC
END(segment_not_present)

ENTRY(stack_segment)
	RING0_EC_FRAME
	ASM_CLAC
	pushl_cfi $do_stack_segment
	jmp error_code
	CFI_ENDPROC
END(stack_segment)

ENTRY(alignment_check)
	RING0_EC_FRAME
	ASM_CLAC
	pushl_cfi $do_alignment_check
	jmp error_code
	CFI_ENDPROC
END(alignment_check)

ENTRY(divide_error)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0			# no error code
	pushl_cfi $do_divide_error
	jmp error_code
	CFI_ENDPROC
END(divide_error)

#ifdef CONFIG_X86_MCE
ENTRY(machine_check)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
	pushl_cfi machine_check_vector
	jmp error_code
	CFI_ENDPROC
END(machine_check)
#endif

ENTRY(spurious_interrupt_bug)
	RING0_INT_FRAME
	ASM_CLAC
	pushl_cfi $0
	pushl_cfi $do_spurious_interrupt_bug
	jmp error_code
	CFI_ENDPROC
END(spurious_interrupt_bug)

#ifdef CONFIG_XEN
/* Xen doesn't set %esp to be precisely what the normal sysenter
   entrypoint expects, so fix it up before using the normal path. */
ENTRY(xen_sysenter_target)
	RING0_INT_FRAME
	addl $5*4, %esp		/* remove xen-provided frame */
	CFI_ADJUST_CFA_OFFSET -5*4
	jmp sysenter_past_esp
	CFI_ENDPROC

ENTRY(xen_hypervisor_callback)
	CFI_STARTPROC
	pushl_cfi $-1 /* orig_ax = -1 => not a system call */
	SAVE_ALL
	TRACE_IRQS_OFF

	/* Check to see if we got the event in the critical
	   region in xen_iret_direct, after we've reenabled
	   events and checked for pending events.  This simulates
	   iret instruction's behaviour where it delivers a
	   pending interrupt when enabling interrupts. */
	movl PT_EIP(%esp),%eax
	cmpl $xen_iret_start_crit,%eax
	jb   1f
	cmpl $xen_iret_end_crit,%eax
	jae  1f

	jmp  xen_iret_crit_fixup

ENTRY(xen_do_upcall)
1:	mov %esp, %eax
	call xen_evtchn_do_upcall
	jmp  ret_from_intr
	CFI_ENDPROC
ENDPROC(xen_hypervisor_callback)

# Hypervisor uses this for application faults while it executes.
# We get here for two reasons:
#  1. Fault while reloading DS, ES, FS or GS
#  2. Fault while executing IRET
# Category 1 we fix up by reattempting the load, and zeroing the segment
# register if the load fails.
# Category 2 we fix up by jumping to do_iret_error. We cannot use the
# normal Linux return path in this case because if we use the IRET hypercall
# to pop the stack frame we end up in an infinite loop of failsafe callbacks.
# We distinguish between categories by maintaining a status value in EAX.
ENTRY(xen_failsafe_callback)
	CFI_STARTPROC
	pushl_cfi %eax
	movl $1,%eax
1:	mov 4(%esp),%ds
2:	mov 8(%esp),%es
3:	mov 12(%esp),%fs
4:	mov 16(%esp),%gs
	/* EAX == 0 => Category 1 (Bad segment)
	   EAX != 0 => Category 2 (Bad IRET) */
	testl %eax,%eax
	popl_cfi %eax
	lea 16(%esp),%esp
	CFI_ADJUST_CFA_OFFSET -16
	jz 5f
	jmp iret_exc
5:	pushl_cfi $-1 /* orig_ax = -1 => not a system call */
	SAVE_ALL
	jmp ret_from_exception
	CFI_ENDPROC

.section .fixup,"ax"
6:	xorl %eax,%eax
	movl %eax,4(%esp)
	jmp 1b
7:	xorl %eax,%eax
	movl %eax,8(%esp)
	jmp 2b
8:	xorl %eax,%eax
	movl %eax,12(%esp)
	jmp 3b
9:	xorl %eax,%eax
	movl %eax,16(%esp)
	jmp 4b
.previous
	_ASM_EXTABLE(1b,6b)
	_ASM_EXTABLE(2b,7b)
	_ASM_EXTABLE(3b,8b)
	_ASM_EXTABLE(4b,9b)
ENDPROC(xen_failsafe_callback)

BUILD_INTERRUPT3(xen_hvm_callback_vector, HYPERVISOR_CALLBACK_VECTOR,
		xen_evtchn_do_upcall)

#endif	/* CONFIG_XEN */

#if IS_ENABLED(CONFIG_HYPERV)

BUILD_INTERRUPT3(hyperv_callback_vector, HYPERVISOR_CALLBACK_VECTOR,
	hyperv_vector_handler)

#endif /* CONFIG_HYPERV */

#ifdef CONFIG_FUNCTION_TRACER
#ifdef CONFIG_DYNAMIC_FTRACE

ENTRY(mcount)
	ret
END(mcount)

ENTRY(ftrace_caller)
	pushl %eax
	pushl %ecx
	pushl %edx
	pushl $0	/* Pass NULL as regs pointer */
	movl 4*4(%esp), %eax
	movl 0x4(%ebp), %edx
	movl function_trace_op, %ecx
	subl $MCOUNT_INSN_SIZE, %eax

.globl ftrace_call
ftrace_call:
	call ftrace_stub

	addl $4,%esp	/* skip NULL pointer */
	popl %edx
	popl %ecx
	popl %eax
ftrace_ret:
#ifdef CONFIG_FUNCTION_GRAPH_TRACER
.globl ftrace_graph_call
ftrace_graph_call:
	jmp ftrace_stub
#endif

.globl ftrace_stub
ftrace_stub:
	ret
END(ftrace_caller)

ENTRY(ftrace_regs_caller)
	pushf	/* push flags before compare (in cs location) */

	/*
	 * i386 does not save SS and ESP when coming from kernel.
	 * Instead, to get sp, &regs->sp is used (see ptrace.h).
	 * Unfortunately, that means eflags must be at the same location
	 * as the current return ip is. We move the return ip into the
	 * ip location, and move flags into the return ip location.
	 */
	pushl 4(%esp)	/* save return ip into ip slot */

	pushl $0	/* Load 0 into orig_ax */
	pushl %gs
	pushl %fs
	pushl %es
	pushl %ds
	pushl %eax
	pushl %ebp
	pushl %edi
	pushl %esi
	pushl %edx
	pushl %ecx
	pushl %ebx

	movl 13*4(%esp), %eax	/* Get the saved flags */
	movl %eax, 14*4(%esp)	/* Move saved flags into regs->flags location */
				/* clobbering return ip */
	movl $__KERNEL_CS,13*4(%esp)

	movl 12*4(%esp), %eax	/* Load ip (1st parameter) */
	subl $MCOUNT_INSN_SIZE, %eax	/* Adjust ip */
	movl 0x4(%ebp), %edx	/* Load parent ip (2nd parameter) */
	movl function_trace_op, %ecx /* Save ftrace_pos in 3rd parameter */
	pushl %esp		/* Save pt_regs as 4th parameter */

GLOBAL(ftrace_regs_call)
	call ftrace_stub

	addl $4, %esp		/* Skip pt_regs */
	movl 14*4(%esp), %eax	/* Move flags back into cs */
	movl %eax, 13*4(%esp)	/* Needed to keep addl from modifying flags */
	movl 12*4(%esp), %eax	/* Get return ip from regs->ip */
	movl %eax, 14*4(%esp)	/* Put return ip back for ret */

	popl %ebx
	popl %ecx
	popl %edx
	popl %esi
	popl %edi
	popl %ebp
	popl %eax
	popl %ds
	popl %es
	popl %fs
	popl %gs
	addl $8, %esp		/* Skip orig_ax and ip */
	popf			/* Pop flags at end (no addl to corrupt flags) */
	jmp ftrace_ret

	popf
	jmp ftrace_stub
#else /* ! CONFIG_DYNAMIC_FTRACE */

ENTRY(mcount)
	cmpl $__PAGE_OFFSET, %esp
	jb ftrace_stub		/* Paging not enabled yet? */

	cmpl $ftrace_stub, ftrace_trace_function
	jnz trace
#ifdef CONFIG_FUNCTION_GRAPH_TRACER
	cmpl $ftrace_stub, ftrace_graph_return
	jnz ftrace_graph_caller

	cmpl $ftrace_graph_entry_stub, ftrace_graph_entry
	jnz ftrace_graph_caller
#endif
.globl ftrace_stub
ftrace_stub:
	ret

	/* taken from glibc */
trace:
	pushl %eax
	pushl %ecx
	pushl %edx
	movl 0xc(%esp), %eax
	movl 0x4(%ebp), %edx
	subl $MCOUNT_INSN_SIZE, %eax

	call *ftrace_trace_function

	popl %edx
	popl %ecx
	popl %eax
	jmp ftrace_stub
END(mcount)
#endif /* CONFIG_DYNAMIC_FTRACE */
#endif /* CONFIG_FUNCTION_TRACER */

#ifdef CONFIG_FUNCTION_GRAPH_TRACER
ENTRY(ftrace_graph_caller)
	pushl %eax
	pushl %ecx
	pushl %edx
	movl 0xc(%esp), %edx
	lea 0x4(%ebp), %eax
	movl (%ebp), %ecx
	subl $MCOUNT_INSN_SIZE, %edx
	call prepare_ftrace_return
	popl %edx
	popl %ecx
	popl %eax
	ret
END(ftrace_graph_caller)

.globl return_to_handler
return_to_handler:
	pushl %eax
	pushl %edx
	movl %ebp, %eax
	call ftrace_return_to_handler
	movl %eax, %ecx
	popl %edx
	popl %eax
	jmp *%ecx
#endif

#ifdef CONFIG_TRACING
ENTRY(trace_page_fault)
	RING0_EC_FRAME
	ASM_CLAC
	pushl_cfi $trace_do_page_fault
	jmp error_code
	CFI_ENDPROC
END(trace_page_fault)
#endif

ENTRY(page_fault)
	RING0_EC_FRAME
	ASM_CLAC
	pushl_cfi $do_page_fault
	ALIGN
error_code:
	/* the function address is in %gs's slot on the stack */
	pushl_cfi %fs
	/*CFI_REL_OFFSET fs, 0*/
	pushl_cfi %es
	/*CFI_REL_OFFSET es, 0*/
	pushl_cfi %ds
	/*CFI_REL_OFFSET ds, 0*/
	pushl_cfi %eax
	CFI_REL_OFFSET eax, 0
	pushl_cfi %ebp
	CFI_REL_OFFSET ebp, 0
	pushl_cfi %edi
	CFI_REL_OFFSET edi, 0
	pushl_cfi %esi
	CFI_REL_OFFSET esi, 0
	pushl_cfi %edx
	CFI_REL_OFFSET edx, 0
	pushl_cfi %ecx
	CFI_REL_OFFSET ecx, 0
	pushl_cfi %ebx
	CFI_REL_OFFSET ebx, 0
	cld
	movl $(__KERNEL_PERCPU), %ecx
	movl %ecx, %fs
	UNWIND_ESPFIX_STACK
	GS_TO_REG %ecx
	movl PT_GS(%esp), %edi		# get the function address
	movl PT_ORIG_EAX(%esp), %edx	# get the error code
	movl $-1, PT_ORIG_EAX(%esp)	# no syscall to restart
	REG_TO_PTGS %ecx
	SET_KERNEL_GS %ecx
	movl $(__USER_DS), %ecx
	movl %ecx, %ds
	movl %ecx, %es
	TRACE_IRQS_OFF
	movl %esp,%eax			# pt_regs pointer
	call *%edi
	jmp ret_from_exception
	CFI_ENDPROC
END(page_fault)

/*
 * Debug traps and NMI can happen at the one SYSENTER instruction
 * that sets up the real kernel stack. Check here, since we can't
 * allow the wrong stack to be used.
 *
 * "TSS_sysenter_sp0+12" is because the NMI/debug handler will have
 * already pushed 3 words if it hits on the sysenter instruction:
 * eflags, cs and eip.
 *
 * We just load the right stack, and push the three (known) values
 * by hand onto the new stack - while updating the return eip past
 * the instruction that would have done it for sysenter.
 */
.macro FIX_STACK offset ok label
	cmpw $__KERNEL_CS, 4(%esp)
	jne \ok
\label:
	movl TSS_sysenter_sp0 + \offset(%esp), %esp
	CFI_DEF_CFA esp, 0
	CFI_UNDEFINED eip
	pushfl_cfi
	pushl_cfi $__KERNEL_CS
	pushl_cfi $sysenter_past_esp
	CFI_REL_OFFSET eip, 0
.endm

ENTRY(debug)
	RING0_INT_FRAME
	ASM_CLAC
	cmpl $ia32_sysenter_target,(%esp)
	jne debug_stack_correct
	FIX_STACK 12, debug_stack_correct, debug_esp_fix_insn
debug_stack_correct:
	pushl_cfi $-1			# mark this as an int
	SAVE_ALL
	TRACE_IRQS_OFF
	xorl %edx,%edx			# error code 0
	movl %esp,%eax			# pt_regs pointer
	call do_debug
	jmp ret_from_exception
	CFI_ENDPROC
END(debug)

/*
 * NMI is doubly nasty. It can happen _while_ we're handling
 * a debug fault, and the debug fault hasn't yet been able to
 * clear up the stack. So we first check whether we got an
 * NMI on the sysenter entry path, but after that we need to
 * check whether we got an NMI on the debug path where the debug
 * fault happened on the sysenter path.
 */
ENTRY(nmi)
	RING0_INT_FRAME
	ASM_CLAC
#ifdef CONFIG_X86_ESPFIX32
	pushl_cfi %eax
	movl %ss, %eax
	cmpw $__ESPFIX_SS, %ax
	popl_cfi %eax
	je nmi_espfix_stack
#endif
	cmpl $ia32_sysenter_target,(%esp)
	je nmi_stack_fixup
	pushl_cfi %eax
	movl %esp,%eax
	/* Do not access memory above the end of our stack page,
	 * it might not exist.
```
1286 */ 1287 .macro FIX_STACK offset ok label 1288 cmpw $__KERNEL_CS, 4(%esp) 1289 jne ok 1290 label: 1291 movl TSS_sysenter_sp0 + offset(%esp), %esp 1292 CFI_DEF_CFA esp, 0 1293 CFI_UNDEFINED eip 1294 pushfl_cfi 1295 pushl_cfi $__KERNEL_CS 1296 pushl_cfi $sysenter_past_esp 1297 CFI_REL_OFFSET eip, 0 1298 .endm 1299 1300 ENTRY(debug) 1301 RING0_INT_FRAME 1302 ASM_CLAC 1303 cmpl $ia32_sysenter_target,(%esp) 1304 jne debug_stack_correct 1305 FIX_STACK 12, debug_stack_correct, debug_esp_fix_insn 1306 debug_stack_correct: 1307 pushl_cfi $-1 # mark this as an int 1308 SAVE_ALL 1309 TRACE_IRQS_OFF 1310 xorl %edx,%edx # error code 0 1311 movl %esp,%eax # pt_regs pointer 1312 call do_debug 1313 jmp ret_from_exception 1314 CFI_ENDPROC 1315 END(debug) 1316 1317 /* 1318 * NMI is doubly nasty. It can happen _while_ we're handling 1319 * a debug fault, and the debug fault hasn't yet been able to 1320 * clear up the stack. So we first check whether we got an 1321 * NMI on the sysenter entry path, but after that we need to 1322 * check whether we got an NMI on the debug path where the debug 1323 * fault happened on the sysenter path. 1324 */ 1325 ENTRY(nmi) 1326 RING0_INT_FRAME 1327 ASM_CLAC 1328 #ifdef CONFIG_X86_ESPFIX32 1329 pushl_cfi %eax 1330 movl %ss, %eax 1331 cmpw $__ESPFIX_SS, %ax 1332 popl_cfi %eax 1333 je nmi_espfix_stack 1334 #endif 1335 cmpl $ia32_sysenter_target,(%esp) 1336 je nmi_stack_fixup 1337 pushl_cfi %eax 1338 movl %esp,%eax 1339 /* Do not access memory above the end of our stack page, 1340 * it might not exist. 
1341 */ 1342 andl $(THREAD_SIZE-1),%eax 1343 cmpl $(THREAD_SIZE-20),%eax 1344 popl_cfi %eax 1345 jae nmi_stack_correct 1346 cmpl $ia32_sysenter_target,12(%esp) 1347 je nmi_debug_stack_check 1348 nmi_stack_correct: 1349 /* We have a RING0_INT_FRAME here */ 1350 pushl_cfi %eax 1351 SAVE_ALL 1352 xorl %edx,%edx # zero error code 1353 movl %esp,%eax # pt_regs pointer 1354 call do_nmi 1355 jmp restore_all_notrace 1356 CFI_ENDPROC 1357 1358 nmi_stack_fixup: 1359 RING0_INT_FRAME 1360 FIX_STACK 12, nmi_stack_correct, 1 1361 jmp nmi_stack_correct 1362 1363 nmi_debug_stack_check: 1364 /* We have a RING0_INT_FRAME here */ 1365 cmpw $__KERNEL_CS,16(%esp) 1366 jne nmi_stack_correct 1367 cmpl $debug,(%esp) 1368 jb nmi_stack_correct 1369 cmpl $debug_esp_fix_insn,(%esp) 1370 ja nmi_stack_correct 1371 FIX_STACK 24, nmi_stack_correct, 1 1372 jmp nmi_stack_correct 1373 1374 #ifdef CONFIG_X86_ESPFIX32 1375 nmi_espfix_stack: 1376 /* We have a RING0_INT_FRAME here. 1377 * 1378 * create the pointer to lss back 1379 */ 1380 pushl_cfi %ss 1381 pushl_cfi %esp 1382 addl $4, (%esp) 1383 /* copy the iret frame of 12 bytes */ 1384 .rept 3 1385 pushl_cfi 16(%esp) 1386 .endr 1387 pushl_cfi %eax 1388 SAVE_ALL 1389 FIXUP_ESPFIX_STACK # %eax == %esp 1390 xorl %edx,%edx # zero error code 1391 call do_nmi 1392 RESTORE_REGS 1393 lss 12+4(%esp), %esp # back to espfix stack 1394 CFI_ADJUST_CFA_OFFSET -24 1395 jmp irq_return 1396 #endif 1397 CFI_ENDPROC 1398 END(nmi) 1399 1400 ENTRY(int3) 1401 RING0_INT_FRAME 1402 ASM_CLAC 1403 pushl_cfi $-1 # mark this as an int 1404 SAVE_ALL 1405 TRACE_IRQS_OFF 1406 xorl %edx,%edx # zero error code 1407 movl %esp,%eax # pt_regs pointer 1408 call do_int3 1409 jmp ret_from_exception 1410 CFI_ENDPROC 1411 END(int3) 1412 1413 ENTRY(general_protection) 1414 RING0_EC_FRAME 1415 pushl_cfi $do_general_protection 1416 jmp error_code 1417 CFI_ENDPROC 1418 END(general_protection) 1419 1420 #ifdef CONFIG_KVM_GUEST 1421 ENTRY(async_page_fault) 1422 RING0_EC_FRAME 1423 ASM_CLAC 1424 
pushl_cfi $do_async_page_fault 1425 jmp error_code 1426 CFI_ENDPROC 1427 END(async_page_fault) 1428 #endif

The system_call entry point in it reads as follows:

```asm
ENTRY(system_call)
	RING0_INT_FRAME			# can't unwind into user space anyway
	ASM_CLAC
	pushl_cfi %eax			# save orig_eax
	SAVE_ALL
	GET_THREAD_INFO(%ebp)
					# system call tracing in operation / emulation
	testl $_TIF_WORK_SYSCALL_ENTRY,TI_flags(%ebp)
	jnz syscall_trace_entry
	cmpl $(NR_syscalls), %eax
	jae syscall_badsys
syscall_call:
	call *sys_call_table(,%eax,4)
syscall_after_call:
	movl %eax,PT_EAX(%esp)		# store the return value
syscall_exit:
	LOCKDEP_SYS_EXIT
	DISABLE_INTERRUPTS(CLBR_ANY)	# make sure we don't miss an interrupt
					# setting need_resched or sigpending
					# between sampling and the iret
	TRACE_IRQS_OFF
	movl TI_flags(%ebp), %ecx
	testl $_TIF_ALLWORK_MASK, %ecx	# current->work
	jne syscall_exit_work

restore_all:
	TRACE_IRQS_IRET
restore_all_notrace:
#ifdef CONFIG_X86_ESPFIX32
	movl PT_EFLAGS(%esp), %eax	# mix EFLAGS, SS and CS
	# Warning: PT_OLDSS(%esp) contains the wrong/random values if we
	# are returning to the kernel.
	# See comments in process.c:copy_thread() for details.
	movb PT_OLDSS(%esp), %ah
	movb PT_CS(%esp), %al
	andl $(X86_EFLAGS_VM | (SEGMENT_TI_MASK << 8) | SEGMENT_RPL_MASK), %eax
	cmpl $((SEGMENT_LDT << 8) | USER_RPL), %eax
	CFI_REMEMBER_STATE
	je ldt_ss			# returning to user-space with LDT SS
#endif
restore_nocheck:
	RESTORE_REGS 4			# skip orig_eax/error_code
irq_return:
	INTERRUPT_RETURN
.section .fixup,"ax"
ENTRY(iret_exc)
	pushl $0			# no error code
	pushl $do_iret_error
	jmp error_code
.previous
	_ASM_EXTABLE(irq_return,iret_exc)

#ifdef CONFIG_X86_ESPFIX32
	CFI_RESTORE_STATE
ldt_ss:
#ifdef CONFIG_PARAVIRT
	/*
	 * The kernel can't run on a non-flat stack if paravirt mode
	 * is active. Rather than try to fixup the high bits of
	 * ESP, bypass this code entirely. This may break DOSemu
	 * and/or Wine support in a paravirt VM, although the option
	 * is still available to implement the setting of the high
	 * 16-bits in the INTERRUPT_RETURN paravirt-op.
	 */
	cmpl $0, pv_info+PARAVIRT_enabled
	jne restore_nocheck
#endif

/*
 * Setup and switch to ESPFIX stack
 *
 * We're returning to userspace with a 16 bit stack. The CPU will not
 * restore the high word of ESP for us on executing iret... This is an
 * "official" bug of all the x86-compatible CPUs, which we can work
 * around to make dosemu and wine happy. We do this by preloading the
 * high word of ESP with the high word of the userspace ESP while
 * compensating for the offset by changing to the ESPFIX segment with
 * a base address that matches for the difference.
 */
#define GDT_ESPFIX_SS PER_CPU_VAR(gdt_page) + (GDT_ENTRY_ESPFIX_SS * 8)
	mov %esp, %edx			/* load kernel esp */
	mov PT_OLDESP(%esp), %eax	/* load userspace esp */
	mov %dx, %ax			/* eax: new kernel esp */
	sub %eax, %edx			/* offset (low word is 0) */
	shr $16, %edx
	mov %dl, GDT_ESPFIX_SS + 4	/* bits 16..23 */
	mov %dh, GDT_ESPFIX_SS + 7	/* bits 24..31 */
	pushl_cfi $__ESPFIX_SS
	pushl_cfi %eax			/* new kernel esp */
	/* Disable interrupts, but do not irqtrace this section: we
	 * will soon execute iret and the tracer was already set to
	 * the irqstate after the iret */
	DISABLE_INTERRUPTS(CLBR_EAX)
	lss (%esp), %esp		/* switch to espfix segment */
	CFI_ADJUST_CFA_OFFSET -8
	jmp restore_nocheck
#endif
	CFI_ENDPROC
ENDPROC(system_call)
```

Trimmed down to its essential parts:

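```asm
ENTRY(system_call)
	pushl_cfi %eax			# save orig_eax (the syscall number)
	SAVE_ALL			# save the remaining registers (the context)
	GET_THREAD_INFO(%ebp)		# %ebp = thread_info of the current task
syscall_call:
	call *sys_call_table(,%eax,4)	# system call table: call sys_call_table[%eax]
syscall_after_call:
	movl %eax,PT_EAX(%esp)		# store the return value
syscall_exit:
	LOCKDEP_SYS_EXIT
	DISABLE_INTERRUPTS(CLBR_ANY)	# make sure we don't miss an interrupt
	movl TI_flags(%ebp), %ecx
	testl $_TIF_ALLWORK_MASK, %ecx	# pending work (need_resched, signals, ...)?
	jne syscall_exit_work		# yes: handle it (may call schedule()) before iret
restore_all:
	TRACE_IRQS_IRET
	RESTORE_REGS 4			# restore the saved context
irq_return:
	INTERRUPT_RETURN		# iret back to user space
ENDPROC(system_call)
```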

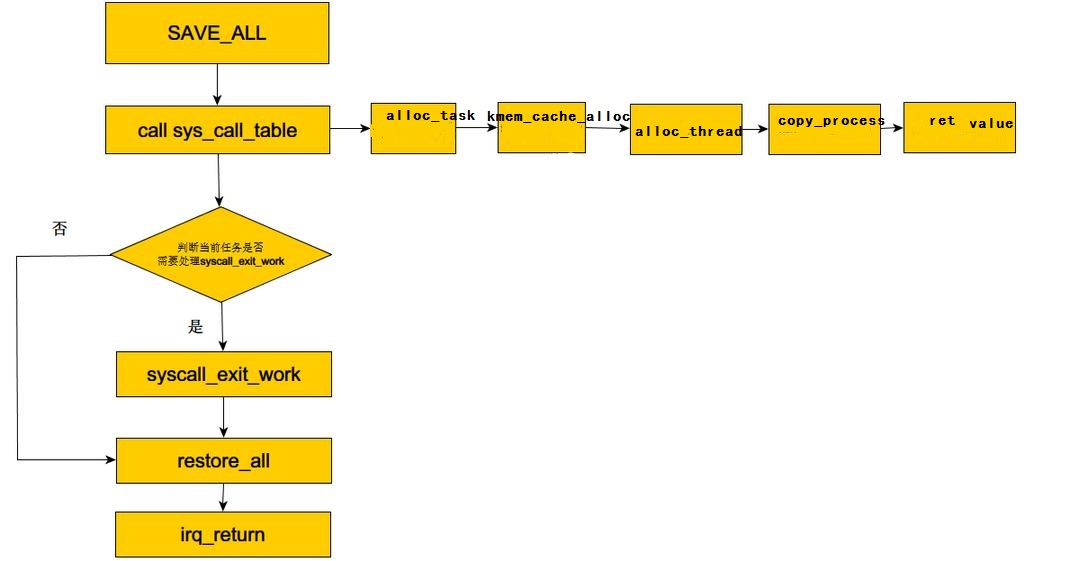

The figure is borrowed; I modified its system call part.

Flowchart